Understanding the Basics of Derivatives

Derivatives lie at the heart of calculus. They are essential in understanding how functions change and are crucial in fields like machine learning, where they help optimize models.

Introduction to Derivatives in Calculus

In calculus, derivatives measure how a function changes as its input changes. They provide insight into the rate of change, akin to velocity in physics.

The derivative of a function at a point is the slope of the tangent to the curve of the function at that point. Calculating derivatives involves various rules, such as the power rule, product rule, and chain rule. These rules simplify finding the derivative of more complex functions. Understanding these rules helps in solving real-world problems where change is a key factor.
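
As a minimal sketch of the tangent-slope idea, the derivative can be approximated by a central difference. The function names and the step size `h` below are illustrative choices, not part of any particular library.

```python
def numerical_derivative(f, x, h=1e-5):
    """Approximate f'(x) with a central difference of half-width h."""
    return (f(x + h) - f(x - h)) / (2 * h)


def square(x):
    return x ** 2


# The exact derivative of x^2 is 2x, so the slope at x = 3 should be near 6.
slope_at_3 = numerical_derivative(square, 3.0)
print(slope_at_3)
```

Shrinking `h` reduces the approximation error up to a point; very small values run into floating-point rounding.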

The Power of Differentiation in Machine Learning

Differentiation, the process of computing derivatives, underpins the search for optimal solutions. In machine learning, it is used to minimize error and improve model accuracy. Gradient descent, a technique that relies heavily on derivatives, uses the gradient of the error to update model parameters step by step.

By calculating gradients, models learn to adjust their predictions. This is especially important in neural networks, where differentiation enables the backpropagation algorithm to update weights and biases to minimize errors across training samples.

Calculus for Machine Learning: An Overview

Calculus is integral to machine learning, offering tools to solve many problems. Derivatives provide a way to understand how changes in input affect output, making them vital for tuning algorithms and models.

Functions common in machine learning, like activation functions, are often analyzed with derivatives to see how changes will influence outcomes.
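
One common case is the sigmoid activation, whose derivative has the closed form s(z)(1 - s(z)). The sketch below is illustrative and uses only the standard library. It shows that the derivative is largest near zero and shrinks for large positive or negative inputs.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_derivative(z):
    # d/dz sigmoid(z) = sigmoid(z) * (1 - sigmoid(z))
    s = sigmoid(z)
    return s * (1.0 - s)


for z in (-4.0, 0.0, 4.0):
    print(z, round(sigmoid(z), 4), round(sigmoid_derivative(z), 4))
```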

A strong grasp of calculus allows one to understand and create models that can predict or adjust based on new data. Differentiation appears throughout, from simple linear regression to complex deep learning, wherever a model is fitted by optimizing an objective.

Calculation Techniques for Derivatives

Calculating derivatives is key in differential calculus, which is crucial for understanding the behavior of functions. Key techniques such as the basic rules of differentiation and the chain rule are foundational tools in solving complex problems.

Rules of Differentiation

Rules of differentiation provide the framework for finding derivatives of various types of functions.

Some fundamental rules include the power rule, product rule, and quotient rule.

The power rule is used when differentiating functions of the form \( f(x) = x^n \). Its derivative is \( f'(x) = nx^{n-1} \).

The product rule applies to functions that are products of two functions: \( f(x) = u(x)v(x) \). The derivative is found by \( f'(x) = u'(x)v(x) + u(x)v'(x) \).

For the quotient rule, used with functions that are quotients, \( f(x) = \frac{u(x)}{v(x)} \), the derivative is \( f'(x) = \frac{u'(x)v(x) - u(x)v'(x)}{[v(x)]^2} \).

These rules are essential for simplifying the differentiation process and understanding function behavior.
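
The three rules can be sanity-checked numerically. The sketch below assumes the example functions u(x) = x^2 and v(x) = x + 1 (chosen here for illustration) and compares each rule's result against a central-difference estimate.

```python
def central_diff(f, x, h=1e-6):
    """Central-difference estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


x0 = 1.5

# Power rule: d/dx x^4 = 4x^3
power_exact = 4 * x0 ** 3
power_numeric = central_diff(lambda x: x ** 4, x0)

# Product rule with u = x^2, v = x + 1: f' = u'v + uv'
product_exact = (2 * x0) * (x0 + 1) + (x0 ** 2) * 1
product_numeric = central_diff(lambda x: x ** 2 * (x + 1), x0)

# Quotient rule with the same u and v: f' = (u'v - uv') / v^2
quotient_exact = ((2 * x0) * (x0 + 1) - (x0 ** 2) * 1) / (x0 + 1) ** 2
quotient_numeric = central_diff(lambda x: x ** 2 / (x + 1), x0)

print(power_exact, power_numeric)
print(product_exact, product_numeric)
print(quotient_exact, quotient_numeric)
```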

Applying the Chain Rule

The chain rule is a key technique used in differential calculus when differentiating composite functions. It is vital for dealing with nested functions.

When a function is composed as \( f(g(x)) \), the chain rule states that the derivative is \( f'(g(x)) \cdot g'(x) \). This means that the derivative of the outer function is evaluated at the inner function, then multiplied by the derivative of the inner function itself.

For instance, to differentiate \( h(x) = (3x^2 + 2)^5 \), apply the chain rule by finding the derivative of the outer function \( u^5 \), where \( u = 3x^2 + 2 \), then multiply it by the derivative of the inner function \( 3x^2 + 2 \).
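
Carried through step by step, with the substitution u for the inner function, the example works out as follows:

```latex
\begin{aligned}
h(x)  &= (3x^2 + 2)^5, \qquad u = 3x^2 + 2 \\
h'(x) &= \frac{d}{du}\,u^5 \cdot \frac{du}{dx} = 5u^4 \cdot 6x \\
      &= 30x\,(3x^2 + 2)^4
\end{aligned}
```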

Understanding the chain rule allows the differentiation of more complex structures often encountered in advanced calculus problems. It is also central to machine learning: deep learning frameworks rely on automatic differentiation, which applies the chain rule mechanically, as described in a survey on automatic differentiation.

Essential Linear Algebra for Machine Learning

Understanding linear algebra is crucial for machine learning. It helps describe data through matrices and vectors, optimize algorithms, and transform data for better learning models. Several key concepts such as matrices, vectors, eigenvalues, eigenvectors, and linear transformations are fundamental to the field.

Understanding Matrices and Vectors

Matrices and vectors are the building blocks of linear algebra.

A matrix is a rectangular array of numbers, symbols, or expressions, arranged in rows and columns. These are used to represent data or weights in machine learning models.

Vectors are special matrices, consisting of a single column or row, and can represent data points or weights.

The importance lies in their ability to perform operations efficiently.

Matrix operations include addition, subtraction, and multiplication. Vectors are often used to describe data in multi-dimensional space. The dimensions of a matrix (its shape) are its numbers of rows and columns, while its rank is the number of linearly independent rows or columns.

Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors play a crucial role in simplifying matrix operations.

An eigenvector of a matrix \( A \) is a non-zero vector \( v \) that remains in its span when the linear transformation is applied, only scaled by an eigenvalue \( \lambda \): \( Av = \lambda v \). Eigenvalues are essential for understanding properties of matrices and are found by solving the characteristic equation \( \det(A - \lambda I) = 0 \).

These concepts are vital in machine learning, as they help reduce dimensions in datasets, making computations more efficient. Principal component analysis (PCA), a common technique, uses the eigenvectors of the covariance matrix of the data to find the directions along which the data varies most.
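
For a 2x2 matrix the characteristic equation is a quadratic, so eigenvalues can be computed by hand. The sketch below assumes a small symmetric matrix (a hypothetical stand-in for a covariance matrix) and checks that multiplying each eigenvector by the matrix only rescales it.

```python
import math

# Hypothetical symmetric 2x2 matrix with a non-zero off-diagonal entry.
A = [[2.0, 1.0],
     [1.0, 2.0]]

trace = A[0][0] + A[1][1]
det = A[0][0] * A[1][1] - A[0][1] * A[1][0]

# Characteristic equation: lambda^2 - trace * lambda + det = 0
disc = math.sqrt(trace ** 2 - 4 * det)
eigenvalues = [(trace + disc) / 2, (trace - disc) / 2]


def eigenvector(lam):
    # When the off-diagonal entry b is non-zero, v = (b, lam - a)
    # solves (A - lam * I) v = 0. Normalize to unit length.
    a, b = A[0][0], A[0][1]
    v = (b, lam - a)
    norm = math.hypot(*v)
    return (v[0] / norm, v[1] / norm)


def matvec(M, v):
    return tuple(sum(M[i][j] * v[j] for j in range(2)) for i in range(2))


for lam in eigenvalues:
    v = eigenvector(lam)
    print(lam, v, matvec(A, v))  # A v equals lam * v
```

In practice a numerical library routine would be used instead of the closed form, which is shown here only to make the definition concrete.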

Linear Transformations and Their Importance

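Linear transformations refer to changes in data achieved through matrix multiplication. They allow for scaling, rotating, and shearing data. Shifting data (translation) additionally requires adding an offset vector, which makes the map affine rather than strictly linear. These operations are essential for tuning machine learning algorithms.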

These transformations maintain vector space structure, making them predictable and allowing for easier optimization.

Their importance in machine learning lies in representing data transformations efficiently. By applying linear transformations, it becomes possible to manipulate and adjust data to improve model performance. Understanding these transformations aids in better designing architectures such as neural networks, where data is transformed at each layer.
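
A small sketch of the idea, using plain Python lists as matrices: a rotation and a scaling are each a 2x2 matrix, and applying one after the other corresponds to multiplying the matrices. The helper names are illustrative.

```python
import math


def matvec(M, v):
    """Multiply matrix M by vector v."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]


def matmul(A, B):
    """Multiply matrix A by matrix B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


theta = math.pi / 2  # 90 degrees counter-clockwise
rotate_90 = [[math.cos(theta), -math.sin(theta)],
             [math.sin(theta), math.cos(theta)]]
scale_2x = [[2.0, 0.0],
            [0.0, 2.0]]

point = [1.0, 0.0]
rotated = matvec(rotate_90, point)       # approximately [0, 1]
combined = matmul(scale_2x, rotate_90)   # rotate first, then scale
transformed = matvec(combined, point)    # approximately [0, 2]
print(rotated, transformed)
```

A neural network layer follows the same pattern: a matrix multiplication, usually followed by an added bias and a nonlinearity.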

The Role of Multivariable Calculus

Multivariable calculus is crucial in machine learning, especially when dealing with functions that have multiple inputs or dimensions. This field is essential for understanding how changes in input variables affect outcomes, which aids prediction and optimization tasks.

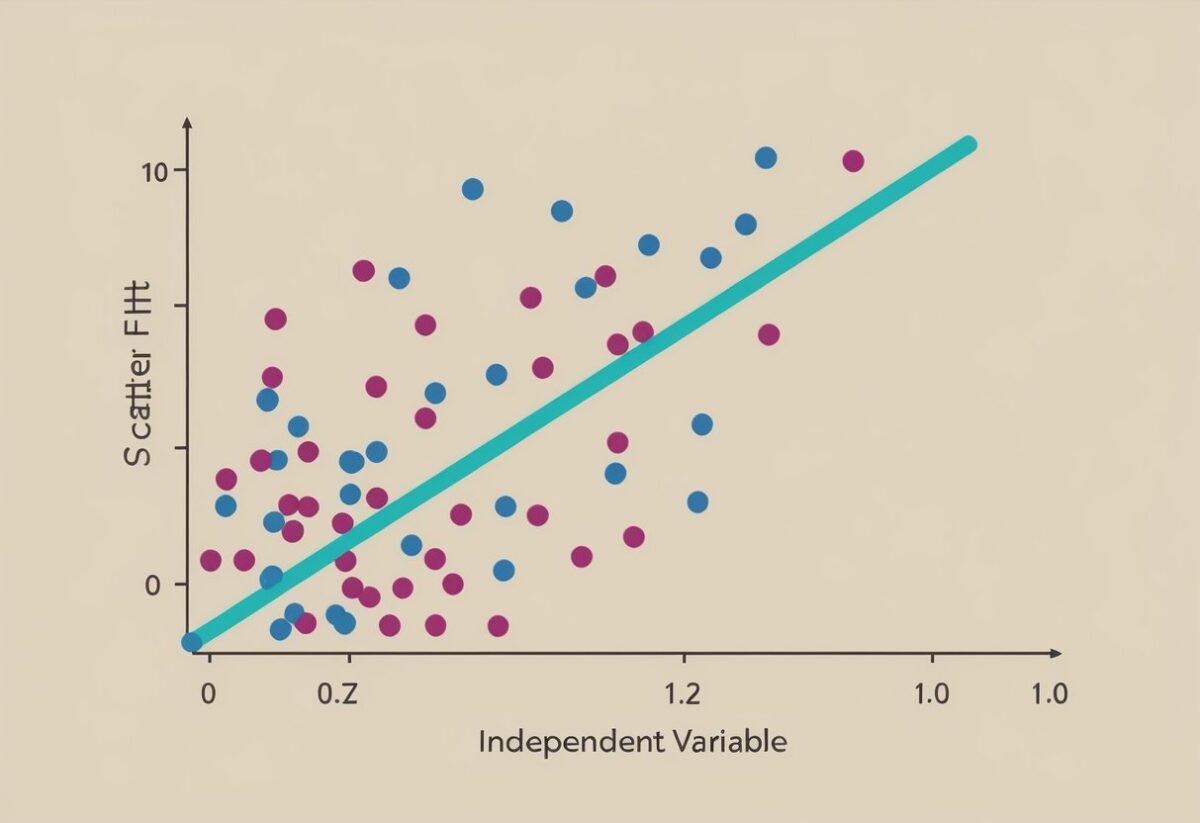

Partial Derivatives and Predictions

Partial derivatives are fundamental in multivariable calculus when exploring how a change in one variable impacts a function, while other variables remain constant.

In machine learning, this concept helps predict outcomes by analyzing various inputs.

Partial derivatives can help identify the rate of change of a function in a particular direction, providing insight into the relationship between variables and the function. This is especially useful in complex models where multiple inputs interact with each other. In such cases, understanding the influence of each input becomes crucial.

The concept of directionality given by partial derivatives is vital in predictive modeling. It helps in assessing which features are more important and why certain predictions are made. By understanding this, models can be fine-tuned to improve accuracy and performance.
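
To make this concrete, the sketch below estimates partial derivatives by nudging one input at a time while holding the others fixed. The function f(x, y) = x^2 y + 3y is an arbitrary example chosen for illustration.

```python
def partial_derivative(f, point, index, h=1e-6):
    """Central-difference estimate of the partial derivative of f
    with respect to the input at position `index`."""
    up, down = list(point), list(point)
    up[index] += h
    down[index] -= h
    return (f(*up) - f(*down)) / (2 * h)


def f(x, y):
    return x ** 2 * y + 3 * y


point = (2.0, 1.0)
df_dx = partial_derivative(f, point, 0)  # exact value: 2xy = 4
df_dy = partial_derivative(f, point, 1)  # exact value: x^2 + 3 = 7
print(df_dx, df_dy)
```

Here the output is more sensitive to y than to x at this point, which is the kind of comparison used when judging the influence of individual inputs.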

Optimization in Higher Dimensions

Optimization plays a critical role in enhancing the efficiency and effectiveness of machine learning models, particularly in higher dimensions. Multivariable calculus provides tools for finding optimal solutions in these complex scenarios.

In optimizing functions with multiple inputs, gradient descent is often employed. This method uses partial derivatives to navigate the multidimensional space, stepping against the gradient to seek a minimum of a function (gradient ascent steps along the gradient to seek a maximum).

Optimization in higher dimensions involves minimizing errors and enhancing the model’s success rate. By understanding the landscape created by multiple variables, it becomes easier to navigate towards solutions that improve predictions, ensuring that machine learning models perform at their best. Multivariable calculus provides the mathematical basis for these applications, making it indispensable.
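
A minimal two-variable sketch: the loss below is a hypothetical bowl-shaped function with its minimum at (1, -2), and each step moves against the vector of partial derivatives. The learning rate and step count are illustrative.

```python
def loss(w):
    x, y = w
    return (x - 1.0) ** 2 + 2.0 * (y + 2.0) ** 2


def gradient(w):
    # Partial derivatives of the loss with respect to x and y.
    x, y = w
    return (2.0 * (x - 1.0), 4.0 * (y + 2.0))


w = (0.0, 0.0)
learning_rate = 0.1
for step in range(200):
    g = gradient(w)
    w = (w[0] - learning_rate * g[0], w[1] - learning_rate * g[1])

print(w, loss(w))  # w approaches (1, -2) and the loss approaches 0
```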

Application of Derivatives in AI and Neural Networks

Derivatives play a crucial role in AI and neural networks by enabling optimization through algorithms like gradient descent and facilitating the learning process via backpropagation. These techniques are essential for training deep learning models.

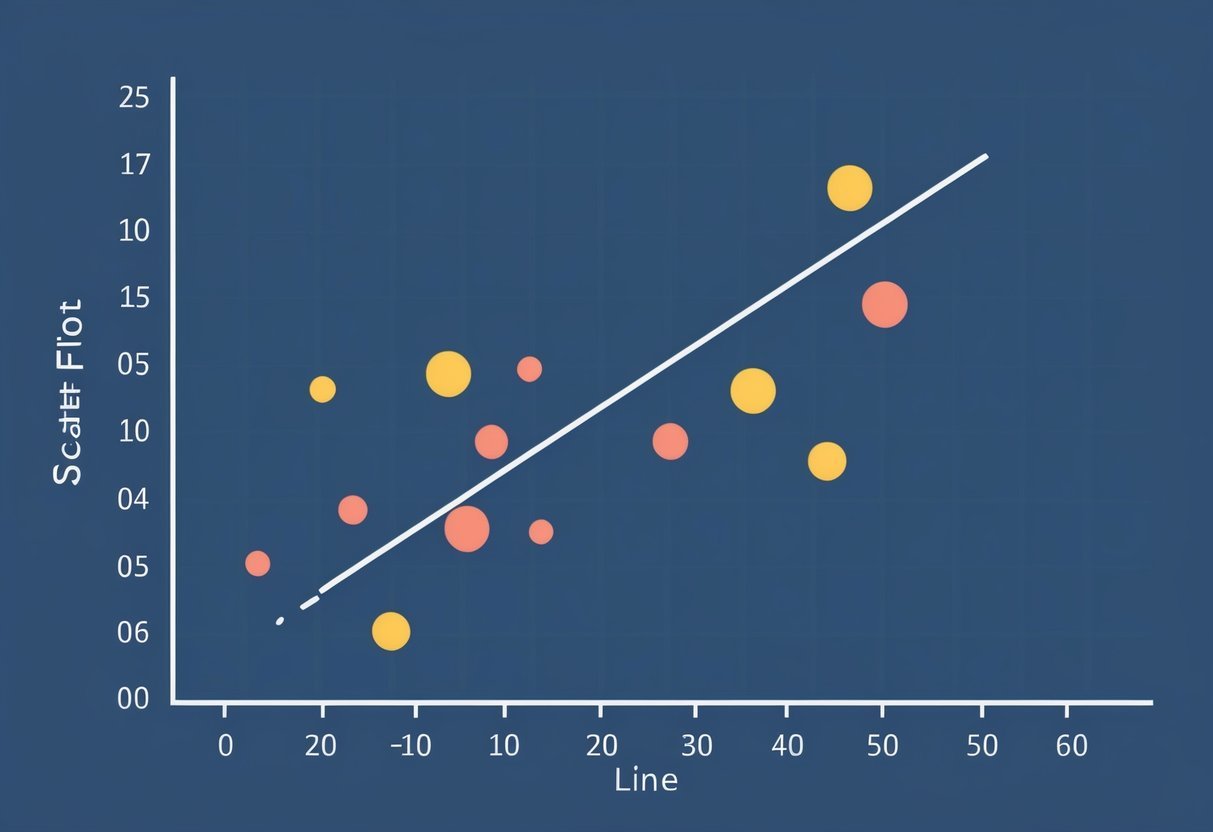

Gradient Descent Algorithm

The gradient descent algorithm is fundamental in optimizing neural networks. It helps in minimizing the loss function by adjusting the weights of the network.

At each step, the algorithm calculates the derivative of the loss function with respect to the network parameters. This gradient points in the direction of steepest increase, so the weights are adjusted in the opposite direction to decrease the error.

Gradients are calculated using techniques like automatic differentiation. This ensures precise updates in large and complex models.

Learning rate, a key parameter, influences the size of the steps taken towards the minimum. Proper tuning of the learning rate is essential to avoid overshooting or slow convergence.

This method allows AI models to learn from data effectively by iteratively improving their performance.
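
The effect of the learning rate can be seen on a toy loss w^2, whose derivative is 2w. The three rates below are illustrative picks: one too small, one reasonable, and one large enough to overshoot on every step.

```python
def run_gradient_descent(learning_rate, steps=20, start=5.0):
    """Minimize the toy loss w**2 and return the final w."""
    w = start
    for _ in range(steps):
        grad = 2.0 * w  # derivative of w**2
        w = w - learning_rate * grad
    return w


results = {lr: run_gradient_descent(lr) for lr in (0.01, 0.4, 1.1)}
for lr, final_w in results.items():
    print(lr, final_w)
```

With the smallest rate the weight is still far from zero after 20 steps, with the middle rate it is essentially at the minimum, and with the largest rate each update overshoots so the weight grows in magnitude.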

Understanding Backpropagation

Backpropagation is essential for training deep learning models. This method updates weights in neural networks by calculating the error gradient across network layers.

By using derivatives, backpropagation efficiently propagates errors from the output layer back to the previous layers, allowing adjustments to be made at each step.

This process relies heavily on partial derivatives to manage the contribution of each parameter to the output error.

Through these adjustments, the algorithm ensures that the model learns to produce accurate predictions. Understanding this concept is critical for those working with neural networks, as it directly impacts the accuracy and effectiveness of AI models.
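
The sketch below works through backpropagation by hand for a deliberately tiny, hypothetical network: one input, one sigmoid hidden unit, one linear output, and a squared-error loss. The parameter names and values are made up for illustration, and the analytic gradients are checked against finite differences.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)   # hidden activation
    return h, w2 * h + b2      # hidden activation and output


def loss(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backward(params, x, target):
    """Apply the chain rule from the output back to each parameter."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    dL_dy = y - target
    dL_dw2 = dL_dy * h
    dL_db2 = dL_dy
    dL_dh = dL_dy * w2
    dL_dz = dL_dh * h * (1.0 - h)  # sigmoid derivative
    return [dL_dz * x, dL_dz, dL_dw2, dL_db2]


params = [0.5, -0.3, 0.8, 0.1]
x, target = 1.5, 1.0
analytic = backward(params, x, target)


def numeric_grad(i, eps=1e-6):
    up, down = list(params), list(params)
    up[i] += eps
    down[i] -= eps
    return (loss(up, x, target) - loss(down, x, target)) / (2 * eps)


numeric = [numeric_grad(i) for i in range(len(params))]
print(analytic)
print(numeric)
```

Comparing against finite differences in this way is a common check when gradients are derived by hand.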

Statistics and Probability in Machine Learning

Statistics and probability are key components in the field of machine learning. These mathematical concepts help in predicting outcomes, understanding data patterns, and estimating uncertainties.

Probability Theory and Expectation

Probability theory is crucial for making predictions in machine learning. It involves calculating the likelihood of different outcomes.

For example, a model might predict whether an email is spam based on prior data. Probabilities are often expressed as values between 0 and 1, indicating the chance that a specific event will occur.

Expectation is another important concept. It provides the average outcome if an experiment is repeated many times.

In machine learning, expectation helps in improving model accuracy by estimating the expected loss or error, thus guiding optimization processes.
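
Two small illustrations, both with made-up numbers: the expected value of a fair die roll as a probability-weighted sum, and an expected squared-error loss estimated as the average over a handful of hypothetical predictions.

```python
from fractions import Fraction

# Expected value of a fair six-sided die: sum of outcome * probability.
outcomes = [1, 2, 3, 4, 5, 6]
probability = Fraction(1, 6)
expected_roll = sum(probability * o for o in outcomes)
print(expected_roll)  # 7/2

# Expected loss, estimated by averaging squared errors over samples.
predictions = [2.5, 0.0, 2.0, 8.0]
targets = [3.0, -0.5, 2.0, 7.0]
empirical_loss = sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)
print(empirical_loss)  # 0.375
```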

Statistical Implications of Derivatives

Derivatives in machine learning are not limited to optimization; they have statistical implications too. They are used in statistical models to describe changes.

For example, derivatives can indicate how a small change in input affects the output prediction of a model.

Understanding these derivatives helps in adjusting models for better accuracy. The process involves differentiating various statistical functions and applying them to real-world data.

This assists in creating more reliable and precise predictions, enhancing the overall effectiveness of machine learning models.
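
As a sketch of input sensitivity, consider a one-feature logistic model with hypothetical weight and bias. The derivative of the predicted probability p with respect to the input is w p (1 - p), and multiplying it by a small input change gives a first-order estimate of how the prediction moves.

```python
import math


def predict(x, w=1.2, b=-0.5):
    """Predicted probability of a one-feature logistic model."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def sensitivity(x, w=1.2, b=-0.5):
    # dp/dx = w * p * (1 - p)
    p = predict(x, w, b)
    return w * p * (1.0 - p)


x0, dx = 0.8, 0.01
linear_estimate = sensitivity(x0) * dx
actual_change = predict(x0 + dx) - predict(x0)
print(linear_estimate, actual_change)  # the two values are close
```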

Optimizing Algorithms for Machine Learning

Optimizing algorithms is essential for enhancing the performance of machine learning models.

Effective optimization deals with refining computational processes and improving model accuracy in both small-scale and large-scale scenarios.

Solving Optimization Problems

Optimization problems in machine learning focus on minimizing or maximizing a function to improve model performance.

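Key techniques include gradient descent, which iteratively adjusts parameters to find optimal solutions. Variations like stochastic gradient descent estimate the gradient from a random sample or mini-batch at each step, which makes each update cheaper on large datasets at the cost of noisier steps.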

Incorporating regularization techniques like L1 and L2 helps prevent overfitting by penalizing large coefficients.

Setting appropriate learning rates is vital for convergence.

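Adaptive learning rate methods, such as Adam and RMSprop, scale the step size for each parameter during training using running averages of past squared gradients. Adam additionally keeps a running average of the gradients themselves.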

Tracking loss functions helps in determining when optimization has achieved desired accuracy. Training eventually stops when improvements become marginal or convergence criteria are met.
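
The sketch below combines several of these ideas on a toy one-parameter regression: plain gradient descent, an optional L2 penalty, and a stopping rule that halts once the loss improves by less than a tolerance. The data, penalty strength, learning rate, and tolerance are all illustrative assumptions.

```python
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x


def loss(w, l2):
    mse = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
    return mse + l2 * w ** 2  # L2 penalty discourages large weights


def grad(w, l2):
    d_mse = sum(2.0 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)
    return d_mse + 2.0 * l2 * w


def fit(l2, learning_rate=0.01, tol=1e-12, max_steps=10_000):
    w, previous = 0.0, loss(0.0, l2)
    for step in range(1, max_steps + 1):
        w -= learning_rate * grad(w, l2)
        current = loss(w, l2)
        if previous - current < tol:  # improvement has become marginal
            break
        previous = current
    return w, step


w_plain, steps_plain = fit(l2=0.0)
w_ridge, steps_ridge = fit(l2=1.0)
print(w_plain, steps_plain)
print(w_ridge, steps_ridge)
```

Without the penalty the fitted weight approaches 2, and with the penalty it settles at a smaller value, which is the shrinkage that L2 regularization is meant to produce.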

Large-Scale Optimization Techniques

Large-scale optimization requires handling extensive datasets and complex models efficiently.

Distributing computations across multiple processors or GPUs reduces execution time and enhances performance. The MapReduce programming model and frameworks such as Hadoop manage large-scale data processing effectively.

Parallel optimization approaches, such as distributed gradient descent, update model parameters concurrently on different nodes.

This distribution reduces computation time significantly.
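
The core of the data-parallel approach can be simulated in a single process: each "worker" computes a gradient on its own shard, and the averaged gradient drives one shared update. The sketch below is a sequential simulation with made-up data, not real distributed code, and it assumes equal-sized shards so that the plain average matches the full-data gradient.

```python
def shard_gradient(w, shard):
    """Mean-squared-error gradient for the model y = w * x on one shard."""
    return sum(2.0 * x * (w * x - y) for x, y in shard) / len(shard)


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # generated by y = 3x
shards = [data[0:4], data[4:8]]  # two simulated workers

w = 0.0
learning_rate = 0.01
for step in range(200):
    worker_grads = [shard_gradient(w, shard) for shard in shards]
    averaged = sum(worker_grads) / len(worker_grads)
    w -= learning_rate * averaged

print(w)  # approaches 3
```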

Streaming data allows algorithms to process data in real-time, keeping models updated with minimal latency.

Using approximate methods can also help manage extensive computations. Techniques like randomized algorithms simplify complex computations while maintaining acceptable accuracy levels for large datasets.

Programming Tools for Derivatives and Differentiation

Understanding programming tools is crucial for handling derivatives and differentiation effectively. Python stands out for mathematical calculations, while TensorFlow and PyTorch provide robust libraries for machine learning tasks.

Leveraging Python for Mathematical Calculations

Python is a versatile language that excels in mathematical computations due to its vast library ecosystem.

NumPy is one of its core libraries, offering a powerful array object and tools for working with these arrays. It supports element-wise math, matrix operations, and numerical approximations of derivatives through finite differences (for example, numpy.gradient and numpy.diff).

For derivatives, SymPy is particularly useful. It enables symbolic computations that allow users to perform algebraic calculations, including derivatives.

SymPy’s ability to express and manipulate mathematical expressions symbolically is a significant advantage when dealing with complex math in machine learning.
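
A short sketch of symbolic differentiation, assuming SymPy is installed. It differentiates the polynomial (3x^2 + 2)^5 and confirms the result matches the hand-derived form 30x(3x^2 + 2)^4.

```python
import sympy as sp

x = sp.symbols("x")
h = (3 * x**2 + 2) ** 5

dh_dx = sp.diff(h, x)  # symbolic derivative with respect to x
expected = 30 * x * (3 * x**2 + 2) ** 4

print(dh_dx)
print(sp.simplify(dh_dx - expected) == 0)  # True when the two forms agree
```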

Another helpful package is SciPy, which builds on NumPy and provides additional tools for optimization, integration, and numerical differentiation. These are useful when derivatives must be estimated for functions that have no convenient closed form.

Python’s extensive community support and documentation make it easier to learn and implement differentiation in real-world applications.

Introduction to TensorFlow and PyTorch Libraries

TensorFlow is a popular framework for building machine learning models. It employs automatic differentiation, making it easier to calculate gradients for optimization tasks.

TensorFlow’s GradientTape is particularly handy, allowing dynamic computation of gradients during runtime, which is critical in training neural networks.

PyTorch, another leading library, offers a dynamic computation graph, making it intuitive for developers. Its autograd feature automatically computes derivatives, which simplifies backpropagation in deep learning models.

PyTorch is often praised for its ease of use and flexibility, making it a favorite among researchers experimenting with new ideas.

Both TensorFlow and PyTorch integrate well with Python, offering extensive functionalities for machine learning. These libraries are not only essential for calculating derivatives but also for implementing entire learning models.

Their active communities and comprehensive documentation provide ample support for developers navigating complex differentiation tasks.

Advanced Topics in Derivatives for ML

Advanced derivative techniques in machine learning involve the use of the Jacobian matrix and automatic differentiation. These topics enhance the efficiency and accuracy of machine learning models.

Understanding the Jacobian Matrix

The Jacobian matrix is a crucial tool in machine learning, especially in optimization problems. It represents the first-order partial derivatives of a vector-valued function. The Jacobian helps determine how changes in inputs affect outputs.

Example: In neural networks, the Jacobian is used to compute gradients, essential for updating weights during training. By analyzing the Jacobian, one can understand sensitivity and stability in systems.

The structure of the Jacobian is a matrix where each element represents the rate of change of an output variable with respect to an input variable. This makes it vital in backpropagation and in algorithms where precise gradient calculation is necessary. It is also key in linearizing nonlinear systems around operating points.
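
As an illustration of that structure, the sketch below estimates the Jacobian of a hypothetical two-input, two-output function column by column using central differences. Entry J[i][j] is the rate of change of output i with respect to input j.

```python
def f(v):
    x, y = v
    return [x * y, x + y ** 2]


def numerical_jacobian(func, point, h=1e-6):
    n_out = len(func(point))
    jac = [[0.0] * len(point) for _ in range(n_out)]
    for j in range(len(point)):
        up, down = list(point), list(point)
        up[j] += h
        down[j] -= h
        f_up, f_down = func(up), func(down)
        for i in range(n_out):
            jac[i][j] = (f_up[i] - f_down[i]) / (2 * h)
    return jac


J = numerical_jacobian(f, [2.0, 3.0])
# Exact Jacobian is [[y, x], [1, 2y]] = [[3, 2], [1, 6]] at (2, 3).
for row in J:
    print(row)
```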

Automatic Differentiation Techniques

Automatic differentiation (AD) is a method to compute derivatives efficiently. Unlike symbolic differentiation, which can produce very large expressions, or numerical differentiation, which introduces truncation and rounding errors, AD offers a balance of accuracy and efficiency.

AD works by breaking down functions into operations for which derivatives are known. Using the chain rule, derivatives of complex functions are calculated.

This process is automatic and does not require analytical computation by the user.

Common libraries like TensorFlow and PyTorch utilize AD to perform gradient calculations quickly and accurately, supporting the training of large-scale models.

AD is integral for neural network frameworks, allowing for seamless integration with gradient-based optimizers, making it indispensable for modern machine learning applications.
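
One way to see how AD works is forward-mode differentiation with dual numbers, sketched below. This is a teaching example with a hypothetical `Dual` class supporting only addition, multiplication, and sine. It is not how TensorFlow or PyTorch are implemented, since those frameworks mainly use reverse-mode AD.

```python
import math


class Dual:
    """A value paired with its derivative with respect to one input."""

    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    @staticmethod
    def lift(other):
        # Treat plain numbers as constants (derivative zero).
        return other if isinstance(other, Dual) else Dual(float(other))

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual.lift(other)
        # Product rule applied to the derivative part.
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def sin(d):
    # Chain rule: d/dx sin(u) = cos(u) * u'
    return Dual(math.sin(d.value), math.cos(d.value) * d.deriv)


def f(x):
    return x * x * x + 2 * x + sin(x)


x = Dual(2.0, 1.0)  # seed the input's derivative with 1
result = f(x)
print(result.value, result.deriv)  # derivative is 3x^2 + 2 + cos(x) at x = 2
```

Each elementary operation carries its own derivative rule, so the derivative of the whole expression falls out of ordinary evaluation.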

Practical Uses of Calculus in Data Science

Calculus plays an essential role in data science by allowing data scientists to model and understand complex changes in data. It also provides tools to optimize algorithms, especially in machine learning.

Data Scientists and Calculus

Data scientists use calculus to handle data changes and trends effectively. Calculus enables them to create predictive models and perform optimization.

For instance, partial derivatives help in calculating the rate of change in multivariable functions, which is crucial for building accurate models. This is particularly important in training algorithms where precision is key.

Moreover, data scientists use differential calculus to understand relationships between variables. By analyzing how one variable affects another, they can derive insights and suggest data-driven decisions.

Understanding these changes allows data scientists to make informed predictions, enhancing analytical accuracy and effectiveness.

Machine Learning Engineering Challenges

Machine learning engineers often face challenges that require calculus-based solutions.

For example, finding the minimum or maximum of functions is essential in machine learning models. Calculus tools, like derivatives, help engineers optimize algorithms by adjusting parameters to achieve desired outputs.

Calculus also assists in gradient descent, a method used to minimize a function by iteratively moving in the direction of steepest descent, which is the negative of the gradient. This technique is crucial in neural network training and other machine learning frameworks.

Calculus concepts underpin machine learning techniques like backpropagation, where understanding the chain rule allows for efficient computation of gradients, crucial for learning and improving model predictions.

Specialized Applications of Derivatives

Derivatives play a crucial role in various machine learning tasks. They are especially useful in specialized fields like computer vision and natural language processing, where they enhance the model’s ability to learn and improve.

Tackling Computer Vision with Geometry and Calculus

In computer vision, derivatives help machines understand and interpret visual information. They are essential for edge detection, where they identify changes in image intensity.

By calculating the gradient of pixel values, algorithms can pinpoint edges in images, which are important for identifying shapes and objects.
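
A minimal sketch of gradient-based edge detection on a tiny, made-up grayscale image: the horizontal gradient is estimated with a central difference between neighboring pixels, and columns where it exceeds an arbitrary threshold are reported as edges. Practical detectors typically use smoothed filters such as Sobel kernels.

```python
# Hypothetical 3x6 grayscale image: dark on the left, bright on the right.
image = [
    [10, 10, 10, 200, 200, 200],
    [10, 10, 10, 200, 200, 200],
    [10, 10, 10, 200, 200, 200],
]


def horizontal_gradient(img):
    """Central-difference intensity gradient for interior columns."""
    return [[(row[c + 1] - row[c - 1]) / 2 for c in range(1, len(row) - 1)]
            for row in img]


def edge_columns(img, threshold=50):
    cols = set()
    for row in horizontal_gradient(img):
        for i, g in enumerate(row):
            if abs(g) > threshold:
                cols.add(i + 1)  # shift back to original column index
    return sorted(cols)


print(horizontal_gradient(image)[0])  # [0.0, 95.0, 95.0, 0.0]
print(edge_columns(image))            # [2, 3]
```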

Derivatives also aid in feature extraction. They help in transforming raw image data into meaningful information by analyzing texture and patterns.

This enables algorithms to classify and recognize objects accurately. For instance, convolutional neural networks (CNNs) rely heavily on derivatives to optimize image recognition through backpropagation. This process adjusts the network’s weights by minimizing error, enhancing the model’s performance and precision.

Incorporating Calculus in Natural Language Processing

Natural language processing (NLP) uses derivatives to refine text analysis and understanding.

Calculus helps in training models like recurrent neural networks (RNNs) and transformers by optimizing their learning processes.

When applied to NLP, derivatives contribute to adjusting model parameters, which is vital for improving language translation, sentiment analysis, and text generation tasks.

Derivatives are also vital for gradient-based optimization methods used in NLP. For instance, adaptive optimizers use gradient statistics to scale the step size for each parameter when fine-tuning a model.

This results in more efficient learning and better handling of large and complex datasets, enhancing the machine’s ability to comprehend and generate human language effectively.

Learning Resources for Machine Learning

Finding the right resources for learning the math behind machine learning involves exploring ebooks and online platforms. Ebooks provide structured content, while online platforms, like deeplearning.ai, offer interactive courses to help learners build strong math skills.

Selecting the Right Mathematics Ebook

Choosing a well-structured mathematics ebook can greatly enhance one’s learning journey in machine learning.

It’s important for the ebook to cover fundamental topics such as derivatives and differentiation. A good ebook should also present concepts in a clear, step-by-step manner, integrating examples and exercises to reinforce learning.

Basic prerequisites include understanding calculus and linear algebra. Books like “Mathematics for Machine Learning” are designed to bridge gaps between high school math and complex machine learning concepts.

Ebooks often offer the advantage of portability and interactive elements, such as embedded quizzes or links to further resources, enhancing the learning experience.

Benefiting from Online Platforms like deeplearning.ai

Online platforms like deeplearning.ai provide a comprehensive approach to learning machine learning math.

These platforms offer courses that cover essential math skills, presented by industry experts. They often include video lectures, hands-on projects, and quizzes to test understanding.

Learners benefit from a flexible schedule, allowing them to learn at their own pace. Discussion forums and peer collaborations on these platforms foster a community learning environment.

This interactive learning can help solidify mathematical concepts through practical applications, making it an effective supplement to more traditional learning resources like ebooks.

Frequently Asked Questions

In the field of machine learning, understanding derivatives and differentiation is crucial for optimizing models and applying algorithms effectively. Several mathematical concepts are essential, especially when dealing with neural networks and matrix calculus.

What is the significance of derivatives in optimizing neural network models?

Derivatives play a key role in adjusting neural network weights. By calculating derivatives, one determines how much to change weights to minimize errors. This allows the model to learn from data quickly and efficiently.

How do gradient descent algorithms utilize differentiation?

Gradient descent algorithms use differentiation to find the best parameters for a model. By following the slope given by derivatives, these algorithms can identify the direction in which to adjust parameters, gradually reducing the model’s error term.

What are the fundamental calculus concepts necessary for understanding deep learning?

Basic calculus concepts such as limits, derivatives, and integrals are important for deep learning. Derivatives and the chain rule matter most, because backpropagation, the procedure used to train neural networks, is a systematic application of the chain rule.

In what ways do matrix calculus and derivatives intersect in machine learning?

Matrix calculus is crucial for dealing with neural networks that involve multiple input and output nodes. Differentiation in this context helps manage the computations of multivariable functions, which is typical in machine learning models.

Can a strong grasp of derivatives predict success in learning machine learning algorithms?

A strong understanding of derivatives can greatly enhance one’s ability to grasp machine learning concepts. It enables efficient learning and implementation of optimization techniques, a core part of most machine learning algorithms.

What prerequisites in mathematics should one have to effectively tackle the concept of differentiation in machine learning?

A solid foundation in algebra, calculus, and linear algebra is essential. Knowledge of these areas will make it easier to understand how differentiation operates within various machine learning models. This includes training deep learning networks.