Understanding SQL Rollup

SQL Rollup is a tool used in database queries to summarize data and calculate subtotals and grand totals efficiently. It allows for various levels of aggregation within a hierarchy, simplifying complex data into more digestible forms.

Defining Rollup

Rollup is an extension of the SQL GROUP BY clause. It simplifies data analysis by adding summary rows that aggregate the data at several levels of the grouping columns.

For instance, when dealing with sales data for various products, Rollup can compute subtotals for each product category and a grand total for all sales.

This is beneficial in organizing and interpreting large datasets.

The syntax lists the grouping columns in hierarchical order, such as ROLLUP(A, B, C). SQL then groups and aggregates the data at each level of that hierarchy by dropping the rightmost column one step at a time. This creates the grouping sets (A, B, C), (A, B), (A), and finally the empty set (), which produces the overall total. Unlike CUBE, ROLLUP does not generate every possible combination of the columns.
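As a minimal sketch of that rule, the Python function below lists the grouping sets that ROLLUP expands to for a given column list. It only models the semantics and does not query a database; the function name rollup_grouping_sets is hypothetical.

```python
def rollup_grouping_sets(columns):
    """Return the grouping sets that ROLLUP(columns...) expands to.

    ROLLUP keeps the full column list, then drops the rightmost column
    one at a time, ending with the empty set (the grand total).
    """
    return [tuple(columns[:i]) for i in range(len(columns), -1, -1)]


print(rollup_grouping_sets(["A", "B", "C"]))
# [('A', 'B', 'C'), ('A', 'B'), ('A',), ()]
```

Each shorter tuple corresponds to one level of subtotals, and the empty tuple is the grand total.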

The Purpose of Rollup in SQL

Rollup serves the essential function of data aggregation. When a database contains hierarchical data, Rollup efficiently computes subtotals at each level of the hierarchy.

For example, in a sales report, it can generate totals for each region, then for each country within a region, and finally a grand total for all regions. This is particularly useful for reports that require data to be summed up at different levels.

The SQL ROLLUP also aids in generating these comprehensive reports by calculating the necessary subtotals and the grand total in a single query, instead of requiring several separate GROUP BY queries to be written and combined by hand. This saves time and reduces complexity.
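To see what a single ROLLUP replaces, the sketch below builds the result of GROUP BY ROLLUP(region, country) by hand. It uses Python's standard sqlite3 module; because SQLite does not support ROLLUP, the three levels are written as separate GROUP BY queries combined with UNION ALL. The sales table, its columns, and its rows are invented for illustration.

```python
import sqlite3

# Hypothetical sample data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, country TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("Europe", "France", 100),
        ("Europe", "France", 50),
        ("Europe", "Germany", 200),
        ("Asia", "Japan", 300),
    ],
)

# Hand-written equivalent of GROUP BY ROLLUP(region, country).
# NULL stands in for a column that has been rolled up.
query = """
SELECT region, country, SUM(amount) AS total
FROM sales GROUP BY region, country   -- detail rows
UNION ALL
SELECT region, NULL, SUM(amount)
FROM sales GROUP BY region            -- one subtotal per region
UNION ALL
SELECT NULL, NULL, SUM(amount)
FROM sales                            -- grand total
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

A database with native ROLLUP support returns the same six rows from a single GROUP BY ROLLUP(region, country) clause.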

SQL Rollup Syntax

The SQL Rollup provides a way to create summaries in query results by adding subtotals and grand totals. Understanding its syntax helps users create efficient data aggregations and can be particularly useful in reporting scenarios.

Basic Rollup Syntax

In SQL, the Rollup syntax is used within the GROUP BY clause. It allows the user to generate summary rows in the result set. The basic format is as follows:

SELECT column1, column2, aggregate_function(column3)
FROM table_name
GROUP BY ROLLUP (column1, column2);

ROLLUP processes the columns inside the parentheses from left to right, treating each one as a level of the hierarchy. The result contains the regular grouped rows, a subtotal row for each higher level (with NULL in the columns that have been rolled up), and a grand total row.

Mastering this syntax enables analysts to quickly produce complex reports.

Rollup with Group By Clause

When used in a GROUP BY clause, ROLLUP is an extension that simplifies creating multiple grouping sets. While a regular GROUP BY returns one row for each unique combination of the grouping columns, adding ROLLUP also returns a subtotal row for each level of the hierarchy and a grand total row.

Unlike the CUBE operator, ROLLUP does not produce all possible combinations of the grouping columns. It produces only the hierarchical groupings: n + 1 grouping sets for n columns, compared with 2^n for CUBE, which keeps the result smaller and cheaper to compute.
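The difference in size can be sketched in a few lines of Python that enumerate the grouping sets each operator would produce. This models the semantics only; rollup_sets, cube_sets, and the column names are hypothetical.

```python
from itertools import combinations


def rollup_sets(columns):
    # ROLLUP: all columns, then drop the rightmost one each step -> n + 1 sets.
    return [tuple(columns[:i]) for i in range(len(columns), -1, -1)]


def cube_sets(columns):
    # CUBE: every subset of the columns -> 2 ** n sets.
    return [
        subset
        for size in range(len(columns), -1, -1)
        for subset in combinations(columns, size)
    ]


cols = ["year", "region", "product"]
print(len(rollup_sets(cols)), len(cube_sets(cols)))  # 4 8
```

Every ROLLUP grouping set is also one of the CUBE grouping sets; CUBE simply adds the combinations that break the hierarchy, such as (region, product) without year.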

Note that column order matters, in SQL Server as in other databases: ROLLUP(region, country) produces a subtotal for each region, while ROLLUP(country, region) produces a subtotal for each country. List the columns from the broadest level to the most detailed. This makes Rollup a valuable tool for summarizing complex datasets in business environments.

Working with Aggregate Functions

In SQL, aggregate functions like SUM, COUNT, and AVG are powerful tools for summarizing large datasets. These functions, combined with ROLLUP, can generate important insights, such as subtotals and grand totals, to aid decision-making.

Using Sum with Rollup

The SUM function is essential for adding values in a dataset. When used with ROLLUP, it can provide both subtotals for groups and a grand total. This feature is useful for generating sales reports or financial summaries.

For instance, to calculate the total sales per product category and overall, the query might look like this:

SELECT category, SUM(sales)
FROM sales_data
GROUP BY ROLLUP(category);

In this example, each category’s total sales are calculated, and ROLLUP adds an extra row, with NULL in the category column, showing the total sales for all categories combined. Seeing the detailed and the aggregate figures in one result makes the data easier to evaluate.

Count, Avg, and Other Aggregates

Aggregate functions such as COUNT and AVG also benefit from using ROLLUP. The COUNT function is used to tally items in a dataset, while AVG calculates average values.

For example, using COUNT with ROLLUP counts customer visits per store and then adds a grand total of all visits:

SELECT store, COUNT(customer_id)
FROM visits
GROUP BY ROLLUP(store);

Similarly, AVG with ROLLUP provides average sales data per region, with an overall average row. These applications are invaluable in identifying trends and assessing performance across categories. By leveraging these functions with ROLLUP, SQL users can efficiently interpret various data points.
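One detail worth checking is how the overall AVG row is computed: it averages all underlying rows, not the per-region averages, so the two differ when regions have different numbers of rows. The sketch below checks this with Python's sqlite3 module. SQLite has no ROLLUP, so the overall row that GROUP BY ROLLUP(region) would add is computed with a separate AVG query; the sales table and values are assumptions.

```python
import sqlite3

# Hypothetical data: North has three rows, South has one.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("North", 10.0), ("North", 20.0), ("North", 30.0), ("South", 100.0)],
)

# The per-region rows of GROUP BY ROLLUP(region).
per_region = dict(
    conn.execute("SELECT region, AVG(amount) FROM sales GROUP BY region")
)

# The overall row ROLLUP(region) would add: AVG over all four rows.
(overall,) = conn.execute("SELECT AVG(amount) FROM sales").fetchone()

# A naive "average of averages", which is NOT what ROLLUP computes.
avg_of_avgs = sum(per_region.values()) / len(per_region)

print(per_region, overall, avg_of_avgs)
```

Here the overall row is 40.0 (160 / 4), while averaging the two regional averages would give 60.0.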

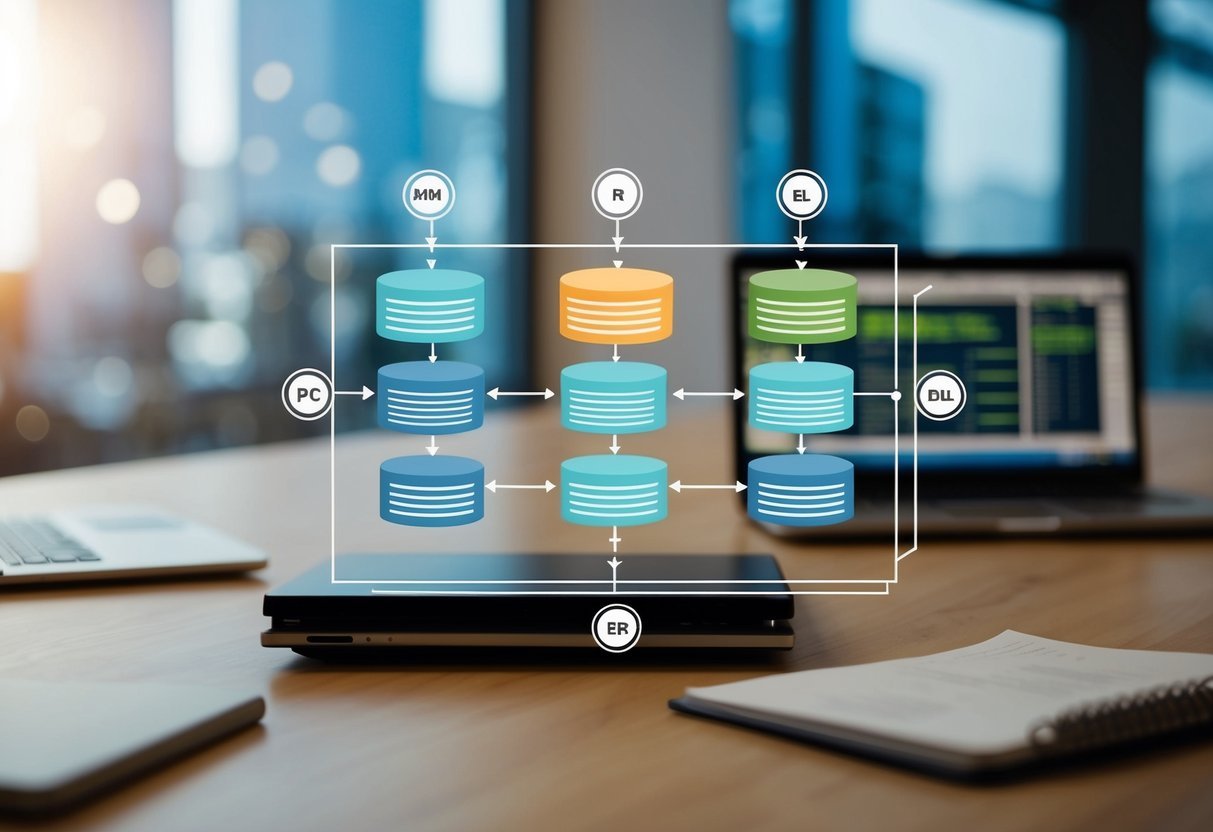

Hierarchical Data and Rollups

In SQL, the handling of hierarchical data often involves organizing data into different levels. This organization can make data analysis more structured and meaningful. Rollups play a crucial role in this process by simplifying the generation of summary rows for various levels within a hierarchy.

Understanding Hierarchical Aggregation

Hierarchical aggregation involves arranging data into a parent-child structure. This is common in business settings where information is segmented by categories such as regions, product types, or time periods. SQL Rollup can simplify aggregating data at each hierarchical level, providing subtotals that enhance decision-making.

For example, in a sales database, employees might be grouped by department, and those departments grouped by region. Using Rollup, SQL can calculate totals at each level automatically: sales for each department within each region, a subtotal for each region, and overall sales for all regions. Because the Rollup feature extends the GROUP BY clause, you see all of these levels in one result without manual calculations.
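The same hierarchy can be modeled outside the database. The Python sketch below emulates SUM(sales) ... GROUP BY ROLLUP(region, department) over a list of dictionaries, adding each row to its department total, its region subtotal, and the grand total. The helper rollup_sum and the sample rows are hypothetical, and the sketch assumes the key columns never hold a genuine None (a real database uses the GROUPING() function to tell those apart).

```python
from collections import defaultdict


def rollup_sum(rows, keys, value):
    """Emulate SUM(value) ... GROUP BY ROLLUP(keys) over a list of dicts.

    Result keys are tuples as long as `keys`; rolled-up positions hold None,
    mirroring the NULLs a database shows in subtotal rows. Assumes the key
    columns never contain a genuine None.
    """
    totals = defaultdict(int)
    for row in rows:
        # Add the row to every level: all keys, then fewer keys from the right.
        for level in range(len(keys), -1, -1):
            group = tuple(row[k] for k in keys[:level])
            group += (None,) * (len(keys) - level)
            totals[group] += row[value]
    return dict(totals)


rows = [
    {"region": "East", "department": "Toys", "sales": 5},
    {"region": "East", "department": "Books", "sales": 7},
    {"region": "West", "department": "Toys", "sales": 3},
]
result = rollup_sum(rows, ["region", "department"], "sales")
for group, total in result.items():
    print(group, total)
```

For the sample rows this yields the three department totals, the subtotals ("East", None) = 12 and ("West", None) = 3, and the grand total (None, None) = 15.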

Rollup with Hierarchical Categorization

Rollup is especially useful when data is categorically hierarchical, meaning categories exist within other categories. By using Rollup, users can define data groupings and easily generate reports that include both subtotals and grand totals.

In a retail scenario, electronics products might be divided into types, and each type further split into brands. With SQL Server Rollup, this hierarchy can be summarized efficiently: sales figures for each brand within each type, a subtotal for each electronic type, and finally a total for all electronics sales. This layered aggregation helps businesses understand performance across different dimensions without complex SQL queries. For a practical application of these concepts, see SQL Server ROLLUP with simple examples.

Using Rollup for Subtotals and Grand Totals

In SQL, the ROLLUP function helps to create subtotals and a grand total row when dealing with grouped data. This can be particularly useful for analyzing sales data where aggregated results are necessary for decision-making.

Generating Subtotals

When using ROLLUP in SQL, subtotals are generated by applying aggregate functions at each level of the grouping. For instance, in a sales database grouped by product and then by month, a regular GROUP BY already returns the sales for each month per product; ROLLUP adds a subtotal row for each product across all months and a grand total row.

To implement this, the query uses the GROUP BY clause with ROLLUP to create the grouping sets (Product, Month), (Product), and ().

For example, the SQL snippet might look like:

SELECT Product, Month, SUM(Sales) AS TotalSales
FROM SalesData
GROUP BY Product, Month WITH ROLLUP;

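This query returns the sales for each product and month, plus a subtotal row for each product (with Month set to NULL) and a grand total row. The subtotal rows show how each product's sales add up across the time periods. The WITH ROLLUP form shown here is MySQL syntax, which SQL Server also accepts; in SQL Server, PostgreSQL, and Oracle the standard equivalent is GROUP BY ROLLUP(Product, Month).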

Calculating Grand Totals

ROLLUP also adds a grand total row, which gives the overall sum of all sales figures in the data set. It usually appears as the last row, but SQL does not guarantee row order without an ORDER BY clause. A grand total helps in understanding the complete picture, summing up sales across all categories.

In the previous example, ROLLUP computes one final grand total row, with NULL in both the Product and Month columns, showing the sum for the entire data set. This comprehensive view of total sales performance supports decision-making.

Advanced Rollup Operations

Advanced Rollup operations in SQL allow users to create reports with various levels of aggregation. By mastering these techniques, one can efficiently summarize data and generate comprehensive insights for data analysis.

Partial Roll-ups

A partial roll-up summarizes only a subset of the grouping columns. This is useful when you need subtotals along some dimensions but not others, and it keeps the result smaller than a full roll-up, which matters with large datasets. The rollup operator extends the GROUP BY clause, generating super-aggregate rows at different levels.

In databases that support the standard syntax, such as SQL Server, PostgreSQL, and Oracle, a partial roll-up is written by placing some columns in the GROUP BY list outside the ROLLUP. For instance, GROUP BY C, ROLLUP(A, B) rolls up only A and B: C is kept in every grouping set, so the result contains totals for (C, A, B), (C, A), and (C), but no grand total. This is equivalent to listing those grouping sets explicitly with GROUPING SETS, and it is useful in complex queries where certain dimensions need more focus than others.
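The Python sketch below models which grouping sets a clause such as GROUP BY C, ROLLUP(A, B) produces, where C stays fixed and only A and B are rolled up. The helper partial_rollup_sets is hypothetical and does not query a database.

```python
def partial_rollup_sets(fixed, rolled):
    """Grouping sets for GROUP BY <fixed...>, ROLLUP(<rolled...>).

    The fixed columns appear in every set, so there is no grand-total row.
    """
    return [
        tuple(fixed) + tuple(rolled[:i])
        for i in range(len(rolled), -1, -1)
    ]


print(partial_rollup_sets(["C"], ["A", "B"]))
# [('C', 'A', 'B'), ('C', 'A'), ('C',)]
```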

Rollup Combined with Cube

Combining rollup with a cube operation offers even broader insights, as it allows for aggregation across multiple dimensions. While a rollup provides a hierarchical level of data aggregation, a cube offers a comprehensive cross-tabulation of all possible combinations.

Using both operators in one GROUP BY clause, one can gain a more complete picture of how different factors influence the metrics being analyzed: the rollup part summarizes a hierarchy, such as year and quarter, while the cube part cross-tabulates other dimensions. Such operations are valuable in scenarios where businesses require detailed reports involving various factors. Because the grouping sets of the two operators are combined with each other, the number of result levels grows quickly, so use this combination only where each level is actually needed.
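As a sketch of how the two operators combine, the Python snippet below concatenates the grouping sets of ROLLUP(year, quarter) with those of CUBE(region, product). PostgreSQL and Oracle document a GROUP BY that lists several grouping elements this way, as a cross product of their grouping sets. The column names are hypothetical, and the sets are written out by hand rather than generated.

```python
from itertools import product

# Grouping sets contributed by each operator (written out by hand).
rollup_part = [("year", "quarter"), ("year",), ()]  # ROLLUP(year, quarter)
cube_part = [("region", "product"), ("region",), ("product",), ()]  # CUBE(region, product)

# GROUP BY ROLLUP(year, quarter), CUBE(region, product):
# every ROLLUP set is concatenated with every CUBE set.
combined = [r + c for r, c in product(rollup_part, cube_part)]

print(len(combined))  # 12
for grouping_set in combined[:3]:
    print(grouping_set)
```

Three ROLLUP levels times four CUBE combinations gives twelve grouping sets, ranging from the full (year, quarter, region, product) down to the empty grand-total set.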

Group By Enhancements with Rollup

The Group By clause in SQL can be enhanced using Rollup, which is used to create subtotals and grand totals. These functionalities help in making complex data analysis easier and more streamlined.

Grouping Sets and Rollup

GROUPING SETS is an extension of the Group By clause that allows multiple groupings in a single query, and SQL Server is one of the databases that supports it. ROLLUP is a shorthand for a particular list of grouping sets: ROLLUP(a, b) is equivalent to GROUPING SETS ((a, b), (a), ()), so it calculates the subtotals and the final grand total automatically.

MySQL does not support GROUPING SETS, but its WITH ROLLUP modifier can be added to the end of the Group By clause for multi-level data analysis. It produces the same kind of extra rows, a subtotal for each level of the grouping plus a grand total, providing more detailed summaries of the data.

Group By with Super-aggregate Rows

Rollup is a powerful extension of the SQL Group By clause. Besides grouping data, it creates super-aggregate rows that summarize the data at each higher level of the hierarchy. These rows are the subtotals of the grouped data, and the final level is the grand total.

Using Rollup in SQL Server, users can streamline data analysis by combining different dimensions. The grand total row is particularly useful for overseeing overall data trends. When used correctly, it can greatly enhance the clarity and depth of data analysis within a single SQL query.
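Because super-aggregate rows show NULL in rolled-up columns, a row whose category is genuinely NULL can look identical to the grand total. Databases with full ROLLUP support, such as SQL Server, PostgreSQL, Oracle, and MySQL 8.0, provide the GROUPING() function for this; it returns 1 when the column was rolled up. SQLite has neither ROLLUP nor GROUPING(), so the sketch below emulates both with UNION ALL and an explicit is_total flag column. The table and data are invented.

```python
import sqlite3

# Hypothetical data, including one row with no category.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (category TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("Toys", 10), ("Books", 20), (None, 5)],
)

# Emulates: SELECT category, GROUPING(category), SUM(amount)
#           FROM sales GROUP BY ROLLUP(category)
query = """
SELECT category, 0 AS is_total, SUM(amount) FROM sales GROUP BY category
UNION ALL
SELECT NULL, 1, SUM(amount) FROM sales
"""
rows = conn.execute(query).fetchall()
for category, is_total, total in rows:
    # Without the flag, the two rows with a NULL category would be ambiguous.
    label = "Grand total" if is_total else (category or "(no category)")
    print(label, total)
```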

Implementing Rollup in SQL Databases

SQL Rollup is an important tool for generating detailed reports by summarizing data. It extends the functionality of the GROUP BY clause, making it easier to calculate subtotals and grand totals in databases like Microsoft SQL Server and MySQL.

Rollup in Microsoft SQL Server

In Microsoft SQL Server, the Rollup is a subclause that simplifies generating multiple grouping sets. When an SQL query includes a Rollup, it creates summary rows, providing subtotals and a grand total row. This allows users to quickly analyze different levels of data aggregation within a single query.

For example, consider a sales table. By using Rollup, one can calculate total sales for each product category along with a grand total. This reduces the number of queries needed and increases efficiency.

Rollup is ideal for creating hierarchical reports that need different granularities of data.

Rollup in MySQL and Other Databases

In MySQL, Rollup is also used to generate aggregated results with subtotals and a grand total. MySQL uses the WITH ROLLUP modifier at the end of the GROUP BY list (for example, GROUP BY category WITH ROLLUP) rather than the GROUP BY ROLLUP(...) form. This is especially useful when data needs to be summarized along several grouping columns at once.

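Rollup can provide insights by showing detailed data alongside summaries for evaluation. In other databases the syntax varies: PostgreSQL (9.5 and later) and Oracle use the standard GROUP BY ROLLUP(...) form, while SQLite does not support ROLLUP at all. Wherever it is available, the core functionality remains consistent.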

Ensuring that queries are well-structured can make data analysis more intuitive and informative.

Filtering Aggregated Data

When working with SQL Rollups, filtering the aggregated data correctly is crucial. This process often involves using specific clauses to refine results and ensure meaningful data presentation.

Using Having with Rollup

The HAVING clause is a vital tool when filtering aggregated data in SQL, especially when using Rollup. Unlike the WHERE clause, which filters rows before aggregation, the HAVING clause applies conditions after data aggregation. This allows users to set conditions on the result of aggregate functions like SUM or COUNT.

For instance, when calculating total sales per product, HAVING can be used to show only those products with sales exceeding a specific threshold. This approach is useful in scenarios where users want to highlight significant data points without being affected by less relevant information.
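The following sketch shows how HAVING interacts with the rows ROLLUP adds. HAVING is evaluated on every output group, including the grand-total row, but it does not recompute the totals, so a hidden product still counts toward the grand total; WHERE, by contrast, removes rows before aggregation. SQLite (used through Python's sqlite3 module) has no ROLLUP, so the query emulates GROUP BY ROLLUP(product) HAVING SUM(amount) > 100 with UNION ALL and an outer filter. The table and values are invented.

```python
import sqlite3

# Hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("Laptop", 900), ("Phone", 400), ("Cable", 15), ("Cable", 10)],
)

# Emulates:  SELECT product, SUM(amount) AS total FROM sales
#            GROUP BY ROLLUP(product) HAVING SUM(amount) > 100
# HAVING sees every output group, including the grand total, so the
# filter is applied to the combined result here.
having_query = """
SELECT * FROM (
    SELECT product, SUM(amount) AS total FROM sales GROUP BY product
    UNION ALL
    SELECT NULL, SUM(amount) FROM sales
) WHERE total > 100
"""
having_rows = conn.execute(having_query).fetchall()
print(having_rows)

# WHERE filters rows *before* aggregation, so it does change the total.
(where_total,) = conn.execute(
    "SELECT SUM(amount) FROM sales WHERE amount > 100"
).fetchone()
print(where_total)  # 1300
```

Cable's total of 25 is filtered out, yet the grand total row still reports 1325, which includes it. Filtering with WHERE instead drops the Cable rows before aggregation and gives 1300.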

Understanding the distinction and correct application of HAVING ensures precise data filtering after running rollup operations, which helps in generating cleaner and more readable reports.

Order By and Rollup

The ORDER BY clause enhances data presentation by arranging the output in a specified sequence. When combined with Rollup, it becomes even more powerful. This clause helps in sorting the final result set of aggregated data, allowing for easy comparison and analysis.

For example, after using Rollup to get sales totals per product, ORDER BY can sort these subtotals in either ascending or descending order. This clarity aids users in identifying patterns or trends within the dataset more quickly.
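Because subtotal and grand-total rows carry NULLs, their position after ORDER BY depends on how the database sorts NULLs: SQL Server and MySQL put them first in ascending order, while PostgreSQL and Oracle put them last. Sorting on GROUPING(column) first is a portable way to keep total rows at the bottom. SQLite has neither ROLLUP nor GROUPING(), so in the sketch below an explicit is_total flag stands in for GROUPING(product); the table and data are hypothetical.

```python
import sqlite3

# Hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("Laptop", 900), ("Phone", 400), ("Cable", 25)],
)

# Emulates: SELECT product, SUM(amount) AS total FROM sales
#           GROUP BY ROLLUP(product)
#           ORDER BY GROUPING(product), total DESC
query = """
SELECT product, 0 AS is_total, SUM(amount) AS total
FROM sales GROUP BY product
UNION ALL
SELECT NULL, 1, SUM(amount) FROM sales
ORDER BY is_total, total DESC
"""
rows = conn.execute(query).fetchall()
for product, is_total, total in rows:
    print("TOTAL" if is_total else product, total)
```

The detail rows come back largest first (Laptop, Phone, Cable), and the total row stays at the bottom regardless of how the database orders NULLs.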

It is important to remember that logical sorting enhances the overall understanding of data, making it a key part of data analysis tasks.

Incorporating ORDER BY effectively ensures that the result set is not only complete but also arranged in a way that enhances interpretation and presentation.

Rollup in Business Intelligence Reporting

SQL rollups are essential in business intelligence for simplifying data and presenting clear insights. They’re used to create structured reports, analyze sales trends, and streamline inventory management by facilitating aggregate data analysis.

Constructing Sales Reports

In crafting sales reports, SQL rollups help summarize data by various dimensions, such as year, category, and region. This technique allows organizations to assess trends efficiently.

For example, a rollup can show sales by year, breaking down numbers into more detailed views, like sales by quarter or month, providing a clear timeline of performance. This enables businesses to evaluate seasonal trends and allocate resources effectively.

Additionally, analyzing sales by category can identify which products or brands are driving growth. It can highlight the success of specific marketing campaigns or the performance of various departments.

Rollups allow a detailed comparison of these dimensions, contributing to strategic decision-making by focusing on what’s most important.

Finally, using rollup in sales reports aids in constructing comprehensive dashboards that reflect key business insights, offering a bird’s-eye view while retaining the ability to drill down into specifics.

Rollup and Inventory Analysis

Rollup usage is significant in inventory management as well. It aggregates data across product lines, helping optimize stock levels. By summarizing data on stock counts by category or brand, managers can make informed decisions about restocking and discontinuation.

For example, understanding inventory levels across multiple locations can prevent stockouts and reduce excessive stock, saving costs.

Departments responsible for managing inventory can use rollups to analyze patterns, such as which items frequently run out or those with surplus stock. This is crucial for meeting demand without overstocking, which ties up capital.

Additionally, rollups can assist in forecasting future inventory requirements by analyzing past sales patterns and inventory turnover rates. They enable more precise predictions about which products need more attention in terms of supply chain and logistical planning.

Data Analysis with SQL Rollup

SQL Rollup is an advanced feature that enhances data analysis by allowing efficient aggregation across multiple levels of a hierarchy. It streamlines the reporting process and makes it easier to draw detailed insights from the data.

Multi-level Analyses

The rollup operator is integral for performing multi-level analyses in SQL queries. It functions by creating a series of subtotals that lead to a grand total, helping to break down complex data into more understandable parts.

For instance, in sales data, it can offer aggregated totals by product, month, and year. This technique saves time by eliminating the need for multiple queries for summary results, as seen in projects such as the SQL Pizza case study.

Using aggregate functions like SUM or AVG in ROLLUP operations helps generate different levels of aggregation. These functions allow users to gain valuable insights without extensive manual calculations.

The ROLLUP feature benefits businesses by offering summarized views that support informed decision-making.

Data Insight and Reporting Efficiency

The ROLLUP operator improves the reporting process by providing hierarchical grouping and easy-to-read insights. It efficiently handles large datasets by automatically grouping and summarizing data, which simplifies complex analyses.

For example, it can condense multiple sales metrics into summary tables, enhancing reporting efficiency.

By using the ROLLUP feature, businesses can not only save time but also improve accuracy. It removes the need for repetitive coding, making reports more efficient and insightful.

This structured approach allows analysts to focus on interpreting data trends rather than spending excessive time on data preparation.

Frequently Asked Questions

The ROLLUP operation in SQL enables efficient data analysis by creating hierarchical summaries. It serves different purposes than the CUBE operation and is useful in situations where simple aggregation is needed over specific dimensions.

How does the ROLLUP operation function within GROUP BY in SQL?

The ROLLUP operation works as an extension of the GROUP BY clause in SQL. It allows for aggregated results to be calculated across multiple levels of a dimension hierarchy. By adding ROLLUP to GROUP BY, SQL creates subtotal and grand total summaries for the specified columns.

Can you provide an example of using ROLLUP in SQL?

Consider a sales database for an electronics store. Using ROLLUP, you can generate a report that includes sums of sales for each product category and a grand total.

For example, SELECT Category, SUM(Sales) FROM SalesData GROUP BY ROLLUP(Category) produces a total for each category plus a single grand total row, in which Category is NULL.

What is the difference between ROLLUP and CUBE operations in SQL?

While ROLLUP generates subtotal rows moving upwards in a hierarchy, CUBE provides a broader analysis. CUBE calculates all possible combinations of aggregations based on the given columns, effectively creating a multi-dimensional summary. This results in more detailed and varied grouping compared to ROLLUP.

What are the primary purposes of using a ROLLUP in SQL queries?

ROLLUP is primarily used for generating hierarchical data summaries. It helps in creating reports that include intermediate totals and a grand total, making it simpler to understand aggregated data.

This feature is essential for producing business reports and financial summaries where clarity and detail are necessary.

In what scenarios is it preferable to use ROLLUP over CUBE in SQL?

ROLLUP is preferable in scenarios where a straightforward hierarchy or a step-by-step summarization is needed. It is particularly useful when dealing with reports that require fewer aggregate calculations, such as sales by month followed by a yearly total, without needing all possible group combinations like CUBE.

How does the ROLLUP operation impact the result set in a SQL GROUP BY clause?

Using the ROLLUP operation, the result set from a GROUP BY clause includes additional rows for subtotal and total summaries. These rows contain aggregated data that are not available in a standard GROUP BY query.

This simplifies data analysis by providing clear insights at different levels of aggregation.