Understanding Legends in Matplotlib

Legends play a crucial role in data visualization by providing clarity and context.

In Matplotlib, understanding how to effectively style and use legends enhances the visual representation of data, ensuring that users can easily interpret complex plots.

The Role of Legends in Data Visualization

Legends are essential tools in data visualization. They help to identify different data series or categories in a chart.

By using clear labels and symbols, legends make charts more accessible, allowing viewers to quickly understand the presented information.

In Matplotlib, calling legend() builds a legend automatically from every plot element that has a label, and nearly every aspect of it can then be customized. By identifying the data categories, the legend makes complex plots easier to read.

For instance, custom labels or an adjusted position help deliver the intended message through visual cues.

When working with multiple data series, organizing a clear and concise legend is vital. This ensures the audience grasps data comparisons without confusion. Legends not only enhance aesthetics but also boost the data’s interpretative value.

Basic Components of Matplotlib Legends

In Matplotlib, a legend is built from a few components that together identify each data series: labels, handles, and a location. Each serves a specific purpose.

Labels describe data sets, while handles display symbols or lines associated with each label.

Matplotlib legends can be positioned using the loc keyword to control where the legend appears on the plot.

The legend location can also be adjusted manually to fit a specific area of the chart, and the bbox_to_anchor parameter allows precise placement.

Other style adjustments include changing font size, color, and frame visibility to suit specific presentation needs. Understanding these components helps create a neat and informative legend, enhancing the overall data visualization experience.
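A minimal sketch of these components, assuming sine and cosine sample data (the Agg backend is selected only so it runs without a display): get_legend_handles_labels() returns the handles and labels Matplotlib would use, and legend() combines them with a location and a few style options.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(0, 2 * np.pi, 50)
fig, ax = plt.subplots()
ax.plot(x, np.sin(x), label="sin(x)")  # the label becomes the legend text
ax.plot(x, np.cos(x), label="cos(x)")

# Handles are the artists drawn as legend symbols (here Line2D objects);
# labels are the strings shown next to them.
handles, labels = ax.get_legend_handles_labels()

# loc names the legend corner to anchor; bbox_to_anchor gives the anchor point
# in axes coordinates. fontsize and frameon are simple style adjustments.
leg = ax.legend(handles, labels, loc="upper right", bbox_to_anchor=(1.0, 1.0),
                fontsize="small", frameon=True)
```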

Setting Up Your Environment

To start using Matplotlib for creating legends and customizing plots, one must prepare their Python environment. This involves installing the necessary libraries and importing them correctly to ensure smooth execution of code.

Installing Matplotlib and NumPy

To create plots and add custom elements using Matplotlib, it’s crucial to have Python installed. Once Python is available, install both Matplotlib and NumPy, as they work together for numerical data handling and visualization tasks.

Use the Python package manager, pip, to install these libraries:

pip install matplotlib numpy

This command will download and install the latest versions of Matplotlib and NumPy. Keeping these libraries updated ensures access to their newest features and improvements.

Check the installation by using:

import matplotlib
import numpy
print(matplotlib.__version__)
print(numpy.__version__)

These commands will print the current library versions if installed correctly. This setup forms the basis for creating plots and configuring various elements, including custom legends.

Importing Necessary Libraries

Begin script development by importing Matplotlib and NumPy at the start of any Python file. Importing these libraries allows direct access to their functions and modules.

The following code snippet shows how to import them:

import matplotlib.pyplot as plt
import numpy as np

Using these aliases, plt for Matplotlib’s pyplot module and np for NumPy, helps in writing concise and readable code. The pyplot module is particularly important because it provides the functions for creating plots, adding labels, and customizing legends.

Subsequently, developers can utilize the imported libraries to plot data, manage array operations with NumPy, and enhance visualization with Matplotlib’s styling options.

Creating a Simple Plot

Matplotlib is a powerful tool for creating simple plots with clear labels and aesthetically pleasing designs. Understanding how to effectively plot data and adjust plot aesthetics can greatly enhance the presentation of information in visual form.

Plotting Data with Labels

To create a basic plot, begin by importing necessary libraries such as matplotlib.pyplot and numpy. Generate data using numpy functions, like numpy.linspace for evenly spaced values or numpy.random for random data.

Use plt.plot() to graph these values, specifying the x and y coordinates.

Labels are essential for clarity. Apply the plt.xlabel() and plt.ylabel() functions to add labels to the axes.

For a descriptive plot title, use plt.title(). When a plot contains multiple data series, pass a label argument to each plt.plot() call and then call plt.legend(); the legend pairs each label with its line or marker, making the plot easier to interpret.
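The steps above can be sketched as follows, assuming sine and cosine curves as sample data and the non-interactive Agg backend; in an interactive session, plt.show() would display the figure instead.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; call plt.show() interactively instead
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(0, 10, 100)  # 100 evenly spaced values from 0 to 10

plt.figure()
plt.plot(x, np.sin(x), label="sin(x)")  # label= is what plt.legend() displays
plt.plot(x, np.cos(x), label="cos(x)")
plt.xlabel("x")
plt.ylabel("value")
plt.title("Sine and cosine")
leg = plt.legend()
```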

Adjusting Plot Aesthetics

Enhancing the visual appeal of Matplotlib plots involves customizing colors, line styles, and markers.

Use parameters within plt.plot(), such as color='r' or linestyle='--', to specify color and style preferences. The plt.grid() function adds a grid, which makes values easier to read off the chart.

The figure size (in inches) and resolution can be set with plt.figure(figsize=(width, height), dpi=dots_per_inch).

Additionally, plt.tight_layout() adjusts the padding around subplots so that titles, axis labels, and tick labels fit within the figure without overlapping.

Focus on these formatting tools to ensure the plot is not only informative but also visually engaging, conveying information clearly and attractively.
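A short sketch combining these settings; the sample data and the specific values (a red dashed line, a 6 by 4 inch figure at 100 dpi) are illustrative assumptions.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig = plt.figure(figsize=(6, 4), dpi=100)  # 6 x 4 inches at 100 dots per inch
x = np.linspace(0, 10, 50)
plt.plot(x, np.sin(x), color="r", linestyle="--", marker="o", markevery=5,
         label="sin(x)")      # red dashed line with a circle on every 5th point
plt.grid(True, alpha=0.3)      # light grid behind the data
plt.legend()
plt.tight_layout()             # adjust padding so labels are not clipped
```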

Customizing Legend Appearance

When creating a plot in Matplotlib, customizing the legend is crucial for effective data visualization. This includes modifying labels and titles, adjusting font properties, and changing colors and background.

Modifying Legend Labels and Titles

Adjusting the labels and titles in a legend helps clarify the data being displayed.

Users can set legend labels directly by passing a list of strings to the legend() method; the labels are assigned to the plotted elements in the order they were drawn. This makes it possible to specify what each element in the plot represents.

Adding a title to the legend provides context and improves readability. The title parameter in the legend() function allows users to set a descriptive title. This feature is helpful when multiple datasets are being compared.

Custom labels and titles play a significant role in improving the plot’s communicative power by making it easier for the viewer to understand the data relationships.
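As an illustration, assuming two unlabeled sample lines, the sketch below supplies the labels as a list and adds a legend title; the label and title strings are hypothetical.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig, ax = plt.subplots()
x = np.arange(5)
ax.plot(x, x, marker="o")       # no label= on either line ...
ax.plot(x, x ** 2, marker="s")

# ... so the labels are given to legend() in plotting order, plus a title
leg = ax.legend(["linear", "quadratic"], title="Growth model")
```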

Altering Legend Font Properties

Altering font properties in the legend can greatly improve its readability and integration into the overall plot theme.

Users can change the font size using the fontsize parameter within the legend() method. Setting fontsize to ‘small’, ‘medium’, or ‘large’ helps match the rest of the plot’s appearance.

Changing the font style is also possible: passing a dictionary such as prop={'weight': 'bold', 'style': 'italic'} to legend() makes the text bold or italic, so the legend can stand out or blend in as needed.

For further customization, the FontProperties class can be imported from matplotlib.font_manager and passed as prop, allowing users to specify font family, size, weight, and style together so the text complements the plot design.
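Both approaches are sketched below on two side-by-side axes, assuming simple sample lines; the specific font family, size, weight, and style are illustrative choices.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
from matplotlib.font_manager import FontProperties

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(8, 3))
for ax in (ax1, ax2):
    ax.plot([0, 1], [0, 1], label="baseline")
    ax.plot([0, 1], [1, 0], label="inverse")

# Simple case: fontsize accepts a number of points or a named size
leg1 = ax1.legend(fontsize="small")

# Finer control: a FontProperties object bundles family, size, weight and style
font = FontProperties(family="serif", size=9, weight="bold", style="italic")
leg2 = ax2.legend(prop=font)
```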

Changing Legend Colors and Background

Legend entries automatically reuse the color, line style, and marker of the plot elements they represent. This consistency is crucial for intuitive data interpretation.

The edgecolor and facecolor parameters of legend() set the legend’s border and background colors.

For transparency effects, the framealpha parameter lets users make the legend background see-through, which can be useful in dense plots.

By altering the colors and background, the legend can be both aesthetically pleasing and functional, enhancing the overall visual comprehension of the plot. To learn more, examples from Python Graph Gallery provide practical applications of these techniques.
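A minimal sketch of these parameters, assuming two sample lines; the color names and alpha value are arbitrary choices. leg.get_frame() returns the patch drawn behind the entries, which is where the colors end up.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig, ax = plt.subplots()
x = np.linspace(0, 1, 20)
ax.plot(x, x, label="data")
ax.plot(x, 1 - x, label="mirror")

leg = ax.legend(facecolor="lightyellow",  # background color
                edgecolor="navy",         # border color
                framealpha=0.6)           # 0 = fully transparent, 1 = opaque
frame = leg.get_frame()  # the patch drawn behind the legend entries
```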

Enhancing Legend Functionality

Understanding how to enhance the functionality of legends in Matplotlib can greatly improve the clarity of a plot. This involves using legend handlers to manage how legend entries appear, and incorporating more than one legend in a plot when needed.

Utilizing Legend Handlers

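Legend handlers in Matplotlib control what is drawn for each legend entry. Custom handlers, such as the classes in matplotlib.legend_handler, are passed to legend() through the handler_map argument, which maps a plot element (or element type) to the handler that should draw it; Legend.get_legend_handler_map() returns the mapping in effect.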

By attaching custom handlers, users can ensure that each legend entry best represents its corresponding data series. This is particularly useful for complex plots where standard legend representations might not suffice.

For example, users looking to customize the appearance of scatter plots can modify how each legend entry is depicted.

By utilizing legend handlers, specific details, such as marker size or line style, can be more accurately reflected. This fine-tuning helps in conveying the right message or key insights from the visualized data.
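A sketch in the spirit of Matplotlib’s legend guide, assuming sample data: a HandlerLine2D from matplotlib.legend_handler is registered for one specific line through handler_map so its entry shows three marker points, while the other entry keeps the default handler.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np
from matplotlib.legend_handler import HandlerLine2D

fig, ax = plt.subplots()
x = np.linspace(0, 10, 30)
(line_a,) = ax.plot(x, np.sin(x), marker="o", label="sampled")
(line_b,) = ax.plot(x, np.cos(x), label="smooth")

# Only line_a gets the custom handler; other entries use the defaults
leg = ax.legend(handler_map={line_a: HandlerLine2D(numpoints=3)})
```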

Incorporating Multiple Legends

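Sometimes, a single legend doesn’t adequately describe all elements within a plot. In such cases, multiple legends can be shown, but simply calling legend() again replaces the existing legend on those axes; the first legend must be kept explicitly, for example with ax.add_artist(), before the next legend() call.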

For instance, when a plot involves various types of data, like lines and points, distinct legends can highlight each type effectively. This separation enhances readability by clearly distinguishing different data groups.

Creating multiple legends therefore involves building the first legend from a subset of handles, adding it to the axes as an artist, and then calling legend() again for the remaining elements. Each legend can focus on specific plot elements to keep the communication clear and concise.

By tailoring multiple legends, the viewer gains a better understanding of the diverse aspects of the data presented.
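A minimal sketch of two legends on one axes, assuming sample sine and cosine lines plus a hypothetical scatter of samples; ax.add_artist() keeps the first legend when the second legend() call would otherwise replace it.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig, ax = plt.subplots()
x = np.linspace(0, 10, 50)
(l1,) = ax.plot(x, np.sin(x), label="sin")
(l2,) = ax.plot(x, np.cos(x), label="cos")
s1 = ax.scatter(x[::5], np.sin(x[::5]), marker="o", color="k", label="samples")

# First legend describes the lines only
first = ax.legend(handles=[l1, l2], loc="upper right", title="Curves")
ax.add_artist(first)  # keep it; the next legend() call replaces ax's legend

# Second legend describes the scatter points
second = ax.legend(handles=[s1], loc="lower left", title="Points")
```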

Positioning Legends

Positioning the legend within a Matplotlib plot is crucial for clarity and readability. Common placement options are available, but manual adjustments can be achieved through advanced techniques. Properly positioning legends helps in interpreting data accurately without cluttering the visual space.

Standard Legend Positions

In Matplotlib, users can easily place legends using the loc parameter of the legend() function. This parameter accepts location strings such as “upper right”, “lower left”, “center”, or “best”.

These strings provide an intuitive way to set the legend’s position relative to the axes. “Upper right” is a common choice; the default for axes legends, “best”, picks the location that overlaps the plotted data the least.

Here’s a simple usage example:

ax.legend(loc='upper right') positions the legend in the upper right corner.

Using these codes, users can control the primary location without manually specifying coordinates. This method is straightforward and effective for many plots, ensuring that the legend does not interfere with the data visualization.

Advanced Placement with bbox_to_anchor

For precise control over legend placement, the bbox_to_anchor parameter can be used. This method allows the user to specify an exact position, which is particularly helpful when standard positions aren’t suitable.

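By providing a tuple such as (1.05, 1), users set the anchor point in axes coordinates, where (0, 0) is the lower-left corner of the axes and (1, 1) the upper-right, so an x value above 1 lies outside the right edge. The loc parameter then determines which corner of the legend is placed at that point.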

Example usage:

ax.legend(bbox_to_anchor=(1.05, 1), loc='upper left') places the legend’s upper-left corner just to the right of the axes, level with their top edge.

This technique is useful for complex layouts where space is limited or for positioning the legend outside of the plotting area completely. Using bbox_to_anchor, users can avoid overlapping elements, making the plot cleaner and more legible.
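A sketch of the outside-the-axes placement, assuming three sample curves; fig.subplots_adjust(right=0.7) is one assumed way to leave room on the right (saving with bbox_inches='tight' is another). The figure is drawn once so the positions can be inspected.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig, ax = plt.subplots(figsize=(6, 4))
x = np.linspace(0, 10, 50)
for k in range(1, 4):
    ax.plot(x, np.sin(k * x), label=f"sin({k}x)")

# (1.05, 1) is in axes coordinates: right of the right edge, at the top.
# loc="upper left" puts the legend's upper-left corner on that point.
leg = ax.legend(bbox_to_anchor=(1.05, 1), loc="upper left")
fig.subplots_adjust(right=0.7)  # shrink the axes to make room for the legend
fig.canvas.draw()               # render once so the layout is computed
```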

Styling Legends with RC Parameters

RC (runtime configuration) parameters in Matplotlib allow for comprehensive customization of plot elements, including legend styling. This section explores how to leverage rcParams to enhance legend appearance by adjusting properties like font size, edgecolor, and more.

Fundamentals of rc Configuration

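RC parameters are essential for customizing Matplotlib plots. Their defaults are read from matplotlibrc files, and they can be changed at runtime through matplotlib.rcParams or temporarily with plt.rc_context(), enabling global adjustments.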

Users can control properties such as font size, line width, and edgecolor. These settings allow for broad customization while maintaining consistency across plots.

When it comes to legends, specific rc settings like legend.fontsize and legend.edgecolor directly influence their appearance.

Adjusting these values tailors the visual aspects of legends, ensuring they fit the desired aesthetic and functional requirements.
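As a sketch, plt.rc_context() applies rc settings only within a with-block, which is convenient for trying legend.fontsize and legend.edgecolor without changing global defaults; the values 8 and black are arbitrary.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt

# Inside the with-block only, legends default to 8-point text and a black border
with plt.rc_context({"legend.fontsize": 8, "legend.edgecolor": "black"}):
    fig, ax = plt.subplots()
    ax.plot([0, 1], [0, 1], label="inside the context")
    leg = ax.legend()

# After the block, the previous rcParams are restored automatically
```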

Applying Styles to Legends

Enhancing legend style involves using various rc parameters.

Options like legend.shadow and legend.fancybox add a drop shadow and rounded box corners, which help the legend stand out from the data.

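Matplotlib has no dedicated rc keys for bold or italic legend text; weight and style are set per legend through the prop argument, or globally with font.weight and font.style, which affect all text in the figure.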

The legend.fontsize setting controls the default size of legend text, and legend.title_fontsize does the same for the legend title.

By modifying these parameters, it’s possible to create distinct and visually appealing legends that integrate seamlessly into the plot design.

Experimenting with various rc settings offers a flexible approach to legend customization, aligning them with both aesthetic goals and interpretative clarity.
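A sketch of setting legend styles globally through plt.rcParams, assuming the changes should apply to every legend created afterwards; the same keys could instead go in a matplotlibrc file or style sheet. matplotlib.rc_file_defaults() restores the settings loaded at startup.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt

# Global change: affects every legend created afterwards in this session
plt.rcParams.update({
    "legend.shadow": True,             # drop shadow behind the legend box
    "legend.fancybox": True,           # rounded corners (already the default)
    "legend.title_fontsize": "large",  # titles larger than the entry text
})

fig, ax = plt.subplots()
ax.plot([0, 1], [0, 1], label="first")
ax.plot([0, 1], [1, 0], label="second")
leg = ax.legend(title="Series")

matplotlib.rc_file_defaults()  # restore the rc settings loaded at startup
```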

Users can refer to Matplotlib’s legend guide for further exploration and examples.

Legend Customization Techniques

Customizing legends in Matplotlib involves adjusting elements like shadows, transparency, and spacing.

These techniques enhance the clarity and visual appeal of plots, providing a more professional presentation.

Adding Shadows and Borders

Adding shadows to legends can make them pop on the plot.

In Matplotlib, the shadow parameter in the legend() function adds a shadow effect. This effect helps the legend stand out, especially in dense graphs.

Setting fancybox=True rounds the box corners, giving a softer appearance. The edgecolor parameter changes the border color, which can add contrast or match the plot’s color scheme. These options help the legend remain visually distinct from the other graph elements.
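The same effects can be requested per legend; this sketch assumes two sample lines and uses dimgray as an arbitrary border color.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig, ax = plt.subplots()
x = np.linspace(0, 10, 100)
ax.plot(x, np.sin(x), label="sin(x)")
ax.plot(x, np.cos(x), label="cos(x)")

leg = ax.legend(shadow=True,         # drop shadow behind the box
                fancybox=True,       # rounded corners
                edgecolor="dimgray") # border color
```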

Setting Legend Transparency with framealpha

Adjusting the transparency of a legend is useful for better integrating it into a plot.

The framealpha parameter in Matplotlib controls this level of transparency.

By setting framealpha to values between 0 and 1, users can tweak the legend’s visibility.

A lower setting allows the plot elements behind the legend to remain visible, which is beneficial when space is tight.

A semi-transparent legend can sit on top of dense data without completely hiding it, giving the graph a more refined look.

Controlling Spacing with borderaxespad

Spacing around legends ensures that they don’t overlap with data points or other plot elements.

The borderaxespad parameter handles this spacing by setting the padding between the legend and the axes border, measured in multiples of the legend font size.

By increasing the borderaxespad value, users can create more space around the legend, keeping graphs tidy and straightforward to interpret.

Proper spacing enhances readability, ensuring that the legend items are easily distinguishable from the plot itself, maintaining clarity and effectiveness in data communication.
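To see the effect, this sketch places the same legend in the upper left of two axes with a small and a large borderaxespad (values chosen arbitrarily) and measures the gap to the axes edge after drawing; gap_from_left is a hypothetical helper.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt

fig, (ax_tight, ax_loose) = plt.subplots(1, 2, figsize=(8, 3))
for ax in (ax_tight, ax_loose):
    ax.plot([0, 1], [0, 1], label="line")

# borderaxespad is in units of the legend font size
leg_tight = ax_tight.legend(loc="upper left", borderaxespad=0.1)
leg_loose = ax_loose.legend(loc="upper left", borderaxespad=2.0)
fig.canvas.draw()  # render once so legend positions are computed


def gap_from_left(ax, leg):
    """Horizontal distance in pixels between the axes' left edge and the legend."""
    return leg.get_window_extent().x0 - ax.get_window_extent().x0
```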

Organizing Plot Elements

Organizing plot elements is crucial for clear and informative visualizations.

Effective arrangement of axes and subplots can enhance the overall understanding of complex data.

Proper coordination of legends with subplots improves readability and aids in data interpretation.

Working with Axes and Subplots

Axes and subplots are fundamental to organizing data visually.

In Matplotlib, an Axes is the region in which data is plotted, for example as a line or bar chart. Subplots are multiple Axes arranged within a single figure, so each subplot can show a different data set or comparison.

Using plt.subplots() allows users to create a grid of plots within a single figure, which makes it easy to compare different data sets side by side.

It’s crucial to manage the x-axis and y-axis to avoid clutter. By setting axis limits and labels, the data becomes more accessible. Adding titles and annotations further clarifies the context of each plot.

Coordinating Legends with Subplots

Coordinating legends with subplots ensures that each graph is clearly understood.

Legends provide context, helping viewers distinguish between various data lines or bars.

Matplotlib offers flexibility to position legends to avoid overlapping with the main plot elements.

Custom positioning allows placing legends outside the graph, maintaining a clean look. Legends can be fine-tuned for font size and color, aligning with the subplot’s visual style. This is detailed in resources like this guide on customizing legends.

When dealing with multiple subplots, ensuring legends are consistent across plots is vital. This coordination enhances readability and ensures the viewer can easily compare data across different graphs.
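One common way to keep legends consistent across subplots is a single figure-level legend. The sketch below assumes both subplots plot the same two series with the same colors, takes the handles from the first axes, and passes them to fig.legend(); the phase values and layout numbers are illustrative.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig, axes = plt.subplots(1, 2, figsize=(8, 3), sharey=True)
x = np.linspace(0, 10, 50)
for ax, phase in zip(axes, (0.0, 1.0)):
    ax.plot(x, np.sin(x + phase), color="C0", label="sin")
    ax.plot(x, np.cos(x + phase), color="C1", label="cos")
    ax.set_title(f"phase = {phase}")

# Both subplots share styling, so the first axes' handles describe both
handles, labels = axes[0].get_legend_handles_labels()
leg = fig.legend(handles, labels, loc="upper center", ncol=2)
fig.subplots_adjust(top=0.75)  # leave room above the subplots for the legend
```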

Legend Options and Functionality

In Matplotlib, legends offer various customization options to enhance plot readability. This section delves into managing the number of legend columns and tailoring the appearance with different modes.

Determining Number of Columns with ncol

When plotting with Matplotlib, managing the legend’s layout is crucial for clarity.

The ncol parameter defines how many columns the legend has (Matplotlib 3.6 and later also accept the equivalent name ncols).

A single column might suffice for a few items, but for multiple items, spreading them across two or more columns can make the plot look neat.

Setting ncol=2 in the legend() function arranges the entries in two columns, filled column by column. Because a multi-column legend is wider but shorter, this is handy for fitting a legend into a wide, shallow space, such as the area above or below the plot. Adjusting the number of columns gives a clear, compact layout.
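A sketch with four hypothetical series labeled A to D, assuming sample linear data: with ncol=2, the first two entries fill the first column and the last two the second, which the text positions measured after drawing confirm.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig, ax = plt.subplots()
x = np.linspace(0, 1, 10)
for k, name in enumerate("ABCD", start=1):
    ax.plot(x, k * x, label=name)

# Entries fill column by column: A and B in column one, C and D in column two
leg = ax.legend(ncol=2, loc="upper left")
fig.canvas.draw()  # render once so text positions are computed
boxes = [t.get_window_extent() for t in leg.get_texts()]
```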

Customizing Legend Appearance with mode

The mode parameter offers further customization by controlling how the legend expands.

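When mode is set to “expand”, the legend is stretched horizontally to fill the axes area, or the bounding box given by bbox_to_anchor when one is specified, and its entries are spread across that width.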

This can combine well with the ncol setting to spread out entries elegantly.

Using mode='expand', especially with multiple columns, aids in utilizing plot space effectively to convey information without clutter. Adjusting the mode is a practical approach to achieve a polished appearance that improves readability and presentation.
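This sketch follows the pattern shown in Matplotlib’s legend guide: a four-element bbox_to_anchor (x0, y0, width, height), in axes coordinates, describes a box spanning the full axes width just above the plot, and mode='expand' stretches a two-column legend across it. The sample data and the subplots_adjust value are assumptions.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig, ax = plt.subplots(figsize=(6, 4))
x = np.linspace(0, 10, 50)
for k in range(1, 5):
    ax.plot(x, k * np.sin(x), label=f"amplitude {k}")

# Box from x=0 to x=1 (full axes width), starting just above the top edge
leg = ax.legend(bbox_to_anchor=(0, 1.02, 1, 0.102), loc="lower left",
                ncol=2, mode="expand", borderaxespad=0)
fig.subplots_adjust(top=0.8)  # leave room above the axes for the legend
fig.canvas.draw()             # render once so the expanded width is applied
```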

Interactivity with Legends

In Matplotlib, legends can be made interactive, allowing users to engage with the plot dynamically. Such interactivity can involve responding to user actions like clicks, enhancing the usability of the graph.

Responding to User Events

Interactive legends can respond to user events, like mouse clicks, to offer a more engaging experience.

This feature is handy for plots with multiple data sets, where clarity is crucial.

When a user clicks on a legend item, the corresponding plot elements can be shown or hidden. This makes data exploration easier, because viewers can focus on specific parts of the graph without re-plotting it.

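Matplotlib supports this through pick events: legend entries can be made pickable and connected to a callback with fig.canvas.mpl_connect('pick_event', ...), which works in interactive backends. mpld3, a separate library that renders Matplotlib figures as D3.js web visualizations, offers similar features in the browser, and functionality such as hovering over points for additional data further enriches the interactive experience, making graphs more user-friendly and informative.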
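A sketch of click-to-toggle legends using Matplotlib’s pick events, modeled on the legend-picking example in the Matplotlib gallery; the helper names toggle and on_pick are illustrative. Because the Agg backend is selected so the code runs headless, no clicks arrive here; dropping the matplotlib.use() line and calling plt.show() with an interactive backend enables clicking.

```python
import matplotlib
matplotlib.use("Agg")  # headless here; remove and call plt.show() to click
import matplotlib.pyplot as plt
import numpy as np

fig, ax = plt.subplots()
x = np.linspace(0, 10, 100)
lines = [ax.plot(x, np.sin(k * x), label=f"sin({k}x)")[0] for k in (1, 2)]
leg = ax.legend()

# Map each legend line to the plot line it represents and make it pickable
legend_to_plot = {}
for legend_line, plot_line in zip(leg.get_lines(), lines):
    legend_line.set_picker(True)
    legend_line.set_pickradius(5)  # tolerance in points around the entry
    legend_to_plot[legend_line] = plot_line


def toggle(legend_line):
    """Show or hide the plot line behind a legend entry; dim hidden entries."""
    plot_line = legend_to_plot.get(legend_line)
    if plot_line is None:  # the pick came from some other artist
        return
    visible = not plot_line.get_visible()
    plot_line.set_visible(visible)
    legend_line.set_alpha(1.0 if visible else 0.2)
    fig.canvas.draw_idle()


def on_pick(event):
    toggle(event.artist)  # event.artist is the legend line that was clicked


cid = fig.canvas.mpl_connect("pick_event", on_pick)
```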

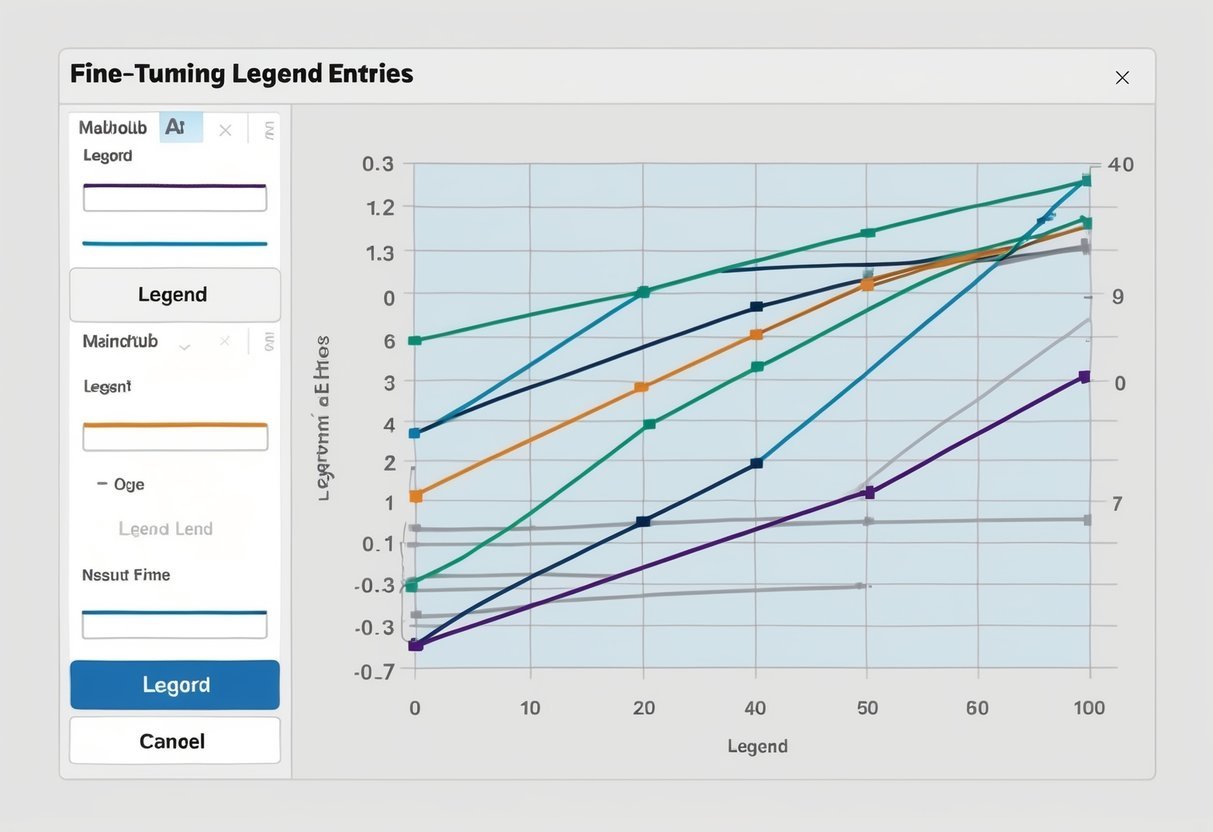

Fine-tuning Legend Entries

Fine-tuning legends in Matplotlib is essential for clear data presentation. Adjustments can be made to the order of items and the appearance of markers and lines, enhancing plot readability.

Setting the Order of Legend Entries

In Matplotlib, controlling the order of legend entries can simplify plot interpretation.

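By passing handles and labels to legend() explicitly, users decide which data series appear in the legend and in what order; ax.get_legend_handles_labels() returns the current handles and labels so they can be reordered. The set_label method customizes the label of an individual plot element, and labels that begin with an underscore are left out of the legend.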

The numpoints parameter does not affect ordering; it sets how many marker points are drawn in each line entry of the legend.

Similarly, markerfirst places each entry’s marker before (the default) or after its label text, which changes the layout of an entry but not the order of entries. When necessary, a manual legend built from explicit handles can be crafted for fine-tuned control.
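A sketch of reordering entries, assuming three sample curves and one hidden curve; the order list is a hypothetical choice. The handles and labels are fetched, permuted, and passed back to legend().

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig, ax = plt.subplots()
x = np.linspace(0, 1, 10)
ax.plot(x, x, label="linear")
ax.plot(x, x ** 2, label="quadratic")
ax.plot(x, np.sqrt(x), label="square root")
ax.plot(x, x ** 3, label="_hidden cubic")  # leading underscore: not in legend

handles, labels = ax.get_legend_handles_labels()
order = [2, 0, 1]  # hypothetical desired order: square root, linear, quadratic
leg = ax.legend([handles[i] for i in order], [labels[i] for i in order])
```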

Customizing Markers and Lines

Customizing markers and lines in legend entries is crucial for enhancing visual appeal.

Which plot items are included, and in what order, is controlled by the handles and labels passed to legend(), while each entry’s appearance is copied from the plot element it represents.

Various styles, such as dashed or solid lines, can be assigned to different data series.

Markers can be placed before or after the text with the markerfirst parameter, and markerscale resizes legend markers relative to those in the plot. The frameon option toggles the legend’s frame (its border and background) for better readability. Through these adjustments, the legend becomes not only informative but also visually coherent.
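A sketch of these entry-level options, assuming two sample series styled differently; the numbers are arbitrary. numpoints=1 shows one marker per entry, markerscale enlarges legend markers, markerfirst=False moves markers after the text, and frameon=False removes the frame.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

fig, ax = plt.subplots()
x = np.linspace(0, 10, 20)
ax.plot(x, np.sin(x), linestyle="--", marker="o", label="dashed, circles")
ax.plot(x, np.cos(x), linestyle="-", marker="s", label="solid, squares")

leg = ax.legend(numpoints=1,       # one marker per legend entry
                markerscale=1.5,   # legend markers 1.5x the plotted size
                markerfirst=False, # text first, then the marker
                frameon=False)     # no border or background
```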

Frequently Asked Questions

Matplotlib offers many styling options for customizing legends. Understanding how to adjust the appearance, position, and formatting of legends can greatly enhance the readability and professionalism of plots.

How can one customize the appearance of the legend in Matplotlib plots?

Matplotlib provides many options for customizing legend appearance.

Users can change the legend’s font size, color, and style, and can add borders or shadows. These customizations help make the information clear and match the plot’s theme.

What methods are available for positioning the legend outside a Matplotlib plot?

Legends can be positioned outside the plot using the bbox_to_anchor parameter.

This allows users to manually place the legend at specific locations, like the top-right of a figure.

Combined with bbox_to_anchor, the loc parameter selects which corner of the legend is placed at the anchor point, making it easy to achieve the desired layout.

Which parameters control the number of columns in a Matplotlib legend?

The ncol parameter in Matplotlib’s legend function allows setting the number of columns.

This is useful for arranging legend items in a more compact or spread-out manner, depending on the plot’s design and the number of entries.

How do you add and style a title for a legend in Matplotlib?

To add a title to a legend, use the title parameter within the legend function.

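The title’s size can be set with the title_fontsize parameter, and other properties, such as weight or color, can be changed on the Text object returned by the legend’s get_title() method. Styling options make the legend title prominent, enhancing plot clarity.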
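A sketch of a styled legend title, assuming two hypothetical sensor series; the size, weight, and color values are arbitrary.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend so the sketch runs without a display
import matplotlib.pyplot as plt

fig, ax = plt.subplots()
ax.plot([1, 2, 3], [2, 4, 3], marker="o", label="sensor A")
ax.plot([1, 2, 3], [1, 3, 5], marker="s", label="sensor B")

leg = ax.legend(title="Measurements", title_fontsize=12)
title = leg.get_title()          # a matplotlib.text.Text instance
title.set_fontweight("bold")
title.set_color("darkslategray")
```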

What are the options for adjusting the font size of legend text in Matplotlib?

The fontsize parameter in the legend function allows users to change the size of the legend text.

This can be a number of points or a string value like ‘small’, ‘medium’, or ‘large’, offering flexibility to fit different plot sizes and styles.

Can you provide an example of configuring the border and background of a legend in Matplotlib?

To configure a legend’s border and background, use parameters like frameon, edgecolor, and facecolor.

For example, setting edgecolor changes the border color, while facecolor adjusts the background color.

This customization adds a professional touch to the plot, ensuring the legend stands out appropriately.