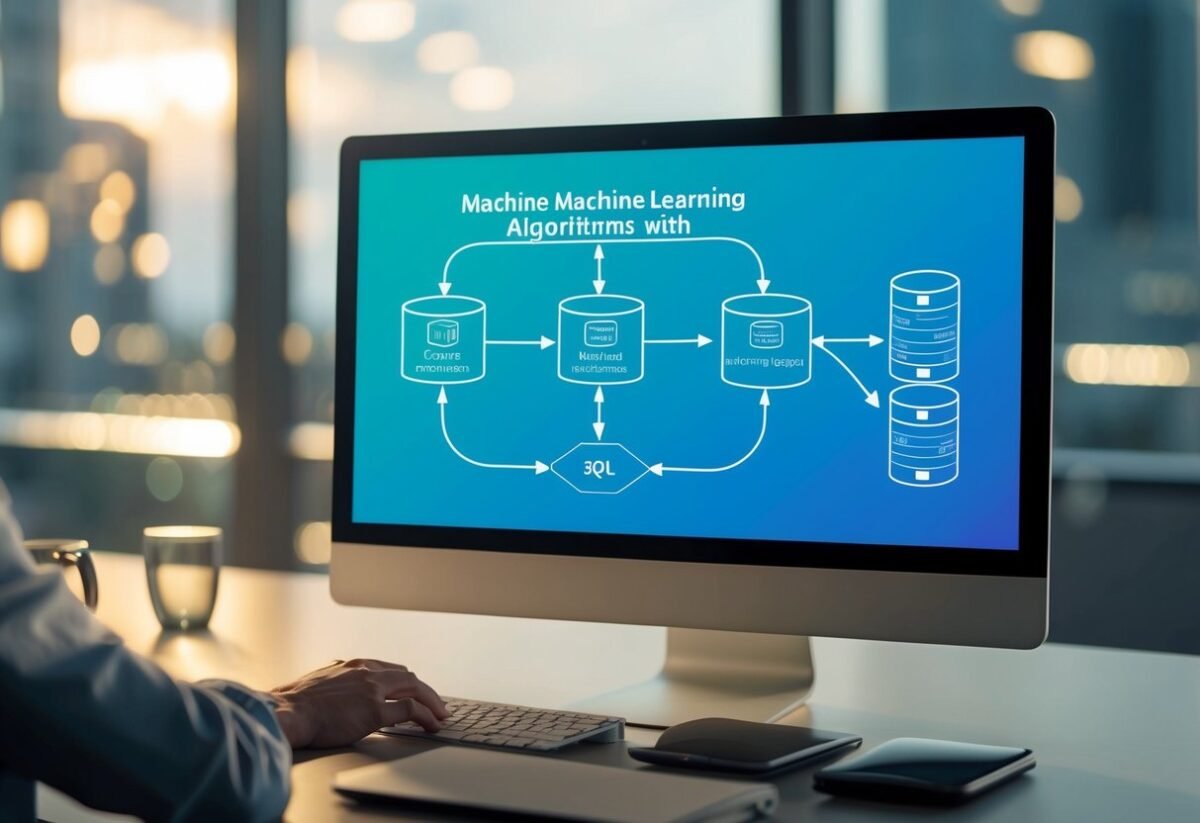

Integrating machine learning with SQL can transform how organizations handle data-driven tasks.

SQL enables seamless data extraction, while machine learning offers the capability to draw valuable insights from that data.

Combining these technologies can improve decision-making and business operations.

Companies that adopt this approach can harness the predictive power of machine learning within a familiar SQL environment.

The integration process involves setting up an environment where SQL statements and machine learning models work together.

Tools like SQL Server Machine Learning Services allow for running scripts in languages like Python and R alongside SQL data.

Organizations can utilize both open-source and proprietary packages to enhance their analytical capabilities.

Successful integration of machine learning models with SQL databases leads to efficient, scalable, and actionable data analytics solutions.

This makes it possible to leverage data effectively, reducing the time to gain actionable insights.

This streamlined approach helps companies stay competitive in an increasingly data-driven world.

Key Takeaways

- SQL and machine learning together boost data-driven insights.

- Machine learning models can be integrated into SQL services.

- Using both technologies enhances business decisions.

Understanding SQL

SQL, or Structured Query Language, is a powerful tool used to interact with relational databases.

It enables users to manage and manipulate data effectively, using commands and functions to retrieve, update, and delete data.

Fundamentals of SQL

SQL enables users to interact with data stored in relational databases with precision. It is used for defining data structures and editing database records.

The language consists of statements that can create tables, add records, and perform complex queries.

Familiarity with SQL syntax is essential, starting with core keywords such as SELECT, INSERT, and UPDATE.

Clear understanding of data types and constraints is necessary. These define the type of data that can be stored in database columns.

Constraints such as PRIMARY KEY and NOT NULL ensure data integrity.
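A minimal sketch of these fundamentals, using Python's built-in sqlite3 module as a stand-in for a production database. The customers table and its columns are hypothetical names chosen for illustration.

```python
import sqlite3

# In-memory database so the sketch leaves nothing behind.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,   -- uniquely identifies each row
        name        TEXT NOT NULL,         -- a value is required
        city        TEXT
    )
    """
)

# INSERT adds records, UPDATE edits them, SELECT reads them back.
conn.execute("INSERT INTO customers (customer_id, name, city) VALUES (1, 'Ada', 'London')")
conn.execute("INSERT INTO customers (customer_id, name, city) VALUES (2, 'Grace', NULL)")
conn.execute("UPDATE customers SET city = 'New York' WHERE customer_id = 2")
rows = conn.execute(
    "SELECT customer_id, name, city FROM customers ORDER BY customer_id"
).fetchall()

# The NOT NULL constraint rejects a row that has no name.
try:
    conn.execute("INSERT INTO customers (customer_id, name) VALUES (3, NULL)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

print(rows, rejected)
```

The same statements carry over to other relational databases with small dialect differences in data type names.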

SQL Commands and Functions

SQL commands are the backbone of database operations. They are divided into categories like Data Query Language (DQL), Data Definition Language (DDL), Data Control Language (DCL), and Data Manipulation Language (DML).

Common commands include SELECT for querying data and INSERT for adding records.

SQL functions enhance data retrieval by performing calculations and grouping data. Functions such as COUNT, SUM, and AVG assist in aggregating data.

String functions, like CONCAT and LENGTH (spelled LEN in SQL Server), help manipulate text data, while date functions handle time-based data.
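The aggregate and string functions can be tried out with a short sketch, again assuming SQLite through Python's sqlite3 module. The orders table is hypothetical, and SQLite writes concatenation with the || operator, which this example uses in place of CONCAT.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("ada", 20.0), ("ada", 40.0), ("grace", 15.0)],
)

# COUNT, SUM, and AVG collapse each customer's rows into one summary row.
summary = conn.execute(
    """
    SELECT customer, COUNT(*) AS n_orders, SUM(amount) AS total, AVG(amount) AS average
    FROM orders
    GROUP BY customer
    ORDER BY customer
    """
).fetchall()

# String functions: || concatenates text and LENGTH counts characters.
labels = conn.execute(
    """
    SELECT DISTINCT customer || '-vip' AS label, LENGTH(customer) AS name_len
    FROM orders
    ORDER BY label
    """
).fetchall()

print(summary)
print(labels)
```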

Relational Databases

Relational databases organize data into tables that relate to each other, making data organized and accessible.

These tables consist of rows and columns, where each row represents a record and each column represents a data field. The relational model promotes data integrity and minimizes redundancy.

Relational databases use keys to link tables. Primary keys uniquely identify records within a table, while foreign keys link tables.

This structure allows for complex queries involving multiple tables, enhancing data analysis capabilities.

Understanding the relational model is crucial for efficient SQL use, ensuring that databases are scalable and maintainable.
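To illustrate how keys link tables, here is a small sketch with two hypothetical tables, customers and orders, in SQLite. Note that SQLite enforces foreign keys only after the PRAGMA shown here; most server databases enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when asked
conn.executescript(
    """
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers (customer_id),
        amount      REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO orders VALUES (10, 1, 20.0), (11, 1, 40.0), (12, 2, 15.0);
    """
)

# The JOIN follows the foreign key from each order back to its customer.
totals = conn.execute(
    """
    SELECT c.name, SUM(o.amount) AS total_spent
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.customer_id
    GROUP BY c.customer_id, c.name
    ORDER BY c.name
    """
).fetchall()

# An order that points at a customer that does not exist is rejected.
try:
    conn.execute("INSERT INTO orders VALUES (13, 99, 5.0)")
    orphan_allowed = True
except sqlite3.IntegrityError:
    orphan_allowed = False

print(totals, orphan_allowed)
```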

Fundamentals of Machine Learning

Machine learning involves using algorithms to find patterns in data, enabling predictions and decisions without explicit programming. Key concepts include different algorithm types, a structured workflow, and various models to tackle tasks like regression, clustering, and classification.

Types of Machine Learning Algorithms

Machine learning can be divided into three main categories: supervised, unsupervised, and reinforcement learning.

Supervised learning involves labeled data and aims to predict outcomes like in regression and classification tasks.

Unsupervised learning works with unlabeled data, identifying patterns or groupings, such as clustering.

Reinforcement learning involves an agent learning to make decisions by receiving feedback through rewards or penalties, often used in gaming and simulations.

Selecting the right machine learning algorithm depends on the problem’s nature and data availability.

The Machine Learning Workflow

The machine learning workflow consists of several critical steps.

First, data collection gathers the raw data for the task. Then, data preprocessing ensures the information is clean and ready for analysis by handling missing values and normalizing data.

After that, selecting the appropriate machine learning algorithm takes center stage, followed by model training with a segment of the data.

The trained model is then tested with unseen data to evaluate its performance.

Model evaluation uses metrics suited to the task: accuracy, precision, or recall for classification, and error measures such as root mean squared error for regression.

Refining the model through hyperparameter tuning can enhance its accuracy before applying it to real-world scenarios.
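The workflow can be sketched end to end in plain Python. This is an illustration under simple assumptions: the data is synthetic, the model is a one-variable linear regression fitted by ordinary least squares, and the evaluation metric is root mean squared error because the task is regression. Hyperparameter tuning is left out since this model has nothing to tune.

```python
import math
import random

random.seed(0)

# 1. Collect: synthetic data standing in for rows pulled from a database.
xs = [float(i) for i in range(50)]
ys = [3.0 * x + 2.0 + random.gauss(0, 1.0) for x in xs]

# 2. Preprocess: shuffle, then hold out 20% as unseen test data.
pairs = list(zip(xs, ys))
random.shuffle(pairs)
split = int(len(pairs) * 0.8)
train, test = pairs[:split], pairs[split:]

# 3. Train: ordinary least squares for y = slope * x + intercept.
mean_x = sum(x for x, _ in train) / len(train)
mean_y = sum(y for _, y in train) / len(train)
slope = sum((x - mean_x) * (y - mean_y) for x, y in train) / sum(
    (x - mean_x) ** 2 for x, _ in train
)
intercept = mean_y - slope * mean_x

# 4. Evaluate: root mean squared error on the held-out rows.
rmse = math.sqrt(sum((slope * x + intercept - y) ** 2 for x, y in test) / len(test))
print(f"slope={slope:.2f} intercept={intercept:.2f} rmse={rmse:.2f}")
```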

Common Machine Learning Models

Common models in machine learning address various tasks.

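Regression models, such as linear regression, predict continuous outcomes based on input variables. Logistic regression, despite its name, predicts the probability of a category and is normally used for classification.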

Clustering models, such as k-means and hierarchical clustering, group data points based on similarities.

Classification models include decision trees, support vector machines, and neural networks, which assign data to distinct categories.

Each model type applies to specific use cases and comes with strengths and limitations. Understanding these models helps in choosing the right one based on the problem and dataset characteristics, leading to better analysis and predictive accuracy.

Machine Learning Tools and Packages

Machine learning tools are pivotal for analyzing large datasets and extracting valuable insights. Python and R are significant in this field, with each offering unique capabilities. Both languages provide a variety of libraries and frameworks essential for efficient machine learning.

Python in Machine Learning

Python is widely used in machine learning due to its simplicity and robust libraries. scikit-learn is a key library that implements algorithms for tasks such as classification, regression, and clustering. It is suitable for beginners and experts alike.

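TensorFlow and PyTorch are popular for deep learning tasks. PyTorch builds its computational graph dynamically as the code runs, and TensorFlow 2 executes eagerly by default while still being able to compile static graphs, which makes both flexible for research and production.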

Anaconda is frequently used as a distribution platform, simplifying package management and deployment of Python environments.

R for Data Analysis

R is a powerful tool for data analysis and statistical modeling. It’s known for its comprehensive collection of packages for data manipulation and visualization. Microsoft R Open, a Microsoft distribution of R that has since been retired, aimed to improve R’s performance and provide additional features for reproducibility.

The language offers numerous packages to support machine learning, including the popular caret package, which simplifies the process of creating predictive models.

R’s integration with SQL Server allows for seamless in-database analytics, ensuring efficient data processing.

Essential Machine Learning Libraries

A variety of libraries are essential in the machine learning landscape, facilitating diverse tasks.

Pandas is crucial for data manipulation in Python, enabling users to handle datasets of different sizes and complexities effectively.

Both TensorFlow and PyTorch are integral for developing machine learning models, supporting various layers and architectures necessary for feature extraction and prediction.

Additionally, Anaconda helps in managing libraries and dependencies, ensuring that data scientists can focus on model development without technical disruptions.

Data Preprocessing and Analysis

Effective integration of machine learning with SQL begins with a strong foundation in data preprocessing and analysis. Key elements include data cleaning techniques, feature selection and engineering, and utilizing SQL and Python for robust data analysis.

Data Cleaning Techniques

Data cleaning is crucial for reliable machine learning results. Common techniques include handling missing data, removing duplicates, and correcting inconsistencies.

Missing values can be addressed with methods like mean or median imputation, which replace each gap with the mean or median of the column. Identifying outliers is also vital, as these can distort model predictions.

SQL offers powerful commands for data filtering and cleaning operations. Functions like COALESCE allow easy handling of null values, while GROUP BY combined with HAVING COUNT(*) > 1 identifies duplicates. Where the database supports them, regular expressions can detect inconsistently formatted values, helping to produce a clean dataset ready for analysis.
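A short sketch of these cleaning steps, assuming SQLite through Python's sqlite3 module and a hypothetical readings table. It finds duplicate rows with GROUP BY, removes them with DISTINCT, and fills missing values with the column mean through COALESCE.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor TEXT, day TEXT, value REAL)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?, ?)",
    [
        ("a", "2024-01-01", 10.0),
        ("a", "2024-01-01", 10.0),  # exact duplicate
        ("a", "2024-01-02", None),  # missing value
        ("b", "2024-01-01", 30.0),
    ],
)

# GROUP BY with HAVING COUNT(*) > 1 lists rows that occur more than once.
duplicates = conn.execute(
    """
    SELECT sensor, day, value, COUNT(*) AS copies
    FROM readings
    GROUP BY sensor, day, value
    HAVING COUNT(*) > 1
    """
).fetchall()

# DISTINCT drops the duplicate row first; COALESCE then swaps each NULL
# for the column mean (AVG ignores NULLs when it computes that mean).
cleaned = conn.execute(
    """
    WITH deduped AS (SELECT DISTINCT sensor, day, value FROM readings)
    SELECT sensor, day,
           COALESCE(value, (SELECT AVG(value) FROM deduped)) AS value
    FROM deduped
    ORDER BY sensor, day
    """
).fetchall()

print(duplicates)
print(cleaned)
```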

Feature Selection and Engineering

Feature selection reduces data dimensionality, which can improve model performance and lower the risk of overfitting. Techniques such as recursive feature elimination or correlation-based selection can be used.

Feature engineering involves creating new input variables from the existing data, which can boost model accuracy. This may include techniques like scaling, normalizing, or encoding categorical data.

SQL is handy for these tasks, using CASE statements or joins for feature creation. Coupled with Python’s data libraries, such as Pandas, more complex operations, like polynomial feature creation, can be performed to enhance the dataset for machine learning purposes.
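As an illustration of feature creation in SQL, the sketch below encodes a categorical column with a CASE expression and applies min-max scaling to a numeric one. It assumes SQLite and a hypothetical customers table; the scaling would divide by zero if every row had the same monthly_spend, which a real pipeline would need to guard against.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, plan TEXT, monthly_spend REAL)"
)
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "basic", 10.0), (2, "premium", 50.0), (3, "basic", 30.0)],
)

# CASE turns the text category into a 0/1 flag; the joined one-row subquery
# supplies the minimum and maximum needed for min-max scaling.
features = conn.execute(
    """
    SELECT customer_id,
           CASE WHEN plan = 'premium' THEN 1 ELSE 0 END AS is_premium,
           (monthly_spend - s.lo) / (s.hi - s.lo) AS spend_scaled
    FROM customers
    CROSS JOIN (
        SELECT MIN(monthly_spend) AS lo, MAX(monthly_spend) AS hi FROM customers
    ) AS s
    ORDER BY customer_id
    """
).fetchall()

print(features)
```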

Data Analysis in SQL and Python

Data analysis with SQL focuses on querying databases to uncover trends and patterns. SQL queries, including aggregations with SUM, AVG, and COUNT, extract valuable insights from big data. SQL also helps structure data for further analysis.

Python, with libraries like NumPy and Pandas, complements SQL by performing intricate statistical analyses on dataframes.

The integration allows users to maintain large data sets in SQL, run complex analyses in Python, and optimize data manipulation across both platforms. This approach leverages the strengths of each tool, ensuring efficient and comprehensive data understanding for machine learning applications.
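The division of labor can be sketched as follows. To keep the example dependency-free it uses Python's standard statistics module as a stand-in for NumPy and Pandas, and a hypothetical sales table in SQLite. The 1.5 z-score cutoff is an arbitrary choice for the illustration.

```python
import sqlite3
import statistics

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("north", 100.0), ("north", 140.0), ("south", 80.0), ("south", 90.0), ("south", 400.0)],
)

# SQL handles the set-based part: grouping and aggregating inside the database.
by_region = dict(conn.execute("SELECT region, AVG(amount) FROM sales GROUP BY region"))

# Python handles statistics that the basic aggregates do not cover directly,
# here a median and a simple z-score check for unusually large values.
amounts = [row[0] for row in conn.execute("SELECT amount FROM sales ORDER BY amount")]
median = statistics.median(amounts)
mean, stdev = statistics.mean(amounts), statistics.stdev(amounts)
outliers = [a for a in amounts if abs(a - mean) / stdev > 1.5]

print(by_region, median, outliers)
```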

SQL Server Machine Learning Services

SQL Server Machine Learning Services allows users to run Python and R scripts directly in SQL Server. It integrates with SQL Server to enhance data analysis and predictive modeling.

Introduction to SQL Server ML Services

SQL Server Machine Learning Services provides an extensibility framework that supports running Python and R scripts within the database. It allows data scientists and developers to easily execute machine learning algorithms without moving data out of the database.

With these services, SQL Server combines traditional database functions with new predictive tools, enabling advanced analytics and data processing. Key components include the ability to integrate scripts and a focus on data security and performance.

Configuring ML Services in SQL Server

Configuring Machine Learning Services involves installing the necessary components during SQL Server setup.

Do not install Machine Learning Services on a domain controller, because that part of setup fails there. Also avoid installing the standalone Machine Learning Server shared feature on the same computer as a database instance, since the two installations compete for the same resources.

Administrators can manage these services with tools such as SQL Server Management Studio, and can use Resource Governor’s external resource pools to cap the CPU and memory available to external scripts.

Proper setup optimizes machine learning models’ performance and makes sure analysis tasks run smoothly.

Running Python and R Scripts

Python and R scripts are executed in SQL Server as external scripts, invoked through the sp_execute_external_script system stored procedure. The script runs in a separate runtime process next to the database engine, so complex data analysis happens close to the data.

By using external scripts, Machine Learning Services execute models efficiently. This approach is particularly useful for large datasets, as it minimizes data movement.

Supported tools include Jupyter Notebooks and SQL Server Management Studio, making script execution and development accessible to both Python and SQL developers.
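An external script call might look like the sketch below, which keeps the T-SQL batch in a Python string. The dbo.orders table and its columns are hypothetical, and the helper assumes an already open DB-API connection (for example one created with pyodbc) to an instance where Machine Learning Services is installed and external scripts are enabled.

```python
# T-SQL that SQL Server Machine Learning Services would run. By default the
# inner Python script receives the query result as the pandas DataFrame
# `InputDataSet` and returns whatever it assigns to `OutputDataSet`.
EXTERNAL_SCRIPT_SQL = """
EXEC sp_execute_external_script
    @language = N'Python',
    @script = N'
OutputDataSet = InputDataSet.assign(doubled=InputDataSet["amount"] * 2)
',
    @input_data_1 = N'SELECT order_id, amount FROM dbo.orders'
WITH RESULT SETS ((order_id INT, amount FLOAT, doubled FLOAT));
"""


def run_external_script(connection):
    """Execute the batch on an open DB-API connection and return all rows."""
    cursor = connection.cursor()
    cursor.execute(EXTERNAL_SCRIPT_SQL)
    return cursor.fetchall()
```

The same batch can be pasted directly into SQL Server Management Studio, which is often the quickest way to check that the runtime is working.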

Training Machine Learning Models

SQL can play a crucial role in training machine learning models by managing and retrieving large datasets needed for model development. Key processes involve preparing the data, splitting it into training and testing sets, and using SQL commands to evaluate model performance.

Model Training and Evaluation

Model training fits a predictive model to a prepared dataset. Data is usually divided with a train-test split into two parts: training data and test data. The training portion is used to build the model, while the test data assesses its performance.
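One simple way to make such a split in SQL is to assign rows by the remainder of their key, as in this sketch with a hypothetical samples table in SQLite. It assumes the id values carry no relationship to the label; where that is doubtful, splitting on a hash of the key is a common alternative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE samples (id INTEGER PRIMARY KEY, feature REAL, label INTEGER)")
conn.executemany(
    "INSERT INTO samples (id, feature, label) VALUES (?, ?, ?)",
    [(i, i * 0.5, i % 2) for i in range(1, 101)],
)

# Every fifth id goes to the test set, so the split is 80/20 and repeatable.
train_rows = conn.execute(
    "SELECT id, feature, label FROM samples WHERE id % 5 <> 0"
).fetchall()
test_rows = conn.execute(
    "SELECT id, feature, label FROM samples WHERE id % 5 = 0"
).fetchall()

print(len(train_rows), len(test_rows))
```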

Evaluation metrics such as accuracy, precision, and recall help determine how well the model forecasts outcomes.

Once predictions are stored alongside the actual outcomes, SQL queries can compute these metrics, giving a precise view of model effectiveness and showing where the model needs refinement.
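As a sketch of computing metrics in SQL, assume a hypothetical predictions table with one row per test example, holding the actual and predicted class as 0 or 1. Conditional aggregation then yields accuracy, precision, and recall in a single query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE predictions (actual INTEGER, predicted INTEGER)")
conn.executemany(
    "INSERT INTO predictions VALUES (?, ?)",
    # 3 true positives, 1 false positive, 1 false negative, 5 true negatives
    [(1, 1)] * 3 + [(0, 1)] * 1 + [(1, 0)] * 1 + [(0, 0)] * 5,
)

accuracy, precision, recall = conn.execute(
    """
    SELECT
        AVG(CASE WHEN actual = predicted THEN 1.0 ELSE 0.0 END),
        SUM(CASE WHEN actual = 1 AND predicted = 1 THEN 1.0 ELSE 0.0 END)
            / SUM(CASE WHEN predicted = 1 THEN 1.0 ELSE 0.0 END),
        SUM(CASE WHEN actual = 1 AND predicted = 1 THEN 1.0 ELSE 0.0 END)
            / SUM(CASE WHEN actual = 1 THEN 1.0 ELSE 0.0 END)
    FROM predictions
    """
).fetchone()

print(accuracy, precision, recall)
```

With the counts in the comment, accuracy is 8/10 and both precision and recall are 3/4.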

Machine Learning Techniques in SQL

SQL facilitates integrating machine learning techniques like regression models directly within databases.

A common choice is a logistic regression model when the outcome to predict is categorical.

SQL Server’s ability to execute R and Python scripts enables these analyses within the database engine.

Additionally, PostgreSQL extensions such as PostgresML allow machine learning algorithms to be run through queries.

Users can leverage SQL to run predictive models without leaving the database, which streamlines the process and reduces overhead. This direct interaction ensures efficient model application and management, becoming indispensable for data-driven businesses.
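To make the logistic regression idea concrete, here is a dependency-free sketch that pulls training rows from a hypothetical visits table in SQLite and fits the model with batch gradient descent. It illustrates the technique only; inside SQL Server or PostgresML a library implementation would normally be used, and the learning rate and iteration count here are arbitrary choices.

```python
import math
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE visits (hours REAL, converted INTEGER)")
conn.executemany(
    "INSERT INTO visits VALUES (?, ?)",
    [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 1), (2.5, 0), (3.0, 1), (3.5, 1), (4.0, 1)],
)
rows = conn.execute("SELECT hours, converted FROM visits").fetchall()


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Batch gradient descent on the log-loss for p = sigmoid(w * x + b).
w, b, rate = 0.0, 0.0, 0.5
for _ in range(5000):
    grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in rows) / len(rows)
    grad_b = sum((sigmoid(w * x + b) - y) for x, y in rows) / len(rows)
    w -= rate * grad_w
    b -= rate * grad_b


def predict(hours):
    """Estimated probability that a visit of this length converts."""
    return sigmoid(w * hours + b)


print(round(predict(0.5), 3), round(predict(4.0), 3))
```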

Deploying Machine Learning Models

Deploying machine learning models involves ensuring they work seamlessly within the production environment. This requires attention to integration with database engines and enabling real-time predictions.

Integration with Database Engine

To deploy machine learning models effectively, it’s essential to ensure seamless integration with the database engine. This involves configuring the data flow between the machine learning model and the database.

Many use SQL databases for their robust data storage and querying capabilities. Tools like MLflow can facilitate saving and deploying models in such environments.

Configuration plays a critical role. The database must efficiently handle model inputs and outputs. For models trained using platforms like PostgresML, deploying becomes part of querying, ensuring users can leverage SQL for executing predictive tasks.

This setup must be scalable, accommodating data growth without compromising performance.
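One common deployment pattern is to store the serialized model in a database table so that scoring code can load it next to the data. The sketch below assumes SQLite, a hypothetical models table, and a dictionary of coefficients standing in for a trained model; it uses Python's pickle module rather than MLflow or PostgresML.

```python
import pickle
import sqlite3

# A stand-in "model": the coefficients of a fitted line y = slope * x + intercept.
model = {"slope": 3.0, "intercept": 2.0}

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE models (name TEXT PRIMARY KEY, payload BLOB NOT NULL)")
conn.execute("INSERT INTO models VALUES (?, ?)", ("line_v1", pickle.dumps(model)))

# Later, possibly in another process: load the bytes back and score a new input.
(payload,) = conn.execute(
    "SELECT payload FROM models WHERE name = ?", ("line_v1",)
).fetchone()
loaded = pickle.loads(payload)  # only unpickle data from a trusted source
prediction = loaded["slope"] * 4.0 + loaded["intercept"]

print(prediction)
```

Keying the table by model name makes it straightforward to keep several versions side by side and switch between them with a query.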

Real-Time Predictions

Real-time predictions require the model to process data as it streams through the system. This is crucial for applications that depend on up-to-the-moment insights, such as recommendation systems or monitoring tools.

The deployed model must be capable of handling requests swiftly to maintain service performance.

In such environments, scalability is essential. Models served for online prediction, for example on Google Cloud, need to be optimized to handle fluctuating loads.

Proper configuration keeps responses fast under large data volumes, so the system remains responsive and reliable.

Predictive Analytics and Business Applications

Predictive analytics uses data, statistical algorithms, and machine learning to identify future outcomes based on historical data. It is powerful in various business applications, including identifying customer churn, analyzing customer sentiment, and creating recommendation systems.

Customer Churn Prediction

Predictive analytics helps businesses anticipate customer churn by analyzing purchasing patterns, engagement levels, and other behaviors. Companies can develop tailored strategies by understanding the warning signs that might lead a customer to leave.

For instance, a spike in customer complaints could signal dissatisfaction. By addressing these issues proactively, businesses can improve retention rates.

Insights from predictive models also aid customer segmentation. This allows for personalized marketing efforts and better resource allocation. Incorporating models such as logistic regression or decision trees can enhance the accuracy of these predictions.

Sentiment Analysis

Sentiment analysis interprets and classifies emotions expressed in text data. By using predictive analytics and machine learning, businesses can extract opinions from customer feedback, social media, and surveys. This helps organizations grasp how customers feel about their products or services.

Techniques like natural language processing (NLP) play a crucial role. Businesses can detect sentiment trends and respond swiftly to customer needs.

For example, a surge in negative sentiment on a social media post can trigger an immediate response from the customer service team to prevent reputational damage.
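A toy sketch of the idea, assuming feedback text stored in a hypothetical feedback table in SQLite. The word lists are invented for illustration and are far cruder than a trained NLP model, but they show how rows read from the database can be scored and labeled.

```python
import sqlite3

# Toy lexicon for illustration only; a real system would use a trained NLP model.
POSITIVE = {"great", "love", "fast"}
NEGATIVE = {"broken", "slow", "refund"}

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE feedback (id INTEGER PRIMARY KEY, body TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO feedback (body) VALUES (?)",
    [
        ("Love it, great and fast delivery",),
        ("Arrived broken, I want a refund",),
        ("It is a phone",),
    ],
)


def score(text):
    """Positive words minus negative words, after stripping basic punctuation."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


labels = {}
for row_id, body in conn.execute("SELECT id, body FROM feedback ORDER BY id"):
    s = score(body)
    labels[row_id] = "positive" if s > 0 else "negative" if s < 0 else "neutral"

print(labels)
```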

Recommendation Systems

Recommendation systems use predictive analytics to offer personalized product or service suggestions to customers. By analyzing user data such as past purchases and browsing behavior, businesses can predict what customers might be interested in next.

These systems are widely used in online platforms like streaming services and e-commerce sites. Collaborative filtering and content-based filtering are common techniques.

Recommendations not only enhance the user experience by making relevant suggestions but also drive sales by increasing customer engagement.
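A very simple form of item-based collaborative filtering can be written as a self-join: count how many customers who bought one product also bought each other product. The sketch assumes SQLite and a hypothetical purchases table; production systems usually add weighting and normalization on top of raw counts.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE purchases (customer_id INTEGER, product TEXT)")
conn.executemany(
    "INSERT INTO purchases VALUES (?, ?)",
    [
        (1, "laptop"), (1, "mouse"),
        (2, "laptop"), (2, "mouse"), (2, "bag"),
        (3, "laptop"),
        (4, "mouse"),
    ],
)


def also_bought(product, limit=3):
    """Products most often bought by customers who also bought `product`."""
    return conn.execute(
        """
        SELECT other.product, COUNT(DISTINCT other.customer_id) AS shared_buyers
        FROM purchases AS seed
        JOIN purchases AS other
          ON other.customer_id = seed.customer_id
         AND other.product <> seed.product
        WHERE seed.product = ?
        GROUP BY other.product
        ORDER BY shared_buyers DESC, other.product
        LIMIT ?
        """,
        (product, limit),
    ).fetchall()


print(also_bought("laptop"))
```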

Advanced Topics in Machine Learning

Machine learning has expanded its scope beyond basic algorithms. It encompasses deep learning applications, natural language processing interlinked with SQL, and ethical considerations of machine learning. Each area plays a crucial role in advancing technology while maintaining ethical standards.

Deep Learning Applications

Deep learning is a subfield of machine learning that uses neural networks to process large datasets. These networks consist of layers that transform input data into meaningful outputs.

A common use is in image recognition, where deep learning models identify patterns and objects in images with high accuracy. GPUs and TPUs often enhance the speed and efficiency of training these models.

Deep learning’s flexibility allows it to adapt to various data types, making it indispensable in fields like healthcare and autonomous vehicles.

Natural Language Processing and SQL

Natural language processing (NLP) enables machines to interpret human language, and it can be applied to text that is stored in and retrieved from SQL databases. By integrating machine learning with SQL, organizations can automate tasks like sentiment analysis, chatbots, and voice recognition.

SQL’s ability to query and manage structured data complements NLP’s focus on unstructured text, providing a powerful tool for data analysis. This partnership enhances data-driven decision-making by allowing machines to extract insights from textual data stored in SQL databases.

Ethical Considerations of ML

As machine learning advances, ethical considerations become critical. Issues like bias and privacy risks are significant concerns.

Algorithms must be designed to minimize bias, ensuring equal treatment for all users. Privacy laws demand that data used in training machine learning models be handled responsibly.

Researchers and developers must adhere to ethical standards, fostering trust in AI technologies. Transparency in algorithm design and decision-making processes can mitigate risks, building public confidence in machine learning applications.

Development Best Practices

Effective development practices ensure seamless integration of machine learning with SQL, enhancing both maintainability and performance. It’s crucial to focus on code and query quality while optimizing for better speed and resource usage.

Maintaining Code and Query Quality

Maintaining high-quality code and queries is essential for reliable machine learning processes. Developers should use SQL Server Management Studio or Azure Data Studio for an organized development environment.

Ensuring external scripts are enabled allows the use of languages like Python for complex calculations, adding flexibility.

Consistent code format and clear commenting can prevent errors. Implementing version control helps track changes and manage collaboration efficiently. Structuring T-SQL consistently, for example by wrapping repeated logic in stored procedures, can also enhance readability and maintainability.

Regular reviews and refactoring help identify and correct inefficient parts of the code, promoting stability.

Performance Optimization

Optimizing performance is key for efficient machine learning tasks. Use indexing effectively to speed up data retrieval.

Azure Data Studio provides insights on query performance that can help identify bottlenecks.

Batch processing can minimize resource usage, especially when handling large data sets. Enabling external scripts allows integration with Python packages, which handle heavy computations in a separate runtime process rather than in the query engine itself.

Keeping queries as specific as possible reduces data overhead and improves speed.

Regular performance monitoring ensures queries run optimally, allowing for timely adjustments.
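The effect of an index can be observed by comparing query plans before and after creating it. This sketch assumes SQLite, whose EXPLAIN QUERY PLAN output differs in wording from the execution plans shown by SQL Server tools, and uses a hypothetical orders table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

# A specific query: one column, one customer.
QUERY = "SELECT amount FROM orders WHERE customer_id = 42"


def plan(sql):
    """Return the human-readable detail column of SQLite's query plan."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


plan_before = plan(QUERY)  # without an index the whole table is scanned
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = plan(QUERY)   # with the index only matching rows are visited

print(plan_before)
print(plan_after)
```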

Installation and Setup

For efficient integration of machine learning with SQL Server, start by installing SQL Server with Machine Learning Services. Ensure you have a suitable version, such as SQL Server 2022, which can run R and Python scripts once their runtimes are installed. Check compatibility and system requirements before beginning the setup.

Step-by-step installation:

- Begin your SQL Server setup and choose the Machine Learning Services feature.

- Select the languages you want to enable, like Python.

To configure Python, you may need additional packages. Microsoft’s Python packages add functionality: revoscalepy for scalable computations and microsoftml for machine learning tasks.

During setup, verify essential components. These might include the Database Engine and Machine Learning Services.

After setup, enable script execution by running sp_configure 'external scripts enabled', 1 followed by RECONFIGURE; external scripts will not run until this option is set.

For those setting up without internet access, an offline installation method is available. This requires pre-downloaded files for installing runtimes. Refer to the offline install guide for specific steps.

After installation, restart your SQL Server instance to apply the changes. This step is needed before machine learning scripts can run within the server environment.

This setup allows SQL Server to execute R and Python scripts, enabling advanced data analysis capabilities directly within the database.

Frequently Asked Questions

Integrating machine learning with SQL involves combining relational database capabilities with predictive analytics. This can offer robust tools for data analysis and real-time decision-making.

How can machine learning models be integrated with SQL databases?

Machine learning models can be integrated with SQL databases by using stored procedures to call machine learning algorithms. Tools like Python or R can be used to connect to SQL databases, allowing for seamless interaction between data storage and machine learning processing.

What are examples of implementing machine learning within SQL Server?

SQL Server provides features like SQL Server Machine Learning Services, which allow models written in Python or R to run within the server. This setup lets users perform complex data analysis and predictions directly within the database environment.

In what ways is SQL critical for developing machine learning applications?

SQL is important for managing the large datasets needed for machine learning. It efficiently handles data extraction, transformation, and loading (ETL) processes, which are essential for preparing and maintaining datasets for training machine learning models.

How to connect a machine learning model with an SQL database for real-time predictions?

To connect a model with an SQL database for real-time predictions, machine learning models can be deployed as web services. These services are then called through their APIs, either by the application that reads from the database or, where the database platform supports outbound calls, from SQL itself, so that predictions are available alongside the data in real time.

What are the steps to deploy a Python-based machine learning model in SQL environments?

Deploying a Python-based model in SQL involves training the model using Python libraries and then integrating it with SQL Server Machine Learning Services. This allows for running the model’s predictions through SQL queries, leveraging the server’s computational power.

Can SQL be utilized effectively for AI applications, and how?

Yes, SQL can be effectively used for AI applications by serving as a backend for data storage and retrieval.

SQL’s ability to handle complex queries and large datasets makes it a powerful tool in the preprocessing and serving phases of AI applications.