Understanding Tree Data Structures in Python

This section explores how tree data structures are organized in Python, breaking down key components and terminology.

Trees allow for efficient data organization and retrieval, crucial for various applications.

Definition of Tree Structures

A tree is a data structure that models hierarchical relationships. It consists of a set of nodes connected by edges, where each edge links a parent node to one of its children.

Unlike linear data structures like arrays, trees are non-linear, making them ideal for representing hierarchical data like family trees or organization charts. Each tree has a single root node from which all other nodes descend. This root establishes the base of the hierarchy, with each element connected in a parent-child relationship.

Components of a Tree: Nodes and Edges

In a tree, nodes are the fundamental components. They store data and can also link to other nodes.

Edges are the connections between nodes, representing the relationship. Each node in the tree may have zero or more child nodes. If a node has no child nodes, it is called a leaf node. The topmost node is often referred to as the root node, serving as the starting point of the structure. Internal nodes are those with at least one child node.

Tree Terminology: Root, Parent, Child, Leaf, and Subtree

The root node is the top node, where every tree begins. A node that has one or more nodes directly below it is the parent of those nodes, and they are its child nodes. If a node does not have children, it is a leaf node.

Nodes with at least one child are known as internal nodes. A subtree consists of any node together with all its descendants, forming a smaller tree within the larger structure. Understanding these terms is vital for implementing a tree in Python effectively.

Types of Trees and Their Characteristics

Various tree structures are used in programming to organize data efficiently. Some of the most common types include binary trees, ternary trees, and n-ary trees, each with distinct features and uses.

Binary Trees and Their Properties

A binary tree is a structure where each node has at most two children, named left and right. This restriction keeps the structure simple to implement and reason about. Level n of a binary tree can hold up to 2^n nodes, with levels numbered from zero at the root.
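The level capacity can be checked with a little arithmetic. The sketch below is only an illustration; the function names are made up for this example and are not part of any library.

```python
def max_nodes_at_level(level: int) -> int:
    """Maximum number of nodes on one level (the root is level 0)."""
    return 2 ** level


def max_nodes_in_tree(height: int) -> int:
    """Maximum total nodes when the deepest level is `height`."""
    # 2^0 + 2^1 + ... + 2^height sums to 2^(height + 1) - 1
    return 2 ** (height + 1) - 1


# Capacity of levels 0 through 3
level_caps = [max_nodes_at_level(n) for n in range(4)]
```

Under these definitions, a binary tree whose deepest level is 3 can hold at most 15 nodes.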

A special type, the complete binary tree, ensures all levels are completely filled except possibly the last, which is filled from left to right.

Binary trees help in simple and fast data retrieval. A common variant is the binary search tree (BST), where every value in a node’s left subtree is less than the node’s value, and every value in its right subtree is greater. This ordering lets a search discard one subtree at each step, and an in-order traversal returns the values in sorted order.
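One way to make the ordering rule concrete is a small validity check. This is a sketch under assumptions: the `Node` constructor here accepts optional `left` and `right` arguments for brevity, and `is_bst` is a hypothetical helper name. Duplicates are treated as invalid.

```python
class Node:
    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left
        self.right = right


def is_bst(node, low=None, high=None):
    """Return True if every value in this subtree lies strictly between low and high."""
    if node is None:
        return True
    if low is not None and node.value <= low:
        return False
    if high is not None and node.value >= high:
        return False
    # Values on the left must stay below node.value, values on the right above it.
    return is_bst(node.left, low, node.value) and is_bst(node.right, node.value, high)


valid = Node(8, Node(3, Node(1), Node(6)), Node(10))
# 9 is greater than its parent 3, but it sits in the left subtree of 8,
# so the tree as a whole breaks the ordering rule.
invalid = Node(8, Node(3, Node(1), Node(9)), Node(10))
```

The second tree shows why comparing each node only with its direct parent is not enough.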

Variations of Binary Trees

Binary search trees are a key variation, ensuring that nodes follow specific ordering rules suitable for searching tasks.

Another type is the AVL tree, which maintains balance through rotations, enhancing the performance of operations by preventing skewed structures.

The red-black tree is another balanced binary tree form that uses color properties to maintain balance during insertions and deletions. Red-black trees ensure the longest path is no more than twice as long as the shortest. This characteristic makes them ideal for applications requiring frequent insertions and deletions, such as in databases or memory management.

Ternary Trees and n-ary Trees

Ternary trees extend binary trees by allowing up to three children per node. This structure is useful in cases requiring more complex hierarchical data representation, such as multi-way tries.

n-ary trees generalize this concept further by permitting each node to have up to n children. They are particularly effective in scenarios requiring wide branching, such as representing file systems or organizational structures. Because the number of children per node is not fixed at two or three, the same structure adapts to many different applications.

Implementing Tree Nodes and Classes in Python

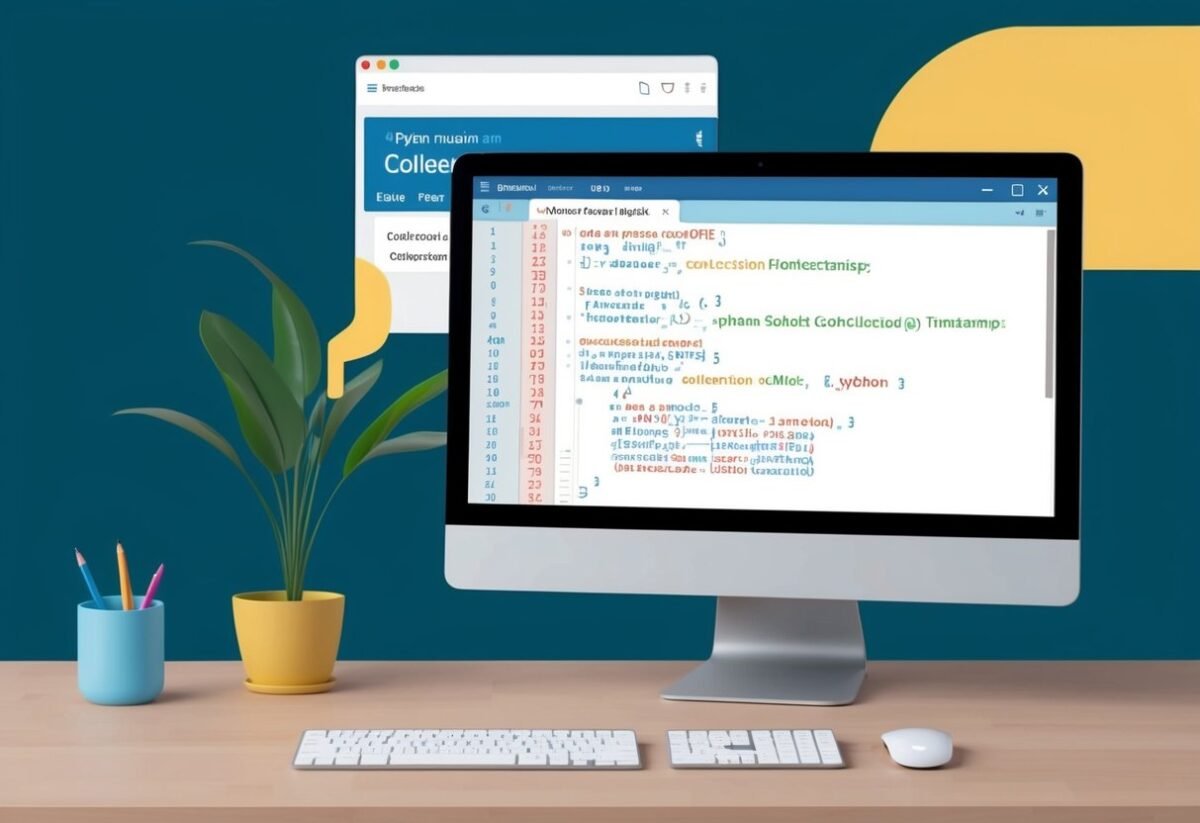

Tree representation in Python involves creating nodes and classes that can hold data and relationships within a tree structure. This section covers how to design a basic node class, implement a tree node for representation, and explain the constructor’s role in setting up node instances.

Designing a Node Class

Creating a node class is fundamental when implementing tree structures in Python. A node typically consists of two main parts: data and links to other nodes (children).

In Python, a node class often starts with defining attributes for storing data and child pointers. For example, a simple node can have attributes like value for the node’s data and pointers such as left and right for binary trees.

Here’s a simple illustration of a node class:

    class Node:
        def __init__(self, value):
            self.value = value
            self.left = None
            self.right = None

This structure is flexible for binary trees, where each node can connect to two children, left and right.
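A short usage sketch may help here. It repeats the node class so that it runs on its own, and `count_nodes` is a helper added purely for illustration.

```python
class Node:
    def __init__(self, value):
        self.value = value
        self.left = None
        self.right = None


def count_nodes(node):
    """Count the nodes in the subtree rooted at `node`."""
    if node is None:
        return 0
    return 1 + count_nodes(node.left) + count_nodes(node.right)


# Build a small tree by assigning child references directly.
root = Node(10)
root.left = Node(5)
root.right = Node(15)
root.left.left = Node(2)

total = count_nodes(root)
```

Nothing here enforces an ordering between values; the class only stores the links.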

Creating a TreeNode Class for Tree Representation

The TreeNode class represents a node within the tree and is essential for organizing the tree structure. This class can include methods for operations like adding children, searching, or traversing the tree.

Each TreeNode holds data and usually tracks its children using lists or direct references. In practice, this allows for building complex trees needed in applications like decision trees or hierarchical data representation.

    class TreeNode:
        def __init__(self, value):
            self.value = value
            self.children = []

        def add_child(self, node):
            self.children.append(node)

This design supports trees of any size and shape by enabling dynamic addition and management of child nodes.
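As a usage sketch, the class can model a small organization chart. The class is repeated so the example is self-contained; `collect_values` and the job titles are invented for illustration.

```python
class TreeNode:
    def __init__(self, value):
        self.value = value
        self.children = []

    def add_child(self, node):
        self.children.append(node)


def collect_values(node):
    """Return values with each node listed before its children."""
    values = [node.value]
    for child in node.children:
        values.extend(collect_values(child))
    return values


root = TreeNode("CEO")
cto = TreeNode("CTO")
cfo = TreeNode("CFO")
root.add_child(cto)
root.add_child(cfo)
cto.add_child(TreeNode("Engineer"))

names = collect_values(root)
```

Children are visited in the order they were added, because a list preserves insertion order.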

The Role of Constructor in Node Instantiation

The constructor in a node or a tree node class plays a critical role in initializing the object’s properties. It sets up initial values and ensures that each node is ready for use within the tree structure.

In the context of node classes, constructors (__init__ methods in Python) define initial values of node attributes, like value and connections. This setup ensures that every node starts with the necessary data and empty links, ready to receive connections or data alteration.

The constructor provides the flexibility to assign initial values and configure nodes as they are instantiated, making it a cornerstone in Python’s tree data structure implementation.

Constructing Trees with Python Data Types

Constructing tree structures in Python often involves using built-in data types to create flexible and efficient representations. Lists and sets can be utilized to structure data and ensure uniqueness, respectively, providing distinct advantages in tree implementations.

Utilizing Lists for Tree Implementation

Lists are a fundamental data structure in Python, making them ideal for representing hierarchical tree structures. Each node of a tree can contain a list that represents its children, allowing for dynamic and flexible growth of the tree.

One common method involves creating a node class, where each instance has a list attribute to hold references to child nodes. This approach offers simplicity and efficiency, as lists in Python are capable of dynamically resizing, making it easy to add or remove nodes as necessary.

Moreover, lists allow easy traversal of tree nodes using loops or recursion, essential for tasks like searching or modifying the tree.
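A tree can also be sketched with nothing but nested lists. The layout below, where each node is a two-item list of `[value, children]`, is one possible convention chosen for this example, not a standard.

```python
# Each node is a two-item list: [value, list_of_child_nodes].
tree = ["root", [
    ["a", [["a1", []], ["a2", []]]],
    ["b", []],
]]


def count_leaves(node):
    """Count nodes that have an empty child list."""
    _value, children = node
    if not children:
        return 1
    return sum(count_leaves(child) for child in children)


def add_child(node, value):
    """Append a new childless node under `node` and return it."""
    child = [value, []]
    node[1].append(child)
    return child


leaves_before = count_leaves(tree)   # a1, a2, b
add_child(tree, "c")
leaves_after = count_leaves(tree)    # a1, a2, b, c
```

The recursion mirrors the structure of the data: each call handles one node and delegates to its children.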

When modeling trees with lists, it’s important to manage memory carefully, especially in large trees, to prevent unnecessary data storage or loss of performance.

Practical examples and methods of implementing trees with lists can be found in resources like the Stack Overflow discussion on tree implementation.

Using Sets for Uniqueness in Trees

Sets provide another useful Python data structure for ensuring uniqueness within trees. Unlike lists, sets automatically handle duplicates, which is helpful when a tree must maintain unique elements.

When constructing trees where each node should represent a distinct element, using sets as containers is advantageous. They help in quick membership testing and can be useful in scenarios like maintaining a set of visited nodes in traversal algorithms.

A simple application might involve adding unique node identifiers to a set, enabling rapid lookup and verification of node presence. This is particularly efficient in operations where duplicates could complicate tree integrity.
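The idea can be sketched as a set kept alongside the tree. The helper name `add_unique_child` and the choice to return `None` for duplicates are assumptions made for this example.

```python
class TreeNode:
    def __init__(self, value):
        self.value = value
        self.children = []


def add_unique_child(parent, value, seen):
    """Attach a child only if its value has not been used anywhere in the tree."""
    if value in seen:          # average-case constant-time membership test
        return None
    child = TreeNode(value)
    parent.children.append(child)
    seen.add(value)
    return child


root = TreeNode("root")
seen = {"root"}

first = add_unique_child(root, "a", seen)
duplicate = add_unique_child(root, "a", seen)   # rejected: "a" already exists
```

The set holds only identifiers; the parent-child relationships still live in the node objects.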

Although sets are unordered, they complement tree representation by managing node uniqueness, which is useful in tasks that involve detecting duplicate entries.

Adding and Removing Nodes in a Tree

In Python, handling nodes is crucial for managing tree data structures. Understanding how to effectively add and remove these nodes enhances the efficiency of various operations.

Insertion of Nodes

Inserting nodes in trees involves placing new data at the correct location to maintain the tree’s order. A node is typically inserted by comparing its value with existing nodes.

For example, in a binary search tree, a new value is first compared with the root node and then moves to the left or right child, depending on the result, until it reaches an empty position. The new node is always attached as a leaf, so it starts out with no children.

Different tree types may use unique rules for insertion, so understanding the specific data structure is essential.

Deletion Mechanisms in Trees

Deleting nodes from a tree can be more complex due to the need to maintain the structure. There are generally three scenarios: when the node to be deleted is a leaf, has one child, or has two children.

If a node is a leaf, it’s simply removed. When it has one child, the child replaces the node. For nodes with two children, typically the smallest node in the right subtree or the largest in the left subtree replaces it to maintain the tree order.
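The three cases can be sketched for a binary search tree as follows. This version replaces a two-child node with the smallest value in its right subtree; using the largest value in the left subtree would work equally well. Function names are illustrative.

```python
class Node:
    def __init__(self, value):
        self.value = value
        self.left = None
        self.right = None


def insert(node, value):
    if node is None:
        return Node(value)
    if value < node.value:
        node.left = insert(node.left, value)
    elif value > node.value:
        node.right = insert(node.right, value)
    return node


def delete(node, value):
    """Remove `value` from the subtree and return the subtree's new root."""
    if node is None:
        return None
    if value < node.value:
        node.left = delete(node.left, value)
    elif value > node.value:
        node.right = delete(node.right, value)
    else:
        # Leaf or one child: hand back the other side (possibly None).
        if node.left is None:
            return node.right
        if node.right is None:
            return node.left
        # Two children: copy the smallest value from the right subtree,
        # then delete that value from the right subtree.
        successor = node.right
        while successor.left is not None:
            successor = successor.left
        node.value = successor.value
        node.right = delete(node.right, successor.value)
    return node


def in_order(node):
    if node is None:
        return []
    return in_order(node.left) + [node.value] + in_order(node.right)


root = None
for v in [50, 30, 70, 20, 40, 60, 80]:
    root = insert(root, v)

root = delete(root, 20)   # leaf
root = delete(root, 30)   # one child (40)
root = delete(root, 50)   # two children, replaced by 60
remaining = in_order(root)
```

After the three deletions the remaining values are still in sorted order, which is the property deletion has to preserve.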

Navigating Trees: Traversal Algorithms

Tree traversal is a way to visit every node in a structured order. The two major strategies are depth-first and breadth-first search. They differ in the order in which nodes are visited and in how much memory they need while running.

Depth-First Search and its Types

Depth-first search (DFS) focuses on exploring as far as possible along one branch before backtracking. It utilizes stacks, either explicitly or through recursion. There are three main types of DFS traversal: in-order, pre-order, and post-order.

- Pre-order Traversal: Visits the root, explores the left subtree, and then the right. This can be useful for creating a copy of the tree or getting a prefix expression.

- In-order Traversal: Explores the left subtree first, visits the root, then explores the right subtree. This method retrieves nodes in non-decreasing order for binary search trees.

- Post-order Traversal: Explores the left subtree, then the right subtree, and visits the root last. This can be useful for deleting a tree or getting a postfix expression.

DFS needs stack space proportional to the height of the tree rather than its total size, which makes it useful in scenarios like parsing expressions or solving puzzles like mazes. The choice between in-order, pre-order, or post-order depends on the problem’s requirements.
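The three depth-first orders differ only in where the root is visited relative to its subtrees. A minimal recursive sketch, assuming a `Node` class whose constructor takes optional `left` and `right` arguments:

```python
class Node:
    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left
        self.right = right


def pre_order(node):
    """Root, then left subtree, then right subtree."""
    if node is None:
        return []
    return [node.value] + pre_order(node.left) + pre_order(node.right)


def in_order(node):
    """Left subtree, then root, then right subtree."""
    if node is None:
        return []
    return in_order(node.left) + [node.value] + in_order(node.right)


def post_order(node):
    """Left subtree, then right subtree, then root."""
    if node is None:
        return []
    return post_order(node.left) + post_order(node.right) + [node.value]


#         4
#       /   \
#      2     6
#     / \   / \
#    1   3 5   7
root = Node(4, Node(2, Node(1), Node(3)), Node(6, Node(5), Node(7)))
```

Because this sample tree is a binary search tree, `in_order(root)` returns the values sorted. Building new lists at each call keeps the code short but is not the most efficient approach for large trees.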

Breadth-First Search Using Queues

Breadth-first search (BFS) explores all nodes at the present depth before moving on to the nodes at the next depth level. This method uses queues to keep track of tree levels.

Because BFS visits nodes in order of increasing depth, the first match it finds is the one closest to the root, which makes it effective for finding shortest paths in unweighted trees.

Each node is visited layer by layer, ensuring complete exploration of one level before proceeding.

BFS is beneficial in applications like network broadcasting or finding the shortest path in graphs. While it may require more memory than DFS, its systematic approach makes it ideal for specific types of search problems.
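A queue-based traversal can be sketched with `collections.deque` from the standard library, which supports efficient removal from the left end. The `Node` class and the `level_order` name are assumptions for this example.

```python
from collections import deque


class Node:
    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left
        self.right = right


def level_order(root):
    """Return node values level by level, left to right."""
    if root is None:
        return []
    result = []
    queue = deque([root])
    while queue:
        node = queue.popleft()          # oldest node in the queue comes out first
        result.append(node.value)
        if node.left is not None:
            queue.append(node.left)
        if node.right is not None:
            queue.append(node.right)
    return result


root = Node(4, Node(2, Node(1), Node(3)), Node(6, Node(5), Node(7)))
visited = level_order(root)
```

The queue can hold up to an entire level at once, which is where the extra memory compared with DFS comes from.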

Binary Search Trees (BST) Operations

Binary Search Trees allow efficient data operations due to their hierarchical structure.

Key operations include inserting new values and searching for existing ones, which are fundamental in managing and retrieving data.

Inserting into a BST

Inserting a value into a binary search tree involves positioning it according to the tree’s properties.

Each node has a value. Every value in a node’s left subtree is smaller than that value, and every value in its right subtree is larger.

To insert a new value, start from the root. Compare the value with the root’s value. If it’s smaller, move to the left child; if larger, move to the right child.

This process continues until an empty spot is found, and the value is inserted as a new node.

This method ensures that the BST structure is maintained, enabling efficient lookup and other operations later.
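The walk described above can be sketched iteratively. Ignoring duplicate values is an assumption made here; some implementations store them on one side or keep a count instead.

```python
class Node:
    def __init__(self, value):
        self.value = value
        self.left = None
        self.right = None


def insert(root, value):
    """Insert `value` and return the (possibly new) root."""
    new_node = Node(value)
    if root is None:
        return new_node
    current = root
    while True:
        if value < current.value:
            if current.left is None:
                current.left = new_node     # found the empty spot
                return root
            current = current.left
        elif value > current.value:
            if current.right is None:
                current.right = new_node    # found the empty spot
                return root
            current = current.right
        else:
            return root                     # duplicate: leave the tree unchanged


root = None
for v in [8, 3, 10, 1, 6, 3]:
    root = insert(root, v)
```

The loop follows a single path from the root, so the work is proportional to the height of the tree.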

Searching for a Value in a BST

Searching in a binary search tree involves traversing the tree from the root and navigating through the child nodes.

Begin by comparing the target value to the root’s value. If they match, the search is successful. If the target value is smaller, move to the left subtree. If larger, proceed to the right subtree.

Repeat this step for each subtree until the value is found or an empty subtree is reached, which means the value is not in the tree.

This process uses the ordered structure of BSTs to guide the search path efficiently, minimizing the number of comparisons needed.

Searching is typically faster in a BST compared to unsorted data structures due to its organized layout.
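A search can be sketched as a loop that moves left or right at each node. The `Node` constructor with optional children and the `search` name are assumptions for this example; the function returns the matching node, or `None` when there is no match.

```python
class Node:
    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left
        self.right = right


def search(root, target):
    """Return the node holding `target`, or None if it is absent."""
    current = root
    while current is not None:
        if target == current.value:
            return current
        # Smaller targets can only be on the left, larger ones on the right.
        current = current.left if target < current.value else current.right
    return None


root = Node(8, Node(3, Node(1), Node(6)), Node(10))
found = search(root, 6)
missing = search(root, 7)
```

Looking up 7 follows the path 8, 3, 6 and then stops at the empty right child of 6.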

Tree Performance and Optimization

When implementing tree structures like binary search trees in Python, performance and optimization play crucial roles.

Important considerations include balancing trees to ensure efficient search times and evaluating the complexity of various tree operations.

Balancing Trees for Optimal Search Times

In a binary search tree (BST), balancing is key to achieving efficient search, insert, and delete operations.

Unbalanced trees can degrade to linked lists, leading to O(n) complexity for operations. This happens, for example, when values are inserted in sorted order. A complete binary tree, in which all levels are fully filled except possibly the last, has the smallest possible height for its size, but a plain BST does not keep that shape on its own.

Self-balancing trees, like AVL and Red-Black trees, automatically adjust after each update to keep the height logarithmic in the number of nodes, so operations remain O(log n).

These trees achieve balance by rotating nodes (and, in Red-Black trees, recoloring them) on insertions and deletions, keeping the height difference within a specified range.

By maintaining balance, the performance of binary search trees remains optimized across different operations.

Complexity Analysis for Tree Operations

Analyzing the complexity of operations in binary trees helps understand performance implications.

In a balanced binary search tree, searching, inserting, and deleting all have a time complexity of O(log n). This efficiency comes from the tree’s structure: each comparison discards roughly half of the remaining nodes.

For unbalanced trees, operations can degrade to O(n) due to linear structure formation.
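The effect of insertion order on a plain, non-balancing BST can be demonstrated with a small experiment. This is a sketch: `insert`, `height`, and `build` are illustrative helpers, and height is counted in edges.

```python
class Node:
    def __init__(self, value):
        self.value = value
        self.left = None
        self.right = None


def insert(node, value):
    """Plain BST insert with no rebalancing; duplicates are ignored."""
    if node is None:
        return Node(value)
    if value < node.value:
        node.left = insert(node.left, value)
    elif value > node.value:
        node.right = insert(node.right, value)
    return node


def height(node):
    """Height in edges; an empty tree has height -1."""
    if node is None:
        return -1
    return 1 + max(height(node.left), height(node.right))


def build(values):
    root = None
    for v in values:
        root = insert(root, v)
    return root


sorted_keys = list(range(1, 16))
balanced_order = [8, 4, 12, 2, 6, 10, 14, 1, 3, 5, 7, 9, 11, 13, 15]

skewed_height = height(build(sorted_keys))       # each node has only a right child
compact_height = height(build(balanced_order))   # same keys, middle values first
```

With the same 15 keys, sorted insertion gives a chain of height 14, while inserting middle values first gives a height of 3.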

It’s important to choose appropriate tree types based on specific needs.

For example, balanced trees like AVL or Red-Black trees are preferable when consistent speed is necessary.

Python’s standard library does not include a self-balancing binary search tree, so using one means either relying on a third-party library or coding the balancing logic by hand.

Advanced Tree Concepts and Usage

In advanced tree concepts, understanding the height of a node and performing tree rotations for rebalancing is crucial. These aspects impact the efficiency of operations within a tree data structure, especially when dealing with internal and leaf nodes.

Height of a Node and Impact on Tree

The height of a node is a key aspect that affects the structure and performance of a tree data structure.

It is defined as the number of edges on the longest path from the node down to a leaf node, so a leaf has height zero. Knowing the node height helps in assessing the balance of the tree, which is important for maintaining efficiency.

In practical terms, a balanced tree ensures faster search operations. For instance, an imbalanced tree could degrade to a list, making operations slower.

Therefore, understanding the height of every node helps keep the tree balanced, optimizing tasks like insertion and search.
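Height and a simple balance measure can be sketched recursively. The `balance_factor` helper below follows the convention used for AVL trees (left height minus right height); the names and the `Node` constructor are assumptions for this example.

```python
class Node:
    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left
        self.right = right


def height(node):
    """Edges on the longest downward path; an empty subtree counts as -1."""
    if node is None:
        return -1
    return 1 + max(height(node.left), height(node.right))


def balance_factor(node):
    """Left height minus right height; values outside -1..1 signal imbalance."""
    return height(node.left) - height(node.right)


balanced = Node(2, Node(1), Node(3))
left_chain = Node(3, Node(2, Node(1)))
```

Recomputing heights from scratch on every call is fine for illustration; self-balancing implementations usually cache the height in each node instead.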

Understanding Tree Rotations and Rebalancing

Tree rotations are techniques used to restore balance in a tree after modifications like insertions or deletions.

A balanced tree provides efficient access times, typically O(log n). Rotations adjust the structure by rearranging nodes while maintaining the in-order sequence of values in a binary search tree.

There are four main types of rotations: two single rotations (left and right) and two double rotations (left-right and right-left). Each double rotation is a pair of single rotations applied in sequence.

These adjustments help maintain balanced heights across the tree, leading to optimal performance.

Implementing rotations ensures that trees remain efficient, particularly after a node change disrupts the balance. Proper balance impacts both the internal nodes and leaf nodes, ensuring the tree structure performs well.
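The two single rotations can be sketched as small pointer rearrangements. This shows only the rotation step itself, not a full AVL or Red-Black implementation, and the function names are illustrative.

```python
class Node:
    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left
        self.right = right


def rotate_left(x):
    """Lift x's right child above x and return the new subtree root."""
    y = x.right
    x.right = y.left    # y's left subtree moves under x
    y.left = x
    return y


def rotate_right(y):
    """Lift y's left child above y and return the new subtree root."""
    x = y.left
    y.left = x.right    # x's right subtree moves under y
    x.right = y
    return x


def in_order(node):
    if node is None:
        return []
    return in_order(node.left) + [node.value] + in_order(node.right)


# Right-leaning chain 1 -> 2 -> 3, fixed by one left rotation.
chain = Node(1, right=Node(2, right=Node(3)))
balanced = rotate_left(chain)

# Zigzag 3 -> 1 -> 2, fixed by a left-right double rotation.
zigzag = Node(3, left=Node(1, right=Node(2)))
zigzag.left = rotate_left(zigzag.left)
fixed = rotate_right(zigzag)
```

In both cases the result has 2 at the root with 1 and 3 as children, and the in-order sequence is unchanged.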

Visualizing Tree Structures for Better Understanding

Visualizing tree structures helps turn complex data into clear, easy-to-understand diagrams. These diagrams highlight the hierarchical nature and allow users to spot patterns and relationships within the data.

Graphical Representation Techniques

Tree structures, a type of non-linear data structure, can be visualized using various techniques.

One common approach is to use graphs to display nodes and edges. Each node represents an element, while edges show relationships between elements.

These graphs can become intricate, especially with deep hierarchies. To handle complexity, techniques like collapsible trees help manage what parts of the tree are visible.

Collapsible trees offer a dynamic way to explore the structure without overwhelming the viewer, making them essential tools for dealing with large datasets.
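The same idea can be approximated in plain text by limiting how deep a rendering goes. The sketch below is an assumption-laden illustration: `render`, its `max_depth` parameter, and the `[+]` marker for hidden children are all invented for this example.

```python
class TreeNode:
    def __init__(self, value):
        self.value = value
        self.children = []

    def add_child(self, node):
        self.children.append(node)
        return node


def render(node, max_depth=None, depth=0):
    """Return indented lines; subtrees below max_depth are collapsed."""
    line = "  " * depth + str(node.value)
    if max_depth is not None and depth >= max_depth:
        if node.children:
            line += " [+]"      # marks a node whose children are hidden
        return [line]
    lines = [line]
    for child in node.children:
        lines.extend(render(child, max_depth, depth + 1))
    return lines


root = TreeNode("root")
a = root.add_child(TreeNode("a"))
a.add_child(TreeNode("a1"))
root.add_child(TreeNode("b"))

full_view = render(root)
collapsed_view = render(root, max_depth=1)
```

Printing `"\n".join(full_view)` shows the whole hierarchy, while the collapsed view stops at the first level and flags `a` as expandable.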

Using External Libraries like anytree for Visualization

anytree is a popular Python library for building and rendering hierarchical data.

It simplifies the creation of tree representations by providing easy-to-use functions. Developers can build both simple and complex trees with minimal code.

With anytree, a tree can be rendered as indented text in the terminal or exported to Graphviz DOT format for graphical output, so the view can be adapted to fit specific needs.

This flexibility makes anytree especially useful when dealing with dynamic or evolving datasets.

Incorporating libraries like anytree not only saves development time but also enhances the clarity and functionality of tree visualizations. This ensures that users can effectively interpret and interact with their data.

Frequently Asked Questions

Implementing trees in Python involves understanding data structures like binary trees, visualization methods, and using appropriate libraries. Each of these components plays a critical role for anyone working with tree data structures in Python.

How can one create a binary tree in Python?

To create a binary tree, you can use a class to define nodes and their connections.

Each node should have a value, along with pointers to its left and right children. This setup allows for various operations like insertion and traversal.

What are the common methods for visualizing a tree structure in Python?

Tree structures can be visualized using libraries such as Matplotlib or Graphviz. These tools help display the tree in a graphical format, making it easier to understand the relationships between nodes and branches.

Which Python libraries are best suited for tree data structure operations?

Libraries like bigtree and NetworkX support operations on tree structures.

They offer functionalities for creating, modifying, and managing different types of trees, including binary and N-ary trees.

How is the TreeNode class implemented in Python?

The TreeNode class typically contains attributes for the node’s value and references to its child nodes.

With left and right references it models a binary tree, and with a list of children it models a general tree. In both cases, each node manages its own connections to other nodes within the tree.

What approaches are used to display tree structures in Python?

Various approaches such as using text-based representations or graphical output with libraries like Matplotlib can be employed.

These methods help users to visualize the tree’s structure and hierarchy more clearly, enabling easier analysis and debugging.

How do you define and manage the root node in a tree-based data structure in Python?

The root node is the topmost node in a tree and acts as the entry point for most operations.

Managing it involves initializing it properly and ensuring it links correctly to its child nodes. This setup is crucial for maintaining the integrity and efficiency of tree operations.