Understanding OOP with Python

Object-Oriented Programming (OOP) in Python organizes code by bundling properties and behaviors into objects. This helps in creating more manageable and reusable code.

It uses concepts like classes, objects, methods, attributes, inheritance, and more, allowing developers to model real-world entities and relationships.

Basic OOP Concepts

OOP revolves around four main ideas: encapsulation, inheritance, polymorphism, and abstraction.

Encapsulation hides internal states and requires all interaction to occur through defined methods.

Inheritance allows a class to inherit features from another class, enabling code reuse.

Polymorphism enables methods to do different tasks based on the objects they are acting upon. Lastly, abstraction simplifies complex realities by modeling classes based on essential properties and actions.

Python OOP Introduction

Python makes it easy to work with OOP due to its simple syntax. In Python, a class serves as a blueprint for objects, defining attributes and methods.

Objects are instances of classes, representing specific items or concepts.

Methods define behaviors, and attributes represent the state. For example, a Car class might have methods like drive and stop and attributes like color and model.
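The Car example above can be sketched as a small class. This is only an illustration: the is_moving attribute and the returned messages are assumptions added to make the state change visible.

```python
class Car:
    """A car with a color, a model, and a simple moving/stopped state."""

    def __init__(self, color, model):
        # Attributes hold the state of this particular car
        self.color = color
        self.model = model
        self.is_moving = False  # hypothetical extra attribute for the sketch

    def drive(self):
        # Methods change or report on that state
        self.is_moving = True
        return f"The {self.color} {self.model} is driving."

    def stop(self):
        self.is_moving = False
        return f"The {self.color} {self.model} has stopped."


my_car = Car("red", "hatchback")
message = my_car.drive()
```

Each Car object created this way carries its own color, model, and is_moving values.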

Python 3 Essentials

Python 3 adds several conveniences for OOP: every class implicitly inherits from object, and super() can be called without arguments, which simplifies calling methods from parent classes.

Python 3 supports both single and multiple inheritance, and its dynamic typing lets objects of different classes be used interchangeably when they provide the same methods.

With improved data handling and an emphasis on clean, readable code, Python 3 is well-equipped for designing intricate object-oriented systems.

Understanding these essentials is key to harnessing the full power of OOP in Python.

Setting Up Your Development Environment

Setting up a proper development environment is crucial for working effectively on Python projects. Understanding how to choose the right IDE or editor and manage dependencies with Anaconda can streamline your software development process.

Choosing an IDE or Editor

Selecting an integrated development environment (IDE) or text editor is a major decision for any developer. Features like syntax highlighting, code completion, and debugging tools can greatly enhance productivity.

Popular choices among Python developers include PyCharm, VS Code, and Jupyter Notebook.

PyCharm is highly regarded for its robust features tailored for Python, such as intelligent code analysis and a seamless user interface. It’s an excellent choice for complex projects that require advanced tools.

VS Code is a versatile editor, offering numerous extensions, including Python-specific ones, making it suitable for many types of projects. Its flexibility makes it favored by developers who work across different languages.

Jupyter Notebook, integrated within many scientific computing environments, is ideal for data science projects. It allows for the easy sharing of code snippets, visualizations, and markdown notes within a single document.

Anaconda Package and Dependency Management

Anaconda is a powerful tool for package and dependency management in Python development. It simplifies software installation and maintenance, which is vital when working with multiple dependencies in sophisticated Python projects.

Using Anaconda, developers can create isolated environments for different projects. This avoids conflicts between package versions and ensures projects can run independently.

This is particularly useful when managing various Python projects that require distinct library versions.

Anaconda ships with Conda, its package and environment manager. Conda lets users install packages, manage environments, and reproduce the same setup on different systems.

This can be a game-changer for developers working on projects that leverage heavy computation libraries or need specific runtime environments.

Python Projects: From Simple to Complex

Exploring different Python projects helps in understanding how to apply object-oriented programming (OOP) principles effectively. These projects progress from simple games to more complex applications, helping developers gain a solid grasp of OOP.

Building a Tic Tac Toe Game

A Tic Tac Toe game is a great starting point for practicing Python OOP concepts. It involves creating a board, defining players, and implementing the rules of the game.

Developers can create classes for the game board and players. The board class manages the grid and checks for win or draw conditions. The player class handles user input and alternates turns.
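One possible shape for the board class is sketched below. The flat nine-cell list, the method names, and the WINNING_LINES table are assumptions chosen for brevity, not the only way to model the grid.

```python
class Board:
    """A 3x3 Tic Tac Toe grid stored as a flat list of nine cells."""

    # Every row, column, and diagonal that wins the game
    WINNING_LINES = [
        (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
        (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
        (0, 4, 8), (2, 4, 6),             # diagonals
    ]

    def __init__(self):
        self.cells = [" "] * 9

    def place(self, index, mark):
        """Put a mark on an empty cell; return False if the cell is taken."""
        if self.cells[index] != " ":
            return False
        self.cells[index] = mark
        return True

    def winner(self):
        """Return "X" or "O" if a line is complete, otherwise None."""
        for a, b, c in self.WINNING_LINES:
            if self.cells[a] != " " and self.cells[a] == self.cells[b] == self.cells[c]:
                return self.cells[a]
        return None

    def is_draw(self):
        """A draw is a full board with no winner."""
        return " " not in self.cells and self.winner() is None


# Play a short game in which X completes the top row
board = Board()
for index, mark in [(0, "X"), (3, "O"), (1, "X"), (4, "O"), (2, "X")]:
    board.place(index, mark)
```

A player class would then only need to ask for a cell index and call place(), leaving all rule checks to the board.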

This project reinforces the understanding of class interactions and methods within OOP. By focusing on these components, students can improve their skills and gain confidence.

Designing a Card Game

Designing a card game in Python introduces more complexity. This project involves creating a deck of cards, shuffling, and dealing them to players.

A class can represent the deck, encapsulating methods to shuffle and draw cards. Another class for players manages their cards and actions.
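The deck and player classes described above might look like the following sketch. The Card class, the seed parameter, and the method names are illustrative assumptions; shuffling uses the standard library random module.

```python
import random


class Card:
    def __init__(self, rank, suit):
        self.rank = rank
        self.suit = suit

    def __repr__(self):
        return f"{self.rank} of {self.suit}"


class Deck:
    RANKS = ["2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K", "A"]
    SUITS = ["clubs", "diamonds", "hearts", "spades"]

    def __init__(self, seed=None):
        # A private random generator keeps shuffles reproducible when seeded
        self._rng = random.Random(seed)
        self._cards = [Card(rank, suit) for suit in self.SUITS for rank in self.RANKS]

    def __len__(self):
        return len(self._cards)

    def shuffle(self):
        self._rng.shuffle(self._cards)

    def draw(self):
        """Remove and return the top card."""
        if not self._cards:
            raise IndexError("the deck is empty")
        return self._cards.pop()


class Player:
    def __init__(self, name):
        self.name = name
        self.hand = []

    def take(self, card):
        self.hand.append(card)


# Deal a five-card hand from a shuffled deck
deck = Deck(seed=42)
deck.shuffle()
player = Player("Ada")
for _ in range(5):
    player.take(deck.draw())
```

Because the card list is kept in the underscore-prefixed _cards attribute, other code is expected to go through shuffle() and draw() rather than touching the list directly.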

Using OOP here enables a clean and organized structure, making it easier to add game rules or special cards. This project solidifies knowledge of inheritance and encapsulation in OOP, allowing students to apply these concepts effectively.

Developing a Countdown Timer

Creating a countdown timer goes beyond basic OOP by introducing time-based functions. At its core is a class that manages the timer’s state and updates.

The timer class uses Python’s built-in time module to track and display the remaining time. Methods can start, stop, and reset the timer.
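A minimal sketch of such a timer follows. It assumes time.monotonic as the clock source and accepts a replaceable clock argument so the class can be exercised without waiting; both choices, and all method names, are illustrative.

```python
import time


class CountdownTimer:
    def __init__(self, seconds, clock=time.monotonic):
        self.duration = seconds
        self._clock = clock              # injectable for testing (assumption)
        self._remaining = float(seconds)
        self._started_at = None          # None means the timer is not running

    @property
    def running(self):
        return self._started_at is not None

    def start(self):
        if not self.running:
            self._started_at = self._clock()

    def stop(self):
        """Pause the timer and remember how much time is left."""
        if self.running:
            self._remaining = self.remaining()
            self._started_at = None

    def reset(self):
        self._remaining = float(self.duration)
        self._started_at = None

    def remaining(self):
        """Seconds left, never below zero."""
        if not self.running:
            return self._remaining
        elapsed = self._clock() - self._started_at
        return max(0.0, self._remaining - elapsed)
```

A display loop could call remaining() once per second and print the result; that loop is left out here to keep the state handling in focus.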

This project requires handling state changes and ensuring the timer updates accurately, offering practical experience in managing state and interactions in OOP. Moreover, it serves as a foundation for creating more advanced time management tools.

Creating a Music Player

A music player is a complex project that shows what OOP in Python can do. It involves handling audio files, user playlists, and player controls like play, pause, and stop.

The audio player class can encapsulate these functionalities and manage audio output using libraries such as Pygame or PyDub.
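The sketch below models only the player state and playlist, with no audio output. In a real project the calls to an audio library would go inside play(), pause(), and stop(); the class and attribute names here are assumptions.

```python
class MusicPlayer:
    def __init__(self, playlist):
        self.playlist = list(playlist)
        self.index = 0
        self.state = "stopped"  # one of "stopped", "playing", "paused"

    @property
    def current_track(self):
        return self.playlist[self.index] if self.playlist else None

    def play(self):
        # An audio backend would start or resume playback here
        if self.playlist:
            self.state = "playing"

    def pause(self):
        # Pausing only makes sense while something is playing
        if self.state == "playing":
            self.state = "paused"

    def stop(self):
        self.state = "stopped"

    def next_track(self):
        # Wrap around to the first track after the last one
        if self.playlist:
            self.index = (self.index + 1) % len(self.playlist)


player = MusicPlayer(["intro.mp3", "theme.mp3"])
player.play()
player.next_track()
```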

Designing this project demands a strong grasp of OOP concepts to integrate different functionalities smoothly. It’s an excellent opportunity for learners to tackle file handling and user interface integration, making it a comprehensive project for advanced Python enthusiasts.

Structuring Classes and Objects

When building projects using Python’s object-oriented programming, effectively organizing classes and objects is essential. This process involves setting up class structures, managing attributes, and creating instances that mirror real-life entities efficiently.

Creating Classes

In Python, creating classes is a fundamental step in organizing code. A class serves as a blueprint for objects, defining their properties and behaviors.

Each class begins with the class keyword followed by its name, conventionally written in PascalCase. Inside, we use methods, like __init__(), to initialize attributes that every object should have. This setup helps in developing code that is reusable and easy to manage.

For example:

    class NewsArticle:
        def __init__(self, title, content):
            self.title = title
            self.content = content

Here, NewsArticle is a class that models a news article, providing an outline for its properties, such as title and content.

Defining Attributes and Properties

Attributes in classes are variables that hold data related to an object, while properties provide a way of controlling access to them.

Attributes are typically initialized within the __init__() method. Meanwhile, properties can include additional functionality using getter and setter methods, which manage data access and modification.

Using Python’s @property decorator, one can create computed attributes that appear as regular attributes. This technique offers more control and safety over the class’s data.

For example, a class might have a full_title property derived from its title attribute.

    class NewsArticle:
        # ...

        @property
        def full_title(self):
            return f"{self.title} - Latest News"

Properties allow objects to maintain a clean interface while encapsulating complex logic.
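To show the getter and setter side of properties, here is a sketch in which the title is validated on assignment. The validation rule (non-empty after stripping whitespace) and the _title backing attribute are assumptions made for the example.

```python
class NewsArticle:
    def __init__(self, title, content):
        self.title = title  # goes through the setter below
        self.content = content

    @property
    def title(self):
        return self._title

    @title.setter
    def title(self, value):
        # Reject empty titles before they reach the stored attribute
        value = value.strip()
        if not value:
            raise ValueError("title must not be empty")
        self._title = value

    @property
    def full_title(self):
        # Computed, read-only attribute: there is no setter for it
        return f"{self.title} - Latest News"


article = NewsArticle("  Python OOP  ", "Learning object-oriented design.")
```

Assigning to article.full_title raises AttributeError because the property defines no setter, which is one way to expose a value without allowing it to be overwritten.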

Understanding Instances

Instances are individual objects created from a class. They hold specific data and can interact with other instances by calling methods defined in their class.

Each instance operates independently and keeps its own attribute values, so changing one instance does not affect another. Instances help model real-world entities, so complex systems can be built in clear, logical steps.

Creating an instance involves calling the class as if it were a function:

article = NewsArticle("Python OOP", "Learning object-oriented design.")

Here, article is an instance of NewsArticle, embodying both title and content attributes specific to this object. Instances allow developers to organize applications into manageable, interconnected parts.

Advanced OOP Features in Python

Advanced Object-Oriented Programming (OOP) in Python introduces key concepts that enhance the design and functionality of software. These include inheritance for creating hierarchies, polymorphism for flexible code operation, and encapsulation for controlling access to data within objects.

Exploring Inheritance

Inheritance allows a class, known as a child class, to inherit attributes and methods from another class, called a parent class. This promotes code reuse and establishes a relationship between classes.

In Python, inheritance is easy to implement. By defining a parent class and having a child class inherit from it, methods and properties become accessible to the child class. This arrangement helps in creating hierarchies and streamlining code maintenance.

Inheritance also allows for method overriding, where a child class can provide its own specific implementation of a method already defined in its parent class. This is particularly useful for extending or modifying behavior without altering existing code.
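A short sketch of a parent class, a child that overrides a method, and a child that inherits everything unchanged. The class names are hypothetical; super() is used in its zero-argument Python 3 form.

```python
class Article:
    def __init__(self, title):
        self.title = title

    def summary(self):
        return f"Article: {self.title}"


class SportsArticle(Article):
    def __init__(self, title, sport):
        super().__init__(title)  # reuse the parent initializer
        self.sport = sport

    def summary(self):
        # Override: extend the parent result instead of rewriting it
        return f"{super().summary()} ({self.sport})"


class OpinionArticle(Article):
    # No overrides: behaves exactly like Article
    pass


sports = SportsArticle("Cup final", "football")
opinion = OpinionArticle("On testing")
```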

Delving into Polymorphism

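Polymorphism enables methods to perform different tasks based on the object using them. In Python, this mostly happens through method overriding and duck typing, where any object that provides the expected method can be used.

Python does not support method overloading by signature: a second definition with the same name simply replaces the first. Similar functionality is usually achieved with default parameters or variable-length arguments.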

Method overriding is a core aspect, where a child class alters an inherited method’s behavior. This promotes flexibility and allows the same method name to function differently depending on the object type.

Overriding is also the basis for interfaces in Python: an abstract base class from the abc module declares the required methods, and each subclass supplies its own implementation.

Polymorphism fosters flexibility, enabling Python programs to work seamlessly with objects of various classes as long as they follow the same interface protocols.
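The following sketch shows two unrelated classes that share a fetch() method, plus a default parameter standing in for overloading. The source classes and the generated item strings are made up for the example.

```python
class RssSource:
    def fetch(self, limit=3):
        # The default value lets callers omit the argument
        return [f"rss item {n}" for n in range(1, limit + 1)]


class ApiSource:
    def fetch(self, limit=3):
        return [f"api item {n}" for n in range(1, limit + 1)]


def collect(sources, limit=2):
    """Call fetch() on every source without checking its class."""
    items = []
    for source in sources:
        items.extend(source.fetch(limit))
    return items


headlines = collect([RssSource(), ApiSource()])
```

collect() works with any object that has a compatible fetch() method, which is the "same interface" idea in practice.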

Implementing Encapsulation

Encapsulation is the practice of wrapping data and the methods that operate on that data within a single unit or class. This concept restricts access to some components of an object, thus maintaining control over the data.

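In Python, encapsulation relies on naming conventions rather than enforced access modifiers. A single leading underscore (_name) marks an attribute or method as internal by convention, while a double leading underscore (__name) triggers name mangling, which makes accidental access from outside the class or from subclasses harder but not impossible.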

Encapsulation ensures data integrity and protects object states by preventing external interference and misuse.

Through encapsulation, Python allows for the implementation of properties using decorators like @property. This enables the transformation of method calls into attribute access, keeping a clean and intuitive interface for modifying object data safely.
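A small sketch of these conventions, using a hypothetical Account class: one attribute with a single underscore, one with a double underscore, and a read-only property as the public view of the balance.

```python
class Account:
    def __init__(self, owner, balance):
        self.owner = owner          # public
        self._notes = []            # internal by convention only
        self.__balance = balance    # stored as _Account__balance (name mangling)

    @property
    def balance(self):
        # Read-only view of the mangled attribute
        return self.__balance

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.__balance += amount


account = Account("Ada", 100)
account.deposit(50)
```

Outside the class, account.__balance raises AttributeError, yet the value is still reachable under its mangled name, so the mechanism guards against accidents rather than enforcing privacy.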

Writing and Calling Methods

In this section, the focus is on creating and using methods within classes. This includes defining methods that handle tasks and utilizing different types of methods to manage class and instance interactions.

Defining Instance Methods

Instance methods are the most common kind of method in a Python class: they perform actions on a particular instance. They are defined with the def keyword inside a class, and their first parameter is conventionally named self, which refers to the instance the method is called on.

For example:

    class NewsFetcher:
        def fetch_news(self):
            print("Fetching news articles")

In this example, fetch_news is a simple method that prints a message. To call it, an object of NewsFetcher must be created:

    news = NewsFetcher()
    news.fetch_news()

Calling methods involves using the dot syntax on the class instance, which tells Python to execute the method on that specific object.

Using Static and Class Methods

Static methods are defined using the @staticmethod decorator. They do not access or modify the class state, making them useful for utility functions.

For example:

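    class NewsUtils:
        @staticmethod
        def format_article(article):
            # Placeholder formatting logic (assumes article is a string):
            # trim surrounding whitespace
            formatted_article = article.strip()
            return formatted_article

Static methods are called directly on the class without creating an instance:

    formatted = NewsUtils.format_article("  Python OOP basics  ")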

Class methods are marked with the @classmethod decorator and take cls as the first parameter, which represents the class itself. They are useful for factory methods that instantiate the class:

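    class NewsFetcher:
        def __init__(self, api_key):
            # Store the key so the factory method below has something to pass in
            self.api_key = api_key

        @classmethod
        def from_api(cls, api_key):
            return cls(api_key)

    fetcher = NewsFetcher.from_api("API_KEY")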

Both static and class methods extend the versatility of a class by offering additional layers of functionality.

Utilizing Data Structures in OOP

In object-oriented programming, data structures are essential for organizing and managing data effectively.

Lists and dictionaries can enhance the functionality of OOP projects by efficiently storing and managing objects and their attributes.

Working with Lists and Dictionaries

In OOP, lists and dictionaries are often used to manage collections of objects or related data. A list is ideal for storing objects of the same type, allowing iteration and easy access by index.

For instance, a list can hold multiple instances of a class such as Car, enabling operations over all car objects.

Dictionaries are useful when data needs to be associated with a unique key. They allow for quick lookups and updates, making them suitable for scenarios like a directory of objects where each item has a unique identifier.

In a news application, a dictionary might store articles, with each article’s title serving as the key.

Both lists and dictionaries support adding, removing, and updating items. Keeping the collection as an attribute of a class and changing it only through the methods of that class preserves encapsulation and data integrity.

Storing Objects in Data Structures

Objects can be stored in either lists or dictionaries to benefit from their unique features.

In a list, objects are stored in sequence, useful for ordered operations. This setup allows easy iteration and manipulation of the object collection.

In a dictionary, each object is stored as the value of a key-value pair, which matters when fast retrieval by key is important.

In a news application, storing article objects in a dictionary with a keyword as the key can facilitate quick access for updates or searches.

Using these structures effectively enhances the flexibility and performance of OOP systems, making it easier to manage complex data relations within a program.
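As a brief illustration of both structures, the sketch below keeps the same article objects in a list, in a dictionary keyed by title, and in a dictionary grouped by category. The Article class and the sample data are invented for the example.

```python
class Article:
    def __init__(self, title, category):
        self.title = title
        self.category = category


# A list keeps the objects in order and is easy to iterate over
articles = [
    Article("Python 3 tips", "tech"),
    Article("Cup final recap", "sport"),
    Article("Django basics", "tech"),
]
titles = [article.title for article in articles]

# A dictionary gives direct lookup by a unique key
by_title = {article.title: article for article in articles}

# A dictionary of lists groups objects under a shared keyword
by_category = {}
for article in articles:
    by_category.setdefault(article.category, []).append(article)
```

The list and both dictionaries refer to the same Article objects, so an update made through one structure is visible through the others.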

Best Practices for Python OOP

When practicing Object-Oriented Programming (OOP) in Python, focusing on reusability, modularity, and enhancing coding skills is crucial. Also, adhering to OOP guidelines ensures code efficiency and clarity.

Reusability and Modularity

Reusability is a core benefit of OOP. By creating classes and objects, developers can easily reuse code without duplicating efforts.

For instance, a class that defines a Car can be reused for different car models, reducing redundancy.

Modularity is about organizing code into smaller, manageable sections. This makes it easier to maintain and update programs.

In Python, using modular design allows developers to isolate changes. For example, modifying the Car class to add new features won’t affect other parts of the program.

By designing reusable classes and focusing on modularity, developers improve code efficiency and scalability.

Coding Skills Enhancement

Practicing Python OOP improves coding skills significantly. By working with classes and objects, programmers gain a deeper understanding of data abstraction and encapsulation. These concepts help in organizing complex systems neatly.

Engaging in OOP projects, like building a news aggregator, encourages problem-solving. Developers learn to break down large tasks into smaller functions and methods. This approach makes debugging and extending applications more manageable.

Developers also enhance their skills by understanding the relationships between objects. Implementing inheritance, for instance, allows them to utilize existing code effectively. Practicing these concepts increases adaptability to different programming challenges.

OOP Guidelines

Adhering to OOP guidelines is essential for writing effective Python code.

These guidelines include principles like DRY (Don’t Repeat Yourself), which advocates for reducing code duplication through the use of functions and methods.

Design patterns, such as the Singleton or Observer pattern, are vital in maintaining code structure. These patterns provide solutions to common design problems, improving maintainability.
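As one illustration, a minimal Observer sketch is shown below: a feed keeps a list of callbacks and calls each of them when something is published. The NewsFeed and Inbox names are hypothetical, and real projects often add unsubscribe and error handling.

```python
class NewsFeed:
    """The subject: it knows its subscribers but not what they do."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, headline):
        # Notify every subscriber in the order they registered
        for callback in self._subscribers:
            callback(headline)


class Inbox:
    """An observer that simply records what it is told."""

    def __init__(self):
        self.received = []

    def notify(self, headline):
        self.received.append(headline)


feed = NewsFeed()
inbox = Inbox()
feed.subscribe(inbox.notify)
feed.publish("Python 3 tips")
```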

Following naming conventions for classes and methods enhances code readability, making it easier for team collaboration.

Writing clean, well-documented code is another guideline that supports long-term project success. Proper documentation ensures that others can understand and modify the code efficiently.

By following these guidelines, developers produce robust and adaptable Python applications.

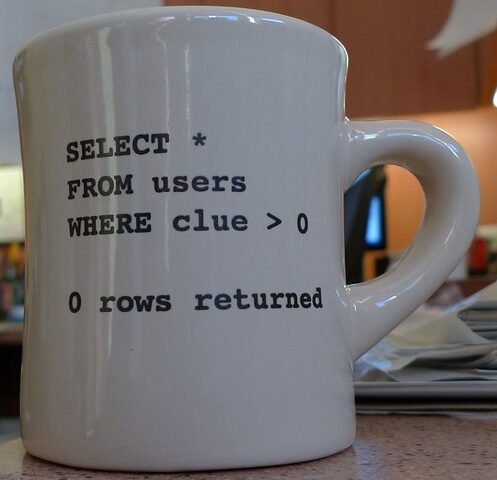

Developing Web Applications with Django and OOP

Developing web applications with Django involves using its framework to apply object-oriented programming principles. By leveraging Django, software engineers can efficiently structure Python projects, focusing on reusability and scalability. Two key areas to understand are the framework itself and the process of building a well-structured project.

Introduction to Django Framework

Django is a high-level framework that facilitates the development of web applications in Python. It follows the Model-View-Template (MVT) architecture, which separates code into distinct components. This separation aligns with object-oriented programming (OOP) by allowing developers to create reusable and maintainable code.

Key Features of Django:

- Admin Interface: Automatically generated and customizable.

- ORM: Facilitates database interactions using Python classes.

- Built-in Security: Guards against threats like SQL injection.

Understanding these features helps developers utilize Django effectively in OOP projects. The framework provides extensive documentation and community support, making it an excellent choice for both beginners and experienced software engineers.

Building and Structuring a Django Project

Creating a Django project involves setting up a structured directory format and utilizing its management commands. Engineers start by creating a new project and then adding applications, which are modular components of the project. This modular approach supports OOP by dividing functionality into separate, manageable parts.

Basic Project Structure:

- manage.py: Command-line utility.

- settings.py: Configuration file.

- urls.py: URL dispatcher.

Each application contains its own models, views, and templates, adhering to OOP principles by encapsulating functionality. Developers manage changes through migrations, which track database schema alterations.

By structuring projects this way, engineers can maintain clean and efficient codebases, beneficial for scalable and robust software development.

For a guide on creating an app in Django, GeeksforGeeks provides an insightful article on Python web development with Django.

Practical OOP Project Ideas

Practicing object-oriented programming by creating projects can boost understanding of design patterns and class structures. Two engaging projects include making a Tetris game and an Expense Tracker application, both using Python.

Creating a Tetris Game

Developing a Tetris game with Python allows programmers to practice using classes and objects creatively. The game needs classes for different shapes, the game board, and score-keeping.

In Tetris, each shape can be defined as a class with properties like rotation and position. The game board can also be a class that manages the grid and checks for filled lines.

Using event-driven programming, players can rotate and move shapes with keyboard inputs, enhancing coding skills in interactive applications.

Another important aspect is collision detection. As shapes fall, the program should detect collisions with the stack or bottom. This logic requires condition checks and method interactions, tying together several OOP concepts.
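The shape, board, and collision ideas above can be sketched without any graphics. This version stores a shape as a list of (row, column) offsets and the stack as a set of filled cells; the representation and all names are assumptions, and keyboard handling is left out.

```python
class Shape:
    def __init__(self, cells, row=0, col=0):
        self.cells = list(cells)  # (row, col) offsets from the shape origin
        self.row = row
        self.col = col

    def rotated(self):
        """Return a new shape turned 90 degrees clockwise around its origin."""
        return Shape([(c, -r) for r, c in self.cells], self.row, self.col)

    def moved(self, d_row, d_col):
        return Shape(self.cells, self.row + d_row, self.col + d_col)

    def positions(self):
        """Absolute board coordinates of every block in the shape."""
        return [(self.row + r, self.col + c) for r, c in self.cells]


class Board:
    def __init__(self, rows=20, cols=10):
        self.rows = rows
        self.cols = cols
        self.filled = set()  # cells occupied by locked shapes

    def collides(self, shape):
        for r, c in shape.positions():
            # Walls and floor count as collisions; space above the top does not
            if c < 0 or c >= self.cols or r >= self.rows:
                return True
            if (r, c) in self.filled:
                return True
        return False

    def lock(self, shape):
        self.filled.update(shape.positions())

    def full_rows(self):
        return [r for r in range(self.rows)
                if all((r, c) in self.filled for c in range(self.cols))]


# Drop a horizontal bar to the bottom of a small 4x4 board
board = Board(rows=4, cols=4)
piece = Shape([(0, 0), (0, 1), (0, 2), (0, 3)])
while not board.collides(piece.moved(1, 0)):
    piece = piece.moved(1, 0)
board.lock(piece)
```

Testing a move on a copy of the shape before committing to it keeps the collision check separate from the movement itself.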

A project like this is not only enjoyable but also solidifies understanding of object interactions and inheritance.

Expense Tracker Application

An Expense Tracker application helps users manage their finances, providing a practical use case for OOP projects. Key features may include adding expenses, viewing summaries, and categorizing transactions.

By creating an Expense class, individual transactions can include attributes like amount, date, and category. A Budget class could manage these expenses, updating the total amount available and issuing alerts for overspending.
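A possible sketch of these two classes is shown below. Expense is written as a dataclass for brevity, the date field is named day to avoid clashing with the date type, and the overspent flag stands in for an alert; all of these are assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Expense:
    amount: float
    day: date
    category: str


class Budget:
    def __init__(self, limit):
        self.limit = limit
        self._expenses = []  # only changed through add()

    def add(self, expense):
        if expense.amount <= 0:
            raise ValueError("amount must be positive")
        self._expenses.append(expense)

    @property
    def spent(self):
        return sum(expense.amount for expense in self._expenses)

    @property
    def remaining(self):
        return self.limit - self.spent

    @property
    def overspent(self):
        return self.spent > self.limit

    def totals_by_category(self):
        totals = {}
        for expense in self._expenses:
            totals[expense.category] = totals.get(expense.category, 0) + expense.amount
        return totals


budget = Budget(limit=100)
budget.add(Expense(40, date(2024, 1, 5), "food"))
budget.add(Expense(70, date(2024, 1, 9), "travel"))
```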

The program could also have a User Interface (UI) to enhance user interaction. For instance, using a simple command-line or a GUI library, users can enter details and view reports.

This application teaches how to manage data using collections like lists or dictionaries, and emphasizes the importance of maintaining data integrity through encapsulation.

Frequently Asked Questions

When developing a project that fetches news using Python and Object-Oriented Programming (OOP), beginners can explore structuring classes efficiently, handle API limits, and gain insights from open-source examples. Here are answers to common inquiries.

How can I use Python OOP for creating a news aggregator?

Python OOP can be used to design a news aggregator by creating classes for different components like news sources, articles, and a manager to organize these elements. This structure allows for easy updates and maintenance as new features are added.
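The class layout described above might be sketched as follows. To stay self-contained, each source returns in-memory headlines instead of calling a real API; the class names and sample data are hypothetical.

```python
class Article:
    def __init__(self, title, source_name):
        self.title = title
        self.source_name = source_name


class NewsSource:
    def __init__(self, name, headlines):
        self.name = name
        self._headlines = list(headlines)  # stand-in for data from a real API

    def fetch(self):
        return [Article(title, self.name) for title in self._headlines]


class NewsAggregator:
    """The manager: owns the sources and combines their articles."""

    def __init__(self):
        self._sources = []

    def add_source(self, source):
        self._sources.append(source)

    def all_articles(self):
        articles = []
        for source in self._sources:
            articles.extend(source.fetch())
        return articles

    def search(self, keyword):
        keyword = keyword.lower()
        return [a for a in self.all_articles() if keyword in a.title.lower()]


aggregator = NewsAggregator()
aggregator.add_source(NewsSource("Daily Tech", ["Python 3 tips", "Django basics"]))
aggregator.add_source(NewsSource("Sports Desk", ["Cup final recap"]))
matches = aggregator.search("python")
```

Adding a new source later only requires another object with a fetch() method; the aggregator itself does not change.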

What are some beginner-friendly Python OOP projects involving news APIs?

Beginners can start with projects like building a simple news headline fetcher or a categorized news display using free news APIs. Such projects involve creating classes to manage API requests and process data efficiently.

Where can I find Python OOP project examples with source code specifically for news collection?

Platforms like GitHub host numerous Python OOP projects focusing on news gathering. These examples often include source code for parsing news data effectively. Additionally, sites like Codecademy offer guided projects to practice these skills.

What are best practices for structuring classes in a Python news fetching project?

Best practices include defining clear responsibilities for each class, such as separating data fetching, parsing, and storage logic. Use inheritance for common features across different news sources and ensure that classes are modular for scalability.

Can you suggest any open-source Python OOP projects that focus on news gathering?

There are many projects on repositories like GitHub that focus on open-source news gathering. Reviewing these projects can provide insights into effective code structure and improve one’s ability to implement similar features.

How do I handle API rate limits when building a news-related Python OOP project?

Handling API rate limits involves implementing checks to control the frequency of API requests.

Strategies include caching results to reduce calls and using a scheduler to manage request intervals. These methods help in maintaining compliance with most API usage policies.
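Both strategies can be combined in one small wrapper class, sketched below. The fetch function is supplied by the caller, so no particular news API is assumed; the class name, the min_interval parameter, and the injectable clock and sleep arguments are all illustrative.

```python
import time


class RateLimitedClient:
    def __init__(self, fetch, min_interval=1.0, clock=time.monotonic, sleep=time.sleep):
        self._fetch = fetch                # function that performs the real request
        self._min_interval = min_interval  # minimum seconds between real requests
        self._clock = clock
        self._sleep = sleep
        self._cache = {}
        self._last_call = None

    def get(self, query):
        # Cached results cost no API call at all
        if query in self._cache:
            return self._cache[query]

        # Wait until enough time has passed since the previous real request
        if self._last_call is not None:
            wait = self._min_interval - (self._clock() - self._last_call)
            if wait > 0:
                self._sleep(wait)

        self._last_call = self._clock()
        result = self._fetch(query)
        self._cache[query] = result
        return result
```

This cache never expires, which is fine for a sketch; a news application would likely store a timestamp with each entry and refetch once it is too old.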