Foundations of Linear Algebra for Data Science

Linear algebra forms a crucial part of data science. It offers tools for representing data and operations efficiently, providing a foundation for machine learning algorithms.

Importance of Vectors and Matrices

Vectors and matrices are central in linear algebra and data science.

A vector, often represented as a list of numbers, describes quantities that have both magnitude and direction. In data science, vectors represent data points, with each element of the vector representing a feature.

Matrices are grid-like structures that organize numbers in rows and columns. They are used to represent systems of linear equations and data sets in a form that can be manipulated easily.

For example, performing matrix multiplication can apply transformations such as rotations and scaling to data sets. Understanding these structures allows data scientists to manage and analyze data efficiently.
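
As a minimal sketch of the rotation and scaling mentioned above, the snippet below applies two 2x2 matrices to a handful of points. The sample points, the 90-degree angle, and the scale factors are illustrative assumptions, not values from any particular data set.

```python
import numpy as np

# Three 2-D data points stored as columns (hypothetical sample data).
points = np.array([[1.0, 0.0, 1.0],
                   [0.0, 1.0, 1.0]])

theta = np.pi / 2  # rotate by 90 degrees counter-clockwise
rotation = np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])
scaling = np.diag([2.0, 0.5])  # stretch x by 2, shrink y by half

rotated = rotation @ points  # each column is a rotated point
scaled = scaling @ points    # each column is a rescaled point
```

Because every point is a column, one matrix product transforms the whole data set at once.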

Understanding Linear Transformations

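Linear transformations map data from one vector space to another and can be represented by matrices. They include rotations, scaling, reflections, and shears, all of which are critical for various algorithms in data science. Translations are not linear in this strict sense, because they move the origin; they belong to the broader family of affine transformations.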

By applying transformations, data can be positioned into a more usable form, making machine learning processes like classification or regression possible.

Linear transformations help simplify complex data by maintaining the operations that define the structure of vector spaces. They play a vital role when analyzing large data sets, allowing data scientists to model real-world scenarios with greater precision. Understanding these transformations enables effective data manipulation and is essential in many applications within the field.
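
The operations that a linear transformation maintains are vector addition and scalar multiplication. The sketch below checks that property numerically for a matrix map; a shift by a fixed vector, by contrast, fails the check, which is why translations are usually treated as affine rather than linear maps. The matrix, the shift, and the test vectors are arbitrary illustrative choices.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [0.0, 3.0]])
shift = np.array([1.0, -1.0])

def linear_map(x):
    """Multiply by a fixed matrix."""
    return A @ x

def translate(x):
    """Add a fixed offset (an affine map, not a linear one)."""
    return x + shift

u = np.array([1.0, 2.0])
v = np.array([-3.0, 0.5])
a, b = 2.0, -1.5

def preserves_combinations(f):
    # A linear map satisfies f(a*u + b*v) == a*f(u) + b*f(v).
    return bool(np.allclose(f(a * u + b * v), a * f(u) + b * f(v)))

linear_ok = preserves_combinations(linear_map)      # True
translation_ok = preserves_combinations(translate)  # False
```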

Key Concepts of Eigendecomposition

Eigendecomposition is a fundamental concept in linear algebra that simplifies complex matrix operations. By breaking down matrices into eigenvalues and eigenvectors, it reveals important properties and behaviors in mathematical modeling and analysis.

Defining Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are at the heart of eigendecomposition. An eigenvector is a non-zero vector that stays on the same line through the origin when a linear transformation is applied to it. The corresponding eigenvalue is the scalar that indicates how much the eigenvector is stretched or compressed along that line; a negative eigenvalue also reverses it.

The relationship between a matrix A, an eigenvector v, and an eigenvalue λ is expressed as: A * v = λ * v. This equation states that applying the matrix A to v only rescales the vector by λ and never turns it off its own line. Understanding these components is crucial for tasks such as data analysis and machine learning, where matrix transformations play a vital role.
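
The relationship A * v = λ * v can be checked numerically. The following sketch uses an arbitrary symmetric 2x2 matrix chosen for illustration and verifies the equation for each eigenpair returned by numpy.linalg.eig().

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# Column i of `eigenvectors` pairs with eigenvalues[i].
eigenvalues, eigenvectors = np.linalg.eig(A)

checks = []
for i in range(len(eigenvalues)):
    lam = eigenvalues[i]
    v = eigenvectors[:, i]
    # A @ v should equal lam * v up to floating-point error.
    checks.append(bool(np.allclose(A @ v, lam * v)))

all_pairs_valid = all(checks)  # True
```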

The Characteristic Equation in Eigendecomposition

The characteristic equation is an essential tool in finding eigenvalues. Given a square matrix A, the characteristic equation is derived from det(A – λI) = 0, where I is the identity matrix.

Solving this equation yields the eigenvalues of the matrix.

The roots of the characteristic equation, known as eigenvalues, help determine how transformations affect vector spaces. These insights are crucial in applications like Principal Component Analysis, where dimensionality reduction is required. By grasping the concept of the characteristic equation, learners can better understand the dynamics of linear transformations and their applications in data science.
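
For a 2x2 matrix the characteristic equation can be written out by hand, since det(A – λI) expands to λ² – trace(A)·λ + det(A). The sketch below, using an arbitrary example matrix, solves that quadratic and compares the roots with the eigenvalues NumPy computes directly.

```python
import numpy as np

A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

# Coefficients of λ² - trace(A)·λ + det(A); here λ² - 7λ + 10.
coefficients = [1.0, -np.trace(A), np.linalg.det(A)]
roots = np.sort(np.roots(coefficients))      # roots of the characteristic equation

eigenvalues = np.sort(np.linalg.eigvals(A))  # computed directly, for comparison
```

Both approaches give 2 and 5 for this matrix. For larger matrices, numerical libraries do not solve the polynomial explicitly, because root finding becomes unstable as the degree grows.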

Practical Applications of Eigendecomposition in Data Science

Eigendecomposition is a crucial technique in data science that helps with a variety of tasks, especially in understanding and simplifying complex data. Two primary areas where eigendecomposition is extensively applied are reducing the dimensionality of data and enhancing the effectiveness of machine learning models.

Facilitating Dimensionality Reduction

Dimensionality reduction is a key step in data preprocessing that helps make data easier to visualize and analyze.

Eigendecomposition plays a significant role by breaking down data matrices to reveal key patterns. This process involves identifying eigenvectors and eigenvalues, which can simplify a dataset while maintaining its core information.

Principal Component Analysis (PCA) is a common method for dimensionality reduction that relies on eigendecomposition. By transforming data into principal components, PCA captures the most important variance in the data, making it simpler to interpret.

This is especially useful in handling large datasets with many variables, making analysis more efficient.

For example, PCA helps in image processing by reducing noise and compressing data, which speeds up further processing. In this way, eigendecomposition allows data scientists to focus on the most relevant features without getting bogged down by unnecessary details.

Improving Machine Learning Algorithms

Machine learning algorithms benefit greatly from the use of eigendecomposition, especially in enhancing model accuracy and computational efficiency.

Eigendecomposition helps improve techniques like support vector machines (SVM) and clustering by transforming data into a more manageable format.

In clustering, for instance, eigendecomposition aids in understanding the structure of data clusters, helping algorithms to perform better. The features extracted through eigendecomposition can lead to better classification and prediction results.

Moreover, in neural networks, eigenvalue analysis can guide training: for example, the eigenvalues of the loss Hessian or of a weight matrix indicate how well conditioned the optimization problem is, which affects how quickly training converges. Used this way, eigendecomposition can help make machine learning solutions more effective, although the gains depend on the model and the data.

Diving Into Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is a technique used in data science to simplify datasets by transforming them into principal components. It helps in reducing dimensionality while retaining most of the data’s variance, making it valuable for both data compression and exploratory analysis.

PCA and Data Compression

In data science, PCA is crucial for data compression. It reduces the number of variables in a dataset while retaining most of the important information.

By identifying the directions known as principal components, PCA captures the maximum variance in the data. This transformation leads to a smaller set of variables, often with minimal loss of information.

Compression through PCA is highly beneficial in fields such as image and signal processing, where large data volumes can be problematic.

Through this technique, PCA transforms the original data into a few uncorrelated variables that store most of the essential information. The first few components usually explain a large portion of the variance, which makes it easier to handle and analyze.
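
How much variance the first few components explain can be read off the eigenvalues of the covariance matrix. The sketch below assumes a small synthetic data set in which the third feature is almost a copy of the first, so two components should capture nearly all the variance; the data and the random seed are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 200 samples, 3 features, third nearly redundant.
x = rng.normal(size=200)
y = rng.normal(size=200)
X = np.column_stack([x, y, x + 0.1 * rng.normal(size=200)])

cov = np.cov(X, rowvar=False)                # 3x3 covariance matrix
eigenvalues = np.linalg.eigvalsh(cov)[::-1]  # sorted largest first

explained_ratio = eigenvalues / eigenvalues.sum()  # share of variance per component
cumulative = np.cumsum(explained_ratio)            # running total
```

A common rule of thumb is to keep the smallest number of components whose cumulative share passes a chosen threshold, such as 95 percent.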

PCA is particularly useful for reducing storage needs and speeding up data processing tasks.

Exploratory Data Analysis with PCA

PCA is also a powerful tool for exploratory data analysis. It helps data scientists visualize complex data by projecting it into a lower-dimensional space.

This can reveal patterns, trends, and outliers that are not apparent in higher dimensions. For instance, when dealing with datasets involving numerous features, PCA simplifies the data to just two or three dimensions for easy visualization.

Furthermore, PCA helps identify relationships between variables by reducing noise and focusing on the most significant data aspects. This can make it easier to interpret data and design machine learning models.

The reduction in dimensions not only simplifies datasets but can also improve model performance by reducing overfitting and improving generalization to new data.

Understanding Matrix Operations and Decompositions

Matrix operations and decompositions are key concepts in linear algebra, playing a vital role in simplifying complex mathematical problems. Key methods include eigendecomposition and singular value decomposition, both of which break down matrices into simpler, more manageable parts.

Operations Integral to Eigendecomposition

Eigendecomposition involves breaking a matrix into its eigenvectors and eigenvalues. This decomposition allows for simpler analysis and manipulation of matrices in various applications.

Eigenvectors are vectors that do not change direction during a transformation, and eigenvalues scale them.

To perform eigendecomposition, calculate the eigenvalues as roots of the characteristic polynomial. Once eigenvalues are identified, corresponding eigenvectors are determined.

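These eigenvectors form the columns of a matrix, often denoted as P, while the eigenvalues form the diagonal of a diagonal matrix, D. When A has a full set of linearly independent eigenvectors (that is, when A is diagonalizable), the relationship can be expressed as A = PDP⁻¹, where A is the original matrix.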
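
The factorization can be reproduced in a few lines. This sketch assumes a small diagonalizable example matrix and shows one practical payoff: powers of A reduce to powers of the diagonal entries.

```python
import numpy as np

A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

eigenvalues, P = np.linalg.eig(A)  # columns of P are eigenvectors
D = np.diag(eigenvalues)           # eigenvalues on the diagonal
P_inv = np.linalg.inv(P)

A_rebuilt = P @ D @ P_inv          # recovers A up to rounding error

# A^5 = P D^5 P^-1, and D^5 only needs each eigenvalue raised to the 5th power.
A_pow5 = P @ np.diag(eigenvalues ** 5) @ P_inv
```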

Comparison of Eigendecomposition and Singular Value Decomposition

Although similar, eigendecomposition and singular value decomposition (SVD) have distinct purposes.

SVD applies to any matrix, regardless of being square or not, making it versatile. It decomposes a matrix A into three other matrices, A = UΣVᵀ, where U and V are orthogonal, and Σ is a (possibly rectangular) diagonal matrix holding the non-negative singular values.

Eigendecomposition, on the other hand, is specific to square matrices, and only those that are diagonalizable. It provides insight into matrix properties and transformations.

In comparing SVD and eigendecomposition, SVD excels in handling non-square matrices and is often used in data reduction and noise reduction. Eigendecomposition is favored for matrices where eigenvalues and eigenvectors directly provide useful information.
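
The two decompositions are closely linked: the squared singular values of a matrix M equal the non-zero eigenvalues of M Mᵀ. The sketch below illustrates this on a small non-square matrix chosen for the example.

```python
import numpy as np

# A 2x3 matrix: eigendecomposition does not apply directly, SVD does.
M = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

U, s, Vt = np.linalg.svd(M, full_matrices=False)
M_rebuilt = U @ np.diag(s) @ Vt  # recovers M up to rounding error

# M @ M.T is square and symmetric, so eigvalsh applies; reverse to sort descending.
eig_of_MMt = np.linalg.eigvalsh(M @ M.T)[::-1]

squared_singular_values = s ** 2  # matches eig_of_MMt: 12 and 10
```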

The Role of Linear Algebra in Machine Learning

Linear algebra is the backbone of many machine learning techniques. It helps by simplifying complex calculations and enhancing data interpretations, making it an essential tool for data scientists and engineers.

Using Algebraic Methods for Feature Extraction

Linear algebra techniques like singular value decomposition (SVD) and principal component analysis (PCA) are pivotal in feature extraction.

PCA, for instance, is a technique that reduces the dimensions of data by transforming it into a set of linearly uncorrelated variables called principal components. This helps in highlighting the most important features of the data while discarding noise.

SVD also finds widespread use in these processes. It’s particularly valuable for making sense of large matrices by breaking them into smaller, manageable ones.

This decomposition aids in compressing and simplifying datasets, which is crucial for enhancing computational efficiency. By distilling data into its fundamental components, linear algebra not only makes machine learning tasks faster but also more accurate.

Algebraic Intuition in Machine Learning Algorithms

Linear algebra gives deep insights into understanding and developing machine learning algorithms.

Neural networks, for example, rely heavily on operations involving matrices and vectors. The weight matrices and input data vectors undergo multiple algebraic transformations, which is foundational to the network’s learning process.
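
A single fully connected layer gives a sense of these transformations: a matrix-vector product, a bias shift, and an elementwise nonlinearity. The weights, bias, and input below are hypothetical values picked for the illustration, and ReLU is one common choice of activation among many.

```python
import numpy as np

W = np.array([[1.0, -1.0],
              [0.5,  2.0]])  # weight matrix (hypothetical values)
b = np.array([0.0, -3.0])    # bias vector (hypothetical values)
x = np.array([2.0, 1.0])     # one input vector

pre_activation = W @ x + b                # [1.0, 0.0]
output = np.maximum(pre_activation, 0.0)  # ReLU keeps positive parts: [1.0, 0.0]
```

Stacking several such layers amounts to alternating matrix products with nonlinear functions, which is why efficient matrix arithmetic matters so much in practice.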

Support vector machines (SVMs) also use linear algebra concepts like dot products and matrices to separate classes with a hyperplane in high-dimensional spaces. Such operations allow algorithms to predict outcomes effectively.

By using algebraic principles, machine learning models optimize learning, enabling precise and efficient predictions. Insights gained from linear algebra thus empower developers, helping to enhance their models and tackle diverse data challenges.

Leveraging Python for Linear Algebra Computations

Python is a powerful tool for handling linear algebra tasks, thanks to its robust libraries. NumPy stands out for efficient operations on arrays and matrices, and its built-in eigenvalue routines simplify tasks like eigendecomposition.

Utilizing NumPy for Efficient Computations

NumPy is widely recognized for its efficiency in linear algebra computations. It provides tools to work with vectors and matrices, essential for data science tasks.

Using functions like numpy.dot() or numpy.matmul(), users can perform matrix multiplication easily. The library also supports operations such as solving linear equations and finding determinants with numpy.linalg.solve() and numpy.linalg.det(), respectively.
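
A short sketch of the functions named above, applied to a small system of two equations (3x + y = 9 and x + 2y = 8) chosen for illustration:

```python
import numpy as np

A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([9.0, 8.0])

product = np.matmul(A, A)        # matrix product; same result as A @ A
solution = np.linalg.solve(A, b) # solves A @ solution = b, giving x = 2, y = 3
det_A = np.linalg.det(A)         # 3*2 - 1*1 = 5
```

numpy.linalg.solve() is generally preferable to computing the inverse explicitly and multiplying, since it is faster and numerically more stable.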

With its focus on speed and efficiency, NumPy makes it feasible to handle large-scale data. It abstracts complex mathematical operations, allowing users to focus on problem-solving rather than computational details.

The library’s seamless integration with other Python libraries enhances its utility in various data science and machine learning projects.

Implementing Algorithms for Eigendecomposition

Eigendecomposition involves breaking down matrices into their eigenvalues and eigenvectors, a critical operation in many scientific fields.

Within Python, NumPy’s numpy.linalg.eig() function computes the eigendecomposition of square matrices. It returns the eigenvalues as a one-dimensional array, together with a matrix whose columns are the corresponding eigenvectors.

These eigenvalues and vectors are essential in tasks such as dimensionality reduction. They are often used in machine learning and principal component analysis (PCA).

Efficient algorithms within libraries like NumPy enable users to compute these values rapidly, handling both real and complex matrices.
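
The sketch below shows two situations worth knowing about. A real matrix can have complex eigenvalues, as a 90-degree rotation does, and for symmetric matrices numpy.linalg.eigh() is the more suitable routine because it returns real eigenvalues in ascending order. Both matrices are illustrative choices.

```python
import numpy as np

# A 90-degree rotation leaves no real direction unchanged,
# so its eigenvalues are the complex pair +i and -i.
R = np.array([[0.0, -1.0],
              [1.0,  0.0]])
rot_values, rot_vectors = np.linalg.eig(R)

# For a symmetric matrix, eigh returns real eigenvalues sorted ascending
# and orthonormal eigenvectors as columns.
S = np.array([[2.0, 1.0],
              [1.0, 2.0]])
sym_values, sym_vectors = np.linalg.eigh(S)  # sym_values is [1.0, 3.0]
```

Covariance matrices are symmetric, so eigh() is usually the natural choice when the goal is PCA.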

Python’s user-friendly syntax and powerful library support make it an excellent choice for implementing such algorithms. It simplifies advanced mathematical computations for users.

Geometric Interpretation of Eigendecomposition

Eigendecomposition is a method in linear algebra providing insight into the structure of linear transformations. It helps to explain how movements and changes in vector spaces can be understood visually and mathematically.

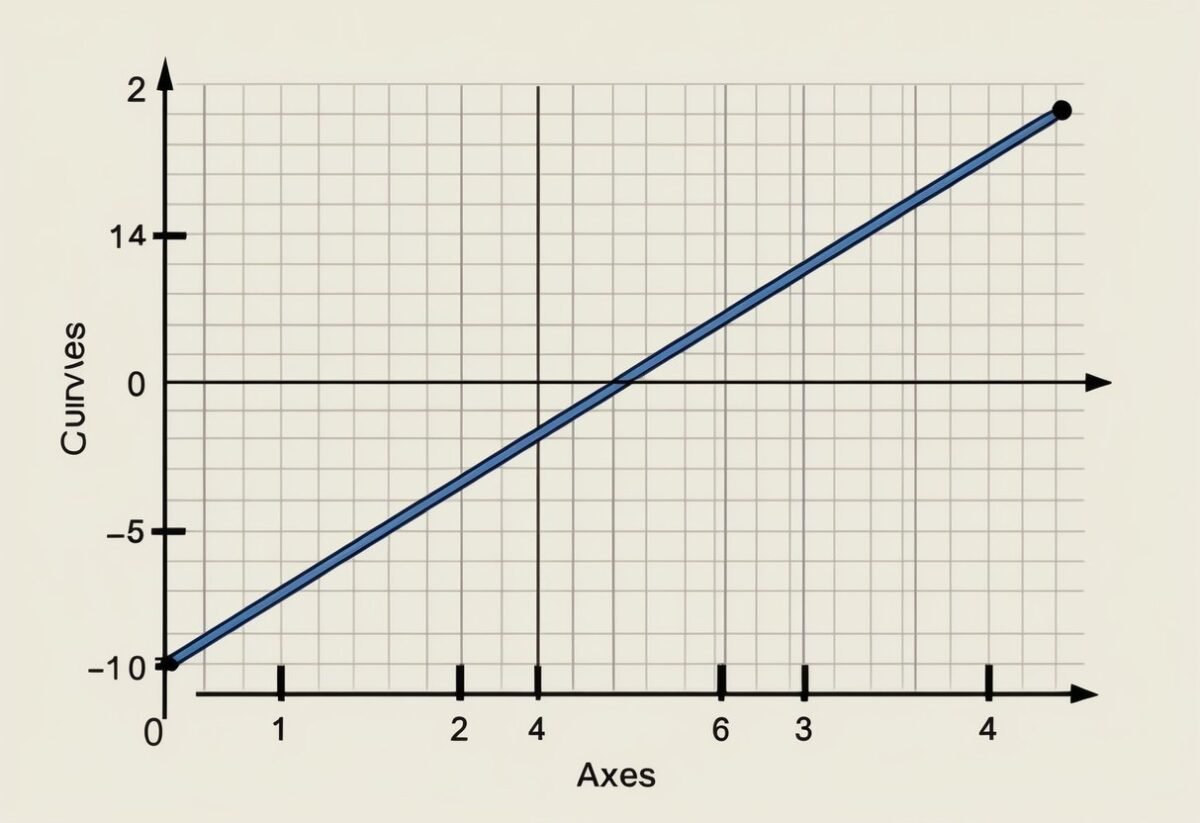

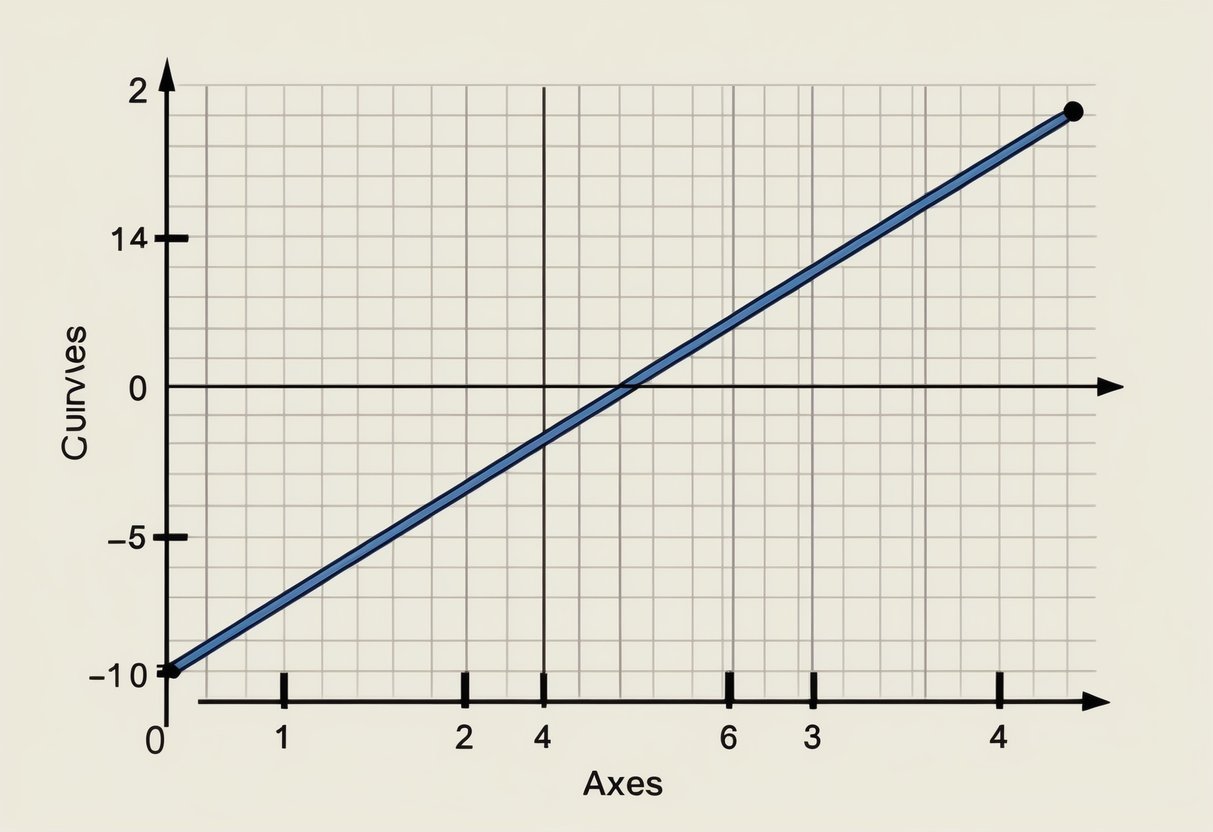

Visualizing Vector Spaces and Transformations

Eigendecomposition involves breaking down matrices into their eigenvectors and eigenvalues, which represent directions and scaling factors in the space.

In a geometric sense, eigenvectors indicate directions that remain unchanged during transformation, while eigenvalues indicate how much those directions stretch or shrink.

When you apply eigendecomposition, it’s like discovering a matrix’s secret basis, one in which the transformation reduces to stretching, compressing, or flipping space along a few fixed directions.

In linear transformations, certain vectors might rotate or scale, but eigenvectors maintain their direction, providing a clear picture of these transformations.

Understanding the Geometric Implications of PCA

Principal Component Analysis (PCA) uses eigendecomposition to reduce the dimensionality of data. This technique helps in identifying the principal components, which are the directions of maximum variance in the data.

By focusing on these key directions, PCA simplifies complex datasets, making it easier to visualize and interpret high-dimensional data.

In PCA, the covariance matrix is decomposed into its eigenvectors and eigenvalues. Here, eigenvectors represent the axes of the new feature space, and eigenvalues reflect the importance of each axis.
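
The steps described here can be sketched directly with NumPy. The data below is synthetic (random values mixed to create correlated features), and keeping k = 2 components is an arbitrary choice for the illustration; a library implementation such as scikit-learn's PCA would normally be used in practice.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical data: 100 samples, 4 correlated features.
X = rng.normal(size=(100, 4)) @ rng.normal(size=(4, 4))

# 1. Center each feature at zero.
X_centered = X - X.mean(axis=0)

# 2. Compute the covariance matrix of the features.
cov = np.cov(X_centered, rowvar=False)

# 3. Decompose it; eigh suits symmetric matrices and sorts ascending.
eigenvalues, eigenvectors = np.linalg.eigh(cov)

# 4. Reorder so the direction of largest variance comes first.
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

# 5. Project onto the first k eigenvectors (the new axes).
k = 2
components = eigenvectors[:, :k]
X_reduced = X_centered @ components  # shape (100, 2)
```

The columns of X_reduced are uncorrelated, and the variance of each one equals the corresponding eigenvalue, which is the sense in which eigenvalues measure the importance of each axis.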

This geometric understanding aids data scientists in recognizing key patterns and structures in data, enhancing both analysis and decision-making processes.

Mathematical and Statistical Principles in Data Science

Understanding the mathematical and statistical principles within data science involves exploring concepts such as covariance matrices and dimensionality reduction. These ideas play a key role in transforming complex data into understandable insights.

Relating Covariance Matrices to Eigenproblems

A covariance matrix is a fundamental tool in data analysis, capturing how variables in a dataset vary in relation to each other. It’s essential for understanding data spread and correlation.
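
As a minimal sketch, the covariance matrix can be built by hand from centered data and compared with numpy.cov(). The five samples below are made-up values, and the n − 1 divisor assumes the usual sample (unbiased) estimate.

```python
import numpy as np

# Hypothetical data: 5 samples (rows) of 2 features (columns).
X = np.array([[2.0, 1.0],
              [4.0, 3.0],
              [6.0, 5.0],
              [8.0, 9.0],
              [10.0, 12.0]])

X_centered = X - X.mean(axis=0)  # subtract each feature's mean
n_samples = X.shape[0]

# Entry (i, j) is the covariance between feature i and feature j.
cov_manual = X_centered.T @ X_centered / (n_samples - 1)
cov_numpy = np.cov(X, rowvar=False)  # rowvar=False: columns are variables
```

The diagonal holds each feature's variance, and the matrix is symmetric because the covariance of i with j equals that of j with i.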

When dealing with multidimensional data, it’s often helpful to find directions in which the data has the most variance.

This is where eigenproblems come in. By solving them, particularly through eigendecomposition, the principal components of a dataset can be identified.

These components are directions that capture the largest variance, and are found using eigenvectors and eigenvalues from the covariance matrix.

Applications such as linear transformations and data projections heavily rely on these principles. They help in simplifying datasets while maintaining their essential characteristics. Identifying these directions aids in understanding the intrinsic geometry of the data.

Statistical Foundations for Dimensionality Reduction

Dimensionality reduction aims to reduce the number of variables under consideration and can improve data processing efficiency. It is crucial in dealing with high-dimensional data, which is often computationally demanding.

Statistics offer several methods for this, including Principal Component Analysis (PCA), which uses the principles of covariance matrices.

PCA is a technique that transforms the data into a new coordinate system. The greatest variance by any projection of the data lies on the first coordinate (called the first principal component), the second greatest variance on the second coordinate, and so on.

This method enables effective data simplification and visualization.

Dimensionality reduction allows for efficient storage and analysis while minimizing the risk of overfitting. Techniques like these are foundational in data science, providing tools to handle and interpret massive datasets effectively. They are indispensable in scenarios ranging from image processing to predictive modeling.

Advanced Topics in Linear Algebra for Data Science

Understanding advanced topics in linear algebra is crucial for applying these concepts in data science. This section explores diagonal matrices, orthogonality, and the role of basis vectors, fundamental elements enhancing data analysis techniques.

Exploring Diagonal Matrix and Orthogonality

Diagonal matrices are a special type of square matrix in which all off-diagonal elements are zero. This simplicity makes them easy to work with, particularly in operations like matrix multiplication or finding the inverse.

They are often used in eigendecomposition, simplifying complex calculations in machine learning algorithms.
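
The sketch below illustrates why diagonal matrices are cheap to work with: the inverse and any power can be computed entry by entry on the diagonal. The diagonal values are arbitrary non-zero numbers chosen for the example.

```python
import numpy as np

d = np.array([2.0, 4.0, 5.0])  # diagonal entries (all non-zero)
D = np.diag(d)

# The inverse of a diagonal matrix just takes reciprocals of the diagonal.
D_inv = np.diag(1.0 / d)

# Powers act on each diagonal entry independently.
D_cubed = np.diag(d ** 3)
```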

Orthogonality describes vectors that are perpendicular to each other: two vectors are orthogonal if their dot product is zero.

In data science, this concept is vital because orthogonal vectors form the basis for defining independent features, reducing redundancy in data.

Orthogonal matrices, having orthonormal rows and columns, preserve vector lengths and angles, aiding in transformations and data rotation.
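
These properties are easy to confirm numerically. The sketch below uses a 30-degree rotation as an example of an orthogonal matrix and two arbitrary perpendicular vectors.

```python
import numpy as np

u = np.array([3.0, 4.0])
v = np.array([-4.0, 3.0])
dot_uv = u @ v  # 3*(-4) + 4*3 = 0, so u and v are orthogonal

theta = np.pi / 6  # 30-degree rotation, an example orthogonal matrix
Q = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])

is_orthogonal = bool(np.allclose(Q.T @ Q, np.eye(2)))  # Q^T Q = I

# Lengths and dot products (hence angles) are unchanged by Q.
length_before = np.linalg.norm(u)     # 5.0
length_after = np.linalg.norm(Q @ u)  # still 5.0 up to rounding
dot_after = (Q @ u) @ (Q @ v)         # still 0 up to rounding
```

One useful consequence is that the inverse of an orthogonal matrix is simply its transpose, so undoing the transformation requires no extra computation.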

The Significance of Basis Vectors

Basis vectors are a set of vectors in a vector space that are linearly independent and span the space. They are essential because any vector in the space can be expressed as a linear combination of the basis vectors.
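
Finding the coefficients of that linear combination amounts to solving a small linear system. In the sketch below, the two basis vectors and the target vector are arbitrary examples.

```python
import numpy as np

# Columns are the basis vectors b1 = (1, 0) and b2 = (1, 2).
B = np.array([[1.0, 1.0],
              [0.0, 2.0]])
x = np.array([3.0, 4.0])

# Full rank means the columns are linearly independent and span the plane.
rank = np.linalg.matrix_rank(B)  # 2

# Solve B @ coords = x for the coordinates of x in this basis.
coords = np.linalg.solve(B, x)   # [1.0, 2.0], i.e. x = 1*b1 + 2*b2

x_rebuilt = coords[0] * B[:, 0] + coords[1] * B[:, 1]
```

PCA follows the same idea: it picks an orthonormal basis (the eigenvectors of the covariance matrix) and re-expresses every data point in those coordinates.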

In data science, understanding basis vectors helps in techniques like principal component analysis (PCA), which reduces data dimensions while preserving variance.

A clear choice of basis vectors can simplify complex data sets, making analysis more efficient. They provide a framework for understanding how different features influence data and model outcomes. This understanding is critical in making informed decisions about data transformations and model interpretations.

Frequently Asked Questions

This section addresses the role of eigendecomposition in data science, its applications in machine learning algorithms, and the importance of certain linear algebra concepts for data scientists’ tasks. Discover how these mathematical tools are used to enhance data models and everyday operations.

What are the practical applications of eigenvectors and eigenvalues in data science?

Eigenvectors and eigenvalues are essential for data simplification. They help in reducing data dimensions, making complex datasets easier to analyze.

They are widely used in techniques like Principal Component Analysis (PCA) to identify patterns and trends in large datasets.

What are the best resources for understanding linear algebra in the context of data science?

Online platforms such as DataCamp offer tutorials on eigenvectors and eigenvalues, providing key insights relevant to data science.

Books and in-depth courses that focus on the application of linear algebra in machine learning can also be helpful.

How is eigendecomposition applied in machine learning algorithms?

Eigendecomposition plays a crucial role in algorithms that require data transformation or compression. It helps in breaking down matrices into simpler forms, which enhances the algorithm’s efficiency in processing data. The closely related Singular Value Decomposition (SVD) extends the same idea to non-square matrices: its singular values are the square roots of the eigenvalues of AᵀA.

Why is understanding the eigendecomposition crucial for improving data models?

Eigendecomposition provides insights into the properties of matrices, which are central in modeling relationships between variables.

By understanding these properties, data scientists can refine models to better capture underlying data patterns and improve predictive accuracy.

Which concepts in linear algebra are most essential for proficiency in data science?

Key concepts include matrices, vectors, eigenvalues, eigenvectors, and matrix decompositions like eigendecomposition.

Mastery of these allows for effective manipulation and interpretation of data, crucial for designing algorithms used in machine learning and data analysis.

How can learning linear algebra benefit a data scientist in their everyday tasks?

Proficiency in linear algebra aids in performing complex data transformations. It also enhances data visualization and optimization tasks. Data scientists use these skills daily to build and refine predictive models. This ensures their outcomes are precise and reliable.