Understanding SQL Fundamentals

SQL is an essential tool for managing and interacting with data. It is used to query databases and to analyze large datasets effectively.

Mastering SQL involves learning its syntax, understanding data types, and executing basic queries.

SQL Basics and Syntax

SQL, or Structured Query Language, is used to interact with databases. It has a specific syntax that dictates how commands are written and executed.

Following this syntax matters because the database rejects statements it cannot parse, and consistent syntax keeps queries readable and predictable.

SQL commands are used to query, create, update, and delete data. Each statement is built from keywords (such as SELECT, INSERT, UPDATE, and DELETE), clauses, and expressions, and is usually terminated with a semicolon. Knowing the correct structure of each command allows users to perform database tasks efficiently.

Understanding SQL syntax helps users retrieve meaningful data quickly and accurately.

Data Types and Structures

Data types are critical in SQL as they define the kind of data that a table column can hold. They ensure that data is stored in an organized and structured manner.

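Common data types include integers (INTEGER), exact decimals (DECIMAL or NUMERIC), strings (VARCHAR, TEXT), and dates and times (DATE, TIMESTAMP).

Choosing the right data type is important for database performance and storage efficiency. It also keeps comparisons and sorting correct: numbers stored as text, for example, sort '10' before '9'.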

SQL structures such as tables, rows, and columns provide a framework for storing and organizing data. This structured approach allows for efficient data retrieval and manipulation, which is vital for data-driven tasks.
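As a minimal sketch of declaring column types, the example below runs a CREATE TABLE statement through Python's built-in sqlite3 module against an in-memory SQLite database. The employees table and its values are hypothetical. SQLite's type system is looser than PostgreSQL's or MySQL's (it has no dedicated date storage class, so dates are commonly kept as ISO-8601 text), so exact type names differ between systems.

```python
import sqlite3

# In-memory SQLite database; the table and data are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE employees (
        id        INTEGER PRIMARY KEY,  -- whole numbers
        name      TEXT NOT NULL,        -- strings
        salary    REAL,                 -- decimal values (floating point in SQLite)
        hire_date TEXT                  -- ISO-8601 date text in SQLite
    )
""")
conn.execute(
    "INSERT INTO employees (name, salary, hire_date) VALUES (?, ?, ?)",
    ("Ada", 52000.50, "2024-03-15"),
)

# typeof() reports the storage class SQLite actually used for each value.
storage_classes = conn.execute(
    "SELECT typeof(id), typeof(name), typeof(salary), typeof(hire_date) FROM employees"
).fetchone()
print(storage_classes)  # ('integer', 'text', 'real', 'text')
```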

Basic SQL Queries: Select, From, Where

Basic SQL queries often involve the use of the SELECT, FROM, and WHERE clauses, which are fundamental in data retrieval.

The SELECT clause specifies the columns (or expressions) to return.

The FROM clause indicates the table from which to retrieve data. Meanwhile, the WHERE clause is used to filter records based on specific conditions.

These commands form the backbone of most SQL operations, allowing users to fetch and analyze data with precision. Knowing how to construct these queries is important for gaining insights from databases.
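The three clauses can be seen together in a small sketch. It uses Python's sqlite3 module with an in-memory SQLite database and a hypothetical students table (the same columns as the INSERT example later in this article); the ORDER BY is only there to make the output order predictable.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE students (name TEXT, age INTEGER, grade TEXT)")
conn.executemany(
    "INSERT INTO students (name, age, grade) VALUES (?, ?, ?)",
    [("John Doe", 15, "10th"), ("Jane Roe", 16, "11th"), ("Sam Poe", 17, "12th")],
)

# SELECT picks the columns, FROM names the table, WHERE filters the rows.
rows = conn.execute(
    "SELECT name, grade FROM students WHERE age >= 16 ORDER BY name"
).fetchall()
print(rows)  # [('Jane Roe', '11th'), ('Sam Poe', '12th')]
```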

Setting up the SQL Environment

Setting up a SQL environment involves selecting an appropriate database, following proper installation procedures, and choosing the right management tools. These steps lay a solid foundation for data engineering work.

Choosing the Right Database

Selecting the right database system can significantly impact a data engineer’s workflow. Among beginner-friendly systems, PostgreSQL and MySQL are popular choices.

PostgreSQL is known for its advanced features and extensions, making it suitable for complex applications. MySQL, on the other hand, offers a simpler setup with a focus on speed and reliability.

Cloud platforms like AWS and Azure provide scalable solutions for database hosting. Amazon RDS offers managed PostgreSQL and MySQL, and Azure offers the equivalent managed services Azure Database for PostgreSQL and Azure Database for MySQL, allowing data engineers to leverage cloud-based resources effectively.

Installation and Configuration

The installation and configuration process varies depending on the chosen database.

On Windows and macOS, installing PostgreSQL typically involves downloading the installer from the official website and following the setup wizard; on Linux it is usually installed through the distribution's package manager. The psql command-line client is included and is widely used for database management.

MySQL installation follows a similar path. The installer guides users through setting up essential configurations like root passwords and initial databases.

Cloud platforms like AWS and Azure offer powerful alternatives, where databases can be set up in a managed environment without local installations.

Both platforms provide detailed documentation and support for installation, ensuring smooth setup.

For those using development platforms like GitHub Codespaces, database configurations can be pre-set, speeding up the initiation of projects and minimizing local setup requirements.

Database Management Tools

Effective management of databases often requires specialized tools.

Popular options for PostgreSQL include pgAdmin, a feature-rich graphical interface, and DBeaver, which supports multiple databases. MySQL users widely favor MySQL Workbench for its intuitive design and powerful features.

Cloud management tools in AWS and Azure offer dashboards for database monitoring and administration. These interfaces simplify tasks such as backups, scaling, and performance tuning.

Integrating these tools into a data engineer’s workflow ensures efficient database management, whether hosted locally or in the cloud. These tools support both beginner and advanced needs, providing flexibility and control over database systems.

Database Design Principles

Database design ensures efficient data management and retrieval and provides a robust structure for storing data. Understanding relational databases, database schemas, and normalization helps teams make more effective use of their data.

Understanding Relational Databases

Relational databases store data in a structured format, using tables that relate to each other through keys. They follow a model that organizes data into one or more tables, also known as relations, each consisting of rows and columns.

- Tables: Essential building blocks that represent data entities.

- Primary Keys: Unique identifiers for table records.

- Foreign Keys: Columns in one table that reference the primary key (or another unique key) of another table, linking the two tables.

Using these components helps maintain data integrity and reduces redundancy.
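A minimal sketch of primary and foreign keys in action, assuming hypothetical customers and orders tables in an in-memory SQLite database accessed through Python's sqlite3 module. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`; PostgreSQL and MySQL (InnoDB) enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when this is on

conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("""
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers (customer_id),
        amount      REAL
    )
""")
conn.execute("INSERT INTO customers (customer_id, name) VALUES (1, 'Acme')")
conn.execute("INSERT INTO orders (customer_id, amount) VALUES (1, 99.0)")  # customer 1 exists

try:
    # Customer 42 does not exist, so the foreign key rejects this row.
    conn.execute("INSERT INTO orders (customer_id, amount) VALUES (42, 10.0)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

print(orphan_rejected)  # True
```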

Database Schemas

A database schema is the blueprint of how data is organized. It defines tables, fields, relationships, and other elements like views and indexes.

Visualizing schemas through diagrams helps in understanding data flow and constraints.

Schema Design Steps:

- Identify entities, attributes, and relationships.

- Define each table with primary keys.

- Set relationships through foreign keys.

A well-structured schema ensures efficient queries and data access, enhancing overall database performance.

Normalization and Constraints

Normalization is the process of organizing data to reduce duplication and ensure data integrity. It typically involves splitting larger tables into smaller, related tables and defining relationships between them.

Normalization is done in stages, known as normal forms, each with specific requirements.

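- First Normal Form (1NF): Each column holds a single, atomic value, there are no repeating groups of columns, and each row can be uniquely identified.

- Second Normal Form (2NF): The table is in 1NF, and every non-key column depends on the whole primary key rather than on just part of a composite key.

- Third Normal Form (3NF): The table is in 2NF, and non-key columns depend only on the primary key, not on other non-key columns (no transitive dependencies).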
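As a rough illustration of moving toward 3NF, the sketch below starts from a hypothetical flat orders table in which customer_city depends on the customer rather than on the order (a transitive dependency), then splits it into customers and orders tables with plain SQL. It runs on an in-memory SQLite database through Python's sqlite3 module; table names and data are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Unnormalized: the customer's city is repeated on every order row.
conn.execute(
    "CREATE TABLE orders_flat (order_id INTEGER PRIMARY KEY, "
    "customer_name TEXT, customer_city TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders_flat VALUES (?, ?, ?, ?)",
    [(1, "Acme", "Oslo", 50.0), (2, "Acme", "Oslo", 75.0), (3, "Globex", "Lima", 20.0)],
)

# Normalized: city is stored once per customer; orders reference customers by key.
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT UNIQUE, city TEXT);
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customers (customer_id),
        amount      REAL
    );
    INSERT INTO customers (name, city)
        SELECT DISTINCT customer_name, customer_city FROM orders_flat;
    INSERT INTO orders (order_id, customer_id, amount)
        SELECT f.order_id, c.customer_id, f.amount
        FROM orders_flat AS f JOIN customers AS c ON c.name = f.customer_name;
""")

# A change of address now touches one row instead of every matching order.
updated = conn.execute("UPDATE customers SET city = 'Bergen' WHERE name = 'Acme'").rowcount
print(updated)  # 1
```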

Constraints such as PRIMARY KEY, FOREIGN KEY, UNIQUE, NOT NULL, and CHECK enforce data integrity rules. The database rejects any insert or update that would violate them, which keeps invalid data out of tables and is crucial for reliable database systems.
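To see constraints rejecting bad data, here is a minimal sketch using Python's sqlite3 module and a hypothetical users table in an in-memory SQLite database. The exact error messages are SQLite's and will read differently in other systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE users (
        user_id INTEGER PRIMARY KEY,
        email   TEXT NOT NULL UNIQUE,    -- required, no duplicates
        age     INTEGER CHECK (age >= 0) -- allowed range of values
    )
""")
conn.execute("INSERT INTO users (email, age) VALUES ('a@example.com', 30)")

def try_insert(email, age):
    """Attempt an insert; return None on success or the constraint error text."""
    try:
        conn.execute("INSERT INTO users (email, age) VALUES (?, ?)", (email, age))
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)

duplicate_error = try_insert("a@example.com", 25)  # violates UNIQUE
missing_error = try_insert(None, 25)               # violates NOT NULL
negative_error = try_insert("b@example.com", -1)   # violates CHECK
accepted = try_insert("c@example.com", 41)         # satisfies every constraint

for message in (duplicate_error, missing_error, negative_error, accepted):
    print(message)
```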

Writing Advanced SQL Queries

Advanced SQL skills include sophisticated techniques such as joins, subqueries, and window functions to handle complex data processing tasks. These methods allow data engineers to efficiently retrieve and manipulate large datasets, which is essential for in-depth data analysis and management.

Joins and Subqueries

Joins and subqueries play a crucial role in accessing and combining data from multiple tables.

Joins, such as INNER, LEFT, RIGHT, and FULL, create meaningful connections between datasets based on common fields. This technique enhances the ability to view related data in one unified result set.

For example, an INNER JOIN returns only the rows with matching values in both tables, while a LEFT JOIN keeps every row from the left table and fills in NULLs where the right table has no match.
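The difference between INNER and LEFT joins shows up clearly on a customer with no orders. This sketch uses Python's sqlite3 module with hypothetical customers and orders tables in an in-memory SQLite database; it sticks to INNER and LEFT because RIGHT and FULL joins need SQLite 3.39 or later.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Acme'), (2, 'Globex'), (3, 'Initech');
    INSERT INTO orders VALUES (10, 1, 50.0), (11, 1, 75.0), (12, 2, 20.0);
""")

# INNER JOIN: only customers that have at least one matching order.
inner = conn.execute("""
    SELECT c.name, o.amount
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.customer_id
    ORDER BY o.order_id
""").fetchall()

# LEFT JOIN: every customer, with NULL (None in Python) where no order matches.
left = conn.execute("""
    SELECT c.name, o.amount
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.customer_id
    ORDER BY c.customer_id, o.order_id
""").fetchall()

print(inner)  # [('Acme', 50.0), ('Acme', 75.0), ('Globex', 20.0)]
print(left)   # [('Acme', 50.0), ('Acme', 75.0), ('Globex', 20.0), ('Initech', None)]
```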

Subqueries, or nested queries, allow one query to depend on the results of another. These are particularly useful for filtering data.

For instance, a subquery can identify a list of customers who have purchased a specific product, which can then be used by the main query to fetch detailed purchase histories.
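The customer/product scenario above can be sketched with an IN subquery. The purchases table and its rows are hypothetical, and the SQL runs on an in-memory SQLite database via Python's sqlite3 module.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE purchases (purchase_id INTEGER PRIMARY KEY, customer TEXT, product TEXT, amount REAL);
    INSERT INTO purchases (customer, product, amount) VALUES
        ('Acme',    'widget', 10.0),
        ('Acme',    'gadget', 25.0),
        ('Globex',  'gadget', 30.0),
        ('Initech', 'widget', 12.0),
        ('Initech', 'gizmo',   8.0);
""")

# The inner query finds customers who bought a widget; the outer query
# returns the full purchase history for just those customers.
history = conn.execute("""
    SELECT customer, product, amount
    FROM purchases
    WHERE customer IN (SELECT customer FROM purchases WHERE product = 'widget')
    ORDER BY purchase_id
""").fetchall()

print(history)
# [('Acme', 'widget', 10.0), ('Acme', 'gadget', 25.0), ('Initech', 'widget', 12.0), ('Initech', 'gizmo', 8.0)]
```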

Both joins and subqueries are indispensable for advanced data extraction and analysis tasks.

Group By and Having Clauses

The GROUP BY clause organizes rows into groups based on specified columns. Combined with aggregate functions, it returns one summary row per group, such as total sales for each region. This is crucial for summarizing large datasets efficiently.

For example, using GROUP BY with functions like SUM or AVG generates aggregate values that provide insights into data trends.

The HAVING clause filters groups created by GROUP BY based on a specified condition.

Unlike WHERE, which filters individual rows before grouping, HAVING applies conditions to aggregated data.

This allows users to, for example, display only regions with total sales exceeding a certain amount.

The combination of GROUP BY and HAVING is powerful for producing concise and meaningful summary reports.
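Here is a minimal sketch of WHERE, GROUP BY, and HAVING working together on a hypothetical sales table, run on an in-memory SQLite database through Python's sqlite3 module. The threshold values are arbitrary.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, amount REAL);
    INSERT INTO sales VALUES
        ('north', 100), ('north', 250), ('south', 80),
        ('south', 40),  ('west', 500);
""")

big_regions = conn.execute("""
    SELECT region, SUM(amount) AS total_sales
    FROM sales
    WHERE amount >= 50           -- row filter, applied before grouping
    GROUP BY region
    HAVING SUM(amount) > 200     -- group filter, applied after aggregation
    ORDER BY total_sales DESC
""").fetchall()

print(big_regions)  # [('west', 500.0), ('north', 350.0)]
```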

Window Functions and CTEs

Window functions, such as ROW_NUMBER(), RANK(), and aggregates like SUM() used with an OVER clause, operate over a set of rows related to the current row. They perform calculations across the result set without collapsing rows into a single row per group.

They make analytics such as running totals and rankings straightforward.

Window functions thus offer nuanced insights without cumbersome self-joins or subqueries.
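A running total and a per-group ranking can be computed in one query. This sketch assumes a hypothetical daily_sales table and runs on an in-memory SQLite database via Python's sqlite3 module; SQLite supports window functions from version 3.25 onward.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # window functions need SQLite 3.25+
conn.executescript("""
    CREATE TABLE daily_sales (day TEXT, region TEXT, amount INTEGER);
    INSERT INTO daily_sales VALUES
        ('2024-01-01', 'north', 100), ('2024-01-02', 'north', 150),
        ('2024-01-03', 'north', 50),  ('2024-01-01', 'south', 200),
        ('2024-01-02', 'south', 120);
""")

rows = conn.execute("""
    SELECT
        region,
        day,
        amount,
        -- running total within each region, ordered by day
        SUM(amount) OVER (PARTITION BY region ORDER BY day) AS running_total,
        -- rank of each day's sales within its region (1 = highest)
        RANK() OVER (PARTITION BY region ORDER BY amount DESC) AS sales_rank
    FROM daily_sales
    ORDER BY region, day
""").fetchall()

for row in rows:
    print(row)
```

Every input row survives in the output, which is the key difference from GROUP BY.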

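Common Table Expressions (CTEs) are named, temporary result sets, defined with the WITH keyword, that can be referenced within a single SELECT, INSERT, UPDATE, or DELETE statement.

They make queries more readable, and recursive CTEs (written WITH RECURSIVE in PostgreSQL, MySQL, and SQLite) can traverse hierarchical data such as organizational charts.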

For instance, a CTE can simplify a complex join operation by breaking it into simple, reusable parts.
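Both uses of CTEs can be sketched on a hypothetical employees table with a manager_id column, run on an in-memory SQLite database through Python's sqlite3 module: a recursive CTE that walks the reporting chain, and a plain CTE that names an intermediate aggregate.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, manager_id INTEGER);
    INSERT INTO employees VALUES
        (1, 'Dana', NULL),
        (2, 'Eli',  1),
        (3, 'Fay',  1),
        (4, 'Gus',  2),
        (5, 'Hal',  4);
""")

# Recursive CTE: the anchor member selects Eli; the recursive member keeps
# adding the direct reports of every row found so far.
reports = conn.execute("""
    WITH RECURSIVE chain (id, name, depth) AS (
        SELECT id, name, 0 FROM employees WHERE name = 'Eli'
        UNION ALL
        SELECT e.id, e.name, c.depth + 1
        FROM employees AS e
        JOIN chain AS c ON e.manager_id = c.id
    )
    SELECT name, depth FROM chain ORDER BY depth
""").fetchall()

# Non-recursive CTE: name an intermediate result so the final query stays short.
team_sizes = conn.execute("""
    WITH direct_reports AS (
        SELECT manager_id, COUNT(*) AS n
        FROM employees
        WHERE manager_id IS NOT NULL
        GROUP BY manager_id
    )
    SELECT e.name, d.n
    FROM direct_reports AS d
    JOIN employees AS e ON e.id = d.manager_id
    ORDER BY e.name
""").fetchall()

print(reports)     # [('Eli', 0), ('Gus', 1), ('Hal', 2)]
print(team_sizes)  # [('Dana', 2), ('Eli', 1), ('Gus', 1)]
```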

Both window functions and CTEs elevate SQL’s capability to manage intricate queries with clarity and efficiency.

SQL Functions and Operations

Understanding SQL functions and operations is crucial for working with databases. This includes using aggregation functions to handle collections of data, manipulating data with string, date, and number functions, and utilizing logical operators and set operations to refine data analysis.

Aggregation Functions

Aggregation functions are essential for summarizing data in SQL. SUM, COUNT, and AVG are some of the most common functions used.

- SUM: Calculates the total of a numerical column. For example, calculating the total sales in a dataset.

- COUNT: COUNT(*) returns the number of rows, while COUNT(column) counts only the non-NULL values in that column. This helps in scenarios like determining the total number of employees in a database.

- AVG: Computes the average of a numerical column, useful for analyzing average temperature data over time.

These functions often work with GROUP BY to classify results into specified groups, providing insights into data subsets.
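The following sketch combines these aggregates with GROUP BY and shows how NULLs affect them. The employees table is hypothetical and the SQL runs on an in-memory SQLite database through Python's sqlite3 module.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (name TEXT, department TEXT, salary INTEGER);
    INSERT INTO employees VALUES
        ('Ada', 'eng', 100), ('Bo', 'eng', 80),
        ('Cy',  'ops', 60),  ('Di', 'ops', NULL);
""")

summary = conn.execute("""
    SELECT department,
           COUNT(*)      AS headcount,    -- counts every row
           COUNT(salary) AS with_salary,  -- skips NULL salaries
           SUM(salary)   AS total_salary,
           AVG(salary)   AS avg_salary    -- averages non-NULL values only
    FROM employees
    GROUP BY department
    ORDER BY department
""").fetchall()

print(summary)  # [('eng', 2, 2, 180, 90.0), ('ops', 2, 1, 60, 60.0)]
```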

String, Date, and Number Functions

SQL offers a variety of functions to manipulate strings, dates, and numbers.

- String functions: Functions such as CONCAT and SUBSTRING are useful for managing text data.

- Date functions: Provide ways to extract or calculate date values. Functions such as EXTRACT (standard SQL, supported by PostgreSQL and MySQL) or DATEPART (SQL Server) can pull the year, month, or day out of a date.

- Number functions: Functions such as ROUND or CEILING adjust numerical values as needed.

These operations allow more control over data presentation and transformation, making it easier to achieve precise results.
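Function names are one of the places where SQL dialects differ most. This sketch uses SQLite's spellings through Python's sqlite3 module: `||` for concatenation (CONCAT only arrived in SQLite 3.44), `substr()` for SUBSTRING, and `strftime()` in place of EXTRACT or DATEPART. The literal values are arbitrary.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

full_name, prefix, year, month, rounded = conn.execute("""
    SELECT
        'Ada' || ' ' || 'Lovelace',                      -- string concatenation
        substr('Lovelace', 1, 4),                        -- first four characters
        strftime('%Y', '2024-03-15'),                    -- year from a date (as text)
        CAST(strftime('%m', '2024-03-15') AS INTEGER),   -- month as a number
        round(3.14159, 2)                                -- two decimal places
""").fetchone()

print(full_name, prefix, year, month, rounded)  # Ada Lovelace Love 2024 3 3.14
```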

Logical Operators and Set Operations

Logical operators, including AND, OR, and NOT, assist in forming SQL queries that refine results based on multiple conditions. They are crucial for filtering data based on complex conditions.

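Set operations like UNION, INTERSECT, and EXCEPT combine the results of multiple queries.

For example, UNION stacks the rows of two queries that return the same number of columns with compatible types and removes duplicates (UNION ALL keeps them). INTERSECT returns only rows present in both results, and EXCEPT returns rows from the first result that are missing from the second. An ORDER BY at the end sorts the combined output, enhancing data organization.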
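A small sketch of both ideas, assuming two hypothetical yearly customer tables in an in-memory SQLite database accessed through Python's sqlite3 module. Note that NOT binds more loosely than `=`, so `NOT name = 'Acme'` means `NOT (name = 'Acme')`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers_2023 (name TEXT, country TEXT);
    CREATE TABLE customers_2024 (name TEXT, country TEXT);
    INSERT INTO customers_2023 VALUES ('Acme', 'NO'), ('Globex', 'PE'), ('Initech', 'US');
    INSERT INTO customers_2024 VALUES ('Acme', 'NO'), ('Umbrella', 'US');
""")

def names(sql):
    """Run a query and return its first column as a list."""
    return [row[0] for row in conn.execute(sql)]

# Logical operators: US or Norwegian customers, excluding Acme.
filtered = names("""
    SELECT name FROM customers_2023
    WHERE (country = 'US' OR country = 'NO') AND NOT name = 'Acme'
""")

# Set operations: both SELECTs return one compatible column.
union_names = names(
    "SELECT name FROM customers_2023 UNION SELECT name FROM customers_2024 ORDER BY name"
)  # duplicates removed
union_all_count = len(names(
    "SELECT name FROM customers_2023 UNION ALL SELECT name FROM customers_2024"
))  # duplicates kept
both_years = names(
    "SELECT name FROM customers_2023 INTERSECT SELECT name FROM customers_2024"
)
only_2023 = names(
    "SELECT name FROM customers_2023 EXCEPT SELECT name FROM customers_2024 ORDER BY name"
)

print(filtered, union_names, union_all_count, both_years, only_2023)
# ['Initech'] ['Acme', 'Globex', 'Initech', 'Umbrella'] 5 ['Acme'] ['Globex', 'Initech']
```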

These tools make SQL a robust language for complex queries and data retrieval tasks.

Data Manipulation and CRUD Operations

Data manipulation in SQL allows users to interact with database tables efficiently. Key operations include inserting data, updating and deleting records, and managing transactions. These actions are crucial for maintaining and managing data in any database system.

Inserting Data

Inserting data is a fundamental operation where new records are added to a database. This is commonly done using the INSERT statement.

The INSERT command lets users add one or multiple rows into a table.

When inserting records, it is crucial to specify the correct table and make sure each value matches its column's data type.

For example, to insert a new student record, users might enter:

INSERT INTO students (name, age, grade) VALUES ('John Doe', 15, '10th');

Properly inserting data also involves handling any constraints like primary keys or foreign keys to avoid errors and ensure meaningful relationships between tables.
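When inserts come from application code, a common approach is to bind values through placeholders rather than pasting them into the SQL string; this also keeps user input out of the SQL text. The sketch below uses Python's sqlite3 module, whose `?` placeholders and `executemany()` are real APIs, with the hypothetical students table from the example above. In plain SQL, several rows can also be listed in one statement: `INSERT INTO students (name, age, grade) VALUES ('Jane Roe', 16, '11th'), ('Sam Poe', 17, '12th');`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE students (name TEXT NOT NULL, age INTEGER, grade TEXT)")

# One row, with ? placeholders bound to a tuple of values.
conn.execute(
    "INSERT INTO students (name, age, grade) VALUES (?, ?, ?)",
    ("John Doe", 15, "10th"),
)

# Several rows in one call; each tuple is bound to the same statement.
new_students = [("Jane Roe", 16, "11th"), ("Sam Poe", 17, "12th")]
conn.executemany("INSERT INTO students (name, age, grade) VALUES (?, ?, ?)", new_students)
conn.commit()

total = conn.execute("SELECT COUNT(*) FROM students").fetchone()[0]
print(total)  # 3
```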

Updating and Deleting Records

Updating and deleting records are essential for keeping the database current.

The UPDATE statement is used to modify existing data. Users must specify which records to update using conditions with the WHERE clause.

For instance:

UPDATE students SET grade = '11th' WHERE name = 'John Doe';

Deleting records involves the DELETE statement, which removes records from a table. Using DELETE requires caution as it permanently removes data.

Always specify conditions with WHERE to avoid losing all records in a table:

DELETE FROM students WHERE name = 'John Doe';
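One inexpensive safeguard is to check how many rows a statement actually touched. This sketch runs the UPDATE and DELETE above through Python's sqlite3 module on an in-memory SQLite database with hypothetical rows, and reads `cursor.rowcount` afterwards.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE students (name TEXT, age INTEGER, grade TEXT)")
conn.executemany(
    "INSERT INTO students VALUES (?, ?, ?)",
    [("John Doe", 15, "10th"), ("Jane Roe", 16, "11th")],
)

# The WHERE clause limits each statement to the intended row;
# rowcount reports how many rows were changed.
updated = conn.execute(
    "UPDATE students SET grade = ? WHERE name = ?", ("11th", "John Doe")
).rowcount
deleted = conn.execute("DELETE FROM students WHERE name = ?", ("John Doe",)).rowcount
conn.commit()

remaining = conn.execute("SELECT name, grade FROM students").fetchall()
print(updated, deleted, remaining)  # 1 1 [('Jane Roe', '11th')]
```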

Transaction Management

Transaction management ensures data integrity during multiple SQL operations. A transaction is a sequence of operations executed as a single unit.

The BEGIN TRANSACTION command starts a transaction (the exact keyword varies; MySQL, for example, uses START TRANSACTION or BEGIN), followed by the desired SQL statements. COMMIT saves the changes permanently, while ROLLBACK discards them and returns the data to the last committed state.

This process keeps the database consistent and prevents partial changes in case of errors or failures. For example, if a transaction updates several tables and one step fails, a ROLLBACK undoes the changes made by the earlier steps, so the database never reflects a half-finished update.
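A classic illustration is a transfer between two accounts, where the credit and the debit must succeed or fail together. This minimal sketch uses Python's sqlite3 module with `isolation_level=None`, so the BEGIN, COMMIT, and ROLLBACK statements are issued explicitly; the accounts table, its CHECK rule, and the `transfer` helper are hypothetical.

```python
import sqlite3

# isolation_level=None disables the sqlite3 module's implicit transactions,
# so the BEGIN / COMMIT / ROLLBACK statements below are issued explicitly.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("""
    CREATE TABLE accounts (
        owner   TEXT PRIMARY KEY,
        balance INTEGER NOT NULL CHECK (balance >= 0)  -- no overdrafts
    )
""")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])

def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst as one unit; return True if committed."""
    conn.execute("BEGIN TRANSACTION")
    try:
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE owner = ?", (amount, dst))
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE owner = ?", (amount, src))
        conn.execute("COMMIT")
        return True
    except sqlite3.Error:
        conn.execute("ROLLBACK")  # also undoes the credit made by the first UPDATE
        return False

first_ok = transfer(conn, "alice", "bob", 30)   # succeeds: alice 70, bob 80
second_ok = transfer(conn, "bob", "alice", 500) # debit would go negative, so rolled back

balances = dict(conn.execute("SELECT owner, balance FROM accounts ORDER BY owner"))
print(first_ok, second_ok, balances)  # True False {'alice': 70, 'bob': 80}
```

Even though the credit to alice in the second transfer succeeded on its own, the rollback removes it, so no money appears out of nowhere.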

Transaction management is essential in applications where reliability and data accuracy are critical.

Optimizing SQL Queries for Performance

Optimizing SQL queries is vital for improving performance and efficiency. This involves using indexing strategies, analyzing query performance, and utilizing execution plans with optimization hints.

Indexing Strategies

Indexes play a critical role in query optimization. They help databases find data quickly without scanning entire tables.

When a query includes a WHERE clause, using an index on the filtered columns can improve speed significantly.

Types of Indexes:

- Single-column index

- Composite index (covers multiple columns)

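Choosing the right type depends on the query. For instance, a composite index on (region, order_date) can speed up queries that filter on both columns or on region alone; because such an index is ordered by its leading column, it typically does not help queries that filter only on order_date.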

It’s important to note that while indexes can boost performance, they also require maintenance and can slow down write operations like INSERTs and UPDATEs.

Query Performance Analysis

Analyzing query performance involves checking how efficiently a query runs. Tools such as SQL Server Profiler, PostgreSQL's pg_stat_statements extension, and MySQL's slow query log let users monitor queries and identify the ones that take longest to execute.

Steps to Analyze:

- Use execution time statistics to find slow queries.

- Review resource usage like CPU and memory.

- Identify possible bottlenecks or inefficient code patterns.

Regular monitoring can reveal trends and help prioritize optimizations. Improving query logic, limiting data retrieval, and reducing unnecessary complexity can lead to substantial performance gains.

Execution Plans and Optimization Hints

Execution plans provide insights into how a database executes a query and what operations it performs. These plans show important details like join types and sequence of operations.

Using an Execution Plan:

- Identify costly operations or scans.

- Check if the plan uses indexes effectively.
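Both checks can be tried with SQLite's EXPLAIN QUERY PLAN, SQLite's lightweight counterpart to EXPLAIN in PostgreSQL and MySQL. This sketch, run through Python's sqlite3 module on a hypothetical orders table, compares the plan for the same query before and after adding an index. The exact wording of the plan text varies between SQLite versions, so the comments show typical output rather than guaranteed strings.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("west" if i % 4 == 0 else "east", float(i)) for i in range(1000)],
)

query = "SELECT id, amount FROM orders WHERE region = 'west'"

def plan(sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

before = plan(query)  # typically a full-table step such as 'SCAN orders'
conn.execute("CREATE INDEX idx_orders_region ON orders (region)")
after = plan(query)   # typically 'SEARCH orders USING INDEX idx_orders_region (region=?)'

print(before)
print(after)
```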

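In some cases, developers can use optimization hints to suggest specific strategies to the database. For instance, forcing a particular index can help if the optimizer chooses a less efficient path. Hint support varies by system: MySQL provides index hints such as FORCE INDEX, SQL Server supports table hints, and PostgreSQL has no built-in hint syntax.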

Both execution plans and optimization hints are powerful tools that, when used correctly, can lead to significant improvements in query performance.

Data Engineering with SQL

Data engineering often relies on SQL to handle large datasets efficiently. This involves integrating data, optimizing storage solutions, and managing data flow through complex systems.

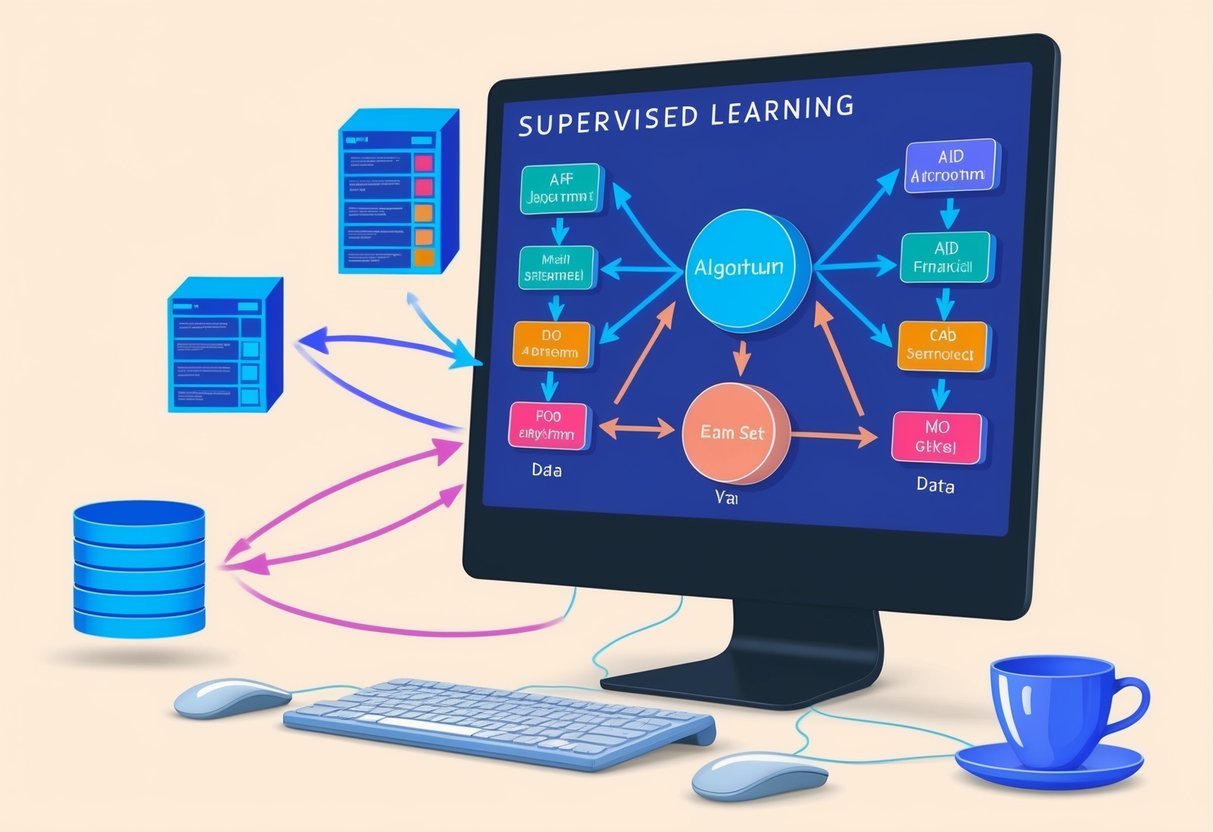

ETL Processes

ETL (Extract, Transform, Load) processes are vital in data engineering. They help extract data from various sources, transform it for analysis, and load it into databases. SQL plays a key role in each step.

Extraction with SQL queries allows filtering of relevant data. During transformation, SQL functions help clean and normalize the data, ensuring it fits the desired structure. Finally, loading involves inserting transformed data into a data warehouse or another storage system, ready for analysis.
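The three steps can be sketched as a single INSERT INTO ... SELECT. This example assumes a hypothetical raw_signups landing table and a cleaned signups target, both in an in-memory SQLite database driven from Python's sqlite3 module; real pipelines usually move data between separate systems, which this sketch does not attempt.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical raw landing table, loaded as-is from a source system.
    CREATE TABLE raw_signups (email TEXT, country TEXT, signup_date TEXT);
    INSERT INTO raw_signups VALUES
        ('  Ada@Example.com ', 'no', '2024-03-15'),
        ('bo@example.com',     'PE', '2024-03-16'),
        (NULL,                 'US', '2024-03-16'),   -- unusable row
        ('ADA@example.com',    'NO', '2024-03-15');   -- duplicate after cleaning

    -- Hypothetical cleaned target table.
    CREATE TABLE signups (
        email       TEXT PRIMARY KEY,
        country     TEXT NOT NULL,
        signup_date TEXT NOT NULL
    );
""")

# Extract (SELECT ... WHERE), transform (trim/lower/upper, DISTINCT),
# and load (INSERT INTO ... SELECT) in one statement.
conn.execute("""
    INSERT INTO signups (email, country, signup_date)
    SELECT DISTINCT lower(trim(email)), upper(country), signup_date
    FROM raw_signups
    WHERE email IS NOT NULL
""")
conn.commit()

loaded = conn.execute("SELECT * FROM signups ORDER BY email").fetchall()
print(loaded)
# [('ada@example.com', 'NO', '2024-03-15'), ('bo@example.com', 'PE', '2024-03-16')]
```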

Using SQL for ETL provides efficiency and scalability, which are crucial in handling big data projects.

Data Warehousing Concepts

Data warehouses store large volumes of historical data from multiple sources. SQL is fundamental in querying and managing these warehouses.

It enables complex queries over large datasets, supporting business intelligence and reporting tasks. Using SQL, data engineers can create schemas that define the structure of data storage. They can also implement indexing and partitioning, which improve query performance.

Data warehouses often integrate with big data tools like Hadoop, enhancing their ability to handle massive datasets.

Ultimately, SQL’s role in data warehousing is to ensure that data remains organized, accessible, and secure, which is crucial for informed decision-making processes.

Building and Managing Data Pipelines

Data pipelines automate data flow between systems, often spanning multiple stages. They are essential for continuous data processing and delivery.

In building these pipelines, SQL is used to query and manipulate data at various steps. For instance, SQL scripts can automate data transformation tasks within pipelines. They can also integrate with scheduling tools to ensure timely data updates.
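One common pattern for a SQL step inside a pipeline is an idempotent "upsert", so that re-running a batch after a failure does not create duplicates. The sketch below uses SQLite's `INSERT ... ON CONFLICT ... DO UPDATE` (available from SQLite 3.24; PostgreSQL has the same form, MySQL uses `ON DUPLICATE KEY UPDATE`) through Python's sqlite3 module. The product_prices table and the `load_batch` helper are hypothetical, and scheduling is left out.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # ON CONFLICT ... DO UPDATE needs SQLite 3.24+
conn.execute("CREATE TABLE product_prices (sku TEXT PRIMARY KEY, price REAL, updated_at TEXT)")

UPSERT = """
    INSERT INTO product_prices (sku, price, updated_at) VALUES (?, ?, ?)
    ON CONFLICT (sku) DO UPDATE SET
        price = excluded.price,
        updated_at = excluded.updated_at
"""

def load_batch(conn, batch):
    """Hypothetical pipeline step: apply one batch of source rows idempotently."""
    with conn:  # commits on success, rolls back if any row fails
        conn.executemany(UPSERT, batch)

load_batch(conn, [("A1", 9.99, "2024-03-15"), ("B2", 4.50, "2024-03-15")])
load_batch(conn, [("A1", 8.99, "2024-03-16")])  # price change for an existing SKU
load_batch(conn, [("A1", 8.99, "2024-03-16")])  # re-running a batch changes nothing

prices = conn.execute("SELECT * FROM product_prices ORDER BY sku").fetchall()
print(prices)  # [('A1', 8.99, '2024-03-16'), ('B2', 4.5, '2024-03-15')]
```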

Managing pipelines requires monitoring for performance bottlenecks and errors, ensuring data integrity. SQL’s ability to handle complex queries aids in maintaining smooth operations within the pipelines.

These processes are vital for delivering timely analytics, which data-driven businesses depend on.

Integrating SQL with Other Technologies

Integrating SQL with various technologies enhances data engineering capabilities. These integrations enable seamless data manipulation, storage, and visualization, crucial for comprehensive data solutions.

SQL and Python Programming

SQL and Python are often used together to streamline data manipulation and analysis. Python’s libraries like Pandas and SQLAlchemy allow users to interact with databases efficiently. They provide tools to execute SQL queries within Python scripts, automating data workflows.

SQL handles data storage and retrieval, while Python processes and visualizes data. This combination offers robust solutions, particularly beneficial in data science and data engineering.
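That division of labor can be sketched with only the standard library: SQL filters and retrieves the rows, and Python computes a median, which core SQLite has no built-in aggregate for. The readings table is hypothetical. With pandas installed, `pandas.read_sql_query(sql, conn)` would return the same query result as a DataFrame instead of a list of tuples.

```python
import sqlite3
from statistics import median

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE readings (sensor TEXT, reading_date TEXT, temp_c REAL);
    INSERT INTO readings VALUES
        ('s1', '2024-03-01', 4.0), ('s1', '2024-03-02', 6.0), ('s1', '2024-03-03', 11.0),
        ('s2', '2024-03-01', 9.0), ('s2', '2024-03-02', 7.0);
""")

# SQL: filter and retrieve only the rows needed, with a bound parameter.
rows = conn.execute(
    "SELECT sensor, temp_c FROM readings WHERE reading_date >= ? ORDER BY sensor",
    ("2024-03-02",),
).fetchall()

# Python: further processing, here a per-sensor median.
by_sensor = {}
for sensor, temp in rows:
    by_sensor.setdefault(sensor, []).append(temp)
medians = {sensor: median(temps) for sensor, temps in by_sensor.items()}

print(medians)  # {'s1': 8.5, 's2': 7.0}
```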

It allows professionals to build powerful data pipelines, integrate data from various sources, and perform advanced analytics.

Leveraging SQL with Cloud Computing

SQL’s integration with cloud computing services enhances scalability and flexibility. Platforms like Amazon Web Services (AWS) and Google Cloud offer managed SQL databases, such as Amazon RDS and Google Cloud SQL, to store and process large datasets efficiently.

Cloud-based SQL databases can be scaled up or down without the customer buying or managing physical hardware. This enables businesses to manage and analyze large amounts of data, in some cases in near real time.

Cloud services also provide backup and recovery solutions, ensuring data security and integrity. Leveraging such technologies helps organizations streamline operations and reduce infrastructure costs.

Connectivity with Data Visualization Tools

SQL plays a crucial role in connecting with data visualization tools like Power BI and Tableau. These tools use SQL to fetch data from databases, allowing users to create dynamic, interactive dashboards.

SQL queries retrieve precise data, which can be visualized to uncover trends and insights. This connection empowers users to perform in-depth analyses and present data in visually appealing formats.

Data visualization tools facilitate decision-making by transforming raw data into actionable insights. This integration is vital for businesses to communicate complex information effectively.

Advanced Concepts in SQL

Advanced SQL skills include tools such as stored procedures, triggers, views, materialized views, and dynamic SQL. These concepts provide powerful ways to manipulate and optimize data handling. Understanding them can enhance efficiency and flexibility in data engineering tasks.

Stored Procedures and Triggers

Stored procedures are named sets of SQL statements stored in the database and executed on demand; some systems also precompile them or cache their execution plans. Because the logic runs inside the database, they can reduce network round trips between the application and the server. Stored procedures also promote code reuse and consistency in database operations.

Triggers are automatic actions set off by specific events like data changes, allowing for automated enforcement of rules and data validation. Both stored procedures and triggers can be pivotal in managing complex data operations, ensuring processes run smoothly and effectively.
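SQLite supports triggers but not stored procedures (whose syntax differs widely, for example PL/pgSQL in PostgreSQL and T-SQL in SQL Server), so this sketch covers triggers only. It uses Python's sqlite3 module and hypothetical employees and salary_audit tables; the trigger records every actual salary change automatically.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER);
    CREATE TABLE salary_audit (
        employee_id INTEGER,
        old_salary  INTEGER,
        new_salary  INTEGER,
        changed_at  TEXT DEFAULT CURRENT_TIMESTAMP
    );

    -- Fires automatically after a salary update that changes the value.
    CREATE TRIGGER log_salary_change
    AFTER UPDATE OF salary ON employees
    WHEN OLD.salary <> NEW.salary
    BEGIN
        INSERT INTO salary_audit (employee_id, old_salary, new_salary)
        VALUES (OLD.id, OLD.salary, NEW.salary);
    END;

    INSERT INTO employees (name, salary) VALUES ('Ada', 100);
""")

conn.execute("UPDATE employees SET salary = 120 WHERE name = 'Ada'")
conn.execute("UPDATE employees SET salary = 120 WHERE name = 'Ada'")  # no change, no audit row

audit = conn.execute("SELECT employee_id, old_salary, new_salary FROM salary_audit").fetchall()
print(audit)  # [(1, 100, 120)]
```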

Views and Materialized Views

Views are virtual tables representing a saved SQL query. They help simplify complex queries, maintaining abstraction while allowing users to retrieve specific data sets without altering the underlying tables. Views are widely used to ensure security, hiding certain data elements while exposing only the needed information.

Materialized views, unlike regular views, store actual data, offering faster query performance. They are beneficial when dealing with large data sets and are often refreshed periodically to reflect data changes.
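The contrast between the two can be sketched in SQLite through Python's sqlite3 module. A regular view here hides a salary column and always reflects current data. SQLite has no materialized views, so the sketch emulates one with a table built from a query plus a hypothetical refresh function; in PostgreSQL the equivalent would be CREATE MATERIALIZED VIEW and REFRESH MATERIALIZED VIEW.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (name TEXT, department TEXT, salary INTEGER);
    INSERT INTO employees VALUES ('Ada', 'eng', 100), ('Bo', 'eng', 80), ('Cy', 'ops', 60);

    -- Regular view: a saved query that exposes names and departments only.
    CREATE VIEW employee_directory AS
        SELECT name, department FROM employees;

    -- Stand-in for a materialized view: results stored as a real table.
    CREATE TABLE dept_payroll_mv AS
        SELECT department, SUM(salary) AS total FROM employees GROUP BY department;
""")

def refresh_dept_payroll(conn):
    """Rebuild the stand-in materialized view from the base table."""
    with conn:
        conn.execute("DELETE FROM dept_payroll_mv")
        conn.execute(
            "INSERT INTO dept_payroll_mv "
            "SELECT department, SUM(salary) FROM employees GROUP BY department"
        )

conn.execute("INSERT INTO employees VALUES ('Di', 'ops', 70)")
conn.commit()

view_rows = conn.execute("SELECT * FROM employee_directory ORDER BY name").fetchall()  # includes Di
stale = conn.execute("SELECT total FROM dept_payroll_mv WHERE department = 'ops'").fetchone()[0]
refresh_dept_payroll(conn)
fresh = conn.execute("SELECT total FROM dept_payroll_mv WHERE department = 'ops'").fetchone()[0]

print(view_rows, stale, fresh)
```

The view picks up the new row immediately, while the stored copy stays at the old total until it is refreshed; that is the trade-off between freshness and query speed described above.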

Using views and materialized views wisely can greatly enhance how data is accessed and managed.

Dynamic SQL and Metadata Operations

Dynamic SQL is an advanced feature enabling the creation of SQL statements dynamically at runtime. It provides flexibility when dealing with changing requirements or when the exact query structure is unknown until runtime. This ability makes it valuable for complex applications.

Metadata operations work with data about data, such as the table and column definitions recorded in system catalogs (information_schema in PostgreSQL and MySQL), and are crucial for automating database tasks such as schema updates. These operations are central to data dictionary maintenance and help database systems adapt to evolving data structures.

Combining dynamic SQL with metadata operations allows for more adaptive and robust database management.
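A minimal sketch of the combination: read table names from the catalog, then build a statement for each one at runtime. It uses SQLite's sqlite_master catalog through Python's sqlite3 module, with hypothetical tables. Table and column names cannot be bound as `?` parameters, so the hypothetical `quote_ident` helper quotes them instead, which matters whenever names come from outside the code.

```python
import sqlite3

def quote_ident(name):
    """Quote an SQLite identifier: wrap in double quotes, double any embedded quotes."""
    return '"' + name.replace('"', '""') + '"'

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customers (name) VALUES ('Acme'), ('Globex');
    INSERT INTO orders (customer_id) VALUES (1);
""")

# Metadata: sqlite_master is SQLite's catalog of schema objects.
tables = [row[0] for row in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
)]

# Dynamic SQL: the table name is known only at runtime, so the
# statement text is assembled as a string with a quoted identifier.
row_counts = {
    table: conn.execute(f"SELECT COUNT(*) FROM {quote_ident(table)}").fetchone()[0]
    for table in tables
}

print(row_counts)  # {'customers': 2, 'orders': 1}
```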

Ensuring Data Security and Compliance

Ensuring data security and compliance involves protecting databases against unauthorized access and adhering to legal requirements. This area is crucial in mitigating risks and maintaining trust in data systems.

Implementing Database Security Measures

Database security involves implementing measures like access controls, encryption, and strong authentication. Access controls ensure that only authorized users can interact with the data.

Encrypting sensitive information helps in protecting it during storage and transmission. Firewalls and Intrusion Detection Systems (IDS) further enhance security by monitoring and alerting on suspicious activities.

Regular updates and patches are essential to address vulnerabilities. Restricting who may run DDL statements (CREATE, ALTER, DROP) prevents unwanted changes to schemas and structures. Backup strategies ensure data can be recovered after breaches or failures.

Data Privacy Regulations

Data privacy regulations such as the GDPR in the European Union and the CCPA in California set standards for data protection. Among other things, they require organizations to tell users what data is collected and how it is used, and to obtain consent or offer an opt-out where the law requires it.

Non-compliance can lead to substantial fines and damage to reputation. Companies must implement policies that align with these regulations, ensuring personal data is only accessible to those with proper authorization. Data minimization is a key concept, reducing the amount of personal data collected and stored.

Auditing and Monitoring Database Activity

Auditing and monitoring involve tracking access and modifications to the database. This helps in detecting unauthorized activities and ensuring compliance with data security policies.

Regular audits can identify potential security gaps. Monitoring tools can log who accessed data, when, and what changes were made. Automated alerts can be set up for unusual activity patterns.

This continuous oversight is crucial in maintaining accountability and transparency in data handling. Frequent reviews of audit logs help in understanding usage patterns and enhancing security protocols.

Frequently Asked Questions

Beginners in data engineering often seek guidance on effective learning resources for SQL, key concepts to focus on, and practical ways to enhance their skills.

This section provides answers to common questions, helping newcomers navigate their learning journey in SQL for data engineering.

What are the best resources for a beginner to learn SQL for data engineering?

Beginners can benefit from interactive platforms like DataCamp and SQLZoo, which offer structured lessons and practical exercises. Additionally, Interview Query provides insights into typical interview questions, helping learners understand the practical application of SQL in data engineering.

Which SQL concepts are crucial for beginners to understand when starting a career in data engineering?

Key concepts include understanding basic SQL queries, data manipulation using DML (Data Manipulation Language), and the importance of DDL (Data Definition Language) for database structure.

Proficiency in these areas lays the foundation for more advanced topics such as ETL processes and performance tuning.

How can beginners practice SQL coding to enhance their data engineering skills?

Hands-on practice is essential. Beginners can use platforms like LeetCode and HackerRank that offer SQL challenges to reinforce learning.

Regular practice helps improve problem-solving skills and exposes learners to real-world scenarios.

Where can someone find SQL exercises tailored for data engineering?

Exercises tailored for data engineering can be found on platforms such as StrataScratch, which provides problem sets designed to simulate data engineering tasks. These exercises help bridge the gap between theory and practical application.

What is the recommended learning path for beginners interested in SQL for data engineering?

A structured learning path involves starting with basic SQL syntax and gradually moving to advanced topics like joins, subqueries, and indexes. Understanding ETL processes is crucial.

This progression ensures a well-rounded comprehension suitable for data engineering roles.

Are there any books or online courses highly recommended for learning beginner-level SQL for aspiring data engineers?

Books such as “SQL for Data Scientists” offer a foundational understanding.

Online courses from platforms like Coursera and edX provide comprehensive curricula.

These resources cater to varying learning styles and offer practical exercises to solidify knowledge.