Understanding Polynomial Regression

Polynomial regression extends linear regression by introducing higher-degree terms, allowing for the modeling of nonlinear relationships.

This technique captures patterns in data that linear models might miss, offering a more flexible framework for prediction.

Key Concepts Behind Polynomial Regression

Polynomial regression fits a relationship between a dependent variable and an independent variable using an nth-degree polynomial. The equation can be represented as:

y = β₀ + β₁x + β₂x² + … + βₙxⁿ

In this equation, y is the dependent variable, x is the independent variable, and the coefficients (β₀, β₁, β₂, …, βₙ) are estimated from the training data, typically by least squares.

These coefficients let the model capture curved patterns. A higher degree adds more polynomial terms, so the curve can follow the training data more closely. Past a certain point, though, it starts fitting noise rather than the underlying trend.
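Because the model is linear in the coefficients, the β values can be estimated with ordinary least squares on a design matrix whose columns are 1, x, x², …, xⁿ. Here is a minimal NumPy sketch. It assumes noise-free synthetic data generated from y = 1 + 2x + 3x², which is purely illustrative:

```python
import numpy as np

# Noise-free synthetic data from y = 1 + 2x + 3x^2 (illustrative assumption)
x = np.linspace(-2.0, 2.0, 25)
y = 1.0 + 2.0 * x + 3.0 * x**2

degree = 2
# Design matrix with columns [1, x, x^2]; increasing=True puts the constant column first
X = np.vander(x, N=degree + 1, increasing=True)

# Ordinary least squares: minimize ||y - X @ beta||^2
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
print(beta)  # estimates of beta_0, beta_1, beta_2
```

With real, noisy data the estimates would only approximate the true coefficients.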

Regularization techniques like Ridge or Lasso can help prevent overfitting by controlling the complexity of the polynomial model.

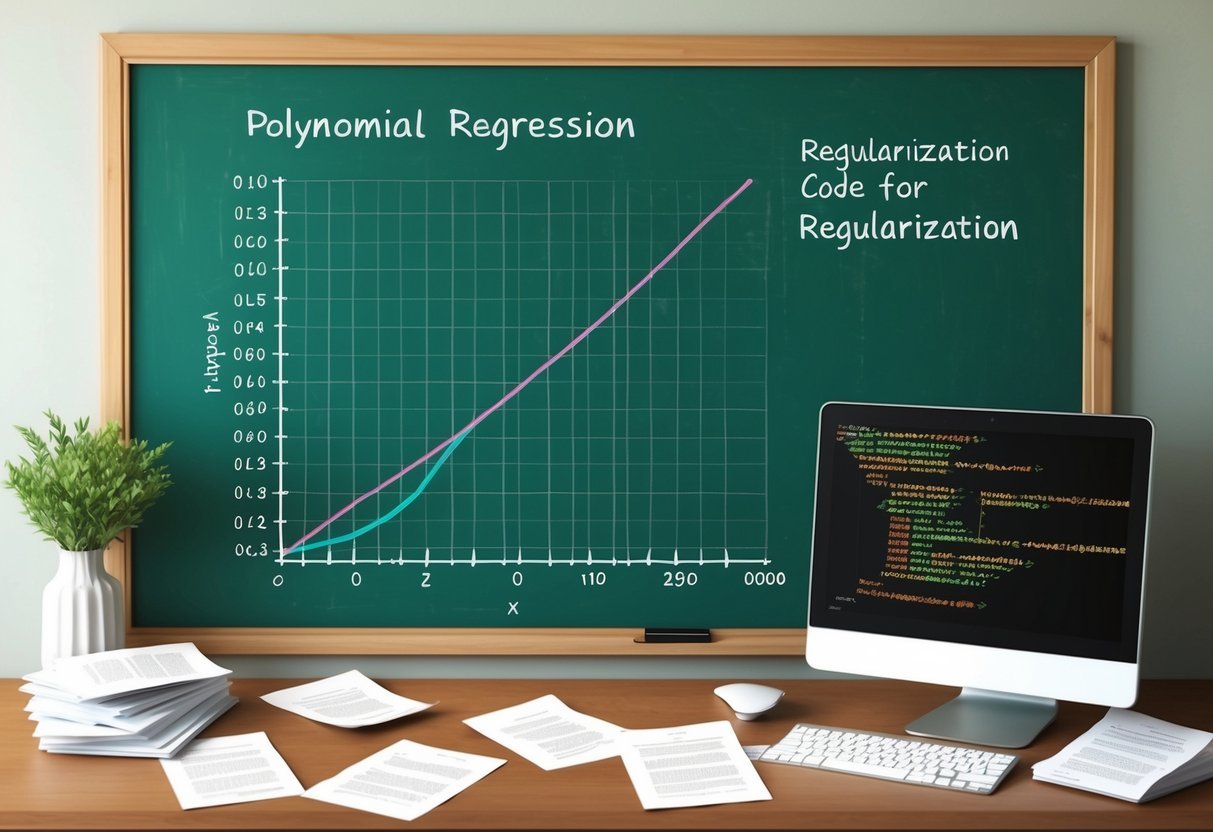

Differences Between Linear and Polynomial Regression

Linear regression assumes a straight-line relationship between variables, while polynomial regression allows for curved patterns. The key difference is the flexibility in capturing the data’s trends.

In linear regression, predictions are made by fitting the best line through the dataset using a first-degree polynomial.

Polynomial regression, on the other hand, adds higher-power terms such as x², x³, and so on to the equation, which introduces curvature. This helps model datasets where the relationship between variables is nonlinear, improving the model’s accuracy in such cases.
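One quick way to see the difference is to fit a straight line and a quadratic to the same curved data and compare their training mean squared error. The NumPy sketch below uses a made-up curve with Gaussian noise. The quadratic contains the line as a special case, so its training error can never be higher.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.linspace(-3.0, 3.0, 60)
# Curved relationship plus Gaussian noise (illustrative assumption)
y = 0.5 * x**2 - x + rng.normal(scale=0.3, size=x.size)

def train_mse(degree):
    # np.polyfit returns least-squares polynomial coefficients, highest power first
    coeffs = np.polyfit(x, y, deg=degree)
    y_hat = np.polyval(coeffs, x)
    return np.mean((y - y_hat) ** 2)

linear_mse = train_mse(1)
quadratic_mse = train_mse(2)
print(f"linear MSE: {linear_mse:.3f}, quadratic MSE: {quadratic_mse:.3f}")
```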

The Need for Regularization

Regularization is crucial to ensure that machine learning models perform well on new data. It addresses key issues that can arise during model training, especially overfitting and the bias-variance tradeoff.

Preventing Overfitting in Model Training

Overfitting happens when a model learns the noise in the training data too well. It performs with high accuracy on the training set but poorly on unseen data. This occurs because the model is too complex for the task at hand.

Regularization techniques, such as L1 and L2 regularization, help mitigate overfitting by adding a penalty for using large coefficients.

For example, ridge regression implements L2 regularization to keep model weights small, reducing complexity and maintaining performance on new data.

By controlling overfitting, regularization helps create models that generalize better, leading to more accurate predictions on different datasets.
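A small experiment makes the train/test gap visible. The sketch below uses synthetic data from a noisy sine curve, chosen only for illustration. It fits polynomials of degree 1, 3 and 15 with scikit-learn and reports mean squared error on the training half and on the held-out half. Exact numbers depend on the random seed. The usual pattern is that the degree-15 fit has the lowest training error but not the lowest test error.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

rng = np.random.default_rng(42)
X = rng.uniform(-1.0, 1.0, size=(40, 1))
y = np.sin(3.0 * X[:, 0]) + rng.normal(scale=0.2, size=40)  # synthetic data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5, random_state=0)

results = {}
for degree in (1, 3, 15):
    # Expand to x, x^2, ..., x^degree, standardize, then fit unregularized least squares
    model = make_pipeline(
        PolynomialFeatures(degree, include_bias=False),
        StandardScaler(),
        LinearRegression(),
    )
    model.fit(X_train, y_train)
    results[degree] = (
        mean_squared_error(y_train, model.predict(X_train)),  # training error
        mean_squared_error(y_test, model.predict(X_test)),    # held-out error
    )
    print(degree, results[degree])
```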

Balancing Bias and Variance Tradeoff

The bias-variance tradeoff is a critical concept in model training. High bias can cause models to be too simple, missing important patterns and exhibiting underfitting. Conversely, high variance makes models too complex, leading to overfitting.

Regularization helps strike the right balance between bias and variance. In polynomial regression, the regularization strength works alongside the polynomial degree as a control on model complexity.

By penalizing complexity, regularization reduces variance at the cost of a small increase in bias. Tuning the penalty strength lets the model capture the essential patterns without becoming overly sensitive to noise in the training data.
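The sketch below shows this trade-off for a degree-10 polynomial fitted with scikit-learn's Ridge on synthetic data, which is an illustrative assumption. In scikit-learn, `alpha` is the name for the penalty strength. As `alpha` grows, the coefficient norm shrinks and the training error rises. That rise is the extra bias traded for lower variance. Features are standardized first because raw powers of x differ widely in scale, and the penalty treats all coefficients alike.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(30, 1))
y = np.sin(3.0 * X[:, 0]) + rng.normal(scale=0.2, size=30)  # synthetic data

coef_norms, train_mses = [], []
for alpha in (0.001, 0.1, 10.0):
    model = make_pipeline(
        PolynomialFeatures(10, include_bias=False),
        StandardScaler(),
        Ridge(alpha=alpha),
    )
    model.fit(X, y)
    coef = model[-1].coef_  # coefficients of the final Ridge step
    coef_norms.append(np.linalg.norm(coef))
    train_mses.append(np.mean((y - model.predict(X)) ** 2))
    print(f"alpha={alpha}: ||w||={coef_norms[-1]:.2f}, train MSE={train_mses[-1]:.4f}")
```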

Core Principles of Regularization Techniques

Regularization techniques are essential for reducing overfitting in machine learning models. These techniques help balance simplicity and accuracy by adding a penalty term to the cost function, ensuring the model remains generalizable to new data.

Understanding L1 and L2 Regularization

L1 and L2 regularization are two widely used techniques to constrain model complexity.

L1 regularization, or Lasso, adds an absolute value penalty to the loss function, which can lead to sparse models by driving some weights to zero.

L2 regularization, known as Ridge regression, adds a squared magnitude penalty to the loss function.

It helps with multicollinearity and keeps coefficients from growing too large by shrinking all of them toward zero without setting any exactly to zero. This suits situations where all input features are expected to be relevant.

This technique makes the model more stable and reduces variance, leading to better performance on unseen data.
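Consider the degree-n model defined earlier, fit to m training points (xᵢ, yᵢ). Its two penalized objectives can be written as shown below. This is a sketch of one common convention: libraries differ in scaling factors such as 1/m or 1/2. The intercept β₀ is usually left unpenalized, as it is here.

```latex
\hat{\beta}^{\text{lasso}} = \arg\min_{\beta} \; \sum_{i=1}^{m} \Big( y_i - \beta_0 - \sum_{j=1}^{n} \beta_j x_i^{j} \Big)^2 + \lambda \sum_{j=1}^{n} \lvert \beta_j \rvert

\hat{\beta}^{\text{ridge}} = \arg\min_{\beta} \; \sum_{i=1}^{m} \Big( y_i - \beta_0 - \sum_{j=1}^{n} \beta_j x_i^{j} \Big)^2 + \lambda \sum_{j=1}^{n} \beta_j^{2}
```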

Ridge regression is covered in more detail later in this article.

Insights into Elastic Net Regularization

Elastic Net combines both L1 and L2 penalties in its regularization approach.

This technique is particularly useful when dealing with datasets with numerous correlated features.

The combination allows Elastic Net to handle scenarios where Lasso might select only one feature from a group of correlated ones, while Ridge would include all, albeit small, coefficients.
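Two identical feature columns give an extreme case that illustrates this difference. The sketch below uses synthetic data and illustrative `alpha` values to fit scikit-learn's Lasso and ElasticNet. Lasso puts all the weight on one copy. The L2 part of Elastic Net splits the weight evenly between the two copies.

```python
import numpy as np
from sklearn.linear_model import ElasticNet, Lasso

rng = np.random.default_rng(0)
x = rng.normal(size=200)
# Two perfectly correlated features: the same column repeated (illustrative extreme case)
X = np.column_stack([x, x])
y = 3.0 * x + rng.normal(scale=0.1, size=200)

lasso = Lasso(alpha=0.5).fit(X, y)
enet = ElasticNet(alpha=1.0, l1_ratio=0.5).fit(X, y)

print("Lasso coefficients:      ", lasso.coef_)  # weight lands on one column, the other is ~0
print("Elastic Net coefficients:", enet.coef_)   # weight shared between the two columns
```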

Elastic Net effectively balances feature reduction with generalization by tuning two hyperparameters: one for the L1 ratio and another for the strength of the penalty.

It is especially useful in high-dimensional datasets where the number of predictors exceeds the number of observations.

This makes Elastic Net a flexible and powerful tool, incorporating strengths from both L1 and L2 regularization while mitigating their individual weaknesses.

Exploring L1 Regularization: Lasso Regression

Lasso regression is a type of linear regression that uses L1 regularization to prevent overfitting. This technique adds a penalty to the model’s coefficient estimates. It encourages the model to reduce the importance of less relevant features by setting their coefficients to zero.

L1 regularization, also known as lasso regularization, involves a penalty term based on the L1 norm. This penalty is the sum of the absolute values of the coefficients. As a result, feature selection is effectively performed during model training.

In the context of machine learning, lasso regression is valued for its simplicity and ability to handle situations where only a few features are relevant.

By making some coefficients zero, it automates the selection of the most important features, helping to simplify the model.

The selection of specific features is influenced by the regularization parameter, which controls the strength of the penalty. A larger penalty makes the model more sparse by zeroing out more coefficients, thus performing stricter feature selection.
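The sketch below shows how the regularization parameter affects sparsity, using scikit-learn's Lasso on degree-8 polynomial features. The data come from a hypothetical relationship that uses only x and x³. The exact counts printed depend on the data. A large enough `alpha`, however, drives every coefficient to zero.

```python
import numpy as np
from sklearn.linear_model import Lasso
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

rng = np.random.default_rng(3)
X = rng.uniform(-1.0, 1.0, size=(100, 1))
# True relationship uses only x and x^3 (illustrative assumption)
y = 2.0 * X[:, 0] - 1.5 * X[:, 0] ** 3 + rng.normal(scale=0.1, size=100)

nonzero = {}
for alpha in (0.001, 0.01, 0.1, 10.0):
    model = make_pipeline(
        PolynomialFeatures(degree=8, include_bias=False),
        StandardScaler(),
        Lasso(alpha=alpha, max_iter=50_000),
    )
    model.fit(X, y)
    nonzero[alpha] = int(np.count_nonzero(model[-1].coef_))
    print(f"alpha={alpha}: {nonzero[alpha]} of 8 coefficients are nonzero")
```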

Overall, lasso regression is a powerful tool when the goal is to create a simpler model that still captures the essential patterns in the data. By focusing only on the most impactful variables, it helps create models that are easier to interpret and apply successfully in various contexts.

Exploring L2 Regularization: Ridge Regression

Ridge regression, which applies L2 regularization, adds a penalty proportional to the sum of the squared coefficients. This penalty term helps prevent overfitting by discouraging overly complex models. By including it, ridge regression can improve the model’s performance on unseen data.

The penalty term is the squared L2 norm of the coefficients, \(\|w\|_2^2\). Adding it to the linear regression objective introduces a regularization strength parameter, usually denoted \(\lambda\), so the model minimizes the squared error plus \(\lambda \|w\|_2^2\). A higher value of \(\lambda\) means stronger regularization.

| Term | Description |
|---|---|
| Ridge Regression | A type of linear regression that includes L2 regularization. |
| Squared L2 Norm | The sum of the squared coefficients, used as the penalty. |
| Penalty Term | The squared L2 norm scaled by λ, added to the loss to limit model complexity. |
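The ridge objective ‖y − Xw‖₂² + λ‖w‖₂² is a smooth quadratic, so it has a closed-form minimizer, w = (XᵀX + λI)⁻¹Xᵀy. The sketch below computes it with NumPy on synthetic data with a cubic design. It then checks the result against scikit-learn's Ridge, whose documented objective has the same form with `alpha` in place of λ. For simplicity, this sketch penalizes the intercept column too. By contrast, scikit-learn's default `fit_intercept=True` leaves the intercept unpenalized.

```python
import numpy as np
from sklearn.linear_model import Ridge

rng = np.random.default_rng(7)
x = rng.uniform(-1.0, 1.0, size=50)
y = 1.0 + 2.0 * x - 3.0 * x**2 + rng.normal(scale=0.1, size=50)  # synthetic data

# Polynomial design matrix [1, x, x^2, x^3]; the constant column is penalized here for simplicity
X = np.vander(x, N=4, increasing=True)
lam = 0.5

# Closed-form ridge solution, computed with solve() rather than an explicit inverse
w_closed = np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

# Ridge minimizes ||y - Xw||_2^2 + alpha * ||w||_2^2; fit_intercept=False matches the setup above
w_sklearn = Ridge(alpha=lam, fit_intercept=False).fit(X, y).coef_
print(w_closed)
print(w_sklearn)
```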

In machine learning, ridge regression is popular for its ability to handle multicollinearity—where predictor variables are highly correlated. This trait makes it suitable for datasets with many features, reducing the variance of estimates.

Ridge regularization is particularly useful when fitting polynomial models. These models often risk overfitting, but ridge regression controls this by penalizing large coefficients. It therefore helps balance the bias-variance trade-off and makes model performance more reliable.

With a well-chosen λ, ridge regression provides a robust way to fit models, including complex ones, and supports accurate, reliable predictions. Explore more about ridge regression on IBM’s Ridge Regression page.

Combined Approaches: Elastic Net Regression

Elastic Net Regression is a hybrid technique that merges the strengths of two methods: L1 and L2 regularization. This combination aims to enhance the ability to handle datasets with many features, some of which might be irrelevant.

These regularizations apply penalties to the model’s coefficients. The L1 norm, from Lasso, promotes sparsity by setting some coefficients exactly to zero. The L2 norm, from Ridge, shrinks all coefficients smoothly toward zero without eliminating them.

The Elastic Net model incorporates both norms through a mixing parameter, which controls how much of each penalty to apply. This mix can be tuned to suit the training data.

A valuable feature of Elastic Net is its ability to reduce overfitting by controlling large coefficients. This results in a smoother prediction curve. This approach is beneficial when working with datasets that contain multicollinearity, where features are highly correlated.

Here’s a simple representation:

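| Regularization Type | Penalty | Effect on Coefficients |
|---|---|---|
| L1 (Lasso) | λ‖β‖₁ (sum of absolute values) | Promotes sparsity |
| L2 (Ridge) | λ‖β‖₂² (sum of squares) | Shrinks coefficients smoothly |
| Elastic Net | λ[α‖β‖₁ + (1 − α)‖β‖₂²] | Combines both effects |

Here λ sets the overall penalty strength, and α, between 0 and 1, sets the mix between the L1 and L2 parts.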
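The sketch below evaluates the Elastic Net penalty for a small coefficient vector under two conventions. The first is the mixing form α‖β‖₁ + (1 − α)‖β‖₂², scaled by an overall strength λ. The second is the scaling documented for scikit-learn's ElasticNet, where `alpha` is the overall strength and `l1_ratio` is the mix. In that convention the L2 part carries an extra factor of 0.5. The helper function names are hypothetical. Note that the mixing α corresponds to `l1_ratio`, not to scikit-learn's `alpha`.

```python
import numpy as np

def table_penalty(beta, lam, alpha):
    # lam * (alpha * ||beta||_1 + (1 - alpha) * ||beta||_2^2): alpha is the L1/L2 mix
    return lam * (alpha * np.sum(np.abs(beta)) + (1 - alpha) * np.sum(beta**2))

def sklearn_style_penalty(beta, alpha, l1_ratio):
    # alpha * l1_ratio * ||w||_1 + 0.5 * alpha * (1 - l1_ratio) * ||w||_2^2: alpha is the strength
    return alpha * l1_ratio * np.sum(np.abs(beta)) + 0.5 * alpha * (1 - l1_ratio) * np.sum(beta**2)

beta = np.array([3.0, -4.0, 0.0])  # ||beta||_1 = 7, ||beta||_2^2 = 25
print(table_penalty(beta, lam=1.0, alpha=0.5))                # 0.5 * 7 + 0.5 * 25 = 16.0
print(sklearn_style_penalty(beta, alpha=1.0, l1_ratio=0.5))   # 0.5 * 7 + 0.25 * 25 = 9.75
```

The same nominal settings can thus produce different penalty magnitudes across references, which matters when copying hyperparameter values between sources.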

The choice between L1, L2, or their combination depends on the project’s goals and the nature of the data. Adjusting the combination lets the model stay robust while adapting to the data, which can improve prediction accuracy.

Optimizing Model Performance

To enhance the performance of a polynomial regression model, focus on two key areas: tuning hyperparameters, and managing the balance between feature coefficients and model complexity. Each plays a crucial role in ensuring that a model fits the data well without overfitting or underfitting.

Tuning Hyperparameters for Best Results

Hyperparameters are values chosen before training rather than learned from the data, and they can significantly affect model performance. For polynomial regression, they include the degree of the polynomial and the regularization strength.

Adjusting these parameters helps control the balance between fitting the training dataset and generalizing to test data.

For polynomial regression, selecting the appropriate polynomial degree is critical. A high degree might lead to overfitting, while a low degree could cause underfitting.

Using techniques like cross-validation helps in choosing the best hyperparameters.

Additionally, regularization parameters, such as the penalty strength in ridge regression, control how heavily large coefficients are penalized. This keeps the feature coefficients in check.
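Here is a sketch of cross-validated tuning with scikit-learn's GridSearchCV. It searches jointly over the polynomial degree and the Ridge penalty. The synthetic data and the grid values are illustrative assumptions. The expansion, scaling and model all sit in one Pipeline, so each cross-validation fold fits the scaler only on its own training split.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(80, 1))
y = np.sin(3.0 * X[:, 0]) + rng.normal(scale=0.2, size=80)  # synthetic data

pipe = Pipeline([
    ("poly", PolynomialFeatures(include_bias=False)),
    ("scale", StandardScaler()),
    ("ridge", Ridge()),
])
# Step-name prefixes ("poly__", "ridge__") route each value to the right pipeline step
param_grid = {
    "poly__degree": [1, 2, 3, 5, 8, 12],
    "ridge__alpha": [1e-3, 1e-2, 1e-1, 1.0, 10.0],
}
search = GridSearchCV(pipe, param_grid, cv=5, scoring="neg_mean_squared_error")
search.fit(X, y)
print(search.best_params_, -search.best_score_)  # best settings and their cross-validated MSE
```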

Feature Coefficients and Model Complexity

Feature coefficients indicate the model’s sensitivity to each feature, influencing predictions. Managing these helps in reducing model complexity and improving generalization.

Regularization techniques like L1 (Lasso) or L2 (Ridge) introduce penalties that limit the size of coefficients. This can prevent the model from becoming too complex.

Keeping feature coefficients small often leads to simpler models that perform well on test data. Complexity should align with the quality of the data to avoid fitting noise from the training data.

Understanding these aspects ensures that models remain effective and robust when faced with different datasets. Regularization methods also help in managing large numbers of features by encouraging sparsity or smoothness.

Quantifying Model Accuracy

Quantifying how accurately a model predicts outcomes involves using specific metrics to assess performance.

These metrics help determine how well a model is learning and if it generalizes well to new data.

Loss Functions and Cost Function

A loss function measures how far predictions deviate from actual outcomes for a single data point. It calculates the difference between the predicted and true values.

Loss functions guide model training: an optimization algorithm adjusts the parameters to minimize this error.

The cost function, on the other hand, summarizes the total error over all data points. It is often the average of individual losses in the dataset.

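By minimizing the cost function, a model fits the training data as closely as its form allows. Whether that translates into accuracy on new data depends on how well the model generalizes.

For regression, the most common loss is the squared error, and its average over the dataset, the mean squared error, is the usual cost function. Both penalize larger errors more heavily than smaller ones.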

Mean Squared Error and Squared Error

Squared error is a simple measure of error for a single data point. It is the squared difference between the predicted value and the actual value.

This squaring process emphasizes larger errors.

The mean squared error (MSE) expands on squared error by averaging these squared differences across all predictions.

MSE provides a single value that quantifies the model’s accuracy over the entire dataset.

In practice, MSE is widely used because it strongly penalizes large errors and has easy-to-compute derivatives, which simplifies optimizing the model parameters.
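Here is a worked example with four made-up predictions. It computes the per-point squared errors, their mean, and the gradient of the MSE with respect to each prediction, 2(ŷᵢ − yᵢ)/n, which is the simple derivative that makes MSE convenient to optimize. scikit-learn's `mean_squared_error` serves only as a cross-check.

```python
import numpy as np
from sklearn.metrics import mean_squared_error

y_true = np.array([3.0, -0.5, 2.0, 7.0])
y_pred = np.array([2.5, 0.0, 2.0, 8.0])

squared_errors = (y_pred - y_true) ** 2                 # per-point loss: 0.25, 0.25, 0, 1
mse = squared_errors.mean()                             # cost over the dataset: 0.375
grad_wrt_pred = 2.0 * (y_pred - y_true) / y_true.size   # d(MSE)/d(y_pred_i)

print(squared_errors, mse, grad_wrt_pred)
print(mean_squared_error(y_true, y_pred))  # library cross-check
```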

Practical Applications of Polynomial Regression

Polynomial regression is widely used in various fields due to its ability to model complex, nonlinear relationships.

This section explores its uses in finance and engineering, highlighting specific applications where this technique is particularly beneficial.

Polynomial Regression in Finance

In finance, polynomial regression helps in analyzing trends and forecasting.

Financial markets are often influenced by nonlinear patterns, and this method captures these intricacies better than simple linear models.

For instance, it is used to predict stock price movements by considering factors like unemployment rates and GDP growth.

Also, it aids in risk management by modeling the nonlinear relationship between different financial indicators.

This approach assists in constructing portfolios that optimize risk and return, making it valuable for financial analysts and portfolio managers.

Use Cases in Engineering and Science

In engineering, polynomial regression is applied to model relationships between variables in mechanical systems, such as stress and strain analysis.

This helps in predicting system behavior under different conditions, which is crucial for design and safety assessments.

Science fields often rely on this regression to study phenomena where variables interact in complex ways.

For example, environmental science utilizes it to analyze climate data and forecast future trends.

Additionally, engineering and science tasks, such as optimizing materials for durability or predicting chemical reactions, benefit from its capacity to identify patterns in experimental data, providing deeper insights into material properties and reaction outcomes.

Machine Learning Algorithms and Regularization

Regularization is a key technique in machine learning to improve model generalization.

It helps reduce overfitting by adding a penalty term to the model’s loss function. This encourages simpler models with smaller coefficients, promoting stability across various datasets.

Types of Regularization:

- L1 Regularization (Lasso): Adds the sum of the absolute values of coefficients to the loss function. It can result in sparse models, where some coefficients become zero.
- L2 Regularization (Ridge): Includes the sum of the squared values of coefficients in the loss function, effectively shrinking them but rarely making them zero.

These regularization techniques are crucial for algorithms like linear regression, support vector machines, and neural networks.

Models that are too complex tend to fit noise in training data, which harms their predictive performance on new data.

Overfitting happens when a machine learning algorithm learns patterns that exist only in the training data.

Regularization helps models find the right balance, ensuring they perform well not just on the training set but also on unseen data.

In polynomial regression, without regularization, high-degree polynomials can easily overfit, capturing fluctuations in data that don’t represent real patterns.

By applying regularization, these models become more robust, enhancing their generalization capabilities.

Software Implementations and Code Examples

In Python, polynomial regression is typically built with a few libraries, often combined with regularization techniques to prevent overfitting. These tools provide functions and classes that simplify the coding.

Python Libraries for Polynomial Regression

When working with polynomial regression in Python, the scikit-learn library is highly recommended.

It offers the PolynomialFeatures transformer, which expands the input data to include polynomial combinations of features. This expansion is the step that turns a linear model into a polynomial one.

The LinearRegression estimator can then fit the model on the transformed data.

By combining these tools, users can construct polynomial regression models efficiently.
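Here is a minimal sketch of that combination, using scikit-learn's `make_pipeline` to chain PolynomialFeatures and LinearRegression. The noise-free data from y = 1 − 2x + 0.5x² are an illustrative assumption. On this data, the fitted intercept and coefficients should recover 1, −2 and 0.5.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

X = np.linspace(-2.0, 2.0, 30).reshape(-1, 1)  # scikit-learn expects a 2-D feature array
y = 1.0 - 2.0 * X[:, 0] + 0.5 * X[:, 0] ** 2   # illustrative noise-free target

# include_bias=False because LinearRegression fits its own intercept
model = make_pipeline(PolynomialFeatures(degree=2, include_bias=False), LinearRegression())
model.fit(X, y)

lin = model.named_steps["linearregression"]  # make_pipeline names steps after the lowercased class
print(lin.intercept_, lin.coef_)              # intercept, then coefficients for x and x^2
print(model.predict([[3.0]]))                 # 1 - 6 + 4.5 = -0.5
```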

Practical Python code snippets with scikit-learn demonstrate how to build and evaluate these models.

Other libraries like numpy and pandas assist with data manipulation and preparation.

For more in-depth understanding and other algorithm options, resources like GeeksforGeeks provide thorough guides.

Applying Regularization in Python

Regularization is a technique used to improve model performance by adding penalties to the model coefficients.

In Python, scikit-learn provides the Ridge and Lasso classes for regularization purposes.

These are integrated into the polynomial regression process to control overfitting.

Ridge implements L2 regularization: it adds a penalty to the loss function that is proportional to the sum of the squared coefficients. This shrinks the coefficients and makes the model more reliable.

Example: after creating polynomial features, fit Ridge on the transformed data to obtain a regularized polynomial regression model.
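Here is a minimal sketch of that workflow. It assumes synthetic noisy sine data and illustrative `alpha` values. The helper `poly_model` is hypothetical: it wraps PolynomialFeatures, StandardScaler and either Ridge or Lasso in one pipeline. Ridge typically leaves every coefficient nonzero, while Lasso sets some to exactly zero.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

rng = np.random.default_rng(5)
X = rng.uniform(-1.0, 1.0, size=(60, 1))
y = np.sin(3.0 * X[:, 0]) + rng.normal(scale=0.2, size=60)  # synthetic data

def poly_model(regressor, degree=10):
    # Hypothetical helper: expand to x, x^2, ..., x^degree, standardize, then fit the regressor
    return make_pipeline(PolynomialFeatures(degree, include_bias=False), StandardScaler(), regressor)

ridge = poly_model(Ridge(alpha=1.0)).fit(X, y)
lasso = poly_model(Lasso(alpha=0.1, max_iter=50_000)).fit(X, y)

ridge_coef = ridge[-1].coef_
lasso_coef = lasso[-1].coef_
print("Ridge zero coefficients:", np.sum(ridge_coef == 0))
print("Lasso zero coefficients:", np.sum(lasso_coef == 0))
```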

Resources such as GeeksforGeeks provide more details and code examples.

Advanced Topics in Model Development

In-depth work on model development involves tackling complex issues like multicollinearity and optimizing algorithms through gradient descent. These topics are crucial for enhancing the accuracy and reliability of polynomial regression models, especially when dealing with real-world data.

Addressing Multicollinearity

Multicollinearity occurs when two or more predictor variables in a regression model are highly correlated. This can distort the results and make it difficult to determine the effect of each variable.

One way to address this is through regularization techniques such as ridge regression, which penalizes large coefficients and stabilizes estimates that would otherwise swing between correlated predictors.

Another approach is to use the variance inflation factor (VIF) to identify correlated predictors and then remove or combine them.
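The VIF for predictor j is 1 / (1 − R_j²), where R_j² comes from regressing that predictor on all the others. Values above roughly 5 to 10 are commonly treated as a warning sign. The NumPy sketch below uses a hypothetical `vif` helper, not a library function. It shows that raw x and x² are strongly collinear on a positive range, and that centering x before squaring largely removes the problem.

```python
import numpy as np

def vif(X):
    # Hypothetical helper: VIF_j = 1 / (1 - R_j^2), regressing column j on the others plus an intercept
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out

x = np.linspace(1.0, 10.0, 50)
raw = vif(np.column_stack([x, x**2]))         # x and x^2 on a positive range are strongly correlated
xc = x - x.mean()
centered = vif(np.column_stack([xc, xc**2]))  # centering before squaring removes that correlation here
print(raw, centered)
```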

A simpler model may result in better performance. Ensuring diverse data sources can also help minimize multicollinearity.

Techniques like principal component analysis (PCA) can be employed to reduce dimensionality, thus making the model more robust.

Gradient Descent and Tuning Parameters

Gradient descent is a crucial optimization algorithm used for finding the minimum of a function, often employed in regression analysis to optimize coefficients.

The learning rate is a critical tuning parameter that dictates the step size taken during each iteration of gradient descent.

Choosing the right learning rate is essential; a rate too high can cause overshooting, while one too low can slow convergence.

Adaptive methods like AdaGrad and RMSProp adjust the learning rate dynamically, enhancing efficiency.

Other tuning parameters include the number of iterations and the weight initialization.

Properly tuning these parameters can significantly improve model accuracy and convergence speed.
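To make the learning rate concrete, here is a plain NumPy sketch of batch gradient descent on a ridge-penalized quadratic fit. The data are synthetic, and `lam`, `learning_rate` and the iteration count are illustrative choices. The objective is (1/n)·‖Xw − y‖² + λ‖w‖², and the result is compared with the closed-form minimizer of the same objective. Raising `learning_rate` far enough makes the iterates diverge, while lowering it slows convergence.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=100)
y = 1.0 + 2.0 * x - 3.0 * x**2 + rng.normal(scale=0.1, size=100)  # synthetic data

X = np.vander(x, N=3, increasing=True)  # columns [1, x, x^2]
n = X.shape[0]
lam = 0.1            # L2 penalty strength (illustrative value)
learning_rate = 0.1  # step size for each update
w = np.zeros(X.shape[1])  # weight initialization

for step in range(2_000):
    residual = X @ w - y
    # Gradient of (1/n) * ||Xw - y||^2 + lam * ||w||^2
    grad = (2.0 / n) * X.T @ residual + 2.0 * lam * w
    w -= learning_rate * grad

# Closed-form minimizer of the same objective: (X^T X / n + lam I) w = X^T y / n
w_exact = np.linalg.solve(X.T @ X / n + lam * np.eye(X.shape[1]), X.T @ y / n)
print(w, w_exact)
```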

Frequently Asked Questions

Polynomial regression with regularization involves techniques like L1 and L2 regularization to improve model performance. It is applied in various real-world scenarios, and selecting the right polynomial degree is crucial to avoid overfitting.

What does L2 regularization entail in the context of polynomial regression models?

L2 regularization, which gives ridge regression when applied to linear models, adds a penalty proportional to the sum of the squared coefficients to the loss function.

This technique helps to prevent overfitting by discouraging overly complex models, thus keeping the coefficient values small.

Can you elaborate on the concept and mathematics behind polynomial regression?

Polynomial regression is an extension of linear regression where the relationship between the independent variable and the dependent variable is modeled as an nth degree polynomial.

It fits a curve to the data points by minimizing the error between the curve and the observed values, usually the sum of squared residuals.

What strategies are effective in preventing overfitting when using polynomial regression?

To prevent overfitting in polynomial regression, it’s important to choose the appropriate degree for the polynomial.

Using regularization techniques like L1 or L2 can also help. Cross-validation is another effective strategy to ensure the model generalizes well to unseen data.

In what real-world scenarios is polynomial regression commonly applied?

Polynomial regression is used in various fields such as finance for modeling stock trends and in environmental studies for analyzing temperature changes over time.

It is also applied in biology to model population growth and in engineering for material stress analysis.

How does the choice of polynomial degree affect the model’s performance?

The degree of the polynomial affects both bias and variance in the model.

A low degree can cause high bias and underfitting, while a high degree can lead to high variance and overfitting.

Finding a balance is crucial for achieving optimal model performance.

What are the differences between L1 and L2 regularization techniques in polynomial regression?

L1 regularization, or Lasso, adds an absolute value penalty to the loss function, which can lead to sparse models by driving some coefficients to zero.

L2 regularization, or Ridge regression, penalizes the square of the coefficient magnitudes, promoting smaller coefficients but not necessarily zero.