Understanding SQL Grouping Sets

SQL Grouping Sets are a powerful tool for generating multiple groupings in a single query. They enhance data analysis by allowing different aggregations to be defined concurrently, improving efficiency and readability in SQL statements.

Definition and Purpose of Grouping Sets

Grouping Sets offer flexibility by letting you define multiple groupings in one SQL query. This saves time and simplifies queries that need various levels of data aggregation.

With Grouping Sets, SQL can compute multiple aggregates, such as totals and subtotals, using a single, concise command.

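They streamline data processing by addressing specific requirements in analytics, such as calculating sales totals by both product and region. Because one statement replaces several near-identical queries, there is less code to maintain, and the database engine can often compute the results in fewer passes over the data.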

The Group By Clause and Grouping Sets

The GROUP BY clause in SQL is used to arrange identical data into groups. It works hand-in-hand with Grouping Sets to provide a structured way to summarize information.

While GROUP BY focuses on single-level summaries, Grouping Sets extend this by allowing multiple levels of aggregation in one statement.

This approach is comparable to writing several separate GROUP BY queries and combining their results with UNION ALL. Each set within the Grouping Sets can be thought of as a separate GROUP BY instruction, and columns that are not part of a given set are returned as NULL in that set's rows.

In practice, using Grouping Sets reduces query duplication and enhances data interpretation.
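As a minimal sketch of the idea, the query below totals sales by product and by region in one statement. The `sales` table and its `product`, `region`, and `sales_amount` columns are assumptions made for illustration; they are not defined elsewhere in this article.

```sql
-- Hypothetical table: sales(product, region, sales_amount)
SELECT
    product,
    region,
    SUM(sales_amount) AS total_sales
FROM sales
GROUP BY GROUPING SETS (
    (product),  -- one row per product; region is NULL on these rows
    (region)    -- one row per region; product is NULL on these rows
);
```

Each parenthesized set behaves like its own GROUP BY, and the results are returned together in a single result set.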

Setting Up the Environment

Before starting with SQL grouping sets, it’s important to have a proper environment. This involves creating a sample database and tables, as well as inserting initial data for practice.

Creating Sample Database and Tables

To begin, a sample database must be created. In SQL Server, this is done using the CREATE DATABASE statement. Choose a clear database name for easy reference.
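A possible starting point in SQL Server is sketched below. The database name `GroupingSetsDemo` is only a placeholder, and `GO` is a batch separator understood by client tools such as SSMS and sqlcmd rather than a T-SQL statement.

```sql
-- Create a practice database (the name is an arbitrary example)
CREATE DATABASE GroupingSetsDemo;
GO

-- Switch to the new database so later objects are created inside it
USE GroupingSetsDemo;
GO
```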

After setting up the database, proceed to create tables. Use the CREATE TABLE command.

Each table should have a few columns with appropriate data types like INT, VARCHAR, or DATE. This structure makes understanding grouping sets easier.

Here’s an example of creating a simple table for storing product information:

    CREATE TABLE Products (
        ProductID INT PRIMARY KEY,
        ProductName VARCHAR(100),
        Category VARCHAR(50),
        Price DECIMAL(10, 2)
    );

This setup is essential for running queries later.

Inserting Initial Data

With the tables ready, insert initial data into them. Use the INSERT INTO statement to add rows.

Ensure the data reflects various categories and values, which is crucial for exploring grouping sets.

For example, insert data into the Products table:

    INSERT INTO Products (ProductID, ProductName, Category, Price) VALUES
        (1, 'Laptop', 'Electronics', 999.99),
        (2, 'Smartphone', 'Electronics', 499.99),
        (3, 'Desk Chair', 'Furniture', 89.99),
        (4, 'Table', 'Furniture', 129.99);

Diverse data allows for different grouping scenarios. It helps in testing various SQL techniques and understanding how different groupings affect the results. Make sure to insert enough data to see meaningful patterns in queries.

Basic SQL Aggregations

Basic SQL aggregations involve performing calculations on data sets to provide meaningful insights. These techniques are crucial for summarizing data, identifying patterns, and making informed business decisions.

Using Aggregate Functions

Aggregate functions are vital in SQL for calculating totals (SUM), row counts (COUNT), minimums (MIN), averages (AVG), and maximums (MAX).

These functions are commonly used with the GROUP BY clause to summarize data into different groups.

For example, the SUM() function adds up all values in a column, providing a total. Similarly, COUNT(*) returns the number of rows in a group, while COUNT(column) counts only the rows where that column is not NULL.

Other functions like MIN() and MAX() help identify the smallest or largest values in a group, respectively. The AVG() function calculates the average by dividing the total by the number of non-NULL entries.

Understanding how these functions work can significantly enhance data analysis efforts by simplifying complex datasets into manageable outputs.
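To make these functions concrete, the following sketch applies all five to the Products table created earlier, grouped by category. The column aliases are illustrative choices.

```sql
-- Summarize the sample Products table per category
SELECT
    Category,
    COUNT(*)   AS product_count,  -- rows in each category
    SUM(Price) AS total_price,    -- sum of prices in the category
    MIN(Price) AS lowest_price,
    MAX(Price) AS highest_price,
    AVG(Price) AS average_price   -- total divided by non-NULL prices
FROM Products
GROUP BY Category;
```

With the four sample rows inserted earlier, this should return two rows: Electronics with a count of 2 and a total of 1499.98, and Furniture with a count of 2 and a total of 219.98.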

Understanding Aggregate Query Output

The output of aggregate queries in SQL provides a concise view of data, summarizing key metrics.

When using GROUP BY with aggregate functions, the output is organized into categories based on specified columns. Each group displays a single value per aggregate function, simplifying complex datasets.

For instance, if one groups sales data by region, the query can generate a table showing the SUM() of sales, the AVG() transaction size, and the COUNT() of orders per region.

This refined output makes it easier to compare performance across different segments.

Proper application of these queries helps in efficiently extracting meaningful information from large datasets, aiding in strategic decision-making.

Grouping Data with Group By

Grouping data in SQL is essential for summarizing information and generating useful insights. The GROUP BY clause is used within a SELECT statement to group rows that share the same values in specified columns, leading to organized result sets.

Syntax and Usage of Group By

The GROUP BY clause comes after the FROM clause (and after WHERE, if present) in a SELECT statement and is crucial for working with aggregate functions, such as SUM, AVG, or COUNT. The basic syntax is:

    SELECT column1, aggregate_function(column2)
    FROM table_name
    GROUP BY column1;

Using GROUP BY, the database groups rows that have the same value in specified columns.

For example, grouping sales data by product type helps in calculating total sales for each type. The result set contains one row per group, so every column in the SELECT list must either appear in the GROUP BY clause or be wrapped in an aggregate function.

Common Group By Examples

A typical example involves calculating sales totals for each product category.

Suppose there is a table of sales records with columns for product_category, sales_amount, and date. An SQL query to find total sales for each category would look like this:

    SELECT product_category, SUM(sales_amount) AS total_sales
    FROM sales
    GROUP BY product_category;

This query provides a result set that shows the total sales per category, enabling easier decision-making.

Another classic example involves counting the number of orders per customer. By grouping orders by customer_id, a business can determine purchasing behavior.
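The order-counting example could look something like this. The `orders` table and its columns are hypothetical and serve only to illustrate the pattern.

```sql
-- Hypothetical table: orders(order_id, customer_id, order_date)
SELECT
    customer_id,
    COUNT(*) AS order_count   -- number of orders placed by each customer
FROM orders
GROUP BY customer_id
ORDER BY order_count DESC;    -- most active customers first
```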

These examples illustrate the versatility of the GROUP BY clause in summarizing large sets of data into meaningful insights. When combined with aggregate functions, GROUP BY becomes a powerful tool for data analysis.

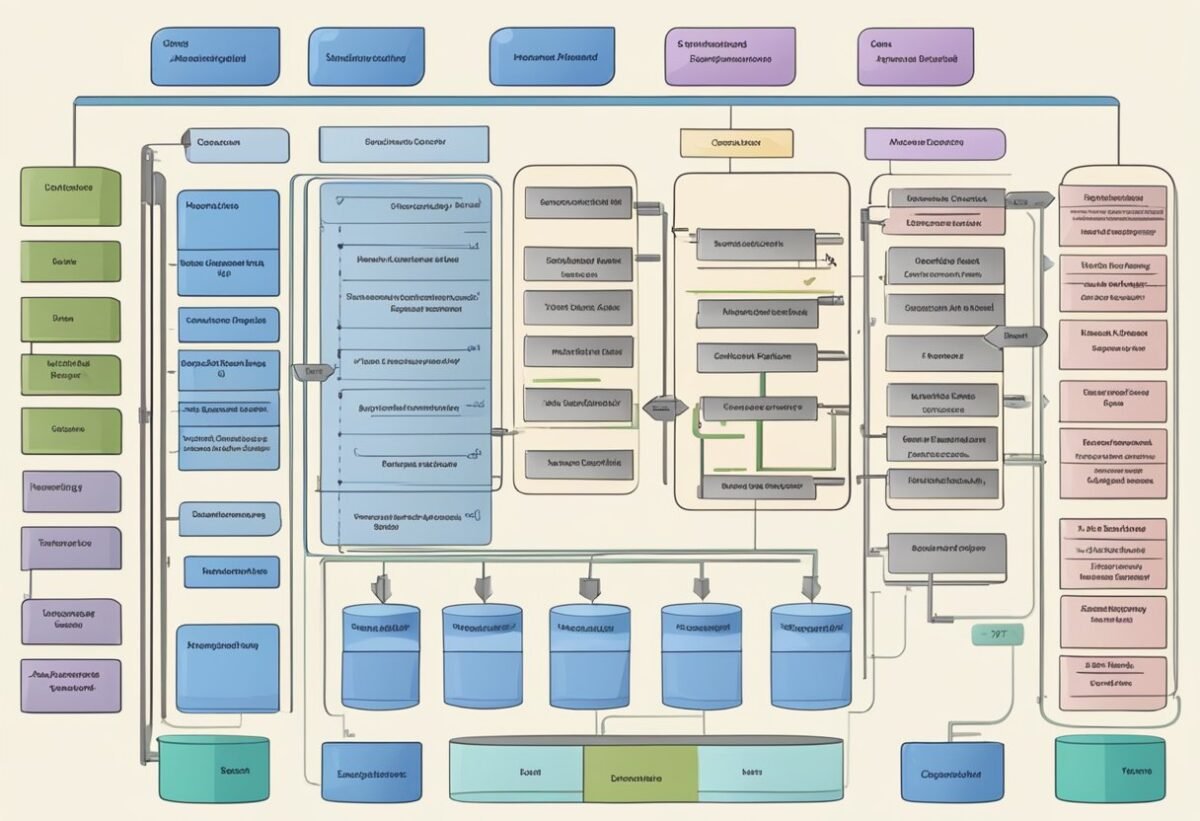

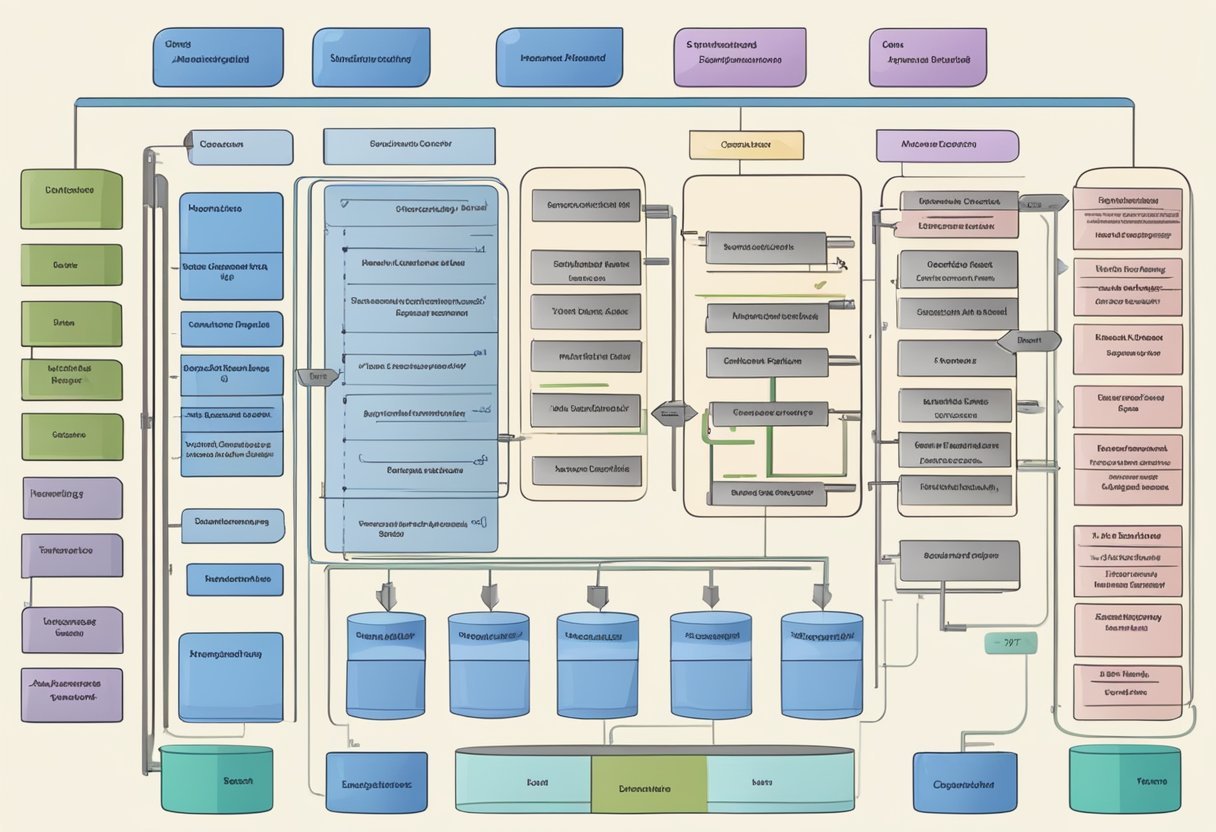

Advanced Grouping Sets

Advanced SQL grouping techniques allow users to perform efficient data analysis by generating multiple grouping sets in a single query. They help in creating complex reports and minimizing manual data processing.

Implementing Multiple Grouping Sets

SQL provides a way to create multiple grouping sets within the same query. By using the GROUPING SETS clause, users can define several groupings, allowing for precise data aggregation without multiple queries.

For example, using GROUPING SETS ((column1, column2), (column1), (column2)) enables custom groupings based on specific analysis needs. This flexibility reduces the query complexity and enhances performance, making it easier to work with large datasets.
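Filled in with concrete names, the three-set pattern might be written as follows. This is a sketch that assumes a hypothetical `sales` table with `region`, `product`, and `sales_amount` columns standing in for column1, column2, and the measure.

```sql
-- Hypothetical table: sales(region, product, sales_amount)
SELECT
    region,
    product,
    SUM(sales_amount) AS total_sales
FROM sales
GROUP BY GROUPING SETS (
    (region, product),  -- detail: each region/product combination
    (region),           -- subtotal per region (product is NULL)
    (product)           -- subtotal per product (region is NULL)
);
```

Adding an empty set, written as `()`, to the list would append a grand total row as well.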

These sets are especially useful in reporting and dashboards where groupings may vary. Implementing multiple grouping sets can dramatically simplify SQL scripts and make query maintenance more straightforward. They also reduce redundant operations, because the source table is referenced once rather than once per grouping.

Analyzing Complex Groupings

Complex data analysis often requires breaking down data into various groups for deeper insights. SQL grouping sets can analyze intricate datasets by allowing different columns to be aggregated in a single query.

For instance, one can use GROUPING SETS to compare multiple dimensions, such as sales by region and sales by product. This capability provides a clearer view of data patterns and trends.

To handle complex groupings, special cases can be managed within the query logic itself, for example by filtering rows with WHERE or relabeling values with CASE expressions, to address unique analytical requirements.

This feature is advantageous for business intelligence, offering flexibility in data presentation while optimizing processing times.

Incorporating grouping sets into SQL queries strengthens data exploration capabilities, supports diverse analytical tasks, and eases the workflow for data professionals.

Combining Sets with Rollup and Cube

In SQL, the ROLLUP and CUBE operators help create detailed data summaries. These operators allow users to generate subtotals and totals across various dimensions, enhancing data analysis and reporting.

Exploring Rollup for Hierarchical Data

ROLLUP is used to aggregate data in a hierarchical manner. It is especially useful when data needs to be summarized at multiple levels of a hierarchy.

For example, in a sales report, one might want to see totals for each product, for each category, and for all products combined. The ROLLUP operator simplifies this by computing aggregates like subtotals and grand totals automatically.

This operation is cost-effective as it reduces the number of grouping queries needed. It computes subtotals step-wise from the most detailed level up to the most general.

This is particularly beneficial when analyzing data across a structured hierarchy. For instance, it can provide insights at the category level and an overall total, enabling managers to quickly identify trends and patterns.
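Using the Products table from earlier, a hierarchical summary of this kind can be sketched as below. The `ROLLUP (...)` form shown here works in SQL Server, Oracle, and PostgreSQL; some other systems, such as MySQL, use a `WITH ROLLUP` suffix instead.

```sql
-- Product rows, category subtotals, and a grand total in one query
SELECT
    Category,
    ProductName,
    SUM(Price) AS total_price
FROM Products
GROUP BY ROLLUP (Category, ProductName);

-- ROLLUP (Category, ProductName) is shorthand for:
--   GROUPING SETS ((Category, ProductName), (Category), ())
```

With the four sample products, the result should contain seven rows: four product rows, two category subtotals, and one grand total of 1719.96.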

Utilizing Cube for Multidimensional Aggregates

The CUBE operator extends beyond hierarchical data to encompass multidimensional data analysis. It creates every possible combination of the specified columns (2^n grouping sets for n columns), which makes it useful in scenarios requiring a multi-perspective view of data.

This can be observed in cross-tabulation reports where one needs insights across various dimensions.

For instance, in a retail scenario, it can show sales totals for each combination of store, product, and time period.

This results in a comprehensive dataset that includes every potential subtotal and total. The CUBE operator is crucial when a detailed examination of relationships between different categories is needed, allowing users to recognize complex interaction patterns within their datasets.
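The retail scenario could be expressed roughly as follows. The `retail_sales` table and its columns are assumptions introduced for this sketch.

```sql
-- Hypothetical table: retail_sales(store, product, sale_quarter, sales_amount)
SELECT
    store,
    product,
    sale_quarter,
    SUM(sales_amount) AS total_sales
FROM retail_sales
GROUP BY CUBE (store, product, sale_quarter);

-- Three columns produce 2^3 = 8 grouping sets, from the full
-- (store, product, sale_quarter) detail down to the grand total ().
```

Because the number of grouping sets doubles with each added column, CUBE is usually best kept to a small number of dimensions.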

Optimizing Grouping Sets Performance

Optimization of grouping sets in SQL Server enhances data processing speed and efficiency, especially in aggregate queries. Effective strategies minimize performance issues and make T-SQL queries run smoother.

Best Practices for Efficient Queries

To enhance SQL Server performance when using grouping sets, it’s crucial to follow best practices.

Indexing plays a key role; ensuring relevant columns are indexed can dramatically reduce query time.
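One way such an index might look in SQL Server is sketched below. It assumes a hypothetical `sales` table that is grouped by `region` and `product` and aggregated on `sales_amount`; whether the optimizer actually uses the index depends on the query and the data, so the execution plan should be checked.

```sql
-- Index keyed on the grouping columns, carrying the aggregated column
-- so the query can be answered from the index alone
CREATE NONCLUSTERED INDEX IX_sales_region_product
    ON sales (region, product)
    INCLUDE (sales_amount);
```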

Employing partitioning helps manage data efficiently by dividing large datasets into smaller, more manageable pieces.

Having data already ordered on the grouping columns, for example through a suitable index, can also be helpful. This reduces the need for additional sorting operations within the server.

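The older GROUP BY ALL syntax should not be mistaken for a grouping sets feature. In SQL Server it is deprecated, and it does not generate grouping combinations: it only keeps groups that the WHERE clause would otherwise filter out. When every combination of columns is needed, CUBE is the appropriate tool.

Avoid unnecessary nested subqueries, as they can make the query harder to optimize and slow down processing times.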

It’s also recommended to use temporary tables when manipulating large datasets, as this can offer substantial performance gains.

Handling Performance Issues

When encountering performance issues, analyzing the query execution plan is essential. The plan identifies bottlenecks within the T-SQL operations.

Look specifically for full table scans, which can be optimized by implementing better indexing or query restructuring.

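Grouping sets over many columns or many distinct values can cause SQL Server to select an unsuitable scan or aggregation strategy. Query hints can steer the server toward a different method, but they should be tested carefully because they override the optimizer's own choices.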

Another way to handle performance issues is by reducing the query’s logical reads, commonly achieved by optimizing the table schema.

Utilize SQL Server’s built-in tools like the Database Engine Tuning Advisor to provide recommendations for indexing and partitioning.

These steps can significantly improve query speed and overall performance. It can also be worth comparing a GROUPING SETS query against the equivalent UNION ALL of separate GROUP BY queries, since their relative performance can differ depending on the data and the available indexes.

Dealing with Special Cases

When dealing with complex SQL queries, special cases require attention to achieve accurate results.

Handling null values and using conditions with the HAVING clause are critical when working with grouping sets.

Grouping with Null Values

Null values can pose challenges in SQL grouping. They often appear as missing data, impacting the outcome of queries.

When using GROUPING SETS, NULL also appears in the results as a placeholder for columns that are not part of the current grouping set, so it can be ambiguous whether a NULL comes from the data or from a subtotal row. It’s also crucial to recognize how SQL treats nulls in aggregate functions: SUM, AVG, MIN, MAX, and COUNT(column) all skip NULL inputs.

For instance, GROUP BY treats NULL as a value of its own for grouping purposes. All rows with NULL in the grouping column are collected into a single, separate group.

A department column, for example, often has missing entries that are stored as NULL. To manage this, special handling might be needed, such as replacing nulls with a placeholder value or excluding them, depending on the requirement.
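The GROUPING() function, available in SQL Server, Oracle, and PostgreSQL among others, returns 1 when a column has been aggregated away in the current row and 0 otherwise. The sketch below uses it to tell subtotal rows apart from genuinely missing data. The `employees` table, its columns, and the label strings are hypothetical, and `department` and `region` are assumed to be text columns.

```sql
-- Hypothetical table: employees(employee_id, department, region, salary)
SELECT
    CASE
        WHEN GROUPING(department) = 1 THEN 'All departments'  -- subtotal row
        ELSE COALESCE(department, 'Unassigned')               -- NULL in the data
    END AS department_label,
    CASE
        WHEN GROUPING(region) = 1 THEN 'All regions'
        ELSE COALESCE(region, 'Unknown')
    END AS region_label,
    SUM(salary) AS total_salary
FROM employees
GROUP BY GROUPING SETS ((department), (region), ());
```

Labeling the rows this way keeps a real "missing department" group from being confused with a total row.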

Using Having with Grouping Sets

The HAVING clause plays a vital role in filtering results of grouped data. It allows specifying conditions on aggregates, ensuring the end data matches given criteria.

This is often used after GROUPING SETS to refine results based on aggregate functions like SUM or AVG.

For example, a query might focus on departments with a total sales amount exceeding a certain threshold. The HAVING clause evaluates these criteria.

If departments report null values, conditions must be set to exclude them or handle them appropriately. Understanding how to use HAVING ensures precise and meaningful data, enhancing insights from complex queries.
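A threshold filter of this kind might be sketched as follows. The `department_sales` table, its columns, and the 10000 threshold are all assumptions chosen for illustration.

```sql
-- Hypothetical table: department_sales(department, region, sales_amount)
SELECT
    department,
    region,
    SUM(sales_amount) AS total_sales
FROM department_sales
WHERE department IS NOT NULL            -- drop rows with no department
GROUP BY GROUPING SETS (
    (department, region),
    (department)
)
HAVING SUM(sales_amount) > 10000;       -- keep only rows above the threshold
```

Note that HAVING is evaluated against every output row, so detail rows and subtotal rows are filtered independently: a department subtotal can pass the threshold even when none of its individual regions do.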

Consistent use of the HAVING clause refines data with clear, actionable criteria. It guides the process to include only relevant entries, improving the quality of output in SQL operations.

Utilizing Common Table Expressions

Common Table Expressions (CTEs) can simplify complex SQL queries and improve their readability. Understanding how to integrate CTEs with grouping sets can enhance data analysis capabilities.

Introduction to CTEs

Common Table Expressions, shortened as CTEs, allow for the definition of a temporary result set that can be referenced within a SELECT statement. They are defined using the WITH clause at the start of a SQL query.

CTEs help break down complex queries by allowing developers to structure their code into readable and manageable segments.

A CTE can be referenced more than once within the query that defines it, which minimizes code duplication. This feature is particularly useful when the same data needs to be referenced multiple times. CTEs also support recursive queries, in which the CTE refers to itself to walk hierarchical data such as an organization chart.

Integrating CTEs with Grouping Sets

Grouping sets in SQL are used to define multiple groupings in a single query, effectively providing aggregate results over different sets of columns. This is beneficial when analyzing data from various perspectives.

Using CTEs in combination with grouping sets further organizes query logic, making complex analysis more approachable.

CTEs can preprocess data before applying grouping sets, ensuring that the input data is neat and relevant.

For instance, one can use a CTE to filter data and then apply grouping sets to examine different roll-ups of aggregate data. This integration facilitates more flexible and dynamic reporting, leveraging the most from SQL’s capabilities for analytical queries.
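The filter-then-group pattern could look like this. The `sales` table, its `sale_date` column, and the cutoff date are hypothetical values used only for the sketch.

```sql
-- Hypothetical table: sales(region, product, sales_amount, sale_date)
WITH recent_sales AS (
    -- Step 1: narrow the input to the rows of interest
    SELECT region, product, sales_amount
    FROM sales
    WHERE sale_date >= '2024-01-01'
)
-- Step 2: aggregate the filtered rows at several levels
SELECT
    region,
    product,
    SUM(sales_amount) AS total_sales
FROM recent_sales
GROUP BY GROUPING SETS (
    (region, product),  -- detail
    (region),           -- per-region subtotal
    ()                  -- grand total
);
```

Keeping the filter in the CTE separates data preparation from aggregation, which tends to make the grouping logic easier to read and change.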

Reporting with Grouping Sets

Grouping sets in SQL allow for efficient report creation by providing multiple aggregations within a single query. This is ideal for creating detailed result sets that highlight various perspectives in data analysis.

Designing Reports Using SQL

When designing reports, grouping sets enable complex queries that gather detailed data insights. By defining different groupings, users can efficiently aggregate and display data tailored to specific needs.

The GROUPING SETS clause simplifies this by generating multiple grouping scenarios in a single query, reducing code complexity.

A practical example involves sales data, where a report might need total sales by product and location. Instead of writing separate queries, one can use grouping sets to combine these requirements, streamlining the process and ensuring consistent output.

Customizing Reports for Analytical Insights

Customization of reports for analytical insights is crucial for meaningful data interpretation. Grouping sets allow for flexibility in aggregating data, which supports deeper analysis.

Users can create custom report layouts, focusing on relevant data points while keeping the query structure efficient.

For instance, in a financial report, users might want both quarterly and annual summaries. Using grouping sets enables these different periods to be captured seamlessly within a single result set, aiding in strategic decision-making.
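A report combining quarterly and annual figures might be sketched as below. The `financials` table and its columns are assumptions, not part of any schema defined in this article.

```sql
-- Hypothetical table: financials(fiscal_year, fiscal_quarter, revenue)
SELECT
    fiscal_year,
    fiscal_quarter,                      -- NULL on the annual summary rows
    SUM(revenue) AS total_revenue
FROM financials
GROUP BY GROUPING SETS (
    (fiscal_year, fiscal_quarter),       -- quarterly summaries
    (fiscal_year)                        -- annual summaries
)
ORDER BY
    fiscal_year,
    GROUPING(fiscal_quarter),            -- quarters (0) before the annual row (1)
    fiscal_quarter;
```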

The ability to mix various aggregations also boosts the report’s analytical value, providing insights that drive business actions.

Union Operations in Grouping

Union operations play a significant role in SQL by helping manage and combine data results. In grouping operations, “union” and “union all” are essential for consolidating multiple datasets to provide a comprehensive view of data.

Understanding Union vs Union All

In SQL, the union operation is used to combine results from two or more queries. It removes duplicate rows in the final output. In contrast, union all keeps all duplicates, making it faster because it skips the extra step of checking for duplicates.

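Union and union all are closely related to grouping sets. A GROUPING SETS query returns the same rows as a union all of one GROUP BY query per grouping set. Union all is normally the right choice when writing this by hand, because union would silently drop rows that happen to be identical across sets, while union all ensures that every group, even if repeated, appears in the final results.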

Both operations require that each query inside the union have the same number of columns, and the data types of each column must be compatible.

Practical Applications of Union in Grouping

Practical uses of union in grouping include scenarios where multiple grouping set results need to be displayed in one table. Using union all is efficient when the exact number of groups, including duplicates, is necessary for analysis.

For example, if one query groups data by both brand and category, and another only by category, union all can merge them into one unified dataset. This method ensures that all combinations from the grouping sets are represented.
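The brand and category example can be sketched in both forms. The `inventory` table and its columns are hypothetical. Because both branches of a union must return the same number of columns, the category-only query pads the missing `brand` column with NULL.

```sql
-- Hypothetical table: inventory(brand, category, quantity)

-- Hand-written version: two GROUP BY queries merged with UNION ALL
SELECT brand, category, SUM(quantity) AS total_quantity
FROM inventory
GROUP BY brand, category
UNION ALL
SELECT NULL AS brand, category, SUM(quantity) AS total_quantity
FROM inventory
GROUP BY category;

-- The same result expressed with grouping sets
SELECT brand, category, SUM(quantity) AS total_quantity
FROM inventory
GROUP BY GROUPING SETS (
    (brand, category),
    (category)
);
```

The grouping sets form states the table once, which is typically easier to maintain as more groupings are added.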

It is especially useful in reporting when full data detail, including duplicates, is necessary to provide correct analytics and insights. This operation helps simplify complex queries without losing crucial information.

Practical Examples and Use Cases

Practical examples and use cases for SQL grouping sets demonstrate their value in analyzing complex data. By supporting aggregate queries and facilitating efficient data analysis, grouping sets provide powerful tools for businesses to process and interpret large datasets.

Grouping Sets in E-Commerce

In the e-commerce industry, SQL grouping sets can be used to aggregate data across various dimensions such as product categories, regions, and time periods. This allows businesses to gain insights from different geographic locations.

For instance, grouping sets can help evaluate sales performance by examining both individual product sales and regional sales.

An e-commerce platform can run an aggregate query to find the total sales for each product category, region, and quarter. This helps identify trends and focus efforts on high-performing areas. With SQL grouping sets, companies can simplify complex aggregations into a single query instead of running multiple queries for each group.

Analyzing Sales Data with Grouping Sets

For analyzing sales data, SQL grouping sets provide a way to view data from multiple perspectives. They make it possible to see aggregate sales across different dimensions like time, product, and store location, all in a single query.

A retail business might use grouping sets to compare total sales by month, product line, and store location. This enables the business to pinpoint peak sales periods and high-demand products.

By using SQL grouping sets, the analysis becomes more efficient, revealing meaningful patterns and trends. The ability to group data in various ways helps businesses target marketing strategies and enhance inventory management.

Frequently Asked Questions

SQL GROUPING SETS allow for detailed data aggregation, providing multiple grouping results within a single query. They offer flexibility in organizing data compared to traditional methods.

How can GROUPING SETS be utilized to aggregate data in SQL?

GROUPING SETS allow users to define multiple groupings in one query. This is efficient for generating subtotals and totals across different dimensions without writing multiple queries.

By specifying combinations of columns, users can create detailed summaries, which simplify complex data analysis tasks.

What are the advantages of using GROUPING SETS over ROLLUP in SQL?

GROUPING SETS provide more flexibility than ROLLUP, which assumes a specific hierarchy in column analysis. Unlike ROLLUP, which aggregates data in a fixed order, GROUPING SETS can handle custom combinations of columns, allowing users to control how data should be grouped at various levels of detail.

Can you provide an example of how to use GROUPING SETS in Oracle?

In Oracle, GROUPING SETS can be used within a GROUP BY clause. An example would be: SELECT warehouse, product, SUM(sales) FROM sales_data GROUP BY GROUPING SETS ((warehouse, product), (warehouse), (product), ()).

This query generates aggregates for each warehouse and product combination, each warehouse, each product, and a grand total.

How do GROUPING SETS in SQL differ from traditional GROUP BY operations?

Traditional GROUP BY operations result in a single grouping set. In contrast, GROUPING SETS allow for multiple groupings in one query. This feature helps to answer more complex queries, as it creates subtotals and totals without needing multiple separate queries, saving time and simplifying code.

What is the role of GROUPING SETS in data analysis within SQL Server?

In SQL Server, GROUPING SETS play a crucial role in multi-dimensional data analysis. By allowing diverse grouping combinations, they help users gain insights at different levels of aggregation.

This feature supports comprehensive reporting and detailed breakdowns within a single efficient query.

How are GROUPING SETS implemented in a BigQuery environment?

In BigQuery, GROUPING SETS are implemented via the GROUP BY clause with specified sets. They enable powerful data aggregation by calculating different grouping scenarios in a single query.

This functionality aids in producing complex analytics and reporting, streamlining the data processing tasks in large datasets.