Understanding Advanced SQL Concepts

Advanced SQL centers on two skills: writing complex queries and applying sophisticated functions.

These skills allow data engineers to handle intricate tasks such as data manipulation and analysis effectively.

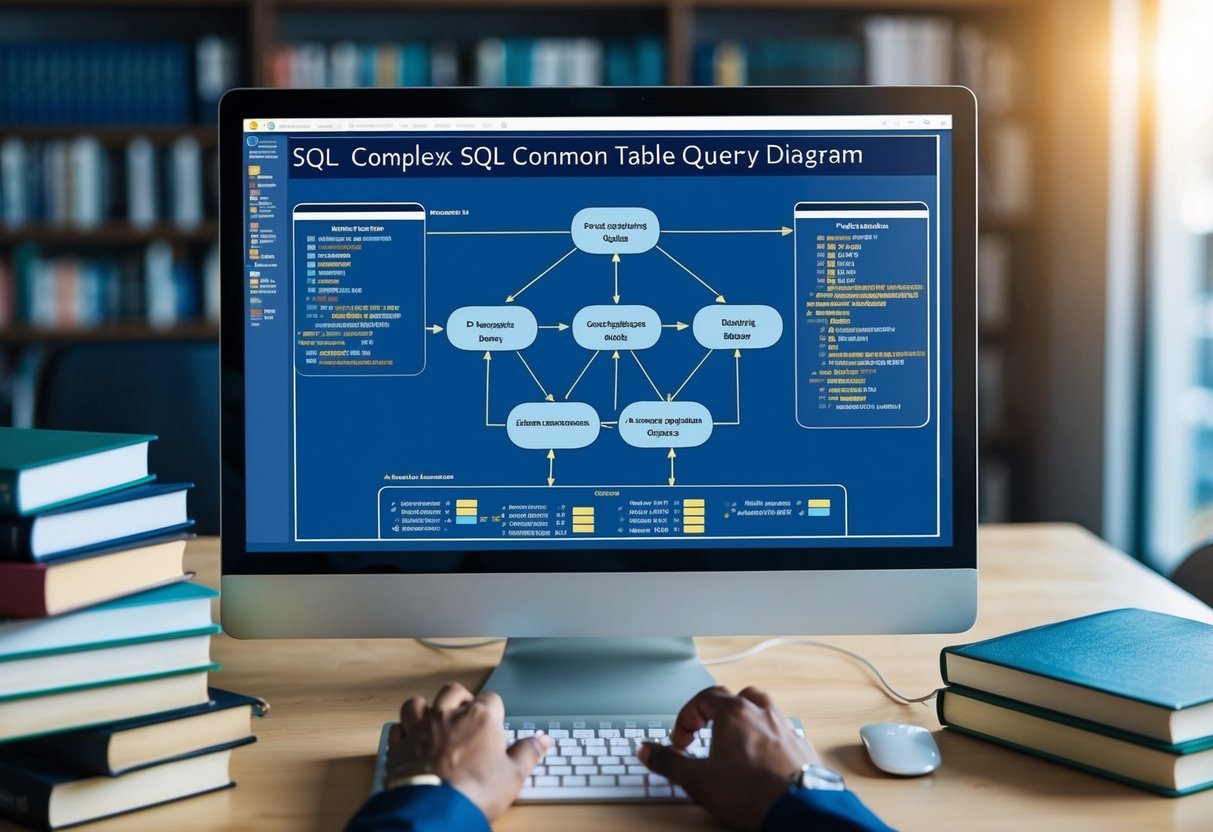

Working with Complex SQL Queries

Complex SQL queries are essential for managing large datasets and extracting valuable insights.

These queries often involve multiple tables and require operations like joins, subqueries, and set operations. They help in combining data from various sources to produce comprehensive results.

One useful aspect of complex queries is subqueries, which are nested queries that allow for more refined data extraction.

Joins are also pivotal, enabling the combination of rows from two or more tables based on a related column. This ability to link data is crucial in data engineering tasks where diverse datasets must be integrated.
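
As a minimal sketch of how a join and a subquery work together, the example below uses Python's built-in sqlite3 module with an in-memory database. The `customers` and `orders` tables and their rows are invented for illustration; the query lists orders that exceed the average order amount.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders VALUES (1, 1, 50.0), (2, 1, 150.0), (3, 2, 80.0), (4, 3, 20.0);
""")

# The join links each order to its customer; the nested subquery computes
# the overall average order amount, which the outer query uses as a filter.
above_average = conn.execute("""
    SELECT c.name, o.amount
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE o.amount > (SELECT AVG(amount) FROM orders)
    ORDER BY o.amount DESC
""").fetchall()

print(above_average)
```

The subquery runs once and yields a single value, so the outer query stays readable even though it draws on two tables.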

Utilizing Advanced Functions for Data Analysis

Advanced SQL functions enhance analytical capabilities, enabling detailed data examination.

Window functions provide insights by performing calculations across a set of rows related to the current row, without collapsing them. This is useful for analyzing trends over time or within specific partitions of data.
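
The following sketch shows a running total computed with a window function. It assumes a hypothetical `sales` table and a SQLite build of version 3.25 or later (the first release with window function support), accessed through Python's sqlite3 module.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, month TEXT, revenue INTEGER);
    INSERT INTO sales VALUES
        ('north', '2024-01', 100), ('north', '2024-02', 120), ('north', '2024-03', 90),
        ('south', '2024-01', 200), ('south', '2024-02', 180);
""")

# SUM(...) OVER (...) adds a running total per region while keeping
# every input row in the result, unlike GROUP BY.
running = conn.execute("""
    SELECT region, month, revenue,
           SUM(revenue) OVER (PARTITION BY region ORDER BY month) AS running_total
    FROM sales
    ORDER BY region, month
""").fetchall()

for row in running:
    print(row)
```

All five input rows appear in the output, each with its own running total, which is the practical meaning of "without collapsing them".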

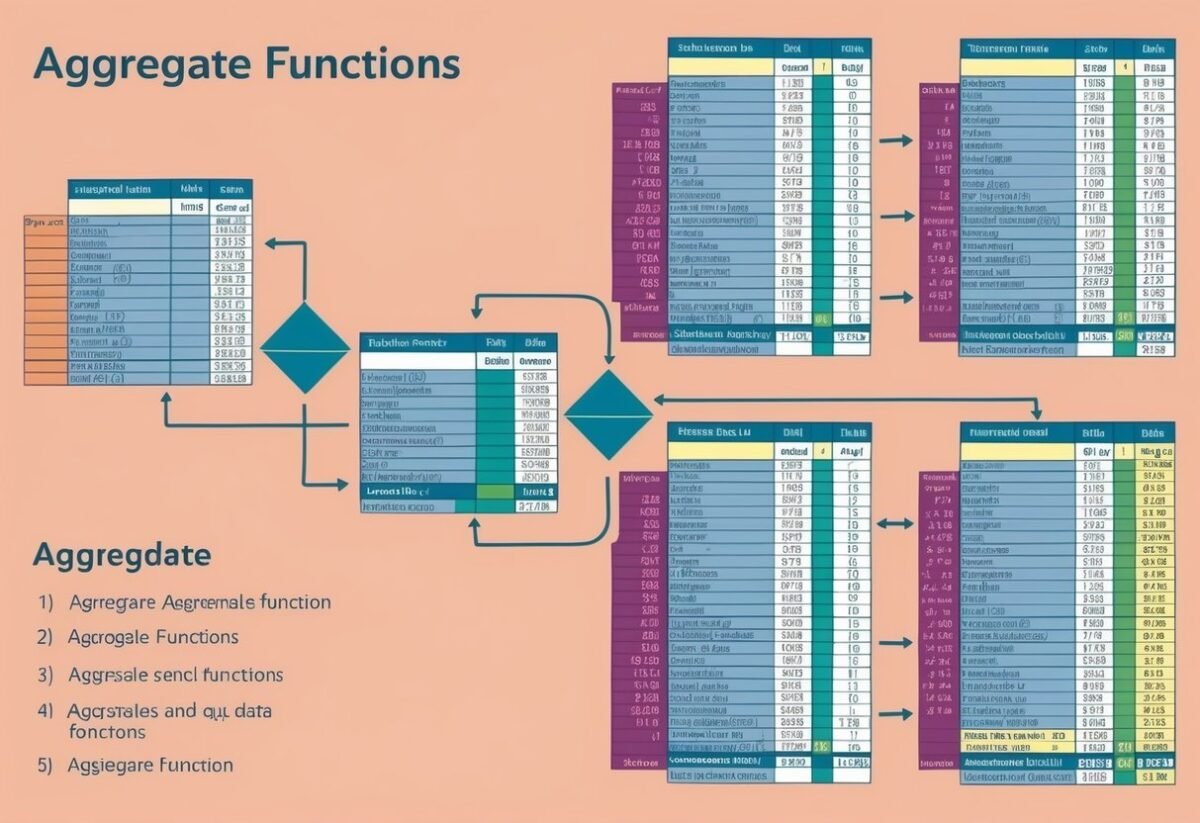

Aggregate functions, like SUM and AVG, assist in consolidating data, offering summaries that reveal patterns or anomalies.

Other specialized operators, like PIVOT (available in dialects such as SQL Server and Oracle), rotate row values into columns to enhance readability and reporting. Together, these features make advanced SQL indispensable for data manipulation and analysis tasks.
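
PIVOT is not available in every SQL dialect, so the sketch below uses conditional aggregation, a portable way to get the same pivoted shape, alongside SUM and AVG. The `sales` table and its values are assumptions made for the example, run through Python's sqlite3 module.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (region TEXT, quarter TEXT, revenue INTEGER);
    INSERT INTO sales VALUES
        ('north', 'Q1', 100), ('north', 'Q2', 150),
        ('south', 'Q1', 200), ('south', 'Q2', 50), ('south', 'Q2', 50);
""")

# Each CASE expression keeps only one quarter's revenue, so the SUMs
# turn quarter values (rows) into q1 and q2 columns, one row per region.
pivoted = conn.execute("""
    SELECT region,
           SUM(CASE WHEN quarter = 'Q1' THEN revenue ELSE 0 END) AS q1,
           SUM(CASE WHEN quarter = 'Q2' THEN revenue ELSE 0 END) AS q2,
           AVG(revenue) AS avg_revenue
    FROM sales
    GROUP BY region
    ORDER BY region
""").fetchall()

print(pivoted)
```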

For comprehensive resources on advanced SQL, consider exploring materials such as advanced SQL concepts with examples and courses that focus on data engineering.

Database Design and Management

In the realm of database management, security and performance are paramount. Understanding how to secure data and optimize databases can enhance the functionality and efficiency of any system.

Ensuring Robust Database Security

Ensuring database security involves multiple layers of protection to safeguard sensitive information.

Proper access control is crucial; users should only have permissions necessary for their roles. Implementing strong passwords and regular audits can prevent unauthorized access.

Data encryption, both in transit and at rest, is another key strategy. Encrypting sensitive data makes it unreadable without the proper decryption key, adding an essential layer of protection.

Firewalls should be configured to block unwanted traffic, and network segmentation can limit access to certain parts of the database.

Regular updates and patches are vital to protect against vulnerabilities. Managed services like Amazon RDS (Relational Database Service) offer built-in security features, simplifying the management of security protocols.

Optimizing Database Performance with Indexes

Indexes are a powerful tool for improving database performance by allowing faster retrieval of records.

In a relational database, an index functions like an efficient roadmap, reducing the time it takes to find specific data. Well-designed indexes can significantly reduce query times, benefiting database management.

However, careful planning is necessary. Over-indexing can lead to performance issues as it increases the time needed for insertions and updates. Understanding how to balance the number and type of indexes is essential.

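Clustered and non-clustered indexes serve different purposes and should be used according to data access patterns. A clustered index determines the order in which rows are stored, so a table can have only one, while non-clustered indexes are separate structures that point back to the rows.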

Examining the execution plans produced by the query optimizer (for example, with EXPLAIN) can help in determining the most effective indexing strategies, so that queries run more efficiently.
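
One way to see the effect of an index is to compare the query plan before and after creating it. This sketch uses SQLite's `EXPLAIN QUERY PLAN` through Python's sqlite3 module; the `orders` table, the index name, and the `plan` helper are hypothetical, and other databases expose plans through their own EXPLAIN variants.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

query = "SELECT amount FROM orders WHERE customer_id = ?"

def plan(sql):
    # The last column of each plan row is a human-readable description.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,))
    return " | ".join(row[-1] for row in rows)

plan_before = plan(query)   # full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = plan(query)    # lookup through the new index

print(plan_before)
print(plan_after)
```

The exact plan wording varies between SQLite versions, but the first plan reports a scan of `orders` and the second names `idx_orders_customer`.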

SQL Data Structures and Views

In the realm of data engineering, understanding SQL data structures and the use of views is crucial. These tools allow professionals to manage, optimize, and access complex datasets efficiently.

Implementing and Querying Views

Views are essential in organizing and accessing data in SQL. They act as virtual tables: a view is a stored query over one or more tables, and its results are computed when the view is queried. This makes it easier to handle complex SQL queries by encapsulating frequently used join operations or selecting specific columns.

Using views improves data security by restricting access to specific data. Read-only views limit accidental data modification, maintaining data integrity.

Materialized views store the results of a query and can be refreshed periodically, improving performance for large datasets where real-time accuracy is not essential.
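
The sketch below illustrates a plain (non-materialized) view that hides a sensitive column. It runs on SQLite through Python's sqlite3 module, where views are read-only and materialized views are not supported; the `employees` table and `employee_directory` view are made-up names.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept TEXT, salary INTEGER);
    INSERT INTO employees VALUES
        (1, 'Ada', 'eng', 120), (2, 'Grace', 'eng', 130), (3, 'Linus', 'ops', 100);

    -- The view exposes names and departments but hides the salary column.
    CREATE VIEW employee_directory AS
        SELECT id, name, dept FROM employees;
""")

columns = [d[0] for d in conn.execute("SELECT * FROM employee_directory").description]

# The view's query runs each time it is used, so new base-table rows appear.
conn.execute("INSERT INTO employees VALUES (4, 'Margaret', 'eng', 140)")
eng_names = [row[0] for row in conn.execute(
    "SELECT name FROM employee_directory WHERE dept = 'eng' ORDER BY name")]

# Writing through the view fails in SQLite, which protects the base table.
try:
    conn.execute("UPDATE employee_directory SET name = 'x' WHERE id = 1")
    view_is_read_only = False
except sqlite3.OperationalError:
    view_is_read_only = True

print(columns, eng_names, view_is_read_only)
```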

Mastering Joins for Complex Data Sets

Joins are pivotal for advanced SQL, allowing data from several tables to be brought together into a unified output.

There are various types like INNER JOIN, LEFT JOIN, RIGHT JOIN, and FULL JOIN, each serving specific purposes in data relationships.

For instance, an INNER JOIN returns only the records with matching values in both tables, which suits precise filtering. A LEFT JOIN returns all records from the left table plus the matching records from the right table, with NULLs where no match exists, which is useful when no left-side rows may be dropped.

Choosing the right join is crucial for efficient data processing.

Using joins wisely, along with Common Table Expressions (CTEs), can enhance query clarity and maintain performance in data-rich environments. Understanding and practicing these techniques are vital for those delving deep into SQL for data engineering.
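
To make the difference between join types concrete, here is a small sketch using Python's sqlite3 module. The `customers` and `orders` tables are illustrative; the second query also shows a CTE (`order_totals`) that names an intermediate result before it is joined.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount INTEGER);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders VALUES (1, 1, 50), (2, 1, 150), (3, 2, 80);
""")

# INNER JOIN: only customers that have at least one order appear.
inner_rows = conn.execute("""
    SELECT c.name, o.amount
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.name, o.amount
""").fetchall()

# LEFT JOIN against a CTE: every customer appears, with NULL (None in
# Python) as the total for customers without orders.
left_rows = conn.execute("""
    WITH order_totals AS (
        SELECT customer_id, SUM(amount) AS total
        FROM orders
        GROUP BY customer_id
    )
    SELECT c.name, t.total
    FROM customers AS c
    LEFT JOIN order_totals AS t ON t.customer_id = c.id
    ORDER BY c.name
""").fetchall()

print(inner_rows)
print(left_rows)
```

Linus has no orders, so he is missing from the INNER JOIN result but present in the LEFT JOIN result with an empty total.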

Data Engineering with SQL

SQL plays a crucial role in data engineering by enabling automation of ETL processes and effective orchestration of data pipelines. These advanced SQL skills facilitate efficient data management and integration of large data sets across relational databases and big data systems.

Automating ETL Processes

Automating ETL (Extract, Transform, Load) processes is key for data engineers. SQL helps streamline these tasks by allowing for the creation of repeatable queries and procedures.

Extract: SQL is used to pull data from multiple sources, including relational databases and big data platforms.

Transform: Data engineers use SQL to perform aggregations, joins, and data cleaning operations. This ensures the data is ready for analysis.

Load: SQL scripts automate the process of writing data into databases, ensuring consistency and integrity.

Efficient ETL automation boosts productivity and reduces manual effort, allowing engineers to manage larger data sets and maintain data quality.
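
The three stages can be sketched as a single repeatable step. This example assumes the extracted data has already landed in a hypothetical staging table, `raw_orders`, and uses Python's sqlite3 module; the cleaning rules (trim and lowercase names, skip rows without an amount, drop duplicates) are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Extract: raw rows as they arrived from a source system.
    CREATE TABLE raw_orders (order_id INTEGER, customer TEXT, amount TEXT);
    INSERT INTO raw_orders VALUES
        (1, ' ada ', '50.5'), (2, 'GRACE', ''), (3, 'linus', '20'), (3, 'linus', '20');

    CREATE TABLE clean_orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL);
""")

def run_etl(conn):
    # Transform and load in one statement. INSERT OR REPLACE keyed on
    # order_id makes the step safe to re-run without duplicating rows.
    with conn:
        conn.execute("""
            INSERT OR REPLACE INTO clean_orders (order_id, customer, amount)
            SELECT DISTINCT order_id, LOWER(TRIM(customer)), CAST(amount AS REAL)
            FROM raw_orders
            WHERE TRIM(amount) <> ''
        """)

run_etl(conn)
run_etl(conn)  # a second run leaves the target table unchanged

loaded = conn.execute("SELECT * FROM clean_orders ORDER BY order_id").fetchall()
print(loaded)
```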

Utilizing SQL in Data Pipeline Orchestration

SQL is vital in orchestrating data pipelines, which are essential for managing complex data flows. It enables seamless integration between different stages of the data journey.

Engineers design data pipelines that move and process data efficiently from different sources to target systems. This involves using SQL to perform scheduled and on-demand data processing tasks.

SQL supports the orchestration of data workflows by coordinating between data ingestion, processing, and output operations. It can be integrated with tools that trigger SQL scripts based on events, ensuring timely updates and data availability.

This orchestration capability is important for handling big data, as it ensures data pipelines are robust, scalable, and responsive to changes in data input and demand.
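
Dedicated orchestration tools handle scheduling and event triggers, but the core idea of running SQL steps in a fixed order can be sketched in a few lines. The `run_pipeline` helper, the step names, and the tables below are all hypothetical, and the example relies on Python's sqlite3 module opening a transaction before data-modifying statements.

```python
import sqlite3

def run_pipeline(conn, steps):
    """Run (name, sql) steps in order inside a single transaction."""
    completed = []
    try:
        with conn:  # commit if every step succeeds, roll back on any error
            for name, sql in steps:
                conn.execute(sql)
                completed.append(name)
    except sqlite3.Error:
        return False, completed
    return True, completed

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE events (day TEXT, amount INTEGER);
    CREATE TABLE staging (day TEXT, amount INTEGER);
    CREATE TABLE daily_totals (day TEXT PRIMARY KEY, total INTEGER);
    INSERT INTO events VALUES ('2024-01-01', 5), ('2024-01-01', 7), ('2024-01-02', 3);
""")

# Ingestion, then processing, expressed as ordered SQL steps.
ok, done = run_pipeline(conn, [
    ("ingest", "INSERT INTO staging SELECT day, amount FROM events WHERE amount > 0"),
    ("aggregate", "INSERT INTO daily_totals SELECT day, SUM(amount) FROM staging GROUP BY day"),
])
totals = conn.execute("SELECT * FROM daily_totals ORDER BY day").fetchall()

# A failing step rolls back the earlier steps of the same run.
failed_ok, failed_done = run_pipeline(conn, [
    ("ingest_again", "INSERT INTO staging VALUES ('2024-01-03', 1)"),
    ("broken", "INSERT INTO no_such_table VALUES (1)"),
])
staging_rows = conn.execute("SELECT COUNT(*) FROM staging").fetchone()[0]

print(ok, totals, failed_ok, staging_rows)
```

Wrapping each run in one transaction means a half-finished run never leaves partial data behind, which is one simple way to keep a pipeline robust.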

Expert-Level SQL Functions and Procedures

Expert-level SQL involves mastering advanced techniques, like creating complex stored procedures and user-defined functions. These skills enhance performance and allow for efficient data manipulation.

Crafting Complex Stored Procedures

Stored procedures are powerful tools in SQL that help automate repetitive tasks and improve performance. They allow for the encapsulation of SQL statements and business logic into a single execution process.

By crafting complex stored procedures, developers can handle intricate data tasks with efficiency. These procedures can include conditional logic, loops, and error handling to manage complex data processes seamlessly.

Using parameters, stored procedures can be made modular and reusable, allowing them to adapt to different scenarios without rewriting the entire SQL code.

Creating User-Defined Functions

User-defined functions (UDFs) extend the capability of SQL by allowing developers to create custom functions to perform specific tasks. Unlike built-in SQL functions, UDFs let developers define operations that are specific to business needs.

UDFs are particularly useful for tasks that require standardized calculations or data processing that is reused across different queries.

They can return a single value or a table, depending on requirements, and can be incorporated into SQL statements like SELECT, WHERE, and JOIN clauses. This makes them a versatile tool for maintaining cleaner and more manageable SQL code.
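
As an illustration of a scalar UDF, the sketch below registers a Python function with SQLite through `create_function` in Python's sqlite3 module and then calls it from SELECT and WHERE clauses. The `normalize_email` rule and the `users` table are assumptions for the example; other databases define UDFs with their own `CREATE FUNCTION` syntax, and table-valued functions are not covered here.

```python
import sqlite3

def normalize_email(value):
    """Hypothetical business rule: trim whitespace and lowercase."""
    return value.strip().lower() if value is not None else None

conn = sqlite3.connect(":memory:")
# Register the Python function under a SQL name, taking one argument.
conn.create_function("normalize_email", 1, normalize_email)

conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT);
    INSERT INTO users VALUES
        (1, ' Ada@Example.com '), (2, 'grace@example.com'), (3, 'ADA@example.com');
""")

# The UDF can be used in the select list...
emails = conn.execute(
    "SELECT id, normalize_email(email) FROM users ORDER BY id"
).fetchall()

# ...and in a WHERE clause, so every query applies the same rule.
matches = conn.execute(
    "SELECT id FROM users WHERE normalize_email(email) = ? ORDER BY id",
    ("ada@example.com",),
).fetchall()

print(emails)
print(matches)
```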

Enhancing Business Intelligence with SQL

SQL plays a vital role in transforming raw data into meaningful insights for businesses. It helps in analyzing trends and making data-driven decisions efficiently. By harnessing SQL, businesses can enhance their intelligence operations and optimize various analyses, including insurance claims processing.

SQL for Business Analysts

Business analysts use SQL to extract, manipulate, and analyze data. It helps them understand patterns and trends in large datasets. This enables them to make informed decisions based on past and present data insights.

Common tasks include filtering data from databases, creating detailed reports, and summarizing data to show key performance indicators. SQL helps in evaluating sales numbers, customer behavior, and market trends, which are crucial for strategic planning.

Advanced SQL techniques allow business analysts to join multiple tables, use sub-queries, and apply functions to handle complex data problems. These abilities lead to more precise analyses and can highlight areas needing improvement or potential growth opportunities. By efficiently managing data, they drive better business intelligence.

SQL in Insurance Claims Analysis

In insurance, SQL is essential for analyzing claims data. It helps in identifying patterns and potential fraud, improving risk assessment, and streamlining claim processes.

Key processes involve querying claims data to find anomalies, grouping claims by factors like location, date, or type, and performing calculations to assess potential payouts. This offers insights into claim frequencies and loss patterns.

Advanced queries can integrate data from other sources like customer profiles or historical claims. This comprehensive view aids in determining risk levels and pricing strategies. SQL enables efficient data processing, reducing time spent on manual analysis, and allows insurers to respond more quickly to claims and policyholder needs.
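
A simplified version of such an analysis might look like the following sketch, run through Python's sqlite3 module. The `claims` table, its rows, and the rule of flagging claims above twice the average for their type are all assumptions chosen for illustration, not an industry standard.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE claims (id INTEGER PRIMARY KEY, claim_type TEXT, region TEXT, amount INTEGER);
    INSERT INTO claims VALUES
        (1, 'auto', 'east', 1000), (2, 'auto', 'east', 1200), (3, 'auto', 'west', 800),
        (4, 'auto', 'west', 9000), (5, 'home', 'east', 5000), (6, 'home', 'west', 5500);
""")

# Group claims by type to see frequency and total payout.
summary = conn.execute("""
    SELECT claim_type, COUNT(*) AS n_claims, SUM(amount) AS total_paid
    FROM claims
    GROUP BY claim_type
    ORDER BY claim_type
""").fetchall()

# Flag claims that exceed twice the average amount for their type.
outliers = conn.execute("""
    WITH type_avg AS (
        SELECT claim_type, AVG(amount) AS avg_amount
        FROM claims
        GROUP BY claim_type
    )
    SELECT c.id, c.claim_type, c.amount
    FROM claims AS c
    JOIN type_avg AS t ON t.claim_type = c.claim_type
    WHERE c.amount > 2 * t.avg_amount
    ORDER BY c.id
""").fetchall()

print(summary)
print(outliers)
```

Flagged claims are candidates for manual review rather than proof of fraud; a real threshold would come from the insurer's own data.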

Integrating SQL with Other Technologies

Integrating SQL with other technologies enhances data processing and analysis. This approach improves the ability to perform advanced data tasks, combining SQL’s querying power with other robust tools.

Combining SQL and Python for Advanced Analysis

SQL and Python together enable efficient data manipulation and analysis. This combination is beneficial for data engineers who need precise control over data workflows.

Python, with libraries like Pandas and NumPy, provides data processing capabilities that complement SQL’s powerful querying.

Python programming allows for complex calculations and statistical analysis that SQL alone may struggle with. Data scientists often utilize both SQL for database operations and Python for machine learning algorithms and data visualization.

Scripts can pull data from SQL databases, process it using Python, and then push results back into the database.
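
The pull, process, and push cycle can be sketched as follows. To stay self-contained, this example uses Python's standard `statistics` module in place of Pandas or NumPy; the `readings` and `sensor_stats` tables are hypothetical.

```python
import sqlite3
import statistics

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE readings (sensor TEXT, value REAL);
    INSERT INTO readings VALUES
        ('a', 1.0), ('a', 2.0), ('a', 9.0), ('b', 4.0), ('b', 6.0);
    CREATE TABLE sensor_stats (sensor TEXT PRIMARY KEY, median REAL, stdev REAL);
""")

# Pull: fetch the raw rows with SQL and group them in Python.
by_sensor = {}
for sensor, value in conn.execute("SELECT sensor, value FROM readings"):
    by_sensor.setdefault(sensor, []).append(value)

# Process: median and standard deviation are not built into SQLite,
# so they are computed in Python.
stats = [
    (sensor, statistics.median(values), statistics.pstdev(values))
    for sensor, values in sorted(by_sensor.items())
]

# Push: write the results back with a parameterized insert.
with conn:
    conn.executemany("INSERT INTO sensor_stats VALUES (?, ?, ?)", stats)

stored = conn.execute(
    "SELECT sensor, median, stdev FROM sensor_stats ORDER BY sensor"
).fetchall()
print(stored)
```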

Leveraging SQL with Machine Learning Techniques

SQL’s integration with machine learning opens up new possibilities for predictive analysis and automated decision-making.

Large datasets stored in SQL databases can be directly accessed and used to train machine learning models, enhancing data science projects.

Many frameworks support SQL-based data retrieval, allowing seamless data transfer to machine learning pipelines.

Data scientists often use SQL to preprocess data, cleaning and filtering large datasets before applying machine learning algorithms.

By using SQL queries to create clean, organized datasets, the machine learning process becomes more efficient and effective. This approach streamlines data handling, allowing for quicker iterations and more accurate predictions.
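
Here is a minimal sketch of SQL-side preprocessing ahead of model training, using Python's sqlite3 module. The `customers` table, its columns, and the cleaning rules (drop rows with a missing age, drop negative spend, remove duplicates) are assumptions; the resulting `features` and `labels` lists could then be passed to any machine learning library.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER, age INTEGER, monthly_spend REAL, churned INTEGER);
    INSERT INTO customers VALUES
        (1, 34, 120.0, 0), (2, NULL, 80.0, 1), (3, 51, 40.0, 1),
        (3, 51, 40.0, 1), (4, 28, -5.0, 0), (5, 45, 200.0, 0);
""")

# Clean in SQL: remove duplicates, missing ages, and invalid spend values
# before any data reaches the modeling code.
rows = conn.execute("""
    SELECT DISTINCT id, age, monthly_spend, churned
    FROM customers
    WHERE age IS NOT NULL AND monthly_spend >= 0
    ORDER BY id
""").fetchall()

# Split the cleaned rows into a feature matrix and a label vector.
features = [[age, spend] for _, age, spend, _ in rows]
labels = [churned for *_, churned in rows]

print(features)
print(labels)
```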

SQL in the Modern Development Environment

In today’s tech landscape, SQL plays a crucial role in software development and data engineering, supported by various modern tools. From Integrated Development Environments (IDEs) to cloud-based platforms, these resources offer enhanced functionality for SQL development.

Exploring Integrated Development Environments

Integrated Development Environments (IDEs) are essential for developers working with SQL. They provide features like syntax highlighting, code completion, and error detection. These tools streamline the development process and improve efficiency.

IDEs such as PyCharm, known for Python programming, also support SQL plugins that enhance database management capabilities.

These environments allow developers to work with SQL seamlessly alongside other programming languages, providing a cohesive setup.

Using an IDE, developers can manage their SQL environment more effectively. Integration with version control systems like Git, and with hosting platforms like GitHub, enables collaborative project management and code sharing.

Developing in Cloud Developer Environments

Cloud developer environments offer a flexible and scalable solution for SQL development.

Platforms like GitHub Codespaces allow developers to run their SQL code in the cloud. This provides access to extensive computing resources and storage.

These environments reduce the need for extensive local hardware setups and offer scalability to handle large databases.

They make it easier to develop, test, and deploy SQL applications from anywhere in the world.

Cloud platforms often support advanced features such as real-time collaboration, automated backups, and integration with other cloud services.

This integration helps teams manage projects more efficiently and securely, making them a vital component of modern SQL development.

Advanced Data Visualization and Reporting

Advanced data visualization and reporting require leveraging sophisticated tools and techniques to transform raw data into meaningful insights. These skills are crucial for effectively communicating complex data findings in a digestible manner.

Leveraging SQL for Data Visualization

SQL can be a powerful ally in data visualization. By using SQL, analysts can extract and prepare data from large databases efficiently.

For instance, SQL can filter, aggregate, and join different datasets to create a comprehensive view of the data. This process helps in building data visualizations that highlight critical trends and patterns.

Advanced SQL techniques, such as window functions, enable more complex data manipulation. These functions allow analysts to perform calculations across sets of table rows that are related to the current row, without collapsing those rows or modifying the stored data.

Integrating SQL with visualization tools like Tableau or Microsoft Excel enhances capabilities.

These platforms often allow direct SQL queries to populate dashboards, giving users dynamic and real-time insights.

The combination of SQL’s data processing power and visualization tools’ graphical representation capabilities provides a robust solution for data-driven decision-making.

Generating Reports with SQL and Business Software

SQL can also be essential in generating detailed reports. By using SQL queries, analysts can create structured reports that feature precise metrics and summaries necessary for business processes.

Business software like Microsoft Excel complements SQL by providing a familiar interface for report generation. Excel can connect to databases where SQL retrieves data, allowing for seamless integration of data into formatted reports.

The use of pivot tables in Excel helps in summarizing SQL data outputs efficiently.

Through these tables, complex datasets are converted into interpretable reports, which can be custom-tailored to meet specific business needs.

Other business software, such as Power BI, further enhances reporting by enabling interactive and visually appealing reports.

By exporting SQL query results into these platforms, analysts can deliver reports that are easily accessible and understandable by stakeholders. This integration supports real-time data exploration and informed decision-making.

Learning Path and Resources for SQL Mastery

For advancing SQL skills in data engineering, structured learning paths and resources play a crucial role. Key components include targeted courses and hands-on projects that help in building a strong understanding of advanced SQL.

Navigating SQL Courses and Certifications

To begin mastering SQL, it is essential to choose courses that match one’s skill level, from beginner to intermediate and advanced.

Platforms like Coursera offer advanced SQL courses which cover complex querying and database optimization. Completing these courses often awards a certificate of completion, which can be added to a LinkedIn profile to highlight expertise.

LinkedIn Learning provides structured learning paths where individuals can learn SQL across different databases and data analysis techniques.

These courses help in advancing data careers through comprehensive lessons and practice.

Building a SQL Learning Portfolio

A well-structured learning portfolio is key for demonstrating SQL proficiency. It can include hands-on projects such as data analysis tasks and report generation using SQL.

Websites like LearnSQL.com encourage working on industry-specific projects for sectors like healthcare which can enrich one’s portfolio.

Including a capstone project in a learning portfolio showcases an individual’s ability to solve real-world problems. These projects allow learners to apply SQL skills gained through courses in practical scenarios, an important step towards mastery.

Creating a blog or GitHub repository to share these projects can further enhance visibility to potential employers.

Frequently Asked Questions

Advanced SQL skills are crucial for data engineers. The questions below cover essential concepts, learning resources, and tips for practicing complex queries, all of which matter when handling large datasets efficiently.

What are the essential advanced SQL topics that a data engineer should master?

Data engineers should focus on mastering topics like window functions, recursive queries, and performance tuning. Understanding database design and indexing strategies is also important for building efficient and scalable systems.
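
As one example of a recursive query, the sketch below walks a reporting hierarchy with a recursive CTE, using Python's sqlite3 module. The `employees` table and its rows are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, manager_id INTEGER);
    INSERT INTO employees VALUES
        (1, 'Ada', NULL), (2, 'Grace', 1), (3, 'Linus', 2), (4, 'Margaret', 1);
""")

# The anchor member selects the root (no manager); the recursive member
# repeatedly adds direct reports of rows found so far, tracking depth.
chain = conn.execute("""
    WITH RECURSIVE reports(id, name, depth) AS (
        SELECT id, name, 0 FROM employees WHERE manager_id IS NULL
        UNION ALL
        SELECT e.id, e.name, r.depth + 1
        FROM employees AS e
        JOIN reports AS r ON e.manager_id = r.id
    )
    SELECT name, depth FROM reports ORDER BY depth, name
""").fetchall()

print(chain)
```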

How can one practice SQL skills to handle complex queries in data engineering?

To practice SQL skills, individuals can work on real-world projects and challenges.

Joining online platforms that host SQL competitions or using databases to solve complex problems can significantly improve query handling.

Where can I find quality resources to learn advanced SQL for free?

Many platforms offer free resources to learn advanced SQL, such as online tutorials, coding platforms, and forums where learners can exchange knowledge.

Websites like LearnSQL.com provide comprehensive guides and examples.

What are the differences between SQL for data analysis and SQL for data engineering?

SQL for data analysis focuses on querying data for insights, often using aggregation and reporting tools. In contrast, SQL for data engineering involves designing data architectures and optimizing queries for performance, ensuring data pipelines run smoothly and efficiently.

How do I prepare for data engineering positions that require proficiency in advanced SQL?

Preparation involves studying advanced SQL topics and practicing with sample questions from interview preparation books.

Articles and blog posts that list common SQL interview questions, like those found at Interview Query, are also beneficial.

What are the recommended practices to optimize SQL queries for large datasets?

Optimizing SQL queries for large datasets involves using indexing, partitioning, and efficient join operations.

Reducing unnecessary computations and using appropriate data types can greatly enhance performance.