Understanding Combinatorics in Data Science

Combinatorics, the mathematics of counting, underpins many of the math skills used in data science. Its counting principles make it possible to calculate the probability of different outcomes in data.

Role and Importance of Combinatorics

Combinatorics is essential in data science because it offers tools for solving counting problems. It helps in arranging, selecting, and organizing data efficiently. This is crucial in tasks like feature selection, where identifying the right combination of variables can impact model performance.
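
To make the feature selection point concrete, here is a minimal sketch of how quickly the number of candidate feature subsets grows. The feature names are hypothetical and serve only as an illustration.

```python
from itertools import combinations
from math import comb

# Hypothetical feature names, used only for illustration.
features = ["age", "income", "tenure", "region"]

# Every way to pick 2 features out of 4; order does not matter.
feature_pairs = list(combinations(features, 2))

# Each feature is either in or out of a subset, so there are 2 ** n subsets
# in total (including the empty one).
total_subsets = 2 ** len(features)

# Choosing 10 features out of 50 already gives billions of candidates,
# which is why exhaustive search is rarely practical.
subsets_of_10_from_50 = comb(50, 10)

print(len(feature_pairs), total_subsets, subsets_of_10_from_50)
```

Counts like these are one reason practitioners often turn to greedy or heuristic selection instead of testing every subset.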

Data scientists rely on combinatorics to optimize algorithms by considering different possible combinations of data inputs. This enhances predictive modeling by increasing accuracy and efficiency. Combinatorics also aids in algorithm complexity analysis, helping identify feasible solutions in terms of time and resources.

Fundamental Principles of Counting

Counting rests on two basic rules, the rule of sum and the rule of product, and on two ideas built from them: permutations and combinations.

Permutations consider the arrangement of items where order matters, while combinations focus on the selection of items where order does not matter. These concepts are critical in calculating probabilities in data science.

In practical applications, understanding how to count the outcomes of various events allows data scientists to evaluate models effectively. The principles help build stronger algorithms by refining data input strategies. By mastering these fundamentals, data science practitioners can tackle complex problems with structured approaches, paving the way for innovative solutions.

Mathematical Foundations

Mathematics plays a vital role in data science. Understanding key concepts such as set theory and probability is essential, especially when it comes to functions and combinatorics. These areas provide the tools needed for data analysis and interpretation.

Set Theory and Functions

Set theory is a branch of mathematics that deals with the study of sets, which are collections of objects. It forms the basis for many other areas in mathematics. In data science, set theory helps users understand how data is grouped and related.

Functions, another crucial concept, describe relationships between sets. They map elements from one set to another and are foundational in analyzing data patterns. In combinatorics, functions help in counting and arranging elements efficiently. Functions are often used in optimization and algorithm development in data analysis. Understanding sets and functions allows data scientists to manipulate and interpret large data sets effectively.
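
As a rough illustration of sets and functions in practice, the sketch below uses Python's built-in set type and models a function between two finite sets as a dictionary. The customer IDs and set names are made up for the example.

```python
from itertools import product

# Hypothetical customer IDs in two groups.
newsletter = {101, 102, 103, 104}
purchasers = {103, 104, 105}

both = newsletter & purchasers             # intersection: in both groups
either = newsletter | purchasers           # union: in at least one group
only_newsletter = newsletter - purchasers  # difference: subscribed, never bought

# A function from a finite set A to a set B can be modeled as a dict
# that assigns exactly one element of B to each element of A.
A = ["x", "y", "z"]
B = [0, 1]

# Each element of A independently gets one of len(B) images,
# so there are len(B) ** len(A) distinct functions from A to B.
all_functions = [dict(zip(A, images)) for images in product(B, repeat=len(A))]

print(both, either, only_newsletter, len(all_functions))
```

Counting the functions between two finite sets is itself a small combinatorics problem, which is where the two topics meet.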

Introduction to Probability

Probability is the measure of how likely an event is to occur. It is a key component in statistics and data science, providing a foundation for making informed predictions. In data science, probability helps in modeling uncertainty and variability in data. It is used to analyze trends, assess risks, and make decisions based on data.

Basic concepts in probability include random variables, probability distributions, and expected values. These concepts are applied in machine learning algorithms that require probabilistic models. Probability aids in understanding patterns and correlations within data. Combinatorics often uses probability to calculate the likelihood of specific combinations or arrangements, making it critical for data-related decisions.
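
The link between counting and probability can be sketched with a small sampling example. The scenario (a batch of 10 items with 4 defective, sampled 3 at a time) and the function name are assumptions chosen for illustration.

```python
from fractions import Fraction
from math import comb

def prob_exactly_k_defective(total, defective, sample, k):
    """Probability that a random sample (without replacement) contains
    exactly k defective items."""
    favorable = comb(defective, k) * comb(total - defective, sample - k)
    return Fraction(favorable, comb(total, sample))

# Hypothetical batch: 10 items, 4 defective, draw 3, ask for exactly 1 defective.
p_one_defective = prob_exactly_k_defective(total=10, defective=4, sample=3, k=1)

# Expected value of a fair six-sided die: each face weighted by probability 1/6.
expected_die = sum(Fraction(face, 6) for face in range(1, 7))

print(p_one_defective, expected_die)
```

Using Fraction keeps the results exact, which makes it easier to check the arithmetic by hand.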

Mastering Permutations and Combinations

Permutations and combinations are essential topics in math, especially useful in data science. Understanding these concepts helps in predicting and analyzing outcomes efficiently. Mastery in these areas offers an edge in solving complex problems logically.

Understanding Permutations

Permutations are the different ways of arranging a set of objects, so the focus is on the order of items. The number of ways to arrange n distinct items is n! (n factorial), the product of the integers from 1 to n. For instance, the three letters A, B, and C can be arranged in 3! = 6 ways: ABC, ACB, BAC, BCA, CAB, and CBA.
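
The A, B, C example can be reproduced with Python's standard library. This is a small sketch rather than the only way to enumerate arrangements.

```python
from itertools import permutations
from math import factorial

letters = ["A", "B", "C"]

# itertools.permutations yields every ordering of the input items.
arrangements = ["".join(p) for p in permutations(letters)]

print(arrangements)
print(len(arrangements) == factorial(len(letters)))
```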

Permutations matter wherever order does, as in task scheduling or ranking results. When only r items are selected and arranged from a larger set of n, the count is nPr = n! / (n-r)!. This is useful for generating all possible sequences in algorithms or decision-making processes.
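
For the case where only some items are arranged, the following sketch ranks a first and second place among four candidates. The model names are hypothetical, and math.perm requires Python 3.8 or later.

```python
from itertools import permutations
from math import perm

# Hypothetical candidates to be ranked; only the top two positions matter.
candidates = ["model_a", "model_b", "model_c", "model_d"]

# Ordered selections of 2 out of 4: (first place, second place).
top_two_rankings = list(permutations(candidates, 2))

# math.perm(n, r) computes n! / (n - r)! directly.
print(len(top_two_rankings), perm(4, 2))
```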

Exploring Combinations

Combinations focus on selecting items from a group where order does not matter. The formula used is nCr = n! / [r! (n-r)!], where n is the total number of items and r is the number to choose. An example is choosing two fruits from a set of apple, banana, and cherry, leading to the pairs: apple-banana, apple-cherry, and banana-cherry.
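
The fruit example above can be checked in a few lines. This sketch uses itertools.combinations to list the selections and math.comb to count them.

```python
from itertools import combinations
from math import comb

fruits = ["apple", "banana", "cherry"]

# Unordered selections of 2 fruits; ("apple", "banana") and
# ("banana", "apple") count as the same pair.
fruit_pairs = list(combinations(fruits, 2))

print(fruit_pairs)
print(comb(3, 2))  # 3! / (2! * 1!) = 3
```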

These calculations help in evaluating possibilities in scenarios like lotteries or team selection. Combinatorial algorithms aid in optimizing such selections, saving time and improving accuracy in complex decisions. This approach streamlines processes in fields ranging from coding to systematic sampling methods.

Combinations With Repetitions

Combinations with repetitions allow items to be selected more than once. The formula becomes (n+r-1)Cr, where n is the number of options and r is the number chosen. An example is choosing three scoops of ice cream from two flavors, vanilla and chocolate, which allows combinations like vanilla-vanilla-chocolate. Here n = 2 and r = 3, so there are 4C3 = 4 possible selections.
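
A short sketch of the ice cream example, assuming exactly two flavors and three scoops:

```python
from itertools import combinations_with_replacement
from math import comb

flavors = ["vanilla", "chocolate"]
scoops = 3

# Unordered selections in which a flavor may be chosen more than once.
servings = list(combinations_with_replacement(flavors, scoops))

# (n + r - 1) choose r, with n = 2 flavors and r = 3 scoops.
expected_count = comb(len(flavors) + scoops - 1, scoops)

print(servings)
print(expected_count)
```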

This method is valuable in scenarios like distributing identical items or computing possible outcomes with repeated elements in a dataset. Understanding repetitive combinations is key to fields involving resource allocation or model simulations, providing a comprehensive look at potential outcomes and arrangements.

Advanced Combinatorial Concepts

In advanced combinatorics, two key areas are often emphasized: graph theory and complex counting techniques. These areas have valuable applications in algorithms and data science, providing a robust foundation for solving problems related to networks and intricate counts.

Graph Theory

Graph theory is a cornerstone of combinatorics that deals with the study of graphs, which are mathematical structures used to model pairwise relations between objects. It includes various concepts like vertices, edges, and paths. Graph theory is foundational in designing algorithms for data science, particularly in areas like network analysis, where understanding connections and paths is crucial.

Algorithms like depth-first search and breadth-first search are essential tools in graph theory. They traverse or search a graph in time proportional to its number of vertices and edges. Breadth-first search, for example, finds shortest paths in unweighted graphs, and both traversals serve as building blocks in network flow optimization and in clustering tasks such as finding connected components, all of which matter when handling complex data sets in data science.
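
As an illustration, here is a minimal breadth-first search that returns a path with the fewest edges in an unweighted graph. The adjacency-list format and the small example graph are assumptions made for this sketch.

```python
from collections import deque

def bfs_shortest_path(graph, start, goal):
    """Return a path with the fewest edges from start to goal, or None."""
    parents = {start: None}   # also serves as the visited set
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk back through the parents to rebuild the path.
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None

# Hypothetical undirected network stored as an adjacency list.
graph = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}

path = bfs_shortest_path(graph, "A", "E")
print(path)
```

Because the search expands nodes in order of their distance from the start, the first time it reaches the goal it has used the fewest possible edges. Weighted graphs need a different algorithm, such as Dijkstra's.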

Complex Counting Techniques

Complex counting techniques are critical for solving advanced combinatorial problems where simple counting doesn’t suffice. Methods like permutations, combinations, and the inclusion-exclusion principle play essential roles. These techniques help count possibilities in situations with constraints, which is common in algorithm design and data science.
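
The inclusion-exclusion principle can be sketched with a classic exercise: counting the integers from 1 to 100 that are divisible by 2, 3, or 5. The function name is hypothetical, and the sketch assumes the divisors are pairwise coprime so that their product equals their least common multiple.

```python
from itertools import combinations
from math import prod

def count_divisible_by_any(limit, divisors):
    """Count integers in 1..limit divisible by at least one divisor.

    Assumes the divisors are pairwise coprime.
    """
    total = 0
    for size in range(1, len(divisors) + 1):
        # Add single sets, subtract pairwise overlaps, add triple overlaps, ...
        sign = 1 if size % 2 == 1 else -1
        for subset in combinations(divisors, size):
            total += sign * (limit // prod(subset))
    return total

count = count_divisible_by_any(100, [2, 3, 5])

# Brute-force check of the same quantity.
brute_force = sum(1 for n in range(1, 101) if n % 2 == 0 or n % 3 == 0 or n % 5 == 0)

print(count, brute_force)
```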

Another important approach is generating functions, which provide a way to encode sequences and find patterns or closed forms. Recurrence relations are also significant, offering ways to define sequences based on previous terms. These techniques together offer powerful tools for tackling combinatorial challenges that arise in data analysis and algorithm development, providing insight into the structured organization of complex systems.
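
Both ideas can be illustrated with binomial coefficients. The sketch below computes them once through Pascal's recurrence and once as the coefficients of the generating function (1 + x)^n. The helper names are assumptions for this example.

```python
from functools import lru_cache
from math import comb

@lru_cache(maxsize=None)
def binomial(n, k):
    """Binomial coefficient via the recurrence C(n,k) = C(n-1,k-1) + C(n-1,k)."""
    if k < 0 or k > n:
        return 0
    if k == 0 or k == n:
        return 1
    return binomial(n - 1, k - 1) + binomial(n - 1, k)

def poly_multiply(p, q):
    """Multiply two polynomials given as coefficient lists (lowest degree first)."""
    result = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            result[i + j] += a * b
    return result

# Generating function: the coefficient of x^k in (1 + x)^5 is C(5, k).
coefficients = [1]
for _ in range(5):
    coefficients = poly_multiply(coefficients, [1, 1])

print(coefficients)
print([binomial(5, k) for k in range(6)])
```

Memoizing the recurrence with lru_cache avoids recomputing the same subproblems, which is the usual concern with naive recursive definitions.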

Algebraic Skills for Data Science

Algebraic skills are crucial in data science, providing tools to model and solve real-world problems. Essential components include understanding algebraic structures and using linear algebra concepts like matrices and vectors.

Understanding Algebraic Structures

Algebra serves as the foundation for various mathematical disciplines used in data science. It involves operations and symbols to represent numbers and relationships. Key concepts include variables, equations, and functions.

Variables are symbols that stand for unknown values. In data analysis, these could represent weights in neural networks or coefficients in regression models.

Functions express relationships between variables. Understanding how to manipulate equations is important for tasks like finding the roots of a polynomial or optimizing functions.

Algebraic structures like groups, rings, and fields provide a framework for operations. They help in understanding systems of equations and their solutions.

Linear Algebra and Matrices

Linear algebra is a vital part of data science, dealing with vector spaces and linear mappings. It includes the study of matrices and vectors.

Matrices are rectangular arrays of numbers and are used to represent data and transformations. They are essential when handling large datasets, especially in machine learning where operations like matrix multiplication enable efficient computation of data relationships.

Vectors, on the other hand, are objects representing quantities with magnitude and direction. They are used to model data points, support data visualization, and calculate distances between points in space.

Operations involving matrices and vectors, such as addition, subtraction, and multiplication, form the computational backbone of many algorithms including those in linear regression and principal component analysis. Understanding these operations allows data scientists to manipulate high-dimensional data effectively.
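
To show what these operations do, here is a small pure-Python sketch. In practice a library such as NumPy would normally be used. Plain lists are used here only to keep the example self-contained, and the helper names are assumptions.

```python
from math import dist

def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in columns] for row in a]

# A matrix as a transformation: rotate a 2-D point by 90 degrees counterclockwise.
rotate_90 = [[0, -1],
             [1, 0]]
point = [3, 4]

rotated = mat_vec(rotate_90, point)       # [-4, 3]
half_turn = mat_mul(rotate_90, rotate_90)  # rotating twice gives 180 degrees

# Euclidean distance from the origin to the point (math.dist, Python 3.8+).
distance = dist([0, 0], point)             # 5.0

print(rotated, half_turn, distance)
```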

Integrating Calculus and Combinatorics

Integrating calculus with combinatorics allows for robust analysis of complex mathematical and scientific problems. Concepts such as functions, limits, and multivariable calculus connect the two fields and provide essential tools for data analysis and problem-solving.

Functions and Limits

Functions serve as a critical link between calculus and combinatorics. They map input values to outputs and are crucial in determining trends and patterns in data sets. Combinatorial functions often involve counting and arrangement, while calculus introduces the continuous aspect to these discrete structures.

In this context, limits help in understanding behavior as variables approach specific values. Limits are used to study the growth rates of combinatorial structures, providing insights into their behavior at infinity or under certain constraints. They are essential for analyzing sequences and understanding how they converge or diverge.
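
One well-known example of a limit describing combinatorial growth is Stirling's approximation, n! ≈ sqrt(2πn) (n/e)^n, where the ratio of the two sides tends to 1 as n grows. The sketch below checks this numerically for a few values of n chosen for illustration.

```python
from math import e, factorial, pi, sqrt

def stirling(n):
    """Stirling's approximation to n!."""
    return sqrt(2 * pi * n) * (n / e) ** n

# Ratio of the exact factorial to the approximation for growing n.
ratios = {n: factorial(n) / stirling(n) for n in (1, 5, 10, 50, 100)}

for n, ratio in ratios.items():
    print(n, round(ratio, 5))
```

The ratio approaches 1 from above, which is what it means for the approximation to capture the growth rate of n! in the limit.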

Multivariable Calculus

Multivariable calculus extends the principles of calculus to functions with more than one variable. It plays a significant role in analyzing multi-dimensional data which is common in data science. In combinatorics, multivariable calculus aids in exploring spaces with higher dimensions and their complex interactions.

Partial derivatives and gradients are important tools from multivariable calculus. They allow the examination of how changes in input variables affect the output, facilitating deeper interpretation of data. This is especially useful when dealing with network analysis or optimization problems, where multiple variables interact in complex ways.
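
A gradient can be approximated numerically by nudging one input at a time. The following sketch uses central differences. The loss function is a made-up example, and the step size h is an assumption that suits this function.

```python
def numerical_gradient(f, point, h=1e-6):
    """Approximate the gradient of f at point using central differences."""
    gradient = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h
        backward[i] -= h
        # Partial derivative with respect to the i-th variable.
        gradient.append((f(forward) - f(backward)) / (2 * h))
    return gradient

def loss(w):
    # Hypothetical two-variable function: w0^2 + 3 * w0 * w1
    return w[0] ** 2 + 3 * w[0] * w[1]

gradient = numerical_gradient(loss, [1.0, 2.0])
print(gradient)
```

The analytic partial derivatives are 2·w0 + 3·w1 and 3·w0, so at the point (1, 2) the result should be close to (8, 3).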

Statistics and Probability in Data Science

Statistics and probability are essential in data science to analyze data and draw conclusions. Techniques like hypothesis testing and Bayes’ Theorem play a crucial role in making data-driven decisions and predictions.

Statistical Analysis Techniques

Statistical analysis involves using data to find trends, patterns, or relationships. It’s crucial for tasks like hypothesis testing, which helps determine if a change in data is statistically significant or just random. Key methods include descriptive statistics, which summarize data features, and inferential statistics, which make predictions or inferences about a population from a sample.

Hypothesis testing often uses tests like t-tests or chi-square tests to look at data differences. Regression analysis is another powerful tool within statistical analysis. It examines relationships between variables, helping predict outcomes. This makes statistical techniques vital for understanding data patterns and making informed decisions in data science projects.
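
Hypothesis testing also connects directly to combinatorics. The sketch below is an exact permutation test, not the t-test or chi-square test mentioned above. It is swapped in because it needs only the standard library and works by counting combinations. The data values and function name are hypothetical, and the function assumes integer measurements.

```python
from fractions import Fraction
from itertools import combinations

def exact_permutation_test(group_a, group_b):
    """Two-sided exact permutation test on the difference in means.

    Assumes integer data so that Fraction arithmetic stays exact.
    """
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    total = sum(pooled)

    def abs_mean_diff(sum_a):
        return abs(Fraction(sum_a, n_a) - Fraction(total - sum_a, n_b))

    observed = abs_mean_diff(sum(group_a))
    extreme = 0
    n_splits = 0
    # Enumerate every way to relabel n_a of the pooled values as "group A".
    for indices in combinations(range(len(pooled)), n_a):
        n_splits += 1
        if abs_mean_diff(sum(pooled[i] for i in indices)) >= observed:
            extreme += 1
    return Fraction(extreme, n_splits)

# Hypothetical measurements for two small groups.
treatment = [12, 14, 15, 16]
control = [8, 9, 10, 11]

p_value = exact_permutation_test(treatment, control)
print(p_value, float(p_value))
```

The p-value is the share of relabelings that produce a difference at least as large as the observed one. Enumerating every split is only feasible for small samples, because the number of splits grows as a binomial coefficient.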

Bayes’ Theorem and Its Applications

Bayes’ Theorem provides a way to update the probability of a hypothesis based on new evidence. It’s central in decision-making under uncertainty and often used in machine learning, particularly in Bayesian inference.

The theorem helps calculate the likelihood of an event or hypothesis by considering prior knowledge and new data. This approach is used in real-world applications like spam filtering, where probabilities are updated as more data becomes available.
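
The spam filtering idea can be sketched as a direct application of the formula P(H | E) = P(E | H) P(H) / P(E). All probabilities below are invented for illustration, and the function name is an assumption.

```python
def posterior(prior, likelihood, false_positive_rate):
    """P(hypothesis | evidence) from Bayes' Theorem.

    prior:               P(hypothesis)
    likelihood:          P(evidence | hypothesis)
    false_positive_rate: P(evidence | not hypothesis)
    """
    evidence = likelihood * prior + false_positive_rate * (1 - prior)
    return likelihood * prior / evidence

# Hypothetical numbers: 20% of mail is spam; a trigger word appears in
# 60% of spam and in 5% of legitimate mail.
after_one_word = posterior(prior=0.2, likelihood=0.6, false_positive_rate=0.05)

# Updating again: the first posterior becomes the prior for new evidence
# (this treats the two pieces of evidence as independent, a simplification).
after_two_words = posterior(prior=after_one_word, likelihood=0.6, false_positive_rate=0.05)

print(round(after_one_word, 4), round(after_two_words, 4))
```

Feeding each posterior back in as the next prior is the updating step that the paragraph above describes.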

Bayes’ Theorem also aids in data analysis by allowing analysts to incorporate expert opinions, making it a versatile tool for improving predictions in complex situations.

Computational Aspects of Data Science

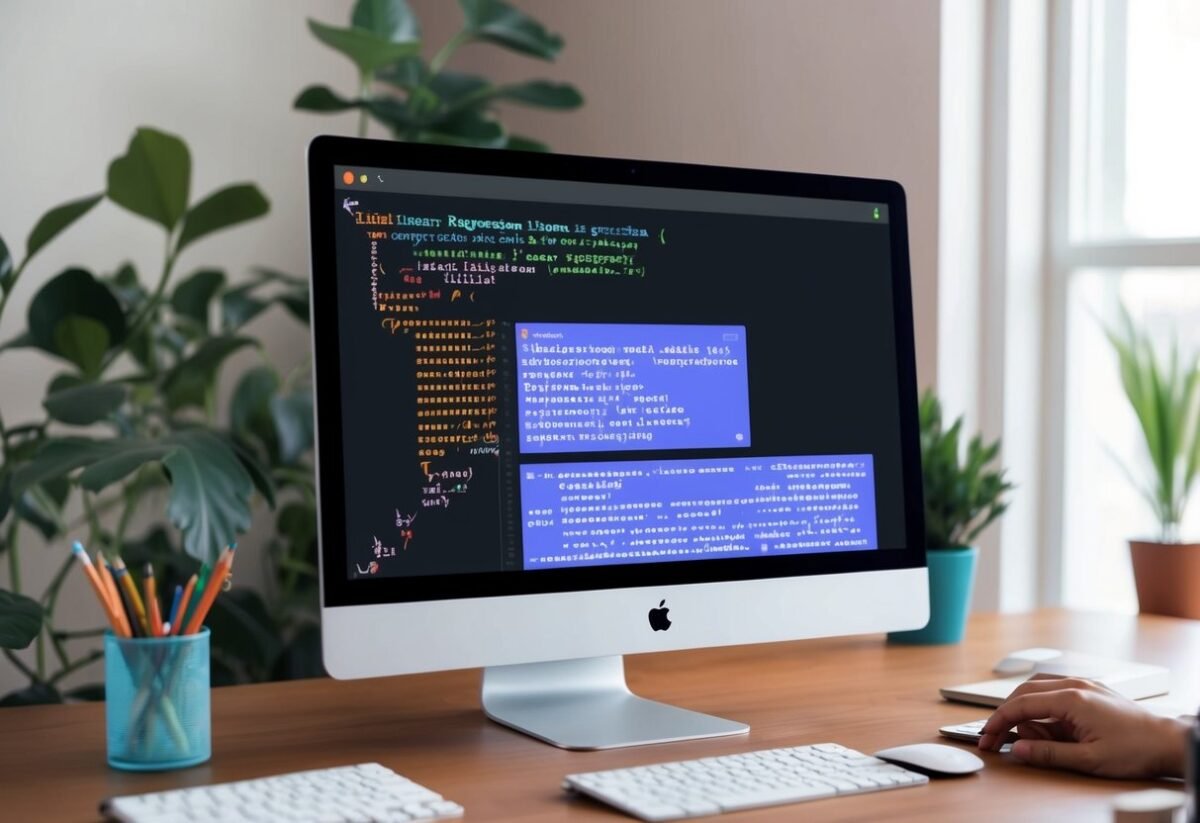

Computational aspects of data science focus on creating and improving algorithms, while ensuring they perform efficiently. Mastery in these areas advances the ability to process and analyze vast data sets effectively.

Algorithm Design

Designing robust algorithms is crucial in data science. Algorithms serve as step-by-step procedures that solve data-related problems and are central to the discipline. They help in tasks such as sorting, searching, and optimizing data.

A key element is understanding algorithmic complexity: how running time and memory use grow as the data scales.

In computer science, Python is a popular language for creating algorithms. Its versatility and vast libraries make it a preferred choice for students and professionals. Python’s simplicity allows for quick prototyping and testing, which is valuable in a fast-paced environment where changes are frequent.

Efficiency in Data Analysis

Efficiency in data analysis involves processing large volumes of data quickly and accurately. Efficient algorithms and data structures play a significant role in streamlining this process. The goal is to minimize resource use such as memory and CPU time, which are critical when dealing with big data.

Python programming offers various libraries like NumPy and pandas that enhance efficiency. These tools allow for handling large data sets with optimized performance. Techniques such as parallel processing and vectorization further assist in achieving high-speed analysis, making Python an asset in data science.

Applying Machine Learning

Applying machine learning requires grasping core algorithms and leveraging advanced models like neural networks. Understanding these concepts is crucial for success in data-driven fields such as data science.

Understanding Machine Learning Algorithms

Machine learning algorithms are essential tools in data science because they identify patterns within data and adjust as more data becomes available. Regression methods are a key example: linear regression is prominent for its simplicity in modeling relationships between variables. It predicts numeric responses and can be a starting point for more complex analyses.
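
For a sense of what fitting a linear regression involves, here is a minimal sketch of ordinary least squares for a single input variable, written in plain Python. The data points and function name are hypothetical. Real projects would typically use a library such as scikit-learn or statsmodels.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums around the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data lying exactly on the line y = 2x + 1.
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]

slope, intercept = fit_line(xs, ys)
prediction = slope * 6 + intercept  # predict the response for a new input

print(slope, intercept, prediction)
```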

Machine learning algorithms aim to improve with experience. They analyze input data to make predictions or decisions without being explicitly programmed. Algorithms are at the core of machine learning, enabling computers to learn from and adapt to new information over time.

Neural Networks and Advanced Models

Neural networks are central to advanced machine learning models. Loosely inspired by the brain, they consist of layers of interconnected nodes, or “neurons.” Each node processes inputs and contributes to the network’s learning capability. Their strength lies in handling large datasets and complex patterns. Neural networks are crucial in fields like image and speech recognition and serve as the backbone of deep learning models.

Neural networks can be further expanded into more sophisticated architectures. These include convolutional neural networks (CNNs) for image data and recurrent neural networks (RNNs) for sequential data, like time series. By adapting and scaling these models, practitioners can tackle a range of challenges in machine learning and data science.

Data Analytics and Visualization

Data analytics and visualization are key in transforming raw data into actionable insights. Understanding analytical methods and the role of visuals can greatly enhance decision-making and storytelling.

Analytical Methods

Analytical methods form the backbone of data analysis. These methods include techniques such as statistical analysis, machine learning, and pattern recognition. Statistical analysis helps in identifying trends and making predictions based on data sets. Tools like regression analysis allow analysts to understand relationships within data.

Machine learning brings in a predictive dimension by providing models that can learn from data to make informed predictions. This involves using algorithms to detect patterns and insights without being explicitly programmed. In data analytics, predictive analytics uses historical data to anticipate future outcomes.

The use of effective analytical methods can lead to improved efficiency in processes and innovative solutions to complex problems.

The Power of Data Visualization

Data visualization is a powerful tool that enables the representation of complex data sets in a more digestible format. Visualizations such as charts, graphs, and heatmaps help users understand trends and patterns quickly. Work such as "Visualization and Experiential Learning of Mathematics for Data Analytics" shows how visuals can also strengthen the mathematical skills needed for analytics.

Effective visualization can highlight key insights that may not be immediately obvious from raw data. This makes it easier for decision-makers to grasp important information. Pictures speak volumes, and in data analytics, the right visualization turns complicated datasets into clear, actionable insights. Visualization not only aids in presenting data but also plays a crucial role in the analysis process itself by revealing hidden trends.

Paths to Learning Data Science

There are multiple pathways to becoming skilled in data science. Exploring courses and certifications provides a structured approach, while self-directed strategies cater to individual preferences.

Courses and Certifications

For those starting out or even experienced learners aiming for advanced knowledge, enrolling in courses can be beneficial. Institutions like the University of California San Diego offer comprehensive programs. These courses cover essential topics such as machine learning and data analysis techniques.

Certifications validate a data scientist’s skills and boost job prospects. They often focus on practical knowledge and can serve as a benchmark for employers. Many platforms offer these courses, making them accessible globally. Learners gain updated knowledge and practical skills needed for real-world applications.

Self-Directed Learning Strategies

Self-directed learning is suitable for those who prefer a flexible approach. Learners can explore resources like online tutorials, videos, and textbooks at their own pace. Websites like Codecademy provide paths specifically designed for mastering data science.

Experimentation and personal projects help deepen understanding and application. Engaging in forums and study groups can offer support and insight. For beginners, starting with fundamental concepts before moving to advanced topics is advisable. This approach allows learners to structure their learning experience uniquely to their needs and goals.

Assessing Knowledge in Data Science

Evaluating a person’s expertise in data science involves multiple methods.

Assessments are key. These can include quizzes or exams focusing on core concepts such as statistics and data analysis. For example, the ability to interpret statistical results and apply them to real-world scenarios is often tested.

Practical tasks are another way to gauge skills. These tasks might include analyzing datasets or building models. They demonstrate how well an individual can apply theoretical knowledge to practical problems.

Data analysis projects can be used as assessments. Participants may be asked to explore data trends, make predictions, or draw conclusions. These projects often require the use of tools like Python or R, which are staples in data science work.

Understanding of AI is also important. As AI becomes more integrated into data science, assessing knowledge in this area can include tasks like creating machine learning models or using AI libraries.

Peer reviews can be helpful in assessing data science proficiency. They allow others to evaluate the individual’s work, providing diverse perspectives and feedback.

Maintaining a portfolio can help in assessments. It showcases a variety of skills, such as past projects and analyses, highlighting one’s capabilities in data science.

Frequently Asked Questions

Combinatorics plays a vital role in data science, helping to solve complex problems by analyzing arrangements and counts. Below are answers to important questions about combinatorics and its application in data science.

What are the foundational combinatorial concepts needed for data science?

Foundational concepts in combinatorics include permutations and combinations, which are essential for understanding the arrangement of data. Additionally, understanding how to apply these concepts to finite data structures is crucial in data science for tasks like probabilistic modeling and sampling.

How does mastering combinatorics benefit a data scientist in their work?

Combinatorics enhances a data scientist’s ability to estimate the number of variations possible in a dataset. This is key for developing efficient algorithms and performing thorough data analysis, enabling them to make sound decisions when designing experiments and interpreting results.

Are there any recommended online courses for learning combinatorics with a focus on data science applications?

For those looking to learn combinatorics in the context of data science, the Combinatorics and Probability course on Coursera offers a comprehensive study suited for these applications.

What are some free resources available for learning combinatorics relevant to data science?

Free resources include online platforms like Coursera, which offers foundational courses in math skills for data science, thereby building a strong combinatorial background.

Which mathematical subjects should be studied alongside combinatorics for a comprehensive understanding of data science?

Alongside combinatorics, it’s beneficial to study statistics, linear algebra, and calculus. These subjects are integral to data science as they provide the tools needed for data modeling, analysis, and interpretation.

How can understanding combinatorics improve my ability to solve data-driven problems?

By mastering combinatorics, one can better dissect complex problems and explore all possible solutions. This helps in optimizing strategies to tackle data-driven problems. It also boosts problem-solving skills by considering various outcomes and paths.