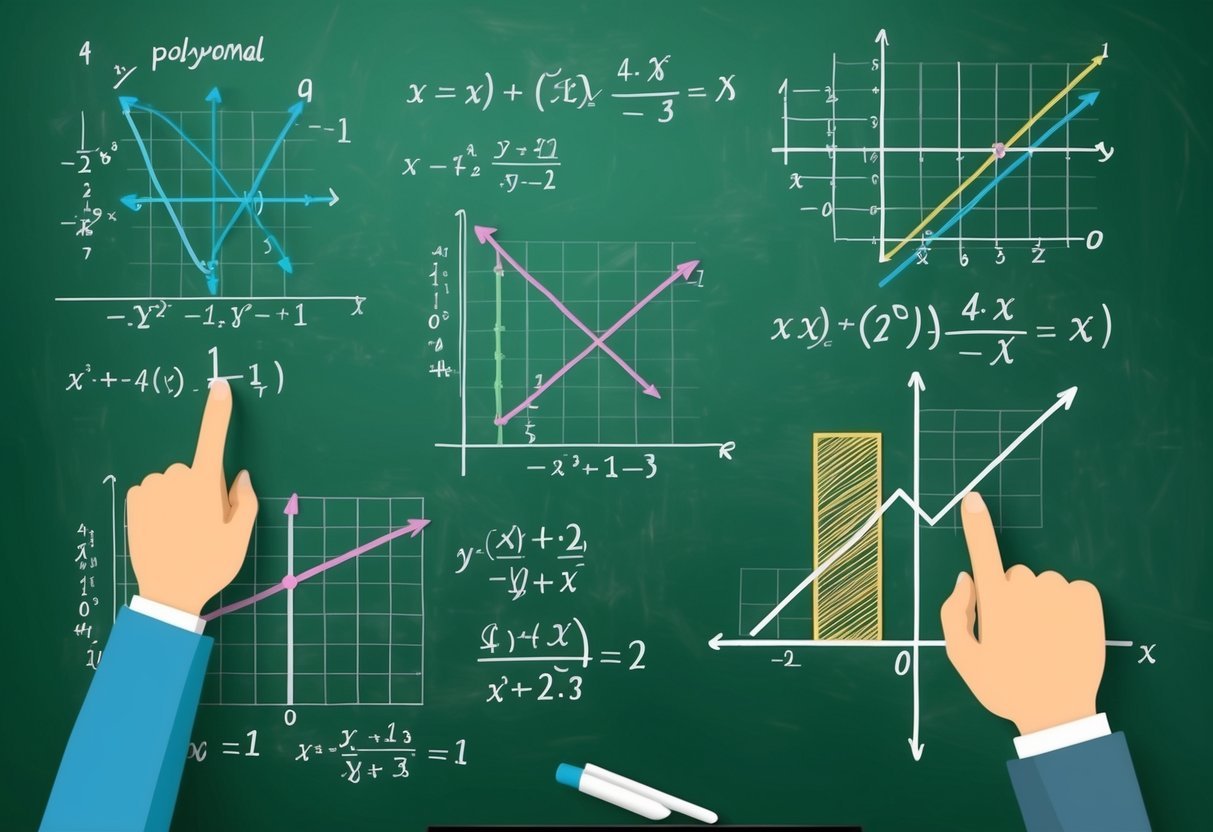

Understanding Polynomial Regression

Polynomial regression is essential for modeling complex relationships. It goes beyond linear regression by using polynomial expressions to better fit curves in data, capturing non-linear patterns effectively.

Defining Polynomial Regression

Polynomial regression is a type of regression analysis where the relationship between the independent variable \(x\) and the dependent variable \(y\) is modeled as an \(n\)-th degree polynomial. The general equation is:

\[
y = \beta_0 + \beta_1 x + \beta_2 x^2 + \dots + \beta_n x^n
\]

Here, each \(\beta_i\) is a coefficient that is estimated during training. This approach allows the model to capture non-linear relationships, which makes it more flexible than simple linear regression.

While linear regression fits a straight line, polynomial regression can fit curves, making it suitable for datasets where the change in \(y\) relative to \(x\) isn’t constant.

Contrasting Polynomial and Linear Regression

Linear regression assumes that there’s a linear relationship between the input variables and the predicted output. Its equation is straightforward: \(y = \beta_0 + \beta_1 x\).

By contrast, polynomial regression includes polynomial terms, allowing for the modeling of curves rather than straight lines. This flexibility helps in situations where trends in the data aren’t adequately captured by a straight line.

Polynomial regression, however, requires careful consideration to avoid overfitting, which occurs when the model learns noise rather than the actual pattern, often due to a polynomial of too high a degree.

Significance of Polynomial Features

Polynomial features are used to transform the input variables and introduce non-linearity into the model. By creating new features from the original ones, such as squares or cubes of the variables, the regression model gains the ability to fit non-linear functions.

The inclusion of polynomial features can substantially enhance a model’s performance on complex, real-world datasets with non-linear interactions. It is crucial to balance the degree of the polynomial used, as higher degrees can lead to overfitting.

Employing polynomial regression can be particularly useful in fields like physics and finance where relationships between variables are rarely linear.

Fundamentals of Polynomial Theory

Polynomial theory involves understanding mathematical expressions that incorporate variables and coefficients. These expressions can take various forms and complexities, providing a foundation for polynomial regression which models complex data relationships.

Exploring Degree of Polynomial

The degree of a polynomial is a key concept and refers to the highest power of the variable present in the polynomial expression. For example, in a quadratic polynomial like \(3x^2 + 2x + 1\), the degree is 2.

Higher-degree polynomials can model more complex patterns, but may also risk overfitting in data analysis.

A polynomial of degree \(n\) has at most \(n\) real roots and at most \(n - 1\) turning points, so the degree bounds how often the fitted curve can change direction. This gives a rough indication of how flexible the model will be in fitting data.
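As a small illustration of how the degree bounds the number of roots and turning points, the sketch below uses NumPy's `numpy.polynomial.Polynomial` class. The cubic \(x^3 - 3x\) is an arbitrary example chosen here, not something taken from a dataset.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Example cubic x^3 - 3x; Polynomial takes coefficients from lowest to highest power.
p = Polynomial([0.0, -3.0, 0.0, 1.0])

degree = p.degree()  # highest power in the polynomial

# All roots are real for this particular example, so taking .real loses nothing.
roots = np.sort(p.roots().real)                   # at most `degree` real roots
turning_points = np.sort(p.deriv().roots().real)  # at most `degree - 1` turning points

print(degree)          # 3
print(roots)           # three values near -1.732, 0, 1.732
print(turning_points)  # two values near -1 and 1
```

The turning points are found as the roots of the derivative, which is why a degree-3 curve can bend back on itself at most twice.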

Using a higher degree typically requires more caution and understanding of the data structure.

Interpreting Coefficients and Polynomial Terms

Coefficients in polynomials are constants that multiply the powers of the variable. In the expression \(4x^3 - 3x^2 + 2x - 1\), the coefficients are 4, -3, 2, and -1. These define the contribution each term makes to the polynomial’s overall value at any given point.

Each polynomial term contributes differently based on both its coefficient and degree. The impact of these terms on the shape and behavior of the polynomial is crucial.

Careful analysis of coefficients helps predict how altering them affects polynomial curves. This balance allows for the practical application of polynomial models in real-world situations while ensuring accuracy and relevance.
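To make the role of each coefficient concrete, here is a minimal sketch that evaluates \(4x^3 - 3x^2 + 2x - 1\) with `numpy.polyval` and breaks the value at \(x = 2\) into per-term contributions. The evaluation points are arbitrary choices for illustration.

```python
import numpy as np

# Coefficients of 4x^3 - 3x^2 + 2x - 1, highest power first (np.polyval's convention).
coeffs = [4, -3, 2, -1]
powers = [3, 2, 1, 0]

x = np.array([0.0, 1.0, 2.0])
values = np.polyval(coeffs, x)

# Contribution of each term at x = 2: coefficient times x raised to the term's power.
terms_at_2 = [c * 2.0 ** p for c, p in zip(coeffs, powers)]

print(values.tolist())  # [-1.0, 2.0, 23.0]
print(terms_at_2)       # [32.0, -12.0, 4.0, -1.0]
```

At \(x = 2\) the cubic term already dominates the sum, which is typical: the highest-degree term controls the curve's behavior for large \(|x|\).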

Preparing Training Data

Effectively preparing training data is essential for building a reliable polynomial regression model. This involves several techniques and best practices to ensure the data is ready for analysis, including data cleaning and transforming features.

Data Preparation Techniques

One of the first steps in preparing data is collecting and organizing it into a structured format, often as a dataframe.

Ensuring the data is clean is crucial—this means handling missing values, outliers, and any irrelevant information. Techniques such as normalization or scaling may be applied to adjust feature ranges.

Missing Values: Use strategies like mean imputation or deletion.

Outliers: Identify using z-scores or IQR methods, then address them by transformation or removal.

These techniques ensure the data is consistent and suitable for modeling.
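The cleaning steps above can be sketched with pandas as follows. The toy dataframe, its column names, and the 1.5 × IQR threshold are assumptions made for this example; real data may call for different choices.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data: one missing x value and one obvious outlier in y.
df = pd.DataFrame({
    "x": [1.0, 2.0, np.nan, 4.0, 5.0, 6.0],
    "y": [1.1, 3.9, 9.2, 15.8, 25.1, 400.0],
})

# Missing values: mean imputation on the x column.
df["x"] = df["x"].fillna(df["x"].mean())

# Outliers: keep rows whose y lies within 1.5 * IQR of the quartiles.
q1, q3 = df["y"].quantile([0.25, 0.75])
iqr = q3 - q1
in_range = df["y"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
clean = df[in_range].reset_index(drop=True)

# Scaling: standardize x to zero mean and unit variance.
clean["x_scaled"] = (clean["x"] - clean["x"].mean()) / clean["x"].std()

print(clean)
```

Removal is only one way to treat outliers; a transformation such as a logarithm may be preferable when the extreme values are genuine measurements.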

Feature Engineering Best Practices

Feature engineering transforms raw data into meaningful inputs for the model.

Polynomial regression benefits from creating polynomial features, which involve raising existing features to various powers to capture non-linear relationships.

When there are several input variables, consider interaction terms, which multiply variables together to capture their joint effect. Because every added term increases the feature count, techniques like PCA can help reduce dimensionality if a model ends up with too many features.

Carefully engineered features improve the model’s performance and lead to better predictions while limiting overfitting. Balancing feature complexity and relevance is key to success in polynomial regression.
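As a minimal sketch of the difference between full polynomial features and interaction terms, the example below expands a single hypothetical sample with two features, a = 2 and b = 3, using scikit-learn's `PolynomialFeatures`. The sample values are made up for illustration.

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures

# One hypothetical sample with two features, a = 2 and b = 3.
X = np.array([[2.0, 3.0]])

# Full degree-2 expansion: a, b, a^2, a*b, b^2 (constant column omitted).
full = PolynomialFeatures(degree=2, include_bias=False).fit_transform(X)

# Interaction terms only: a, b, a*b (no pure powers such as a^2).
inter = PolynomialFeatures(
    degree=2, interaction_only=True, include_bias=False
).fit_transform(X)

print(full.tolist())   # [[2.0, 3.0, 4.0, 6.0, 9.0]]
print(inter.tolist())  # [[2.0, 3.0, 6.0]]
```

Two inputs already become five columns at degree 2, which shows how quickly the feature count grows with more inputs or higher degrees.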

Utilizing Python Libraries

Python is a powerful tool for polynomial regression, offering several libraries that simplify the process. These libraries help with creating models and making data visualizations to understand trends and patterns. Let’s explore how scikit-learn (imported as sklearn) and the plotting libraries Matplotlib and Seaborn can be used effectively.

Leveraging Sklearn and PolynomialFeatures

Scikit-learn (imported as sklearn), a robust Python library, provides a transformer class called PolynomialFeatures in its sklearn.preprocessing module. With this tool, transforming linear data into polynomial form becomes straightforward.

The transformer adds new polynomial terms to the dataset, making it possible to fit polynomial curves to complex data trends.

Users should first prepare their dataset, often using Pandas for easy data manipulation. By importing PolynomialFeatures from sklearn.preprocessing, one can create polynomial terms from independent variables. Set the degree of the polynomial to control model complexity.

A simple example can involve transforming a single feature X using PolynomialFeatures(degree=3). This expands the dataset by adding new columns for X^2 and X^3, plus a constant column unless include_bias=False is set. Fit the expanded data with a linear model from sklearn, such as LinearRegression, to make predictions.
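The workflow just described might look like the sketch below. The data is synthetic and noise-free (an assumption made so the recovered coefficients are easy to check), and `include_bias=False` is chosen here so that the intercept is handled by `LinearRegression` alone.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import PolynomialFeatures

# Synthetic, noise-free cubic data: y = 1 + 2x - x^2 + 0.5x^3.
X = np.linspace(-2, 2, 20).reshape(-1, 1)
y = 1.0 + 2.0 * X[:, 0] - 1.0 * X[:, 0] ** 2 + 0.5 * X[:, 0] ** 3

# Expand X into the columns X, X^2, X^3.
poly = PolynomialFeatures(degree=3, include_bias=False)
X_poly = poly.fit_transform(X)

# An ordinary linear model fitted on the expanded columns.
model = LinearRegression().fit(X_poly, y)

print(X_poly.shape)                   # (20, 3)
print(model.intercept_, model.coef_)  # close to 1.0 and [2.0, -1.0, 0.5]

# New inputs must go through the same transformation before predicting.
X_new = np.array([[1.5]])
y_new = model.predict(poly.transform(X_new))
print(y_new)                          # close to 3.4375
```

The model stays linear in its coefficients; only the inputs are non-linear, which is why a plain linear regressor can fit the curve.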

Data Visualization with Matplotlib and Seaborn

Visualizing data is crucial for understanding a polynomial regression model’s performance. Matplotlib and Seaborn are Python libraries that facilitate the creation of informative and visually appealing charts.

Matplotlib offers a foundation for basic plotting, enabling users to craft scatter plots to view raw data points and curves representing the polynomial regression model. Plotting these together can highlight how well the model captures data patterns.

For enhanced visuals, Seaborn can be used alongside Matplotlib. It provides easy-to-customize themes and color palettes, making plots more professional and insightful.

Adding trend lines or confidence intervals often becomes more intuitive with Seaborn, enhancing the reader’s understanding of the data trends.

By combining these tools, analysts can create comprehensive visualizations that aid in evaluating model predictions against actual data.
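A minimal Matplotlib sketch of such a plot is shown below, overlaying a fitted curve on the raw points. The noisy quadratic data, the output file name, and the use of `numpy.polyfit` for the fit are all assumptions made for this example.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the script also runs without a display
import matplotlib.pyplot as plt
import numpy as np

# Synthetic noisy quadratic data.
rng = np.random.default_rng(42)
x = np.linspace(-3, 3, 40)
y = 0.5 * x**2 - x + rng.normal(0, 0.5, x.size)

# Fit a degree-2 polynomial and evaluate it on a dense grid for a smooth curve.
coeffs = np.polyfit(x, y, deg=2)
x_grid = np.linspace(x.min(), x.max(), 200)
y_fit = np.polyval(coeffs, x_grid)

fig, ax = plt.subplots(figsize=(6, 4))
ax.scatter(x, y, s=15, label="observed data")
ax.plot(x_grid, y_fit, color="tab:red", label="degree-2 fit")
ax.set_xlabel("x")
ax.set_ylabel("y")
ax.set_title("Polynomial fit vs. raw data")
ax.legend()
fig.savefig("polynomial_fit.png", dpi=150)
```

In an interactive session, the `matplotlib.use("Agg")` line can be dropped and `plt.show()` used instead of saving to a file.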

Model Training Techniques

Training a polynomial regression model involves techniques like applying the least squares method and understanding the balance between overfitting and underfitting. These approaches are crucial for building models that generalize well to new data.

Applying Least Squares in Polynomial Regression

In polynomial regression, the least squares method minimizes the difference between observed and predicted values. This approach helps in finding the best-fitting curve by adjusting the parameters of the polynomial regression formula.

The objective is to minimize the sum of the squares of the residuals, which are the differences between actual and predicted values.

The coefficients that minimize this sum define the best-fitting polynomial in the least squares sense.

The least squares method involves setting the partial derivatives of the sum of squared residuals with respect to each coefficient to zero and solving the resulting system of linear equations, known as the normal equations. This process yields the coefficients whose predictions match the training data as closely as the chosen degree allows.
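The least squares solution can be sketched directly in NumPy. The example below builds the design matrix for a degree-2 fit, solves the normal equations \((X^\top X)\beta = X^\top y\), and compares the result with `numpy.linalg.lstsq`. The data is a noise-free quadratic invented for illustration.

```python
import numpy as np

# Noise-free data from y = 2 - x + 0.5x^2, so the true coefficients are known.
x = np.array([-2.0, -1.0, 0.0, 1.0, 2.0, 3.0])
y = 2.0 - 1.0 * x + 0.5 * x**2

degree = 2
# Design matrix with columns 1, x, x^2.
X = np.vander(x, degree + 1, increasing=True)

# Normal equations: (X^T X) beta = X^T y.
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)

# Same problem solved by a numerically more robust routine.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

residuals = y - X @ beta_normal
print(beta_normal)               # close to [2.0, -1.0, 0.5]
print(np.sum(residuals ** 2))    # close to 0 for noise-free data
```

Forming \(X^\top X\) explicitly is fine for small, low-degree problems, but it squares the condition number, so routines like `lstsq` are usually the safer choice for higher degrees.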

For practitioners, it is crucial to implement least squares correctly to prevent errors in the coefficient estimates. An improper calculation might lead to ineffective models that do not capture the underlying trend accurately.

Understanding Overfitting and Underfitting

Overfitting and underfitting are significant challenges in polynomial regression.

Overfitting occurs when the model is too complex, fitting the training data almost perfectly but performing poorly on unseen data because it captures noise instead of the trend. This can happen when the polynomial degree is too high.

Underfitting, on the other hand, happens when the model is too simple. It cannot capture the underlying pattern of the data, often due to a low polynomial degree. This leads to poor training data performance and lackluster generalization.

To strike a balance, practitioners adjust model complexity through cross-validation and other techniques. Understanding the trade-offs between complexity and generalization is key to building effective polynomial regression models.
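One way to see both failure modes is to compare training and test error across degrees. The sketch below does this with `numpy.polynomial.Polynomial.fit` on synthetic data; the underlying cubic, the noise level, and the degrees 1, 3, and 12 are assumptions picked for illustration.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def true_fn(x):
    # Underlying cubic trend used to generate the synthetic data.
    return x**3 - x

x_train = np.sort(rng.uniform(-1.5, 1.5, 20))
y_train = true_fn(x_train) + rng.normal(0, 0.3, x_train.size)
x_test = np.sort(rng.uniform(-1.5, 1.5, 200))
y_test = true_fn(x_test) + rng.normal(0, 0.3, x_test.size)

results = {}
for degree in (1, 3, 12):
    # Polynomial.fit rescales x internally, which keeps high degrees better conditioned.
    model = Polynomial.fit(x_train, y_train, degree)
    train_mse = np.mean((model(x_train) - y_train) ** 2)
    test_mse = np.mean((model(x_test) - y_test) ** 2)
    results[degree] = (train_mse, test_mse)
    print(f"degree={degree:2d}  train MSE={train_mse:.4f}  test MSE={test_mse:.4f}")
```

Training error can only fall as the degree rises, so it says little on its own. A degree-1 model typically shows high error on both sets (underfitting), while a very high degree tends to show low training error but a worse test error (overfitting).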

Evaluating Polynomial Regression Models

Evaluating polynomial regression models involves understanding key metrics and techniques. Critical aspects include how well the model explains data variance and how predictive it is on new, unseen data.

Assessing Model Performance with R-squared

R-squared is a common evaluation metric for assessing the fit of polynomial regression models. It shows the proportion of variance in the dependent variable explained by the model. Higher R-squared values typically indicate a better fit.

When evaluating, it’s important to consider adjusted R-squared as well. This metric adjusts for the number of predictors in the model, penalizing terms that add complexity without improving the fit, which makes an overfitted model harder to mistake for a good one.

Unlike plain R-squared, which never decreases when a feature is added, the adjusted version can fall when a new polynomial term contributes little.

Comparing the two values helps ascertain whether adding polynomial terms improves the model without causing overfitting. Good R-squared values should reflect meaningful relationships between variables rather than coincidental patterns.
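Both quantities are simple to compute by hand. The sketch below uses the standard definitions \(R^2 = 1 - SS_{res}/SS_{tot}\) and \(R^2_{adj} = 1 - (1 - R^2)\frac{n - 1}{n - p - 1}\), where \(n\) is the number of samples and \(p\) the number of predictors. The helper names and the sample values are made up for illustration.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """Proportion of variance in y_true explained by y_pred."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def adjusted_r_squared(r2, n_samples, n_predictors):
    """R-squared penalized for the number of predictors (excluding the intercept)."""
    return 1.0 - (1.0 - r2) * (n_samples - 1) / (n_samples - n_predictors - 1)

# Hypothetical observations and predictions from a model with two polynomial terms.
y_true = [3.0, 5.0, 7.0, 9.0, 11.0]
y_pred = [2.8, 5.1, 7.2, 8.9, 11.0]

r2 = r_squared(y_true, y_pred)
adj_r2 = adjusted_r_squared(r2, n_samples=5, n_predictors=2)
print(r2, adj_r2)  # approximately 0.9975 and 0.995
```

For a degree-\(d\) polynomial in one variable, \(p\) equals \(d\), so each extra degree raises the penalty.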

Utilizing Cross-Validation Techniques

Cross-validation is vital for testing how a polynomial regression model generalizes to new data.

A popular method is k-fold cross-validation, where data is divided into k folds of roughly equal size. The model trains on k-1 folds and tests on the remaining one, repeating this process k times. Because each fold is held out exactly once, every performance estimate comes from data the model did not see during training.

The mean squared error (MSE) from each fold is calculated to provide a comprehensive view of model accuracy. Comparing MSE across different polynomial degrees helps guide the choice of model without relying solely on a fixed dataset.
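A minimal sketch of comparing degrees by cross-validated MSE with scikit-learn follows. The synthetic cubic data, the choice of five folds, and the candidate degrees 1 to 6 are assumptions made for this example.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic data with a cubic trend plus noise.
rng = np.random.default_rng(0)
X = rng.uniform(-2, 2, size=(60, 1))
y = X[:, 0] ** 3 - 2 * X[:, 0] + rng.normal(0, 0.2, 60)

cv = KFold(n_splits=5, shuffle=True, random_state=0)

cv_mse = {}
for degree in range(1, 7):
    # The pipeline refits the feature expansion inside each fold.
    model = make_pipeline(PolynomialFeatures(degree), LinearRegression())
    # scikit-learn reports negated MSE so that larger scores are always better.
    scores = cross_val_score(model, X, y, cv=cv, scoring="neg_mean_squared_error")
    cv_mse[degree] = -scores.mean()

best_degree = min(cv_mse, key=cv_mse.get)
for degree, mse in cv_mse.items():
    print(f"degree={degree}  mean CV MSE={mse:.4f}")
print("lowest CV MSE at degree", best_degree)
```

When several degrees score almost equally, preferring the lowest of them is a common, conservative choice.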

Cross-validation cannot guarantee good performance on every future dataset, but it gives a more trustworthy estimate of how the model will behave on new data than a single train/test split.

Regression Analysis Applications

Regression analysis plays a crucial role in understanding relationships between variables. It is widely used in various fields to predict outcomes and analyze data sets, including cases with non-linear relationships.

Case Studies with Real-Life Examples

Many sectors use regression analysis to make data-driven decisions.

For example, in finance, it is used to forecast stock prices by examining historical data. The health sector employs regression to predict patient outcomes based on treatment types and patient histories.

Marketing departments leverage it to assess how different advertising strategies impact sales, adjusting campaigns accordingly.

Real estate professionals analyze housing market trends, such as how location and years of experience in selling properties affect home prices.

These practical applications showcase the versatility and utility of regression analysis in providing valuable insights.

Analyzing Non-linear Data in Data Science

In data science, handling non-linear relationships between variables is essential.

Polynomial regression is a common method utilized when linear models fall short. This approach models the data with higher-degree polynomials, capturing more complex patterns effectively.

For instance, applications in machine learning involve predicting home prices based on features like square footage or neighborhood, where relationships are not strictly linear.

Data scientists often use these techniques to refine predictive models, enhancing accuracy and providing deeper insights.

Handling non-linearities helps in identifying trends that linear models might overlook, thus broadening the applicability of regression in solving diverse problems.

Advanced Polynomial Models

In exploring advanced polynomial models, quadratic and cubic regression models provide a foundation by extending simple linear regression to capture more complex data patterns.

Higher-degree polynomials advance this further, offering powerful but challenging options to model intricate relationships.

From Quadratic to Cubic Models

Quadratic models are an extension of linear regression and can model curves by adding an \(x^2\) term.

These models are suitable for data that forms a parabolic pattern, making them more flexible than linear models. A classic example could be modeling the trajectory of a ball, where height depends on the square of time.

Cubic models add another layer of complexity by including an \(x^3\) term. This allows the model to capture changes in curvature.

This flexibility is useful in scenarios such as growth rate changes in biology. According to a study on advanced modeling with polynomial regression, cubic regression often strikes a balance between fitting the data well and avoiding excessive complexity.
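The ball trajectory mentioned above makes a convenient sketch of quadratic versus cubic fitting. The launch height, initial speed, and sample times below are hypothetical values, and the data is noise-free so the recovered coefficients can be checked against the physics.

```python
import numpy as np

# Hypothetical trajectory: h(t) = 1.5 + 10 t - 4.905 t^2 (metres, seconds).
t = np.linspace(0.0, 2.0, 11)
h = 1.5 + 10.0 * t - 4.905 * t**2

# np.polyfit returns coefficients from the highest power down.
quad = np.polyfit(t, h, deg=2)   # [t^2, t, constant]
cubic = np.polyfit(t, h, deg=3)  # [t^3, t^2, t, constant]

print(quad)      # close to [-4.905, 10.0, 1.5]
print(cubic[0])  # t^3 coefficient, close to 0
```

The cubic fit assigns an almost zero weight to the extra \(t^3\) term because the data is exactly quadratic. With noisy measurements that coefficient would generally be small but not zero.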

Working with Higher-Degree Polynomials

Higher-degree polynomials increase the model’s capacity to fit complex data by increasing the polynomial degree. This includes terms like \(x^4\) or higher.

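A polynomial of sufficiently high degree can pass through almost every training point (degree \(n - 1\) is enough for \(n\) points with distinct \(x\) values), but such models risk overfitting, especially when noise and outliers are present.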

Managing overfitting is crucial. Techniques like cross-validation and regularization help mitigate this.

In practice, as noted in the context of polynomial regression techniques, selecting the right degree is key to balancing model complexity and performance.
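As a hedged illustration of regularization on a high-degree model, the sketch below compares ordinary least squares with scikit-learn's `Ridge` on the same degree-12 features. The sine-shaped data, the degree, and `alpha=1.0` are assumptions chosen for the example, and `make_model` is a helper defined here, not a library function.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# Small noisy dataset, deliberately too small for a degree-12 polynomial.
rng = np.random.default_rng(1)
X = np.sort(rng.uniform(-1, 1, size=(25, 1)), axis=0)
y = np.sin(3 * X[:, 0]) + rng.normal(0, 0.2, 25)

def make_model(regressor, degree=12):
    # Scaling puts all polynomial columns on a comparable footing before fitting.
    return make_pipeline(
        PolynomialFeatures(degree, include_bias=False),
        StandardScaler(),
        regressor,
    )

ols = make_model(LinearRegression()).fit(X, y)
ridge = make_model(Ridge(alpha=1.0)).fit(X, y)

# The last pipeline step holds the fitted coefficients.
ols_norm = np.linalg.norm(ols[-1].coef_)
ridge_norm = np.linalg.norm(ridge[-1].coef_)
print(f"OLS coefficient norm:   {ols_norm:.2f}")
print(f"Ridge coefficient norm: {ridge_norm:.2f}")
```

The ridge penalty shrinks the coefficients, which damps the wild oscillations a high-degree fit tends to produce. The value of `alpha` would normally be tuned with cross-validation.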

These models are effective in applications like signal processing or financial trend analysis, where complex patterns are common.

Computational Considerations

In polynomial regression, computational efficiency and algorithmic complexity are significant factors that influence the model’s performance. They determine how well a model can handle calculations and the implications for processing time and resource usage.

Efficiency in Polynomial Calculations

Polynomial regression requires various calculations that can be computationally intense, especially with higher-degree polynomials.

Tools like NumPy streamline these computations by leveraging vectorized operations, which are faster than standard loops. This can greatly reduce computation time, offering efficiency when working with large datasets.
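The difference between looping and vectorizing can be sketched with Horner's rule for polynomial evaluation. Both functions below are illustrative helpers written for this example; they compute the same values, but the second applies each step to the whole array at once.

```python
import numpy as np

# Coefficients of 4x^3 - 3x^2 + 2x - 1, highest power first.
coeffs = [4.0, -3.0, 2.0, -1.0]

def poly_loop(coeffs, xs):
    """Horner's rule in pure Python, one x value at a time."""
    out = []
    for x in xs:
        acc = 0.0
        for c in coeffs:
            acc = acc * x + c
        out.append(acc)
    return out

def poly_vectorized(coeffs, xs):
    """Horner's rule applied to the whole NumPy array in each step."""
    acc = np.zeros_like(xs)
    for c in coeffs:
        acc = acc * xs + c
    return acc

xs = np.linspace(-1.0, 1.0, 100_000)
fast = poly_vectorized(coeffs, xs)
slow = poly_loop(coeffs, xs[:1000])  # small slice; the loop is the slow path

print(np.allclose(slow, fast[:1000]))  # True
```

The vectorized version still loops, but only over the handful of coefficients rather than over every data point. Timing both with `timeit` on your own machine is the most reliable way to see the gap.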

Using scikit-learn, polynomial features can be created efficiently with the PolynomialFeatures transformer, saving time and minimizing coding complexity.

Efficient calculations ensure that the regression models are effective without unnecessary delays or computational burden. This is especially important when the model is implemented in resource-constrained environments.

Algorithmic Complexity in Polynomial Regression

The complexity of polynomial regression increases with the degree of the polynomial being used, and with several input features the number of generated terms grows combinatorially.

Higher degrees can capture more intricate patterns but will also require more processing power and memory.

Techniques from linear models, like regularization, help manage complexity by preventing overfitting and improving generalization to new data.

In practice, balancing complexity with computational cost is crucial.

Efficient algorithms and data preprocessing methods, available in scikit-learn, can play a pivotal role in managing this balance. They ensure computational resources aren’t excessively taxed, keeping the application of polynomial regression both feasible and practical.

Integrating Polynomial Regression in Systems

Polynomial regression plays a key role in applying machine learning to real-world challenges. By modeling non-linear relationships between features and response variables, it enhances prediction accuracy.

Effective integration requires careful attention to both production environments and potential challenges.

Incorporating Models into Production

Integrating polynomial regression models into production systems involves several critical steps.

Initially, the model must be trained on data that accurately reflects real-world conditions. This ensures reliable performance when exposed to new data.

Once trained, the model must be efficiently deployed in the system architecture. It could reside on cloud servers or local machines, depending on resource availability and system design.

A crucial element is ensuring the model can handle continuous data inputs. This involves strategies for managing data flow and updates.

Monitoring tools should be set up to track model performance and outcomes. This allows for timely adjustments, maintaining the model’s accuracy and relevance.

Regular updates to the model may be needed to incorporate new patterns or changes in user behavior.

Challenges of Implementation

Implementing polynomial regression in systems comes with its own set of challenges.

The complexity of polynomial equations can demand significant computational resources. Ensuring efficient processing and response times is vital in operational settings. Strategies like parallel processing or optimized algorithms can help manage this.

Data quality is another crucial factor. Poor quality or biased data can lead to inaccurate predictions.

It is essential to have robust data validation and preprocessing methods to maintain data integrity.

Additionally, balancing model complexity against overfitting is vital. A model too complex may fit the training data well but perform poorly on new data. Regular evaluation against a test dataset is recommended to mitigate this risk.

Frequently Asked Questions

Polynomial regression is a valuable tool for modeling relationships between variables where linear models don’t fit well. This section addresses common questions about its implementation, practical applications, and considerations.

How do you implement polynomial regression in Python?

In Python, polynomial regression can be implemented using libraries such as scikit-learn.

By transforming input features to include polynomial terms, a model can fit complex data patterns. The PolynomialFeatures transformer creates these terms, and LinearRegression fits the model to the transformed data.

What are some common use cases for polynomial regression in real-world applications?

Polynomial regression is often used in fields like economics for modeling cost functions, in engineering for predicting system behavior, or in agriculture to assess growth patterns.

It helps describe curvilinear relationships where straight lines are insufficient to capture data trends.

What are the primary disadvantages or limitations of using polynomial regression?

A key limitation of polynomial regression is its tendency to overfit data, especially with high-degree polynomials. This can lead to poor predictions on new data.

It also requires careful feature scaling to ensure that polynomial terms do not produce excessively large values.

How do you determine the degree of the polynomial to use in polynomial regression?

Selecting the polynomial degree involves balancing fit quality and overfitting risk.

Techniques like cross-validation are used to test various degrees and assess model performance. Analysts often start with a low degree and increase it until performance improvements diminish.

What metrics are commonly used to evaluate the performance of a polynomial regression model?

Common metrics include Mean Absolute Error (MAE), Mean Squared Error (MSE), and R-squared.

These metrics help to compare model predictions with actual values, indicating how well the model captures underlying patterns in the data.
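These three metrics are available in `sklearn.metrics`. The short sketch below computes them for a handful of made-up actual and predicted values.

```python
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Hypothetical actual values and model predictions.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 7.5, 10.0]

mae = mean_absolute_error(y_true, y_pred)  # mean of |error|
mse = mean_squared_error(y_true, y_pred)   # mean of error^2
r2 = r2_score(y_true, y_pred)              # 1 - SS_res / SS_tot

print(mae)  # 0.5
print(mse)  # 0.375
print(r2)   # approximately 0.925
```

MSE weights the single error of 1.0 more heavily than MAE does, which is why it is the more sensitive of the two to large misses.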

Can you provide an example of how polynomial regression is applied in a data analysis context?

Polynomial regression is used in machine learning courses to teach complex modeling.

A typical example includes predicting housing prices where prices do not increase linearly with features such as square footage, requiring nonlinear models for accurate predictions.