In data science, data manipulation is a crucial step that often determines the success of machine learning models.

SQL, a powerful tool for managing and querying large datasets, plays a vital role in this process.

Using SQL for data manipulation allows data scientists to prepare and clean data effectively, ultimately enhancing the accuracy and performance of machine learning models.

Data manipulation with SQL helps transform raw data into a format suitable for machine learning. By handling missing values and inconsistencies in queries, SQL helps get the dataset ready for analysis.

This preparation is essential for developing robust machine learning models, as clean data often leads to better predictions and insights.

Integrating machine learning with SQL databases also enables the execution of complex queries and algorithms without leaving the database environment.

Keeping computation inside the database reduces data movement, which makes processing easier and supports scalable, efficient model deployment.

Leveraging SQL for machine learning tasks offers a practical approach for data scientists aiming to build effective prediction models.

Key Takeaways

- SQL streamlines data preparation for machine learning models.

- Data manipulation in SQL can improve model accuracy.

- Integrating SQL with machine learning boosts efficiency.

Understanding SQL in Machine Learning

SQL plays a crucial role in managing and manipulating data used in machine learning.

By leveraging SQL databases, data scientists can efficiently prepare and process data, which is essential for training robust machine learning models.

Comparing SQL and NoSQL helps identify the right tools for data analysis in specific scenarios.

Role of SQL Databases in Machine Learning

SQL databases are foundational in many machine learning workflows. They provide reliable storage and retrieval of structured data, which is often necessary for training models.

SQL enables users to execute complex queries to extract relevant datasets quickly. This capability is vital during the data preparation phase, where data is cleaned and transformed.

In addition to querying, SQL maintains data integrity with constraints such as primary keys and foreign keys. These constraints prevent duplicate keys and orphaned references, which keeps the data used for model training consistent.

As machine learning adoption grows, tools that connect SQL databases to languages such as Python and R help streamline the workflow.

SQL vs. NoSQL for Data Science

In data science, choosing between SQL and NoSQL depends on the data type and use case.

SQL databases excel in scenarios where data is highly structured and relationships between entities must be maintained. They offer powerful querying capabilities, essential for tasks that require in-depth data analysis.

Conversely, NoSQL databases are suited for handling unstructured or semi-structured data, such as social media posts. They provide flexibility and scalability, useful for big data applications.

However, SQL remains a preferred choice when consistency and structured querying are priorities in machine learning projects.

Data Processing and Manipulation Techniques

Effective data processing is crucial for machine learning models. Cleaning and preparation help remove errors, while feature engineering enhances model precision. Transformation and normalization ensure that the data format supports accurate analysis.

Data Cleaning and Preparation

Data cleaning is a foundational step in preparing data for machine learning. It involves identifying and correcting errors or inconsistencies in the dataset.

Handling missing values is paramount; strategies such as imputation or removal are often used.

Outlier detection is another essential aspect, where anomalous data points may be adjusted or removed to prevent skewed results.

Removing duplicate entries helps maintain data integrity and keeps repeated records from being overweighted during training.

Data preprocessing, including these tasks, ensures that the data is ready for analysis.
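The cleaning steps above (duplicate removal, outlier handling, and mean imputation) can be sketched in SQL. This minimal example assumes an in-memory SQLite database accessed through Python's built-in `sqlite3` module. The `readings` table, its values, and the 0 to 100 plausibility range used as the outlier rule are hypothetical.

```python
import sqlite3

# Hypothetical toy table with a duplicate row, a missing value, and an obvious outlier.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor_id INTEGER, reading REAL)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?)",
    [(1, 10.0), (1, 10.0), (2, None), (3, 12.0), (4, 11.0), (5, 500.0)],
)

# 1. Remove exact duplicate rows by rebuilding the table from SELECT DISTINCT.
conn.execute(
    "CREATE TABLE readings_clean AS SELECT DISTINCT sensor_id, reading FROM readings"
)

# 2. Remove outliers outside an assumed plausible range.
#    NULL NOT BETWEEN ... evaluates to NULL, so missing values are not deleted here.
conn.execute("DELETE FROM readings_clean WHERE reading NOT BETWEEN 0 AND 100")

# 3. Impute the remaining NULLs with the mean of the observed values.
conn.execute("""
    UPDATE readings_clean
    SET reading = (SELECT AVG(reading) FROM readings_clean WHERE reading IS NOT NULL)
    WHERE reading IS NULL
""")

cleaned = conn.execute(
    "SELECT sensor_id, reading FROM readings_clean ORDER BY sensor_id"
).fetchall()
print(cleaned)
```

Outliers are removed before the mean is computed so that the extreme value does not distort the imputed number. A statistical rule, such as an interquartile-range or z-score cutoff, could replace the fixed range.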

Feature Engineering and Selection

Feature engineering involves creating new input variables based on existing data. This can enhance the performance of machine learning models.

Feature selection techniques help identify which features contribute most to model accuracy.

Dimensionality reduction methods, such as PCA (Principal Component Analysis), reduce the number of input variables by projecting them onto a smaller set of components that retain most of the variance. This streamlines machine learning tasks by focusing on the most informative aspects of the data.

Data Transformation and Normalization

Data transformation converts data into a suitable format for analysis.

Common techniques include log transformation, which compresses skewed data distributions, and min-max scaling, which rescales each feature to a fixed range, typically [0, 1].

Normalization adjusts feature scales so that many algorithms train more efficiently. Z-score normalization (standardization) subtracts the mean and divides by the standard deviation, giving each feature a mean of 0 and a standard deviation of 1.

These methods help features measured on different scales contribute comparably to the model’s training process.
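Min-max scaling, z-score normalization, and a log transform can all be computed in one query with window aggregates over the whole table (`OVER ()`). This sketch assumes SQLite through Python's `sqlite3`. SQLite's built-in math functions are optional at compile time, so Python's `math.sqrt` and `math.log` are registered under the names `sqrt` and `ln`. The `houses` table and its `area` column are hypothetical.

```python
import math
import sqlite3

conn = sqlite3.connect(":memory:")
# Expose Python math functions to SQL, since SQLite builds may lack them.
conn.create_function("sqrt", 1, math.sqrt)
conn.create_function("ln", 1, math.log)
conn.execute("CREATE TABLE houses (id INTEGER PRIMARY KEY, area REAL)")
conn.executemany("INSERT INTO houses (area) VALUES (?)", [(50.0,), (100.0,), (150.0,)])

rows = conn.execute("""
    SELECT id,
           -- min-max scaling to [0, 1]
           (area - MIN(area) OVER ()) / (MAX(area) OVER () - MIN(area) OVER ()) AS area_minmax,
           -- z-score using the population standard deviation: sqrt(E[x^2] - E[x]^2)
           (area - AVG(area) OVER ())
               / sqrt(AVG(area * area) OVER () - AVG(area) OVER () * AVG(area) OVER ()) AS area_z,
           -- log transform to compress a right-skewed feature
           ln(area) AS area_log
    FROM houses
    ORDER BY id
""").fetchall()

for row in rows:
    print(row)
```

A constant column makes both denominators zero. SQLite returns NULL for the min-max division, but a production version should guard both cases explicitly.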

SQL Techniques for Data Analytics

SQL is a powerful tool used in data analytics for structuring data queries and achieving efficient data manipulation. This involves techniques like grouping data, using window functions, and handling complex queries to draw insights from data.

Aggregate Functions and Grouping Data

One essential aspect of data analytics is using aggregate functions.

These functions, such as SUM(), AVG(), COUNT(), MIN(), and MAX(), help summarize large datasets.

The GROUP BY clause groups rows that share the same values in the specified columns, so that aggregates are computed per group. This is crucial when evaluating trends or comparing different data categories.

The HAVING clause often follows GROUP BY to filter groups based on aggregate conditions, for instance, keeping only the categories whose total sales exceed a threshold.

Used together in a SELECT statement, these clauses condense large tables into compact summaries that are efficient to compute and easy to interpret.
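The following sketch combines several aggregate functions with GROUP BY and a HAVING filter. It assumes SQLite through Python's `sqlite3`. The `sales` table and the threshold of 50 are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (category TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [
    ("books", 20.0), ("books", 35.0),
    ("games", 60.0), ("games", 80.0),
    ("toys", 15.0),
])

summary = conn.execute("""
    SELECT category,
           COUNT(*)    AS n_sales,
           SUM(amount) AS total,
           AVG(amount) AS avg_amount,
           MAX(amount) AS largest
    FROM sales
    GROUP BY category            -- one output row per category
    HAVING SUM(amount) > 50      -- filter on the aggregate, after grouping
    ORDER BY total DESC
""").fetchall()
print(summary)
```

WHERE filters individual rows before grouping, while HAVING filters the grouped results, which is why the sales-total condition belongs in HAVING.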

Window Functions and Subqueries

Window functions compute values across a set of rows related to the current row, typically defined with PARTITION BY and ORDER BY. Unlike aggregate functions used with GROUP BY, they do not collapse those rows into a single output row.

Examples include ROW_NUMBER(), which assigns a unique sequential number to each row within a partition, and RANK(), which gives tied rows the same rank and skips the following ranks.
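The difference between ROW_NUMBER() and RANK() shows up when values tie. This sketch assumes SQLite 3.25 or later (for window function support) through Python's `sqlite3`. The `reps` table is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE reps (region TEXT, rep TEXT, revenue INTEGER)")
conn.executemany("INSERT INTO reps VALUES (?, ?, ?)", [
    ("east", "ana", 100), ("east", "ben", 100), ("east", "cy", 80), ("west", "dee", 90),
])

rows = conn.execute("""
    SELECT region, rep, revenue,
           -- unique position within the region; rep name breaks ties deterministically
           ROW_NUMBER() OVER (PARTITION BY region ORDER BY revenue DESC, rep) AS row_num,
           -- tied revenue shares a rank, and the next rank is skipped
           RANK() OVER (PARTITION BY region ORDER BY revenue DESC) AS rnk,
           -- an aggregate used as a window: every row is kept
           SUM(revenue) OVER (PARTITION BY region) AS region_total
    FROM reps
    ORDER BY region, row_num
""").fetchall()

for row in rows:
    print(row)
```

Every input row appears in the output, each annotated with its per-region total, which GROUP BY alone could not produce.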

Subqueries are another powerful tool, allowing for nested queries within larger queries. They help break down complex calculations or selections into manageable steps, executing preliminary queries to guide the main query.

This technique helps keep the resulting SQL query organized and readable.
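Here is a sketch of two common subquery forms: a derived table in FROM, and a scalar subquery in WHERE. The query finds customers whose total spend exceeds the average per-customer total. It assumes SQLite through Python's `sqlite3`, and the `orders` table is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [
    (1, 10.0), (1, 30.0), (2, 5.0), (3, 40.0), (3, 30.0),
])

above_average = conn.execute("""
    SELECT customer_id, total
    FROM (
        -- derived-table subquery: one row per customer
        SELECT customer_id, SUM(amount) AS total
        FROM orders
        GROUP BY customer_id
    ) AS per_customer
    WHERE total > (
        -- scalar subquery: the average of the per-customer totals
        SELECT AVG(customer_total)
        FROM (SELECT SUM(amount) AS customer_total FROM orders GROUP BY customer_id)
    )
    ORDER BY customer_id
""").fetchall()
print(above_average)
```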

Pivoting Data and Handling Complex Queries

Pivoting transforms data from rows to columns, making it easier to interpret and compare. This is especially useful when restructuring data for reporting purposes.

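Some databases, such as SQL Server and Oracle, provide a PIVOT operator. Others, such as PostgreSQL and SQLite, do not, and the same result is usually achieved with conditional aggregation, for example one SUM(CASE WHEN ... THEN ... END) expression per output column.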
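Conditional aggregation is a portable way to pivot rows into columns. This sketch uses it instead of a vendor PIVOT operator. It assumes SQLite through Python's `sqlite3`, and the `quarterly` table and quarter labels are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE quarterly (store TEXT, quarter TEXT, revenue INTEGER)")
conn.executemany("INSERT INTO quarterly VALUES (?, ?, ?)", [
    ("A", "Q1", 100), ("A", "Q2", 120),
    ("B", "Q1", 80), ("B", "Q2", 95),
    ("C", "Q1", 50),                      # store C has no Q2 row
])

pivoted = conn.execute("""
    SELECT store,
           -- one output column per quarter; missing combinations become 0
           SUM(CASE WHEN quarter = 'Q1' THEN revenue ELSE 0 END) AS q1,
           SUM(CASE WHEN quarter = 'Q2' THEN revenue ELSE 0 END) AS q2
    FROM quarterly
    GROUP BY store
    ORDER BY store
""").fetchall()
print(pivoted)
```

The output columns must be listed explicitly. Pivoting over values that are not known in advance typically requires generating the SQL dynamically.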

Dealing with complex queries often involves advanced SQL techniques like joining multiple tables or using conditional statements.

Understanding these intricacies makes it possible to query and manipulate complex datasets correctly, leading to more insightful analytics. Outer joins keep rows that have no match in the other table, and cross joins pair every row of one table with every row of another.
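The following sketch shows a LEFT (outer) JOIN that keeps customers without orders, and a CROSS JOIN that builds a full customer-by-product grid, such as a candidate table for a recommendation model. It assumes SQLite through Python's `sqlite3`. All tables and values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER);
    CREATE TABLE products (product TEXT);
    INSERT INTO customers VALUES (1, 'ana'), (2, 'ben'), (3, 'cy');
    INSERT INTO orders VALUES (10, 1), (11, 1), (12, 3);
    INSERT INTO products VALUES ('cup'), ('pen');
""")

# LEFT JOIN keeps 'ben' even though he has no orders; COUNT(o.order_id) ignores the NULLs.
order_counts = conn.execute("""
    SELECT c.name, COUNT(o.order_id) AS n_orders
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.customer_id
    GROUP BY c.customer_id, c.name
    ORDER BY c.name
""").fetchall()

# CROSS JOIN returns every (customer, product) combination: 3 x 2 = 6 rows.
grid = conn.execute("""
    SELECT c.name, p.product
    FROM customers AS c CROSS JOIN products AS p
    ORDER BY c.name, p.product
""").fetchall()

print(order_counts)
print(grid)
```

An INNER JOIN in the first query would silently drop customers with zero orders, which would bias any feature derived from order counts.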

These capabilities are crucial for anyone looking to fully leverage SQL in data-driven environments.

SQL for Machine Learning Model Development

Using SQL in machine learning allows for efficient handling of data directly within databases. It aids in preparing training data and manipulating large datasets seamlessly, often integrating with tools like Python and R to enhance model development.

Preparing Training Data with SQL

SQL is an essential tool for managing training data for machine learning projects. It enables the extraction and cleaning of large datasets, making it easier to perform operations like filtering, aggregating, and joining tables. This process is crucial for creating a robust dataset for model training.

When preparing data, SQL can handle tasks such as filling missing values with COALESCE(), which returns its first non-NULL argument, or creating new columns for feature engineering.
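This sketch fills missing values with COALESCE() and derives new feature columns in a single SELECT. It assumes SQLite through Python's `sqlite3`. The `customers` table, the rule that a missing return count means zero returns, and the threshold of 10 orders for "frequent" are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (customer_id INTEGER, n_orders INTEGER, "
    "total_spent REAL, n_returns INTEGER)"
)
conn.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", [
    (1, 12, 600.0, 3),
    (2, 4, 100.0, None),   # returns never recorded -> NULL
])

features = conn.execute("""
    SELECT customer_id,
           -- assumption: a missing return count means no returns
           COALESCE(n_returns, 0) AS n_returns_filled,
           -- NULLIF avoids division by zero for customers without orders
           total_spent / NULLIF(n_orders, 0) AS avg_order_value,
           CAST(COALESCE(n_returns, 0) AS REAL) / NULLIF(n_orders, 0) AS return_rate,
           CASE WHEN n_orders >= 10 THEN 1 ELSE 0 END AS is_frequent
    FROM customers
    ORDER BY customer_id
""").fetchall()
print(features)
```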

Because these operations run directly on the database server, they scale to larger datasets without first transferring raw data to the client, which reduces the time needed to prepare data for machine learning algorithms.

SQL’s ability to seamlessly integrate with programming languages like Python and R further empowers data scientists.

By feeding clean, structured data directly into machine learning pipelines, SQL streamlines the entire process of model development.

Building and Refining Machine Learning Models

Once the data is ready, building machine learning models involves training algorithms on this data.

SQL supports certain analytics functions that can be used directly within the database. PostgreSQL, through extensions such as Apache MADlib and PostgresML, can run machine learning algorithms from SQL queries, which simplifies the process.

For more complex tasks, SQL can work alongside libraries in Python to develop models.

Python libraries such as pandas are often paired with SQL in this refinement loop: SQL handles data selection and preliminary processing, and Python-based machine learning code takes over from there.
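The handoff between SQL and Python can be sketched as follows. SQL selects a cohort and derives features, then Python trains and evaluates a model on the result. With pandas installed, `pandas.read_sql(query, conn)` would return a DataFrame instead of a list of tuples. This stdlib-only version uses `sqlite3` and a hand-written nearest-centroid classifier as a stand-in for a library such as scikit-learn. The `users` table, the cohort filter, and the holdout split are hypothetical.

```python
import math
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users (user_id INTEGER, sessions INTEGER, minutes REAL, "
    "churned INTEGER, signup_year INTEGER)"
)
conn.executemany("INSERT INTO users VALUES (?, ?, ?, ?, ?)", [
    (1, 30, 300.0, 0, 2023), (2, 25, 280.0, 0, 2023), (3, 2, 10.0, 1, 2023),
    (4, 3, 15.0, 1, 2024), (5, 28, 310.0, 0, 2024), (6, 1, 5.0, 1, 2024),
    (7, 40, 500.0, 0, 2019),  # excluded by the cohort filter below
])

# SQL side: select the cohort and derive features inside the database.
rows = conn.execute("""
    SELECT user_id, sessions, minutes / sessions AS minutes_per_session, churned
    FROM users
    WHERE signup_year >= ?
    ORDER BY user_id
""", (2023,)).fetchall()

# Python side: a simple holdout split (last two users are the test set).
train, test = rows[:-2], rows[-2:]

def class_centroid(label):
    """Mean feature vector of the training rows with the given label."""
    feats = [(s, m) for _, s, m, y in train if y == label]
    return tuple(sum(col) / len(feats) for col in zip(*feats))

centroids = {label: class_centroid(label) for label in (0, 1)}

def predict(sessions, minutes_per_session):
    """Assign the label whose centroid is closest in Euclidean distance."""
    point = (sessions, minutes_per_session)
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))

predictions = [predict(s, m) for _, s, m, _ in test]
accuracy = sum(p == y for p, (_, _, _, y) in zip(predictions, test)) / len(test)
print(predictions, accuracy)
```

The features are left unscaled to keep the sketch short. In practice they would be normalized first, because otherwise `sessions` dominates the distance.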

The refinement of models through SQL involves iteration and optimization, often requiring adjustments to the dataset or its features to achieve improved accuracy and performance.

By using SQL efficiently, developers can focus on enhancing model accuracy without getting bogged down by manual data handling.

Machine Learning Algorithms and SQL

SQL supports machine learning by enabling data manipulation and analysis within databases. Various machine learning algorithms, such as clustering and regression, can be implemented directly in SQL environments, allowing for efficient data processing and model building.

Cluster Analysis and Classification

Clustering involves grouping data points based on similarity. The k-means algorithm is a common choice and can be run inside the database. It helps identify patterns and segments within the data without predefined categories.
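Both steps of k-means can be expressed as SQL statements. The assignment step picks the nearest centroid per point with ROW_NUMBER(), and the update step moves each centroid to the mean of its points. This is a minimal sketch assuming SQLite 3.25 or later through Python's `sqlite3`. The table names, the caller-supplied initial centroids, and the fixed iteration count (no convergence check) are simplifying assumptions.

```python
import sqlite3

def kmeans_sql(points, initial_centroids, iterations=10):
    """Run 2-D k-means where the assignment and update steps are SQL statements."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE points (pid INTEGER PRIMARY KEY, x REAL, y REAL)")
    conn.execute("CREATE TABLE centroids (cid INTEGER PRIMARY KEY, cx REAL, cy REAL)")
    conn.executemany("INSERT INTO points (x, y) VALUES (?, ?)", points)
    conn.executemany(
        "INSERT INTO centroids VALUES (?, ?, ?)",
        [(cid, cx, cy) for cid, (cx, cy) in enumerate(initial_centroids)],
    )
    for _ in range(iterations):
        # Assignment step: nearest centroid by squared distance (ties go to the lower cid).
        conn.execute("DROP TABLE IF EXISTS assignment")
        conn.execute("""
            CREATE TABLE assignment AS
            SELECT pid, cid FROM (
                SELECT p.pid, c.cid,
                       ROW_NUMBER() OVER (
                           PARTITION BY p.pid
                           ORDER BY (p.x - c.cx) * (p.x - c.cx)
                                  + (p.y - c.cy) * (p.y - c.cy), c.cid
                       ) AS rn
                FROM points AS p CROSS JOIN centroids AS c
            )
            WHERE rn = 1
        """)
        # Update step: move each non-empty centroid to the mean of its assigned points.
        conn.execute("""
            UPDATE centroids
            SET cx = (SELECT AVG(p.x) FROM points AS p
                      JOIN assignment AS a ON a.pid = p.pid
                      WHERE a.cid = centroids.cid),
                cy = (SELECT AVG(p.y) FROM points AS p
                      JOIN assignment AS a ON a.pid = p.pid
                      WHERE a.cid = centroids.cid)
            WHERE cid IN (SELECT cid FROM assignment)
        """)
    result = conn.execute("SELECT cx, cy FROM centroids ORDER BY cid").fetchall()
    conn.close()
    return result

pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centers = kmeans_sql(pts, initial_centroids=[(1, 1), (9, 9)])
print(centers)
```

The CROSS JOIN makes each iteration cost points × centroids rows. This is fine for modest data, but in-database ML extensions usually handle large tables more efficiently.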

Classification, on the other hand, assigns data points to predefined categories based on their features and is common in applications such as churn prediction and fraud detection.

In SQL, trained classification models can be applied to sort rows into these categories. Tools such as SQL Server Machine Learning Services run Python and R scripts inside the database through the sp_execute_external_script stored procedure, which supports both clustering and classification tasks.

Keeping data and models in one place streamlines these processes and simplifies data management.

Regression Analysis

Regression analysis aims to predict continuous outcomes. Linear regression is a popular method used to find relationships between variables.

In SQL, regression algorithms can be applied to forecast trends and make predictions directly within databases. This avoids the need for external analysis tools, leading to faster insights.

By leveraging stored procedures or SQL-based libraries, professionals can automate regression tasks. This not only saves time but also ensures consistency in predictive modeling.
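Simple linear regression has a closed-form least-squares solution that needs only sums, so it can be fitted entirely in SQL: slope = (nΣxy − ΣxΣy) / (nΣx² − (Σx)²) and intercept = (Σy − slope·Σx) / n. This sketch assumes SQLite through Python's `sqlite3`. The `ads` and `new_ads` tables are hypothetical, and the toy data lie exactly on y = 2x + 1.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ads (spend REAL, revenue REAL)")
conn.executemany("INSERT INTO ads VALUES (?, ?)",
                 [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)])
conn.execute("CREATE TABLE new_ads (spend REAL)")
conn.executemany("INSERT INTO new_ads VALUES (?)", [(5.0,), (6.0,)])

# Fit: ordinary least squares for one feature, computed from aggregate sums.
slope, intercept = conn.execute("""
    WITH sums AS (
        SELECT COUNT(*) AS n, SUM(spend) AS sx, SUM(revenue) AS sy,
               SUM(spend * revenue) AS sxy, SUM(spend * spend) AS sxx
        FROM ads
    ),
    fit AS (
        SELECT n, sx, sy, (n * sxy - sx * sy) / (n * sxx - sx * sx) AS slope
        FROM sums
    )
    SELECT slope, (sy - slope * sx) / n AS intercept FROM fit
""").fetchone()

# Predict: apply the fitted coefficients to new rows inside the database.
forecasts = conn.execute(
    "SELECT spend, ? + ? * spend AS predicted FROM new_ads ORDER BY spend",
    (intercept, slope),
).fetchall()
print(slope, intercept, forecasts)
```

Wrapping the two queries in a stored procedure (where the database supports them) is one way to automate the refit-and-score cycle described above.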

Such capabilities are crucial for industries that rely heavily on data-driven decisions.

Advanced Algorithms for Structured Data

Structured data benefits greatly from advanced algorithms implemented in SQL.

Most deep learning and neural network tasks are still handled outside SQL, but newer technologies and extensions are bringing them closer to the database environment.

For example, the dask-sql library supports machine learning tasks, allowing for complex computations directly in SQL.

This integration facilitates tasks such as feature engineering and model deployment, which are critical steps in developing robust machine learning models.

By bridging the gap between complex algorithms and SQL, professionals can achieve greater efficiency and precision in data analysis.

Utilizing Programming Frameworks and Libraries

Using programming frameworks and libraries optimizes data manipulation and machine learning model development. This section highlights key libraries in Python and R for data analysis and integration with SQL for machine learning frameworks.

Python and R Libraries for Data Analysis

Python and R are popular languages for data analysis.

Python is known for its extensive libraries like Pandas and Scikit-learn. Pandas makes manipulating large datasets easy with features for reading, filtering, and transforming data. Scikit-learn offers a simple interface for implementing machine learning algorithms, making it ideal for beginners and experts alike.

R is another powerful language used in statistics and data analysis. It offers libraries like dplyr for data manipulation and caret for machine learning. These libraries provide tools to process data and support predictive modeling.

Both languages enable data professionals to manage datasets effectively and prepare them for machine learning applications.

Integrating SQL with Machine Learning Frameworks

SQL databases can store and manage large datasets for machine learning. Frameworks such as TensorFlow can read training data directly with SQL queries (for example, tf.data.experimental.SqlDataset reads from SQLite), which avoids exporting intermediate files.

SQL supports quick data retrieval, essential when training models as it reduces loading times.

For instance, PostgresML can integrate with pre-trained models to streamline workflows. Moreover, querying data through SQL at training time helps ensure that models are trained on recent and relevant data.

Using SQL with machine learning frameworks bridges the gap between data storage and analysis.

It allows for seamless transitions from data retrieval to model training, optimizing efficiency. This integration suits data scientists aiming to improve their model-building process.

Data Mining and Predictive Analytics

Data mining and predictive analytics use statistical techniques and algorithms to analyze historical data, identify patterns, and make predictions.

This section focuses on specific applications like anomaly detection, sentiment analysis, recommendation systems, and collaborative filtering.

Anomaly Detection and Sentiment Analysis

Anomaly detection is about finding unusual patterns in data, which can indicate errors or fraud.

It’s crucial in fields like finance and cybersecurity, where early detection of anomalies can prevent significant losses.

Machine learning models help flag data points that deviate from expected patterns.

Sentiment analysis examines text data to understand emotions and opinions. It is widely used in marketing and customer service to gauge public sentiment.

For instance, analyzing social media posts can help predict brand perception. Machine learning algorithms process language data to classify these emotions effectively, aiding businesses in decision-making.

Recommendation Systems and Collaborative Filtering

Recommendation systems suggest items to users based on past preferences. They are vital in e-commerce and streaming services to enhance user experience.

These systems predict a user’s liking for items by analyzing past behavior.

Collaborative filtering improves this by using data from multiple users to recommend items. This approach matches users with similar tastes, predicting preferences even for items a user has not yet rated or seen.

For example, if a user liked certain movies, the system predicts they might enjoy similar movies that others with similar interests have liked.

This data-driven approach offers personalized recommendations, enhancing user satisfaction.

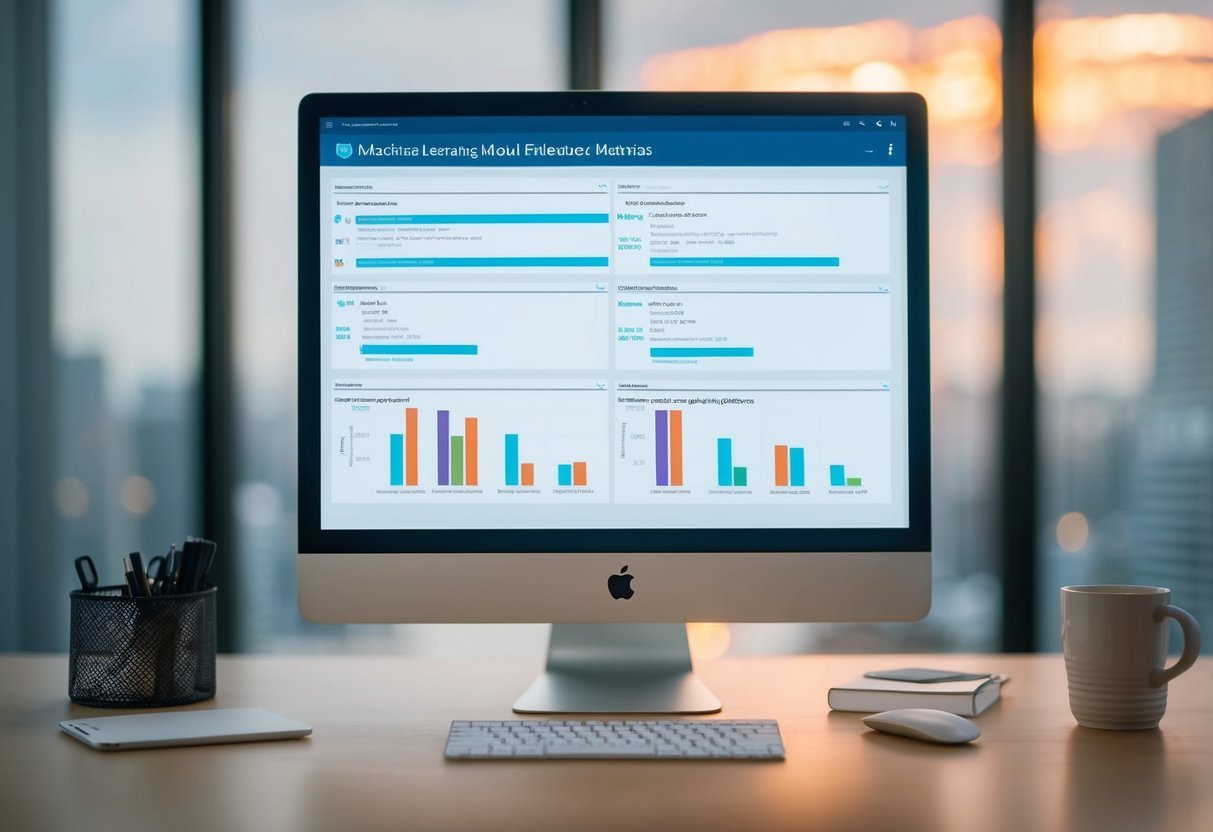

Performance Metrics and Model Evaluation

Performance metrics and model evaluation are essential in ensuring the accuracy and effectiveness of machine learning models. These metrics help highlight model strengths and areas needing improvement, supporting data-driven decisions.

SQL plays a crucial role in refining data for these evaluations.

Cross-Validation and Performance Metrics

Cross-validation is a method used to assess how a model will perform on unseen data.

In k-fold cross-validation, the dataset is split into k subsets (folds). The model is trained on k - 1 folds and tested on the remaining fold, and the process rotates until every fold has served once as the test set. Averaging the results helps detect issues like overfitting.
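Fold assignment and the train/test rotation of k-fold cross-validation can be driven from SQL. Each row gets a fold id, the model is trained on the other k - 1 folds, and it is scored on the held-out fold. To keep the focus on the rotation, the "model" here is a hypothetical mean-predictor baseline. This sketch assumes SQLite through Python's `sqlite3` and a deterministic `(id - 1) % k` fold assignment. Real pipelines usually shuffle rows first.

```python
import sqlite3

K = 3
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE samples (id INTEGER PRIMARY KEY, y REAL)")
conn.executemany("INSERT INTO samples (y) VALUES (?)", [(float(v),) for v in range(1, 7)])

fold_mse = []
for k in range(K):
    (mse,) = conn.execute("""
        WITH labeled AS (
            SELECT y, (id - 1) % ? AS fold FROM samples        -- deterministic fold id
        ),
        model AS (
            SELECT AVG(y) AS pred FROM labeled WHERE fold != ?  -- train on the other K-1 folds
        )
        SELECT AVG((l.y - m.pred) * (l.y - m.pred))             -- test on held-out fold k
        FROM labeled AS l CROSS JOIN model AS m
        WHERE l.fold = ?
    """, (K, k, k)).fetchone()
    fold_mse.append(mse)

cv_mse = sum(fold_mse) / K   # cross-validated estimate of test error
print(fold_mse, cv_mse)
```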

Common performance metrics include accuracy, precision, recall, F1-score, and mean squared error, depending on the problem type.

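Accuracy measures the proportion of correct predictions. Precision (the share of predicted positives that are truly positive) matters when false positives are costly, and recall (the share of actual positives that the model finds) matters when false negatives are costly.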

The F1-score, the harmonic mean of precision and recall, balances the two when both are important. For regression tasks, mean squared error summarizes prediction errors by averaging the squared differences between predicted and actual values.
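When predictions are written back to a table, these metrics can be computed with plain aggregates. This sketch assumes SQLite through Python's `sqlite3`, with hypothetical `clf_preds` (binary labels) and `reg_preds` (continuous targets) tables. F1 uses the count form 2TP / (2TP + FP + FN), which equals the harmonic mean of precision and recall.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE clf_preds (actual INTEGER, predicted INTEGER)")
conn.executemany("INSERT INTO clf_preds VALUES (?, ?)", [
    (1, 1), (1, 1), (1, 0), (1, 0), (0, 1), (0, 0), (0, 0), (0, 0),
])
conn.execute("CREATE TABLE reg_preds (actual REAL, predicted REAL)")
conn.executemany("INSERT INTO reg_preds VALUES (?, ?)",
                 [(3.0, 2.5), (5.0, 5.0), (7.0, 8.0)])

accuracy, precision, recall, f1 = conn.execute("""
    WITH counts AS (
        SELECT SUM(CASE WHEN actual = 1 AND predicted = 1 THEN 1 ELSE 0 END) AS tp,
               SUM(CASE WHEN actual = 0 AND predicted = 1 THEN 1 ELSE 0 END) AS fp,
               SUM(CASE WHEN actual = 1 AND predicted = 0 THEN 1 ELSE 0 END) AS fn,
               SUM(CASE WHEN actual = predicted THEN 1 ELSE 0 END) AS correct,
               COUNT(*) AS n
        FROM clf_preds
    )
    SELECT 1.0 * correct / n,                        -- accuracy
           1.0 * tp / NULLIF(tp + fp, 0),            -- precision
           1.0 * tp / NULLIF(tp + fn, 0),            -- recall
           2.0 * tp / NULLIF(2 * tp + fp + fn, 0)    -- F1
    FROM counts
""").fetchone()

# Mean squared error for the regression predictions.
(mse,) = conn.execute(
    "SELECT AVG((actual - predicted) * (actual - predicted)) FROM reg_preds"
).fetchone()

print(accuracy, precision, recall, f1, mse)
```

NULLIF returns NULL instead of raising an error when a denominator is zero, for example when the model never predicts the positive class.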

Iterative Model Improvement and SQL

Iterative model improvement involves making systematic tweaks based on metric outcomes.

SQL can be pivotal in this process, especially when handling large datasets. By writing efficient SQL queries, one can aggregate, filter, and transform data to create cleaner input for models, ultimately enhancing performance.

Database tools can further optimize data manipulation tasks, ensuring faster and more efficient data handling.

For instance, creating indexed views or using partitioning can significantly speed up data retrieval, aiding iterative model refinement. Using SQL, models can be recalibrated quickly by integrating feedback from ongoing evaluations, ensuring they remain sharp and applicable to real-world scenarios.

SQL Operations for Maintaining Machine Learning Systems

In maintaining machine learning systems, SQL plays a crucial role in handling data and streamlining processes. Key operations involve managing data entries and maintaining databases through various SQL commands and stored procedures.

Managing Data with Insert, Delete, and Update Statements

To handle data efficiently, SQL environments rely on INSERT, DELETE, and UPDATE statements.

The INSERT INTO statement adds new records to existing tables, keeping datasets up to date with relevant information. Meanwhile, the DELETE statement removes outdated or irrelevant data, keeping the datasets clean and precise for model training.

Lastly, the UPDATE statement modifies existing records based on new findings, so that data remains relevant and useful for ongoing machine learning processes. These operations support data accuracy and accessibility within the system.
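The following sketch shows the three statements maintaining a training table: appending a newly labeled example, deleting rows older than a retention cutoff, and correcting a label. It assumes SQLite through Python's `sqlite3`. The `training_data` table, the cutoff date, and the label correction are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE training_data (id INTEGER PRIMARY KEY, feature REAL, "
    "label INTEGER, collected TEXT)"
)
conn.executemany(
    "INSERT INTO training_data (feature, label, collected) VALUES (?, ?, ?)",
    [(0.5, 1, "2021-03-01"), (0.7, 0, "2024-05-10"), (0.9, 1, "2024-06-02")],
)

# The connection context manager commits all three changes together (or rolls back on error).
with conn:
    # INSERT: append a newly labeled example.
    conn.execute(
        "INSERT INTO training_data (feature, label, collected) VALUES (?, ?, ?)",
        (0.3, 0, "2024-07-15"),
    )
    # DELETE: drop examples older than an assumed retention cutoff.
    # ISO-8601 date strings compare correctly as text.
    conn.execute("DELETE FROM training_data WHERE collected < ?", ("2023-01-01",))
    # UPDATE: correct a label after review.
    conn.execute("UPDATE training_data SET label = ? WHERE id = ?", (1, 2))

rows = conn.execute("SELECT id, feature, label FROM training_data ORDER BY id").fetchall()
print(rows)
```

Parameter placeholders (`?`) keep values out of the SQL text, which avoids quoting bugs and SQL injection.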

Database Maintenance and Stored Procedures

Stored procedures support effective database maintenance by automating routine tasks. They are named, saved routines of SQL code that the database executes on demand, which makes complex operations repeatable. They handle tasks like data validation and routine updates, reducing the workload on database administrators.

Moreover, the use of user-defined functions in conjunction with stored procedures enhances customization options, allowing unique data manipulations that cater to specific model needs.

A well-maintained database through these means not only ensures data integrity but also boosts overall system performance, facilitating smoother machine learning model maintenance.

Best Practices and Advanced SQL Techniques

Advanced SQL techniques can greatly enhance the performance and security of machine learning models. Effective use of SQL ensures efficient data processing, robust security, and optimal resource management.

Securing Data and Implementing Access Control

Securing data is essential in SQL-based systems. Implementing access control ensures only authorized personnel have access to sensitive information.

Role-based access control (RBAC) is a structured approach that assigns access rights based on user roles.

Encryption in transit and at rest further enhances security. Regular audits of database access logs help monitor unauthorized attempts and ensure compliance with security protocols.

Data anonymization techniques can also protect sensitive information while preserving much of its analytical value. These methods help safeguard privacy, which is vital for data-driven decision-making.
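Two common building blocks can be applied directly in a query: replacing direct identifiers with salted hashes, and generalizing quasi-identifiers such as exact age into bands. Salted hashing is pseudonymization rather than full anonymization, because the same input always maps to the same key. This sketch assumes SQLite through Python's `sqlite3`, with the hash computed by a registered Python function. The `patients` table, the salt, and the 10-year age bands are hypothetical.

```python
import hashlib
import sqlite3

# Hypothetical secret; in practice, load it from a secrets store, never from source code.
SALT = b"example-salt-do-not-use"

def pseudonymize(value):
    """Replace an identifier with a salted SHA-256 digest (truncated for readability)."""
    return hashlib.sha256(SALT + str(value).encode("utf-8")).hexdigest()[:16]

conn = sqlite3.connect(":memory:")
conn.create_function("pseudonymize", 1, pseudonymize)
conn.execute("CREATE TABLE patients (email TEXT, age INTEGER, diagnosis TEXT)")
conn.executemany("INSERT INTO patients VALUES (?, ?, ?)", [
    ("a@example.com", 34, "flu"),
    ("b@example.com", 61, "asthma"),
    ("a@example.com", 34, "cold"),
])

rows = conn.execute("""
    SELECT pseudonymize(email) AS patient_key,   -- stable key without the raw email
           (age / 10) * 10 AS age_band,          -- integer division: 34 -> 30
           diagnosis
    FROM patients
    ORDER BY rowid
""").fetchall()
print(rows)
```

The stable key still lets analysts join or count records per patient, which is the analytical value the paragraph refers to.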

Optimization and Performance Tuning

Optimizing SQL queries is crucial for improving performance in data manipulations.

Indexing is a common method to speed up data retrieval. However, excessive indexing can slow down updates, so balance is key.
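SQLite's `EXPLAIN QUERY PLAN` shows the effect of an index: before the index exists, the filter requires a full scan, and afterwards the planner can search the index. This sketch assumes SQLite through Python's `sqlite3`. The `events` table and index name are hypothetical, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, value REAL)")
conn.executemany("INSERT INTO events (user_id, value) VALUES (?, ?)",
                 [(i % 100, float(i)) for i in range(1000)])

query = "SELECT SUM(value) FROM events WHERE user_id = ?"

def plan(sql):
    """Return the planner's description; the detail text is the 4th column of each row."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)))

before = plan(query)   # typically a full-table SCAN
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
after = plan(query)    # typically SEARCH ... USING INDEX idx_events_user

total = conn.execute(query, (42,)).fetchone()[0]   # same answer either way
print(before)
print(after)
print(total)
```

Each additional index must be updated on every INSERT, UPDATE, and DELETE, which is the write-side cost behind the balance described above.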

Using partitioning helps in managing large datasets by breaking them into smaller, manageable pieces.

Proper use of caching and query optimization techniques can significantly reduce response times. Additionally, monitoring tools can identify bottlenecks and optimize resource allocation.

Efficient query structures and minimizing nested subqueries contribute to better performance, aiding business intelligence processes by providing timely insights.

Case Studies and Real-world Applications

Exploring the use of SQL in data manipulation for machine learning shines a light on critical areas like customer segmentation and healthcare data analysis. These fields leverage SQL to extract valuable insights from vast datasets, supporting data-driven decision-making and business intelligence.

Customer Segmentation in Retail

In retail, customer segmentation helps businesses group their customers based on buying behaviors and preferences.

By utilizing SQL data manipulation, retailers can create detailed customer profiles and identify trends. This enables personalized marketing strategies and enhances customer satisfaction.

SQL queries can sift through transaction histories, demographic data, and online behaviors. For example, retailers might examine purchase frequency or average spending per visit.
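Purchase frequency and average spend per visit can be derived from raw transactions and turned into segments in one query. This sketch assumes SQLite through Python's `sqlite3`. The `transactions` table, the rule that a visit is a distinct date, and the rule-based thresholds (3 visits, 50 per visit) are hypothetical. A clustering step could replace the hand-set rules.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (customer_id INTEGER, visit_date TEXT, amount REAL)")
conn.executemany("INSERT INTO transactions VALUES (?, ?, ?)", [
    (1, "2024-01-02", 60.0), (1, "2024-01-09", 70.0), (1, "2024-01-16", 80.0),
    (2, "2024-01-01", 10.0), (2, "2024-01-01", 5.0),   # two purchases in one visit
    (2, "2024-01-05", 20.0), (2, "2024-01-09", 25.0),
    (3, "2024-01-03", 120.0),
    (4, "2024-01-04", 15.0),
])

segments = conn.execute("""
    WITH per_customer AS (
        SELECT customer_id,
               COUNT(DISTINCT visit_date) AS visits,                       -- purchase frequency
               SUM(amount) / COUNT(DISTINCT visit_date) AS avg_spend_per_visit
        FROM transactions
        GROUP BY customer_id
    )
    SELECT customer_id, visits, avg_spend_per_visit,
           CASE
               WHEN visits >= 3 AND avg_spend_per_visit >= 50 THEN 'loyal high spender'
               WHEN visits >= 3 THEN 'frequent'
               WHEN avg_spend_per_visit >= 50 THEN 'occasional big spender'
               ELSE 'casual'
           END AS segment
    FROM per_customer
    ORDER BY customer_id
""").fetchall()

for row in segments:
    print(row)
```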

By analyzing this data, businesses can target promotions more effectively and increase sales efficiency.

In practice, companies use SQL-based segmentation to adjust inventory and store layout based on customer data, a practical application of business intelligence that can improve the customer experience.

This targeted approach not only boosts sales but also builds stronger customer relationships, reflecting the power of data-driven strategies.

Healthcare Data Analysis

In the healthcare sector, data analysis plays a crucial role in patient care and operational efficiency.

Using SQL, practitioners and administrators can manage and interpret massive datasets. This includes patient records, treatment outcomes, and resource allocation.

SQL helps hospitals track health trends, improve patient outcomes, and reduce costs. For instance, analyzing patient admission data allows healthcare providers to forecast patient needs and allocate resources effectively.

Real-world applications highlight the importance of SQL in this field. By using data-driven insights, healthcare organizations can enhance patient treatment plans and streamline operations.

These capabilities enable them to adapt to the demands of a rapidly evolving healthcare environment, demonstrating the practical benefits of SQL in improving patient care and institutional performance.

Frequently Asked Questions

Data manipulation using SQL is crucial for preparing datasets for machine learning models. This process involves everything from data extraction to integration with Python for enhanced analysis and model training.

How can you perform data manipulation in SQL for training machine learning models?

Data manipulation in SQL involves using commands to clean, filter, and transform datasets.

SQL commands like SELECT, JOIN, WHERE, and GROUP BY help extract and refine data, making it suitable for machine learning models. By structuring data correctly, SQL prepares it for the model training phase.

What are some examples of SQL Server being used for machine learning?

SQL Server can be used to integrate custom models or for data pre-processing. It supports in-database analytics, enabling the execution of machine learning scripts close to the data source.

Running scripts close to the data reduces data movement, which can speed up both training and scoring.

In what ways is SQL important for pre-processing data in machine learning workflows?

SQL is fundamental for cleaning and organizing data before feeding it into machine learning models.

It can handle missing values, detect outliers, and compute engineered features. SQL’s efficiency in data retrieval and preparation streamlines the pre-processing stage, which supports more accurate models.

How can Python and SQL together be utilized in a machine learning project?

Python and SQL complement each other by combining robust data handling with powerful analysis tools.

SQL fetches and manipulates data, while Python uses libraries like Pandas and scikit-learn for statistical analysis and model development. This integration allows seamless data flow and efficient machine learning processes.

What techniques are available for integrating SQL data manipulation into a machine learning model in Python?

One method involves using SQLAlchemy or similar libraries to query data and bring it into Pandas DataFrames.

This makes it easier to leverage Python’s machine learning tools to analyze and create models. Techniques like these allow data scientists to manipulate and analyze data effectively within Python.

What is the role of data manipulation through SQL when training a GPT model with custom data?

For GPT model training with custom data, SQL is used to extract, organize, and preprocess text data.

SQL helps ensure the data is in the correct format and structure before it is fed to the model. This step is vital for training the model effectively on specific datasets.