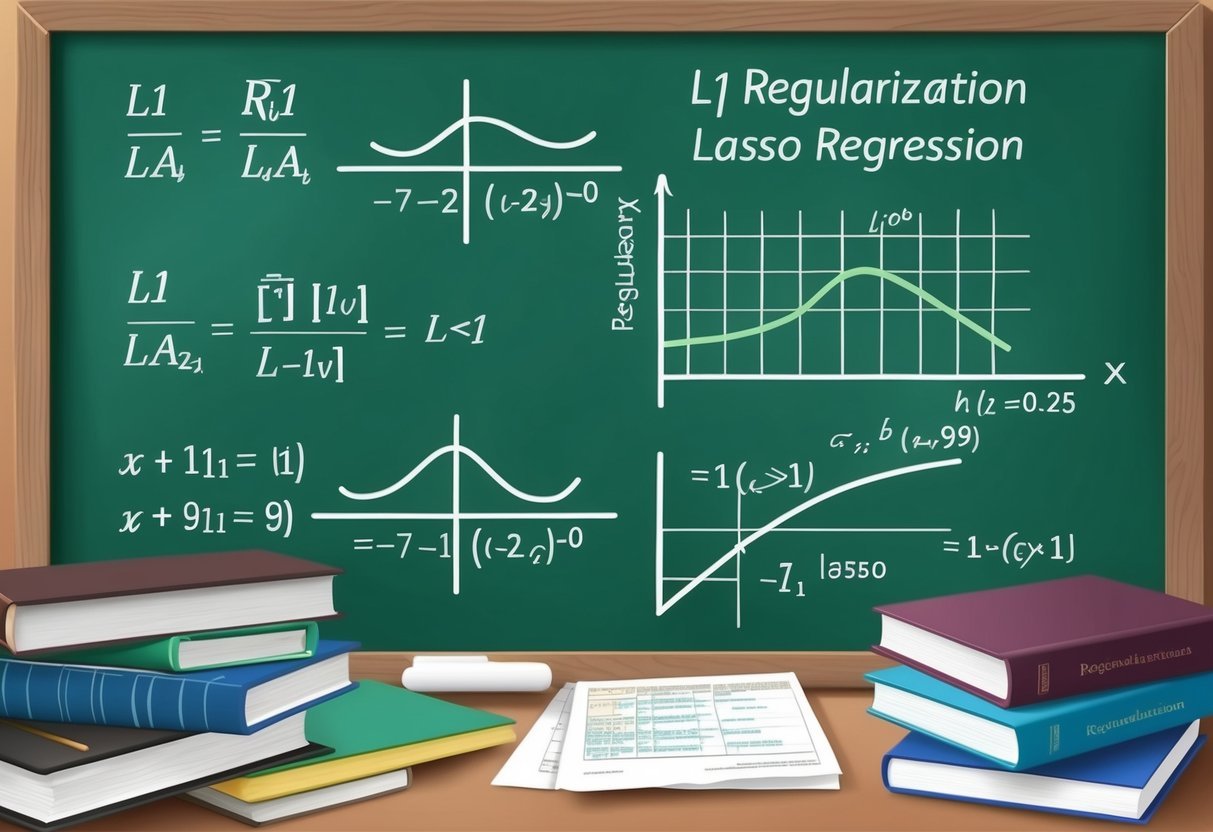

Understanding L1 Regularization

L1 regularization, the penalty used in Lasso Regression, is a technique used in machine learning to reduce overfitting and improve how well a model generalizes.

It involves adding a penalty term to the loss function, encouraging simpler models with fewer nonzero coefficients.

In the context of L1 regularization, the penalty term is the sum of the absolute values of the coefficients, multiplied by a parameter, often denoted as λ (lambda).
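As a minimal sketch of that penalty term, the function below computes λ times the sum of absolute coefficient values. The function name `l1_penalty` and the example numbers are illustrative assumptions, not part of any library.

```python
def l1_penalty(coefficients, lam):
    """Return lam times the sum of the absolute coefficient values."""
    return lam * sum(abs(c) for c in coefficients)

# Hypothetical coefficients; the zero entry contributes nothing to the penalty
coefs = [0.5, -2.0, 0.0, 1.5]
penalty = l1_penalty(coefs, lam=0.1)
print(penalty)
```

Because the penalty grows with every nonzero coefficient, the optimizer has an incentive to drop coefficients that do little to reduce the loss.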

This can shrink some coefficients to zero, effectively performing feature selection.

A main advantage of L1 regularization is how it handles the bias-variance tradeoff: it accepts a small increase in bias in exchange for lower variance. By keeping only the most important features, it reduces variance while keeping the added bias manageable.

L1 regularization is commonly applied in high-dimensional datasets where numerous features might lead to overfitting.

By simplifying the model, L1 regularization enhances prediction accuracy and generalization capabilities.

L1 regularization is often compared to L2 regularization, which uses squared coefficients instead of absolute values.

While both methods aim to control model complexity, L1 is particularly effective in scenarios where feature selection is crucial.

The key to L1 regularization is its simplicity: it improves models by automatically choosing which features to keep and which to ignore. This selective approach makes L1 regularization a valuable tool in statistical modeling and machine learning.

Fundamentals of Lasso Regression

Lasso regression, or Least Absolute Shrinkage and Selection Operator, is a powerful tool in machine learning and statistics. It is widely used for feature selection and regularization in linear models. Important differences exist between Lasso and other methods like Ridge Regression.

Core Concepts of Lasso

Lasso focuses on reducing overfitting by adding a penalty to the absolute size of the coefficients in a model. This penalty is known as L1 regularization.

By doing so, Lasso can effectively shrink some coefficients to zero, leading to simpler models with relevant features. This characteristic makes it a valuable tool for feature selection in high-dimensional datasets.

The mathematical formulation of Lasso involves minimizing the sum of squared errors with a constraint on the sum of the absolute values of coefficients.

The balance between fitting the data and keeping coefficients small is controlled by a tuning parameter, often called lambda (λ).

Small values of λ can lead to models resembling ordinary linear regression, while larger values increase the regularization effect.
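The constrained and penalized views described above can be written side by side. This is a sketch under stated assumptions: θ denotes the coefficients, m the number of observations, n the number of features, t the constraint budget, and the intercept is omitted for brevity.

\[
\min_{\theta} \sum_{j=1}^{m} \Bigl( y_j - \sum_{i=1}^{n} x_{ji}\,\theta_i \Bigr)^2
\quad \text{subject to} \quad \sum_{i=1}^{n} |\theta_i| \le t
\]

\[
\min_{\theta} \sum_{j=1}^{m} \Bigl( y_j - \sum_{i=1}^{n} x_{ji}\,\theta_i \Bigr)^2 + \lambda \sum_{i=1}^{n} |\theta_i|
\]

Each value of λ in the second form corresponds to some budget t in the first: a larger λ matches a smaller t, and λ = 0 recovers ordinary least squares.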

Differences from Ridge Regression

Although both Lasso and Ridge Regression are forms of regularization, their approaches differ significantly.

Lasso uses L1 regularization, which means it penalizes the coefficients by their absolute values. In contrast, Ridge Regression applies L2 regularization, penalizing the square of the coefficients.

A key difference is that Lasso can set some coefficients exactly to zero. This results in models that are often simpler and easier to interpret. Ridge Regression, on the other hand, tends to keep all features in the model, shrinking them only toward zero. Consequently, Lasso is often chosen for cases where feature selection is crucial.
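The difference is easiest to see with a single coefficient. The sketch below assumes a simplified one-dimensional problem in which `z` is the unregularized estimate; the function names are hypothetical. Under that assumption, the L1 solution is a soft-threshold and the L2 solution is a proportional rescaling.

```python
def lasso_1d(z, lam):
    """Minimizer of 0.5*(z - b)**2 + lam*abs(b): soft-thresholding."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def ridge_1d(z, lam):
    """Minimizer of 0.5*(z - b)**2 + 0.5*lam*b**2: proportional shrinkage."""
    return z / (1.0 + lam)

lam = 1.0
for z in [3.0, 0.8, -0.3]:
    # Lasso returns exactly 0.0 whenever abs(z) <= lam; ridge never does for z != 0
    print(z, lasso_1d(z, lam), ridge_1d(z, lam))
```

Any estimate whose magnitude falls below λ is set exactly to zero by the L1 rule, while the L2 rule only makes it smaller.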

These distinctions help users choose the right method based on the specific needs of their data analysis tasks.

Mathematical Formulation of Lasso

Lasso regression is a technique that helps enhance model accuracy and interpretability. It involves L1 regularization, which adds a penalty to the cost function. This penalty term forces some coefficients to be exactly zero, aiding in feature selection.

The cost function for lasso is:

\[ J(\theta) = \text{Loss Function} + \lambda \sum_{i=1}^{n} |\theta_i| \]

- Loss Function: Often, the loss function is the mean squared error for regression tasks.

- Regularization term: Here, \(\lambda\) is the regularization parameter that determines the strength of the penalty. Larger \(\lambda\) values increase regularization, which can lead to simpler models.

Training minimizes this cost function, which balances model fit (the loss function) against complexity (the regularization term).

In lasso regression, this can lead to sparse models by shrinking some coefficients to zero, effectively eliminating some features from the model.
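To make the cost function concrete, here is a small sketch that evaluates it for a linear model, using mean squared error as the loss. The function name `lasso_cost`, the toy data, and the omission of an intercept are assumptions made for illustration.

```python
def lasso_cost(X, y, theta, lam):
    """Mean squared error plus lam times the L1 norm of theta (no intercept)."""
    squared_errors = 0.0
    for row, target in zip(X, y):
        prediction = sum(x * t for x, t in zip(row, theta))
        squared_errors += (target - prediction) ** 2
    mse = squared_errors / len(y)
    penalty = lam * sum(abs(t) for t in theta)
    return mse + penalty

# Toy data generated exactly by theta = [1.0, 2.0]
X = [[1.0, 2.0], [2.0, 0.0], [3.0, 1.0]]
y = [5.0, 2.0, 5.0]

cost_dense = lasso_cost(X, y, theta=[1.0, 2.0], lam=0.5)   # perfect fit, penalty only
cost_sparse = lasso_cost(X, y, theta=[1.0, 0.0], lam=0.5)  # smaller penalty, worse fit
print(cost_dense, cost_sparse)
```

Here the dense solution still wins because dropping the second feature hurts the fit far more than it saves in penalty; with a weaker feature or a larger λ the comparison can flip.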

This mathematical strategy helps combat overfitting by discouraging overly complex models. By introducing penalties tied to the absolute values of coefficients, lasso helps keep models both accurate and interpretable.

For more detailed insights, Lasso’s approach to regularization can be seen in Stanford’s exploration of feature selection.

Advantages of Lasso in Feature Selection

Lasso Regression, known for its L1 regularization, is valuable for improving model accuracy by focusing on critical features. It reduces complexity and enhances interpretability, especially when models are dealing with numerous variables.

Promoting Model Sparsity

Lasso Regression promotes sparsity by reducing many feature coefficients to zero. This helps in identifying only the most significant variables and ignoring irrelevant features.

By adding a penalty for large coefficients, it encourages a simpler and more efficient model.

This method is particularly effective in high-dimensional datasets where distinguishing between relevant and irrelevant features is crucial. The sparsity it creates is beneficial for creating models that are not only easier to interpret but also faster in processing.

Handling Multicollinearity

Multicollinearity occurs when independent variables in a dataset are highly correlated, which can complicate model interpretation. Lasso tends to keep one variable from a group of correlated features and set the others to zero, reducing redundant feature inclusion.

This selection lowers the variance of the estimates at the cost of some bias, since lasso coefficients are shrunk toward zero. Which variable is kept from a correlated group can be somewhat arbitrary and may change between samples, so the selected set should be interpreted with care. Even so, models built with Lasso Regression are often more reliable in prediction than unregularized models when multicollinearity is present.

By simplifying the feature set, it helps in enhancing the robustness of statistical models.
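The behavior with correlated features can be illustrated with an extreme case: two identical columns. The sketch below uses a plain cyclic coordinate descent solver written for this example (not a library routine), with no intercept and a hypothetical toy dataset.

```python
def soft_threshold(value, lam):
    """Shrink value toward zero by lam, returning 0.0 inside [-lam, lam]."""
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0

def lasso_coordinate_descent(X, y, lam, n_sweeps=50):
    """Minimize (1/(2n))*RSS + lam*L1 by cyclic coordinate descent (no intercept)."""
    n, p = len(X), len(X[0])
    coefs = [0.0] * p
    for _ in range(n_sweeps):
        for j in range(p):
            rho = 0.0   # feature j times the residual that ignores feature j
            norm = 0.0  # squared length of feature j
            for row, target in zip(X, y):
                fitted_without_j = sum(row[k] * coefs[k] for k in range(p) if k != j)
                rho += row[j] * (target - fitted_without_j)
                norm += row[j] ** 2
            coefs[j] = soft_threshold(rho / n, lam) / (norm / n)
    return coefs

values = [-2.0, -1.0, 0.0, 1.0, 2.0]
X = [[v, v] for v in values]    # two perfectly correlated features
y = [3.0 * v for v in values]   # target depends on the shared signal
coefs = lasso_coordinate_descent(X, y, lam=0.1)
print(coefs)
```

In this run the first feature receives essentially all of the weight and the second stays at or very near zero. The choice is driven by update order rather than by any real difference between the features, which is why the selected variable in a correlated group should not be over-interpreted.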

Comparing L1 and L2 Regularization

L1 Regularization (Lasso Regression) and L2 Regularization (Ridge Regression) are techniques used in machine learning to prevent overfitting.

L1 regularization adds the “absolute value of magnitude” of coefficients as a penalty term. This tends to make some of the weights exactly zero, leading to sparse models and making it valuable for feature selection. More details can be found in Understanding L1 and L2 Regularization for Deep Learning.

L2 regularization, on the other hand, adds the “squared magnitude” of coefficients as a penalty term. Unlike L1, it does not force coefficients to become zero, but rather shrinks them all toward zero, penalizing large coefficients most heavily.

This method is often more stable for models where feature selection is not important. Further insights are available in Understanding Regularization: L1 vs. L2 Methods Compared.

Key Differences

- L1 Regularization: Leads to sparse models, useful for feature selection.

- L2 Regularization: Shrinks all coefficients without reducing them to zero, and tends to share weight among correlated features.

Both techniques are widely used in machine learning, each with unique advantages for different types of problems. Combining them can sometimes provide a balanced approach to regularization challenges. For more, visit The Difference Between L1 and L2 Regularization.

Optimizing the Lasso Regression Model

When optimizing a Lasso Regression model, selecting the right hyperparameters and using effective cross-validation techniques are crucial. These steps help balance bias and variance, minimizing the mean squared error.

Tuning Hyperparameters

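Tuning hyperparameters is vital for Lasso Regression. The main tuning parameter is the L1 regularization strength, written λ earlier. scikit-learn calls this parameter alpha, whereas in R's glmnet the strength is lambda and alpha is the elastic net mixing parameter (alpha = 1 gives lasso). The rest of this section uses alpha in the scikit-learn sense.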

A higher alpha penalizes large coefficients more, which can help reduce overfitting by creating a sparser model.

To find the optimal alpha, try different values and evaluate the model’s performance on a validation set. Using a grid search approach is common. It systematically tests a range of alpha values, often spaced on a logarithmic scale, and picks the value that results in the lowest validation mean squared error.
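A grid search of this kind can be sketched in a few lines. The example below is self-contained and uses a small hand-written coordinate descent solver, synthetic data, and an arbitrary alpha grid; all of these are assumptions for illustration rather than a recommended setup.

```python
import random

def soft_threshold(value, threshold):
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0

def fit_lasso(X, y, alpha, n_sweeps=50):
    """Cyclic coordinate descent for (1/(2n))*RSS + alpha*L1, no intercept."""
    n, p = len(X), len(X[0])
    coefs = [0.0] * p
    for _ in range(n_sweeps):
        for j in range(p):
            rho, norm = 0.0, 0.0
            for row, target in zip(X, y):
                partial = sum(row[k] * coefs[k] for k in range(p) if k != j)
                rho += row[j] * (target - partial)
                norm += row[j] ** 2
            coefs[j] = soft_threshold(rho / n, alpha) / (norm / n)
    return coefs

def mean_squared_error(X, y, coefs):
    errors = [(t - sum(x * c for x, c in zip(row, coefs))) ** 2 for row, t in zip(X, y)]
    return sum(errors) / len(errors)

rng = random.Random(0)
# Synthetic data: only the first 2 of 6 features influence the target
X = [[rng.gauss(0, 1) for _ in range(6)] for _ in range(80)]
y = [2.0 * row[0] - 1.0 * row[1] + rng.gauss(0, 0.5) for row in X]
X_train, y_train = X[:60], y[:60]
X_val, y_val = X[60:], y[60:]

alphas = [0.001, 0.01, 0.1, 0.5, 1.0]
scores = {}
for alpha in alphas:
    coefs = fit_lasso(X_train, y_train, alpha)
    scores[alpha] = mean_squared_error(X_val, y_val, coefs)

best_alpha = min(scores, key=scores.get)
print(best_alpha, scores[best_alpha])
```

A single validation split makes the chosen alpha depend on how the data happened to be divided, which is the motivation for cross-validation.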

This approach helps in understanding how different hyperparameter settings affect model performance.

Cross-Validation Techniques

Cross-validation techniques are essential to assess model performance and improve its reliability.

The most common method is k-fold cross-validation, which involves splitting the data into k subsets. The model is trained on k-1 of these subsets, and validated on the remaining one. This process is repeated k times, with each subset used once as the validation set.
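The splitting step can be sketched as a small generator. The name `k_fold_indices` is hypothetical, and the folds here are contiguous; in practice the indices are usually shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    indices = list(range(n_samples))
    # Spread the remainder so fold sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

for train, validation in k_fold_indices(n_samples=10, k=3):
    print("train:", train, "validation:", validation)
```

Every sample lands in exactly one validation fold, so averaging the k validation errors uses all of the data for evaluation without ever scoring a model on rows it was trained on.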

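A variation of this is stratified k-fold cross-validation, which keeps the distribution of the target similar in every fold. It is defined for class labels, so it is particularly useful for imbalanced classification data; for a continuous regression target, the values would first need to be binned.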

Cross-validation helps in controlling bias and variance and provides a more robust estimate of the model’s mean squared error.

Using these techniques ensures that the model isn’t sensitive to a single data split and performs consistently across various samples.

Impact of Regularization on Overfitting

Regularization is a key technique in machine learning for managing overfitting, which happens when a model learns the training data too well, including noise and random fluctuations.

Overfitting often leads to poor performance on new data because the model doesn’t generalize well. When a model is too complicated, it captures this noise along with the underlying pattern.

Regularization Techniques:

- L1 Regularization (Lasso): Adds the absolute value of coefficients as a penalty to the model’s error. This can result in some coefficients becoming zero, effectively reducing the complexity of the model by selecting only significant features. Learn more about L1 regularization’s impact on feature selection and overfitting from DataHeadhunters.

- L2 Regularization (Ridge): Adds the squared magnitude of coefficients to the penalty. This shrinks the coefficients towards zero, reducing model complexity without necessarily setting them to zero.

Bias-Variance Tradeoff:

Regularization helps balance the bias-variance tradeoff. Low bias and high variance can indicate overfitting.

By introducing a penalty on the model’s complexity, regularization increases bias slightly but decreases variance, resulting in a more generalized model.

Implementing regularization wisely can prevent overfitting and improve a model’s ability to generalize from training data to new, unseen data. When done correctly, it ensures that a model captures the fundamental patterns without memorizing the noise.

Handling High-Dimensional Data with Lasso

Lasso regression is a powerful tool for managing high-dimensional data. It applies L1 regularization, which adds a penalty equal to the sum of the absolute values of the coefficients.

This method effectively controls model complexity and reduces overfitting.

A key advantage of lasso regression is its ability to perform variable selection. By forcing some coefficients to zero, it automatically eliminates less important features, helping to focus on the most relevant ones.

This makes it particularly useful for creating more interpretable and sparse models.

In the context of high-dimensional data, where there are more features than data points, ordinary least squares has no unique solution, and lasso regression is a valuable alternative. It copes with multicollinearity by keeping a subset of correlated predictors, and it often improves prediction performance.

With a sufficiently strong penalty, only a few variables are selected, which simplifies the model and can improve its predictive power.

High-dimensional datasets often contain noise and irrelevant data. Lasso regression minimizes the impact of this noise by focusing on significant variables and reducing the complexity of the data.

A detailed guide on handling high-dimensional data highlights how L1 regularization aids in feature selection. Researchers and data scientists utilize these features for better model accuracy and efficiency.

Lasso Regression and Model Interpretability

Lasso regression enhances interpretability by simplifying regression models. It uses L1 regularization to push the coefficients of less important features to zero.

This results in models that are more sparse and easier to understand.

Increased sparsity means fewer variables are included, making it simple to identify which features are most influential. This is a form of feature selection, as it naturally highlights significant variables in the model.

Feature selection through lasso also aids in reducing overfitting. By only retaining impactful features, the model generalizes better to unseen data.

This makes it a valuable tool for analysts and data scientists.

Comparing lasso with other methods, such as ridge regression, lasso stands out for its ability to zero-out coefficients. While ridge adjusts coefficients’ sizes, it doesn’t eliminate them, making lasso uniquely effective for interpretability.

Applying lasso in both linear and logistic regression contributes to a more straightforward analysis. For people seeking to balance model accuracy and simplicity, lasso regression is a reliable option.

In practice, interpreting model results becomes simpler with fewer coefficients. Because of this, analysts can communicate findings more effectively, supporting decision-making processes. Lasso’s ability to enforce sparsity ensures a clearer picture of the data landscape.

Case Studies of Lasso Regression in Practice

Lasso regression is widely used to fit predictive models, particularly in cases with high-dimensional data. It can improve accuracy on new data by applying a penalty that pushes regression coefficients towards zero. This results in simpler and more interpretable models.

In a medical study, researchers used lasso regression to identify key predictors of disease from a vast set of genetic data. By applying L1 regularization, they were able to enhance the model’s predictive power while reducing the risk of overfitting.

Real estate analysts often employ lasso regression in R to predict housing prices. With numerous variables like square footage, location, and amenities, lasso helps in selecting the most influential features, offering more accurate estimates.

In marketing, businesses utilize lasso regression for customer behavior analysis. By selecting important variables from customer data, companies can tailor their strategies to target specific segments effectively. This ensures more personalized marketing campaigns.

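Here is a simple example of fitting a lasso model in R with the glmnet package, using simulated data:

```r
library(glmnet)

set.seed(42)  # make the simulated data reproducible

# Example data: 100 observations, 20 predictors; only the first two affect y
x <- matrix(rnorm(100 * 20), 100, 20)
y <- 2 * x[, 1] - x[, 2] + rnorm(100)

# Fit the lasso path (alpha = 1 selects the L1 penalty; alpha = 0 would be ridge)
model <- glmnet(x, y, alpha = 1)

# View coefficients at one penalty strength (s is the lambda value, chosen arbitrarily here)
coef(model, s = 0.1)
```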

These practical applications demonstrate how lasso regression aids in streamlining complex models and enhancing predictive performance across diverse fields.

Assessing Model Performance

Assessing model performance in Lasso Regression involves evaluating how well the prediction aligns with actual outcomes. Key metrics and the balance between test and training accuracy are critical for a robust analysis.

Evaluation Metrics

One crucial metric for evaluating Lasso Regression is the residual sum of squares (RSS). The RSS measures the sum of squared differences between the observed and predicted outcomes. A lower RSS value indicates better predictive performance of the model.
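As a quick illustration, the RSS and the corresponding mean squared error can be computed directly from observed and predicted values. The numbers below are made up for the example.

```python
def residual_sum_of_squares(observed, predicted):
    """Sum of squared differences between observed and predicted values."""
    return sum((o - p) ** 2 for o, p in zip(observed, predicted))

observed = [3.0, 5.0, 7.0]
predicted = [2.5, 5.0, 8.0]

rss = residual_sum_of_squares(observed, predicted)
mse = rss / len(observed)  # dividing by the sample count gives the mean squared error
print(rss, mse)
```

Because RSS grows with the number of observations, the mean squared error is usually the more convenient figure when comparing datasets of different sizes.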

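Accuracy, the fraction of predictions that are exactly correct, applies to classification models such as L1-penalized logistic regression rather than to regression. For regression tasks, mean squared error (MSE) or R² computed on a validation set plays the same role, confirming that the model generalizes well to new, unseen data.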

These metrics provide a well-rounded view of performance, guiding adjustments to improve the model.

Test vs Training Accuracy

The comparison between test and training performance is crucial in assessing a model.

Strong training performance (high accuracy for classification, low error for regression) indicates that a model fits the data it was trained on. However, if test performance is much worse, it suggests overfitting, where the model struggles with new data.

Closing this gap usually means adjusting Lasso’s regularization strength to find the optimal setting.

This helps the model perform consistently well across different datasets. By monitoring both measures, one can refine the model to achieve reliable prediction outcomes.

Regularization Techniques Beyond Lasso

Ridge Regularization: Ridge regularization, also called L2 regularization, is another popular method. It adds a penalty equal to the square of the magnitude of the coefficients.

This helps in stabilizing solutions to problems like ordinary least squares (OLS) by discouraging overly complex models. It often handles multicollinearity and improves model generalization by reducing variance.

Elastic Net: Elastic Net combines the strengths of both Lasso and Ridge regularization. It introduces penalties that include both the absolute value of coefficients (like Lasso) and their squared values (like Ridge).

This makes Elastic Net suitable for scenarios where there are many more predictors than observations or when predictors are highly correlated.
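The combined penalty can be sketched as a weighted mix of the two terms. The parameterization below, with an overall strength `lam` and a mixing weight `l1_ratio` between 0 and 1, is one common convention and is assumed here for illustration; libraries differ in naming and scaling.

```python
def elastic_net_penalty(coefficients, lam, l1_ratio):
    """Mix of L1 and (half) squared L2 penalties; l1_ratio=1 is pure lasso."""
    l1 = sum(abs(c) for c in coefficients)
    l2 = sum(c ** 2 for c in coefficients)
    return lam * (l1_ratio * l1 + 0.5 * (1.0 - l1_ratio) * l2)

coefs = [1.0, -2.0, 0.0]
print(elastic_net_penalty(coefs, lam=0.1, l1_ratio=1.0))  # lasso-style penalty only
print(elastic_net_penalty(coefs, lam=0.1, l1_ratio=0.0))  # ridge-style penalty only
print(elastic_net_penalty(coefs, lam=0.1, l1_ratio=0.5))  # an even mix
```

Keeping some L1 weight preserves the ability to zero out coefficients, while the L2 part encourages correlated predictors to share weight instead of one being picked arbitrarily.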

Here is a comparison of the three techniques:

| Technique | Penalty | Use Case |
|---|---|---|
| Ridge | Squared values of coefficients | Multicollinearity, general model tuning |
| Lasso | Absolute values of coefficients | Feature selection, sparse models |
| Elastic Net | Combination of Ridge and Lasso | Handling correlated features, high-dimensional data |

Constraints in these techniques guide the model to find the best balance between simplicity and accuracy.

By applying these regularization techniques, models can be tuned to achieve better performance, especially in the presence of large datasets with complex patterns or noise.

Frequently Asked Questions

Lasso regression is a powerful tool in machine learning for tasks like feature selection and dealing with high-dimensional datasets. It introduces the concept of sparsity in model parameters through L1 regularization, setting it apart from other techniques.

How does L1 regularization in Lasso regression affect feature selection?

L1 regularization in Lasso regression applies a penalty to coefficients. This penalty can shrink some coefficients to zero, effectively removing these features from the model.

This feature selection ability helps simplify models and can improve their interpretability.

In what scenarios is Lasso regression preferred over Ridge regression?

Lasso regression is preferred when the goal is to perform automatic feature selection. It is particularly useful when reducing the number of features is important, such as in high-dimensional datasets.

In contrast, Ridge regression is better when dealing with multicollinearity without dropping variables.

Can you explain how L1 regularization can lead to sparsity in model parameters?

L1 regularization penalizes the absolute magnitude of coefficients. This can lead to some coefficients becoming exactly zero, which means those features are not used in the model.

This sparsity helps in creating simpler, more interpretable models, which is particularly beneficial in datasets with a large number of predictors.
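A one-coefficient derivation shows where the exact zeros come from. It is a simplified sketch: z stands for the unpenalized estimate of a single coefficient θ, an assumption that holds exactly only when the features are orthonormal.

\[
\hat{\theta} = \arg\min_{\theta} \; \tfrac{1}{2}(z - \theta)^2 + \lambda |\theta|
\]

If \(\hat{\theta} \neq 0\), setting the derivative to zero gives \(\hat{\theta} = z - \lambda \operatorname{sign}(\hat{\theta})\), which is only consistent when \(|z| > \lambda\). If \(|z| \le \lambda\), the kink of \(|\theta|\) at zero absorbs the gradient and the minimizer is exactly zero. Together:

\[
\hat{\theta} = \operatorname{sign}(z)\,\max(|z| - \lambda,\, 0)
\]

The squared L2 penalty has no such kink, so its minimizer \(z / (1 + \lambda)\) is nonzero whenever z is.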

How do you choose the regularization strength when applying Lasso regression?

The regularization strength in Lasso regression is crucial and is often selected using cross-validation. This involves testing different values and selecting the one that results in the best model performance.

The right strength balances between bias and variance, leading to an optimal model.

What are the implications of using Lasso regression for variable reduction in high-dimensional datasets?

Using Lasso regression in high-dimensional datasets can significantly reduce the number of features, leading to more manageable and efficient models.

This can improve model interpretability and performance, particularly in cases where many features are irrelevant or redundant.

How does the implementation of Lasso regression in Python differ from conventional linear regression models?

Implementing Lasso regression in Python typically involves using libraries such as scikit-learn. The process is similar to linear regression but includes setting a parameter for the regularization strength, called alpha in scikit-learn's Lasso estimator.

The fitted model then applies regularization and, by setting some coefficients to zero, performs feature selection as part of training, which a standard linear regression model does not do.
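A minimal sketch of the difference, assuming scikit-learn and NumPy are installed, is shown below. The synthetic data and the choice of `alpha=0.5` are arbitrary illustrations, not recommended settings.

```python
import numpy as np
from sklearn.linear_model import Lasso, LinearRegression

rng = np.random.default_rng(0)

# Synthetic data: 5 features, but only the first two influence the target
X = rng.normal(size=(200, 5))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(scale=0.1, size=200)

ols = LinearRegression().fit(X, y)
lasso = Lasso(alpha=0.5).fit(X, y)  # alpha is the L1 regularization strength

print("OLS coefficients:  ", np.round(ols.coef_, 3))
print("Lasso coefficients:", np.round(lasso.coef_, 3))
print("Nonzero lasso coefficients:", np.count_nonzero(lasso.coef_))
```

The only change in the calling code is the estimator and its `alpha` argument. In practice features are usually standardized first, since the penalty treats all coefficients on the same scale.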