Foundations of Linear Algebra for Machine Learning

Linear algebra underpins most of machine learning. It supplies the data structures and operations that algorithms use to store and transform data. Concepts such as vectors, matrices, and tensors are key to understanding how algorithms process information.

Understanding Vectors and Their Importance

Vectors are fundamental in mathematics and data science. A vector is an ordered, one-dimensional array of numbers that can represent a point or a direction in space. Each element is the component along one axis, and together the components determine the direction and length of the vector.

In machine learning, vectors often represent data points or features in a dataset.

Vectors enable efficient processing of numerical data. For example, in document classification, words can be transformed into numerical vectors using techniques like TF-IDF. This transformation allows machines to process text data swiftly, identifying patterns or clusters.
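To make the TF-IDF idea concrete, here is a minimal sketch in plain Python. The three documents are made up for illustration, and the weighting used (term frequency times log(N / document frequency)) is only one common variant; libraries often apply smoothing or normalization on top of it.

```python
import math
from collections import Counter

# Toy corpus (hypothetical documents, for illustration only).
docs = [
    "the cat sat on the mat",
    "the dog chased the cat",
    "stocks fell as markets closed",
]

tokenized = [doc.split() for doc in docs]
vocab = sorted({word for tokens in tokenized for word in tokens})

# Document frequency: the number of documents that contain each word.
doc_freq = Counter(word for tokens in tokenized for word in set(tokens))


def tfidf_vector(tokens):
    """Return one TF-IDF weight per vocabulary word for a tokenized document."""
    counts = Counter(tokens)
    n_docs = len(tokenized)
    return [
        (counts[word] / len(tokens)) * math.log(n_docs / doc_freq[word])
        for word in vocab
    ]


# One numerical vector per document, all with the same length as the vocabulary.
vectors = [tfidf_vector(tokens) for tokens in tokenized]
```

Words that do not occur in a document get a weight of zero, so documents about different topics end up with vectors that point in different directions.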

Grasping Matrix Operations and Properties

Matrices are pivotal in organizing and manipulating data. They are two-dimensional arrays of numbers arranged in rows and columns.

Operations like addition, multiplication, and inversion are key to transforming and analyzing datasets. For instance, matrix multiplication is essential for feeding data through neural networks.
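As a small illustration of how matrix multiplication moves data through a network layer, the sketch below uses NumPy. The shapes and random values are assumptions chosen only for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

X = rng.normal(size=(4, 3))  # batch of 4 samples with 3 features each
W = rng.normal(size=(3, 2))  # weights mapping 3 inputs to 2 output units
b = np.zeros(2)              # one bias per output unit

# A single matrix product computes the layer output for the whole batch.
Z = X @ W + b                # shape (4, 2)
```

Each row of `Z` is the layer output for the corresponding row of `X`, which is why batches can be processed in one operation.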

Square matrices also have characteristic quantities, such as the determinant and eigenvalues, which help reveal the structure of the data or transformation they represent. These properties are used in various algorithms to optimize performance and accuracy.

Exploring the Role of Tensors in Machine Learning

Tensors generalize the concept of vectors and matrices to higher dimensions. They are multi-dimensional arrays that aid in complex data representation.

In machine learning, tensors are used extensively in deep learning frameworks like TensorFlow.

Tensors help in managing data with multiple dimensions, such as color images or videos. This capability allows algorithms to learn intricate patterns and make more accurate predictions. By utilizing tensors, models can handle diverse and complex datasets effectively.
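The following sketch shows how a batch of color images might be stored as a four-dimensional array. It uses NumPy rather than a deep learning framework, and the sizes and the channels-last layout are assumptions for illustration.

```python
import numpy as np

# A batch of 8 RGB images, each 32x32 pixels, stored channels-last:
# (batch, height, width, channels).
images = np.zeros((8, 32, 32, 3), dtype=np.float32)

# A short video clip adds a time axis in place of the batch axis:
# (frames, height, width, channels).
video = np.zeros((16, 32, 32, 3), dtype=np.float32)

# Reducing over batch, height, and width leaves one value per color channel.
channel_means = images.mean(axis=(0, 1, 2))  # shape (3,)
```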

Mathematical Concepts and Theories

In this exploration of linear algebra for machine learning, understanding determinants, eigenvalues, eigenvectors, eigenbases, and the rank of a matrix is essential. These concepts are crucial in data processing and mathematical modeling.

Diving Into Determinants and Eigenvalues

The determinant is a single number computed from a square matrix that indicates whether the associated system of linear equations has a unique solution. If the determinant is zero, the matrix is singular, meaning no inverse exists and the system has either no solution or infinitely many.

Eigenvalues, another key concept, are scalars that give the factor by which an eigenvector is stretched or shrunk when the transformation is applied. They are found by solving the characteristic equation det(A - λI) = 0, that is, by setting the determinant of the matrix minus lambda times the identity matrix to zero.

Understanding how determinants and eigenvalues interact helps predict system behavior, making these concepts valuable in both mathematics and machine learning.
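A short NumPy sketch of these ideas follows. The matrices are chosen by hand for illustration; for `A`, the characteristic polynomial is λ² - 7λ + 10, so the eigenvalues are 2 and 5.

```python
import numpy as np

A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

det_A = np.linalg.det(A)            # 4*3 - 1*2 = 10
eigenvalues = np.linalg.eigvals(A)  # roots of det(A - lambda*I) = 0

# The determinant equals the product of the eigenvalues.
product_of_eigenvalues = np.prod(eigenvalues)

# A singular matrix: the second row is twice the first, so det is 0
# (up to floating-point rounding) and no inverse exists.
S = np.array([[1.0, 2.0],
              [2.0, 4.0]])
det_S = np.linalg.det(S)
```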

Deconstructing Eigenvectors and Eigenbases

Eigenvectors are non-zero vectors whose direction is unchanged by a linear transformation; they are only scaled by a scalar factor, the eigenvalue. For a given matrix, finding its eigenvectors involves solving a system of linear equations where the matrix times the vector equals the eigenvalue times the same vector.

An eigenbasis is a set of eigenvectors that form a basis for the space, allowing for matrix diagonalization. This transformation is beneficial for simplifying complex calculations, as diagonal matrices are easier to handle.

Grasping the relationship between eigenvectors and their corresponding eigenvalues reveals much about the matrix’s geometric transformations.
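Diagonalization with an eigenbasis can be sketched in NumPy as below. The matrix is an arbitrary example with two distinct eigenvalues, which guarantees that an eigenbasis exists; not every matrix is diagonalizable.

```python
import numpy as np

A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

# Columns of P are eigenvectors; eigenvalues[i] belongs to column P[:, i].
eigenvalues, P = np.linalg.eig(A)
D = np.diag(eigenvalues)
P_inv = np.linalg.inv(P)

# Diagonalization: A = P D P^{-1}.
A_rebuilt = P @ D @ P_inv

# Powers become cheap: only the diagonal entries are raised to the power.
A_pow5 = P @ np.diag(eigenvalues ** 5) @ P_inv
```

Computing `A_pow5` this way gives the same result as multiplying `A` by itself five times, which is one reason diagonal forms simplify calculations.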

Decoding the Rank of a Matrix

The rank of a matrix refers to the maximum number of linearly independent row or column vectors in the matrix. This value indicates the dimension of the vector space spanned by its rows or columns.

A full-rank matrix, where the rank equals the smaller of the number of rows and the number of columns, has the largest possible number of independent vectors. In contrast, a matrix with lower rank (a rank-deficient matrix) has linear dependencies among its rows or columns.

Understanding matrix rank is pivotal, as it influences solutions to linear systems and data dimensions, affecting how algorithms process data.
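Rank can be checked numerically with NumPy, as in this small sketch. Both matrices are hand-made examples with three rows and two columns, so the largest possible rank is 2.

```python
import numpy as np

# Columns are linearly independent, so the rank is 2 (full rank for 3x2).
full = np.array([[1.0, 0.0],
                 [0.0, 1.0],
                 [1.0, 1.0]])

# The second column is twice the first, so only one column is independent.
deficient = np.array([[1.0, 2.0],
                      [2.0, 4.0],
                      [3.0, 6.0]])

rank_full = np.linalg.matrix_rank(full)            # 2
rank_deficient = np.linalg.matrix_rank(deficient)  # 1
```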

Advanced Mathematical Techniques

Exploring advanced mathematical techniques in machine learning includes mastering matrix operations and understanding dimensionality reduction. These methods are crucial for developing efficient algorithms and improving data processing.

Mastering Matrix Inverse and Linear Transformations

Matrix inverses and linear transformations play a central role in machine learning. The matrix inverse is used to solve linear equations, which is important for many algorithms. Being able to efficiently compute the inverse can help in optimizing these processes.
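As a rough sketch of solving a linear system, the example below solves the same equations two ways with NumPy. The system (3x + y = 9, x + 2y = 8) is made up for illustration. In numerical code, `np.linalg.solve` is usually preferred over forming the inverse explicitly, because it tends to be faster and more accurate.

```python
import numpy as np

# Coefficients and right-hand side of: 3x + y = 9, x + 2y = 8.
A = np.array([[3.0, 1.0],
              [1.0, 2.0]])
b = np.array([9.0, 8.0])

# Option 1: form the inverse explicitly, then multiply.
x_via_inverse = np.linalg.inv(A) @ b

# Option 2: solve the system directly without forming the inverse.
x_via_solve = np.linalg.solve(A, b)  # x = 2, y = 3
```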

Linear transformations involve applying a linear function to a data set. This is fundamental in altering data spaces, which can simplify complex problems and make patterns more visible.

Machine learning relies on these transformations to adjust data, making it easier to process and analyze.
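One simple linear transformation is a rotation. The sketch below rotates a few hand-picked 2D points by 90 degrees counter-clockwise; the points and the angle are assumptions chosen so the result is easy to check by eye.

```python
import numpy as np

theta = np.pi / 2  # 90 degrees, counter-clockwise
rotation = np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])

# One point per row.
points = np.array([[1.0, 0.0],
                   [0.0, 1.0],
                   [1.0, 1.0]])

# With points stored as rows, multiplying by the transpose applies
# `rotation @ p` to every point p at once.
rotated = points @ rotation.T
```

The point (1, 0) ends up at (0, 1), (0, 1) at (-1, 0), and (1, 1) at (-1, 1), up to floating-point rounding.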

Understanding these operations aids in creating robust models that handle diverse data sets. Algorithms become more adaptable and accurate, providing better predictions and performance. Efficient handling of these mathematical concepts is critical for computational efficiency in machine learning.

Applying Dimensionality Reduction in Data

Dimensionality reduction is a technique for simplifying large data sets while retaining most of the important information. This is essential in handling high-dimensional data often encountered in machine learning.

Techniques such as Principal Component Analysis (PCA) reduce the number of input variables. By focusing on the most significant features, computational costs decrease, and models run faster and more efficiently.

Dimensionality reduction also helps mitigate the curse of dimensionality, a problem where data points become sparse as the number of dimensions grows, so that far more samples are needed to cover the feature space. Reducing dimensions can improve model performance and makes the data simpler to visualize.

Employing dimensionality reduction ensures more efficient data handling and enhances the learning process in machine learning tasks.
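PCA can be sketched with NumPy alone by centering the data and taking a singular value decomposition. The data here is synthetic: the third feature is constructed as a near-exact combination of the first two, so two components should capture almost all of the variance. This is an illustrative sketch, not a replacement for a library implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 200 samples, 3 features, where the third feature is
# almost a linear combination of the first two (plus tiny noise).
base = rng.normal(size=(200, 2))
third = base @ np.array([0.5, -1.0]) + 0.01 * rng.normal(size=200)
X = np.column_stack([base, third])

# Step 1: center each feature at zero.
X_centered = X - X.mean(axis=0)

# Step 2: SVD of the centered data; rows of Vt are the principal directions.
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)

# Step 3: keep the top k directions and project the data onto them.
k = 2
components = Vt[:k]                     # shape (2, 3)
X_reduced = X_centered @ components.T   # shape (200, 2)

# Fraction of total variance captured by each component.
explained_variance_ratio = S ** 2 / np.sum(S ** 2)
```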

Programming for Linear Algebra

Programming for linear algebra can deeply influence the effectiveness of machine learning models. Through programming languages like Python, you can harness powerful libraries to manipulate matrices and vectors efficiently. This involves using specific tools to simplify computations and improve the performance of algorithms.

Leveraging Python in Linear Algebra

Python is a popular choice for linear algebra due to its simplicity and versatility. It allows users to perform complex mathematical operations with ease. In data science, Python’s syntax helps implement and visualize algorithms clearly.

Using Python in environments like Jupyter Notebook enhances interactivity and provides an excellent platform for testing and debugging code. Jupyter allows step-by-step execution, helping programmers better understand linear algebra operations.

Python’s extensive ecosystem includes libraries specifically designed for scientific computing, such as NumPy, which aids in array management and numerical calculations.

Python also works well with machine learning platforms like scikit-learn and PyTorch, making it a go-to language for researchers and developers.

Utilizing Libraries: NumPy and Beyond

Libraries like NumPy are crucial for linear algebra programming. NumPy offers functions for multi-dimensional arrays and matrices, enabling fast computations.

Key operations such as matrix multiplication, inversion, and eigenvalue calculations can be performed efficiently with NumPy.

For more advanced tasks, other libraries come into play. SciPy builds on NumPy’s capabilities with additional modules for optimization and statistical analysis.

Scikit-learn integrates with NumPy for machine learning tasks, allowing seamless manipulation and analysis of data.

PyTorch, primarily used for deep learning, provides its own tensor type with GPU support and automatic differentiation, and it can exchange data with NumPy arrays. These libraries, with their extensive functionalities, form the backbone of linear algebra programming in Python, enhancing the development of machine learning models.

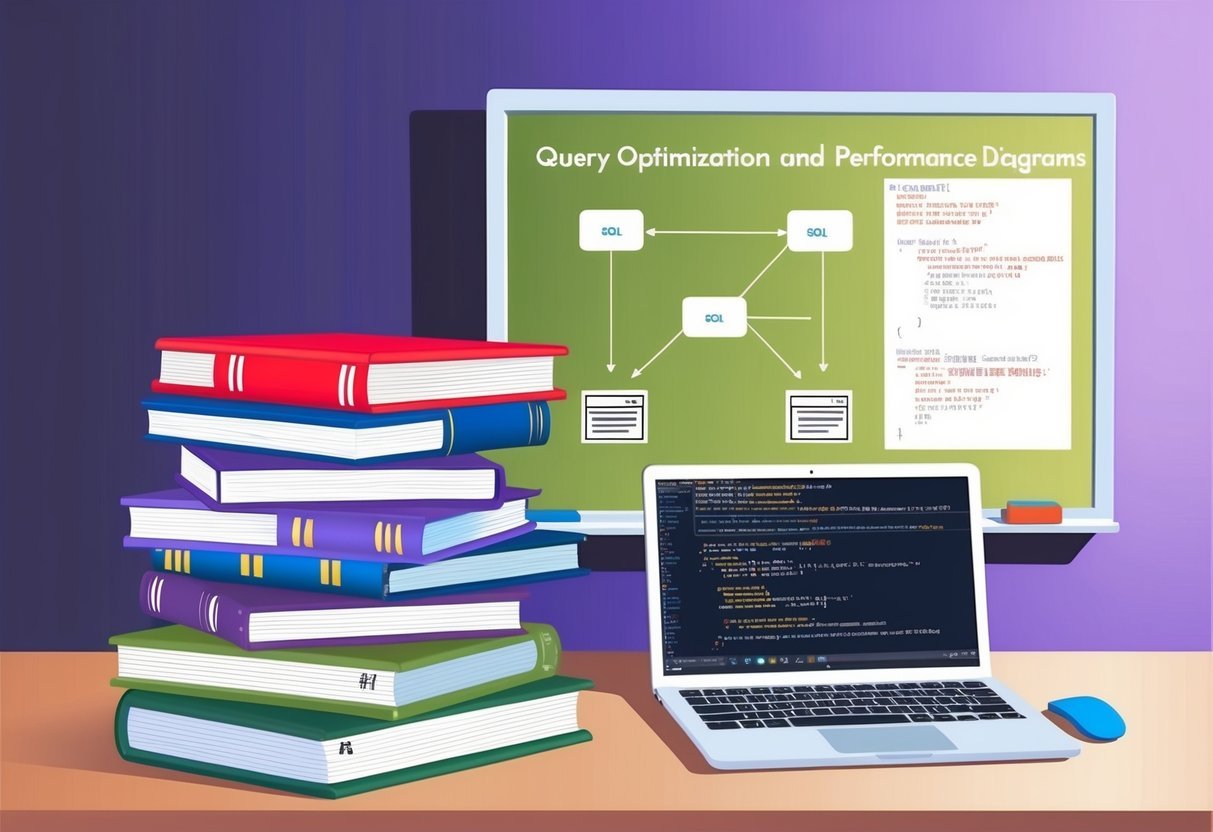

Key Algorithms and Their Application

In the realm of machine learning, linear algebra plays a crucial role. Understanding key algorithms involves exploring dimensions and transformations, which are foundational for deep learning.

Exploring ML Algorithms Using Linear Algebra

Machine learning algorithms often rely on linear algebra to process and transform data. Matrices and vectors are used as data structures to represent datasets and operations.

For instance, in algorithms like Principal Component Analysis (PCA), matrices help find patterns and reduce dimensions, providing insights into data.

Support Vector Machines (SVM) utilize hyperplanes for classification, where linear algebra helps define the best boundary between data classes. Additionally, matrix decomposition techniques like Singular Value Decomposition (SVD) are pivotal for tasks like recommendation systems.
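To illustrate how SVD might be used in a recommendation setting, the sketch below builds a low-rank approximation of a small ratings matrix. The ratings are invented for the example, and real systems also have to deal with missing entries, which this sketch ignores.

```python
import numpy as np

# Hypothetical ratings: rows are users, columns are items.
ratings = np.array([
    [5.0, 4.0, 1.0, 1.0],
    [4.0, 5.0, 1.0, 2.0],
    [1.0, 1.0, 5.0, 4.0],
    [2.0, 1.0, 4.0, 5.0],
])

U, S, Vt = np.linalg.svd(ratings, full_matrices=False)

# Keep only the k largest singular values (k latent "taste" factors).
k = 2
approx = U[:, :k] @ np.diag(S[:k]) @ Vt[:k]

# Frobenius-norm error of the rank-k approximation.
error = np.linalg.norm(ratings - approx)
```

The approximation error is determined entirely by the singular values that were discarded, which is why keeping the largest ones preserves the dominant patterns.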

Examining Deep Learning through the Lens of Algebra

Deep learning builds upon neural networks, heavily utilizing linear algebra for computations. Every layer in a neural network involves matrix operations such as multiplication and addition.

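Backpropagation applies the chain rule from calculus to compute the gradient of the loss with respect to every weight, and gradient descent then uses those gradients to update the weights. The gradient computations themselves are expressed as matrix operations.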

Neural networks involve:

- Activation Functions: Non-linear functions, such as ReLU or sigmoid, applied element-wise to the output of each linear step to introduce non-linearity.

- Weight Matrices: Trainable parameters optimized through iterative algorithms.
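The two ingredients listed above can be combined in a minimal forward pass. The layer sizes, random weights, and choice of ReLU are assumptions made for this sketch; no training is performed.

```python
import numpy as np


def relu(z):
    """Element-wise ReLU: negative values become zero."""
    return np.maximum(z, 0.0)


rng = np.random.default_rng(0)

x = rng.normal(size=(5, 4))    # batch of 5 inputs with 4 features

W1 = rng.normal(size=(4, 8))   # weight matrix of the hidden layer
b1 = np.zeros(8)
W2 = rng.normal(size=(8, 1))   # weight matrix of the output layer
b2 = np.zeros(1)

# Linear step followed by a non-linear activation, then a second linear step.
hidden = relu(x @ W1 + b1)     # shape (5, 8)
output = hidden @ W2 + b2      # shape (5, 1)
```

Without the `relu` call, the two layers would collapse into a single matrix product, so the activation is what lets the network represent non-linear functions.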

In convolutional neural networks (CNNs), convolution operations can be described as matrix multiplications. These are essential for feature extraction in image processing.

Deep learning frameworks also often use tensor operations, an extension of matrices, to handle complex, multi-dimensional data.
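The claim that a convolution can be written as a matrix multiplication is easiest to see in one dimension. In the sketch below, the signal and kernel are made-up values, and the operation is the sliding dot product (cross-correlation) that CNN layers typically compute, with no padding.

```python
import numpy as np

signal = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
kernel = np.array([1.0, 0.0, -1.0])

n_out = len(signal) - len(kernel) + 1  # number of valid window positions

# Each row holds the kernel shifted one position further to the right,
# so row i computes the dot product of the kernel with window i.
conv_matrix = np.zeros((n_out, len(signal)))
for i in range(n_out):
    conv_matrix[i, i:i + len(kernel)] = kernel

as_matmul = conv_matrix @ signal

# Reference result from NumPy's sliding dot product.
direct = np.correlate(signal, kernel, mode="valid")
```

Both approaches give the same three values, here -2 for each window.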

Data Science Essentials

Understanding data science requires a firm grasp of essential mathematical tools. Among these are the principles of statistics, probability, and calculus. These branches of mathematics are crucial for analyzing and interpreting data effectively.

Statistical Foundations in Data Science

Statistics forms the backbone of data science. It helps in analyzing data sets to find patterns, trends, and insights.

Descriptive statistics like mean, median, and mode summarize data points, while inferential statistics help make predictions about a population based on a sample.

Statistical methods are also used to design experiments, which are essential to test hypotheses. Techniques such as regression analysis and hypothesis testing play key roles in understanding relationships within data.

Understanding variance and standard deviation is crucial for assessing data spread. These concepts help data scientists evaluate the reliability and precision of their models.
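The descriptive statistics mentioned in this section can be computed with the Python standard library. The data values below are invented for the example.

```python
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]

mean = statistics.mean(data)      # 5
median = statistics.median(data)  # 4.5
mode = statistics.mode(data)      # 4

# Population variance and standard deviation (divide by n).
population_variance = statistics.pvariance(data)  # 4
population_std = statistics.pstdev(data)          # 2.0

# Sample variance (divide by n - 1), used when the data is a sample
# drawn from a larger population.
sample_variance = statistics.variance(data)
```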

Probability and Calculus in Machine Learning

Probability provides a framework for quantifying uncertainty, and it underlies the statistical inferences made about a population from sample data. It is essential for creating models that predict outcomes under uncertainty.

Understanding concepts like random variables and probability distributions allows data scientists to evaluate the likelihood of different scenarios.

Calculus, on the other hand, is essential for optimization in machine learning. Derivatives are used to understand changes in data and optimize learning algorithms.

For example, gradient descent, a key technique in training models, relies heavily on calculus.
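A minimal sketch of gradient descent is shown below, fitting a line to synthetic data by minimizing mean squared error. The data, learning rate, and iteration count are assumptions chosen so the example converges quickly; the gradients are the partial derivatives of the loss with respect to `w` and `b`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic, noise-free data on the line y = 3x + 2.
x = rng.normal(size=100)
y = 3.0 * x + 2.0

w, b = 0.0, 0.0       # initial guesses for slope and intercept
learning_rate = 0.1

for _ in range(500):
    error = (w * x + b) - y

    # Partial derivatives of mean((w*x + b - y)^2) with respect to w and b.
    grad_w = 2.0 * np.mean(error * x)
    grad_b = 2.0 * np.mean(error)

    # Step against the gradient to reduce the loss.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b
```

After the loop, `w` and `b` are very close to the true slope 3 and intercept 2.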

Knowledge of both probability and calculus is vital to develop algorithms that can predict future trends and make informed decisions based on data analysis. These tools enable the creation of robust models capable of handling real-world data challenges.

Machine Learning Foundations

Machine learning is built on a solid foundation that includes understanding its theoretical framework and the principles of AI. These concepts often involve complex mathematical roots, which are essential for developing effective algorithms.

From Theory to Practice: Machine Learning Foundations

Machine learning involves algorithms that enable computers to learn from and make predictions based on data. The theoretical side includes understanding fundamental concepts like supervised and unsupervised learning, training sets, and error analysis. To move from theory to practice, a solid grasp of these principles is essential.

Practical applications are diverse, ranging from image recognition to recommendation systems. Implementing machine learning requires a blend of coding skills and mathematical knowledge. It often uses tools such as Python and libraries like TensorFlow. Techniques like cross-validation and hyperparameter tuning are also key aspects of effective deployment.

Principles of AI and Their Mathematical Roots

Artificial intelligence and machine learning are closely linked. AI uses machine learning algorithms to perform tasks that typically require human-like intelligence. The mathematical foundation for these algorithms involves disciplines such as linear algebra, probability, and calculus.

Linear algebra is critical, especially when working with data sets represented as matrices. Probability theory helps in understanding uncertainties and model predictions. Meanwhile, calculus is often used in optimization problems, which aim to minimize error in predictions.

Together, these mathematical principles help in developing models that can learn and adapt. Understanding these roots is crucial for anyone looking to advance in the field of machine learning and AI. For more on how linear algebra is used in this context, check out the Basics of linear algebra.

Integrating Linear Algebra with Other Disciplines

Linear algebra plays a crucial role in various fields. It is especially important in computer science and language processing. Understanding this integration helps in grasping the basics of machine learning and other computational tasks.

Computer Science and Vision

In computer science, linear algebra is a fundamental tool. It is particularly significant in computer vision, where it helps process and analyze images and videos. Techniques such as matrix transformations and eigenvectors are used to manipulate and understand visual data. These concepts allow computers to learn from and make sense of images, which is critical in applications like facial recognition and object detection.

Computer vision often relies on algorithms like Singular Value Decomposition (SVD) and Principal Component Analysis (PCA). These linear algebraic methods reduce image data into its key components, making data processing more efficient. They enable computers to focus on the most important features of an image, improving the accuracy of vision systems.

Natural Language Processing and Linear Algebra

Natural Language Processing (NLP) also benefits greatly from linear algebra. Vectors and matrices are used to represent and process language data. Word embeddings, which use vectors to capture semantic meanings, are foundational in NLP. These embeddings enable machines to understand context and relationships between words, which is essential for tasks like translation and sentiment analysis.
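Relationships between word embeddings are often measured with cosine similarity. The three-dimensional vectors below are hand-picked, hypothetical values for illustration; real embeddings are learned from data and usually have hundreds of dimensions.

```python
import numpy as np

# Hypothetical toy embeddings (not taken from any trained model).
embeddings = {
    "king":  np.array([0.90, 0.80, 0.10]),
    "queen": np.array([0.85, 0.90, 0.15]),
    "apple": np.array([0.10, 0.20, 0.95]),
}


def cosine_similarity(u, v):
    """Cosine of the angle between u and v: 1 means same direction."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


sim_king_queen = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_king_apple = cosine_similarity(embeddings["king"], embeddings["apple"])
```

With these toy values, "king" is far more similar to "queen" than to "apple", which mirrors how related words end up close together in a learned embedding space.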

Moreover, linear algebra techniques like matrix factorization are used to improve language models. This allows systems to efficiently handle large datasets, extracting subtle patterns and correlations in text. As a result, machines can more accurately comprehend and generate human language, enhancing communication between humans and computers. Linear algebra is core to developing advanced NLP applications that are increasingly integral to technology.

Mathematical Pedagogy in Machine Learning

Understanding mathematics is crucial for developing effective machine learning models. This section explores innovative and traditional approaches to teaching math, focusing on how each contributes to the growing field of machine learning.

Innovative Pedagogy for Machine Learning

Recent advances in pedagogy emphasize the need for active learning strategies. Courses now incorporate collaborative projects where students tackle real-world problems using mathematical tools, such as linear algebra. Tools like interactive software and online simulations are used to help explain complex concepts through visualization. These methods aim to not only build understanding but also make learning math more engaging and applicable to machine learning scenarios.

Moreover, integrating computer-based resources allows students to experiment and see immediate feedback, which is vital for grasping intricate ideas related to mathematics. This approach also encourages deeper learning, as students are compelled to apply theoretical knowledge to practical tasks. The move towards blending technology with math instruction highlights the need for adaptable teaching methods in today’s digital world.

Role of Traditional Mathematics Pedagogy

Despite the push for innovative teaching, traditional pedagogy remains valuable. Carefully structured lectures and textbooks help lay a strong foundation for concepts like calculus and algebra. This approach focuses on logical progression and drilling down on fundamental principles. Traditional methods have long been effective in helping students achieve a deep understanding of essential math concepts used in machine learning.

Using methods like step-by-step problem solving and rote memorization, students can develop crucial skills. A strong grounding in classical teaching methods can complement newer, tech-driven approaches by ensuring that learners do not skip over foundational theories. Integrating such time-tested techniques ensures a balanced learning experience for newcomers to the field.

Practical Machine Learning Projects

Machine learning projects often rely on strong math skills, particularly linear algebra and the solution of systems of equations. These concepts are essential for solving real-world problems and optimizing processes in various industries.

Real-World Use-Cases of Linear Algebra

Linear algebra plays a crucial role in many machine learning applications. One common use is in image processing, where matrices are used to represent pixel data. This allows for efficient transformations and enhancements of images.
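As a small illustration of images as matrices, the sketch below treats a tiny grayscale image as a NumPy array. The pixel values are made up, and the operations shown (flip, transpose, brightening) are only simple examples of array-level transformations.

```python
import numpy as np

# A tiny 2x3 grayscale "image"; each entry is a pixel intensity (0-255).
image = np.array([[0, 50, 100],
                  [150, 200, 250]], dtype=np.uint8)

# Mirror the image left to right by reversing the column order.
flipped = image[:, ::-1]

# Swap rows and columns.
transposed = image.T  # shape (3, 2)

# Brighten by 20, using a wider integer type so values above 255
# are clipped instead of wrapping around.
brightened = np.clip(image.astype(np.int16) + 20, 0, 255).astype(np.uint8)
```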

Another significant use is in natural language processing (NLP). Here, linear algebra is used to represent words and sentences as vectors for efficient manipulation and analysis. These techniques help in machine translation, sentiment analysis, and more complex tasks like chatbots.

Recommender systems also heavily rely on linear algebra techniques to manage and analyze large datasets. By understanding patterns in user behavior, they can make accurate suggestions. Practicing linear algebra helps in developing these fundamental skills that are crucial for implementing these systems effectively.

The Practicality of Machine Learning in Industry

Machine learning is widely adopted in industries for its efficiency and ability to tackle complex problems. In finance, it helps predict market trends and assess risk by analyzing large datasets for insights and patterns.

Healthcare benefits from machine learning through predictive analytics for disease diagnosis and personalized treatment plans. By employing mathematical models, healthcare providers can improve patient outcomes.

In manufacturing, machine learning optimizes supply chain management and enhances production efficiency. These projects rely on a solid foundation in mathematical skills, particularly with systems of equations, to handle the vast amount of data processed.

These examples highlight how the practice of doing math is integral to building successful machine learning projects that impact different sectors.

Online Learning and Machine Learning Education

Online learning has revolutionized the way people acquire skills in machine learning. Different platforms and communities offer tools and support to help learners master these skills effectively.

E-Learning Platforms and Resources

Platforms like Coursera and deeplearning.ai provide comprehensive courses on machine learning. These platforms often feature instructors like Luis Serrano, who is known for his expertise in simplifying complex concepts.

Courses often include videos, quizzes, and hands-on projects. Khan Academy and YouTube are also valuable resources. They offer free content, allowing students to learn at their own pace. The interactive format in these videos can enhance understanding.

Many platforms provide certificates, which can be added to a professional profile. This can be an added benefit for learners looking to showcase their knowledge to potential employers.

Community and Collaborative Learning Online

Online forums and communities play a vital role in machine learning education. Sites like Stack Overflow and Reddit, including its specialized subreddits, host discussions where learners can ask questions and share insights.

Collaborative platforms like GitHub enable students to work on joint projects. This fosters a deeper understanding of machine learning concepts. These communities often organize coding challenges and hackathons to further engage students.

Study groups on social media platforms allow global interaction. Learners can exchange resources and advice, broadening their learning experience. Engaging with others helps in overcoming challenges and finding solutions collaboratively, making learning more effective.

Frequently Asked Questions

The questions below cover the key linear algebra concepts for machine learning, the importance of a strong foundation, the best approaches to self-study, and the resources, time commitment, and courses involved.

What are the key concepts of linear algebra required for machine learning?

Key concepts include vectors, matrices, and their operations like addition and multiplication. Eigenvectors, eigenvalues, and singular value decomposition are also essential. These concepts help in understanding how data is represented and manipulated in machine learning models.

How can I find resources to learn linear algebra for machine learning for free?

There are many free resources available online. Websites like Khan Academy and MIT OpenCourseWare offer comprehensive materials. Books by Jason Brownlee and other online PDFs provide valuable information as well.

What is the estimated time commitment to become proficient in linear algebra for machine learning?

The time required varies based on prior experience. For beginners, dedicating a few hours a week over several months can lead to a good understanding. More intensive efforts may shorten this time frame. Continuous practice and application are key to proficiency.

Are there any comprehensive online courses for learning math specifically geared towards machine learning?

Yes, platforms like Coursera and edX offer courses focusing on math for machine learning. Courses often cover linear algebra, calculus, and statistics, tailored to machine learning contexts. Some courses are created by top universities and include practical assignments.

How important is a strong foundation in linear algebra for pursuing machine learning?

A strong foundation in linear algebra is vital. It forms the backbone of many machine learning algorithms, enabling the understanding of how models process and learn from data. Linear algebra also assists in optimizing algorithms through matrix operations.

Can I self-study linear algebra for machine learning, and if so, what is the best approach?

Self-study is certainly possible with dedication. Start with basic concepts using textbooks and online resources.

Practice by solving problems and implementing algorithms in programming languages like Python. Joining online forums and study groups can enhance the learning experience.