Understanding the Synergy Between Python and SQL

Python and SQL work together effectively in data science by combining Python’s versatile programming capabilities with SQL’s powerful database management features. This synergy allows for efficient data manipulation, analysis, and visualization.

Significance of Python and SQL in Data Science

Python, with its rich ecosystem of libraries such as Pandas and NumPy, handles data analysis and visualization. SQL (Structured Query Language) retrieves and manages large datasets efficiently. Together they let data scientists extract, clean, and analyze data in a single workflow, turning raw data into insights that support data-driven decisions.

Overview of Python Libraries for SQL Integration

Several Python libraries facilitate integration with SQL databases. SQLAlchemy is popular for its ORM (Object-Relational Mapping), allowing Python objects to interact with SQL databases seamlessly. It abstracts database operations, making them easier to understand and use.

Pandas provides the read_sql function, which runs a SQL query and loads the result directly into a DataFrame, simplifying later data manipulation.

PyMySQL and psycopg2 are other useful libraries, connecting Python to MySQL and PostgreSQL databases, respectively. These libraries enhance flexibility and the efficiency of managing data tasks in Python.

Essential SQL Commands for Data Manipulation

SQL basics involve commands critical for data manipulation, such as SELECT, INSERT, UPDATE, and DELETE. The SELECT command retrieves data from one or more tables, fundamental for data analysis.

INSERT adds new data entries, while UPDATE modifies existing records. DELETE is used to remove records from a database. Mastering these commands is essential for anyone using SQL for data management, as they form the core operations needed to handle and structure data effectively.
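As a minimal sketch of these four commands, the snippet below runs each one against an in-memory SQLite database through Python's built-in sqlite3 module. The students table and its columns are made up for illustration.

```python
import sqlite3

# In-memory database, so the sketch leaves nothing on disk.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT, grade INTEGER)")

# INSERT adds new rows.
cur.execute("INSERT INTO students (name, grade) VALUES (?, ?)", ("Ada", 90))
cur.execute("INSERT INTO students (name, grade) VALUES (?, ?)", ("Linus", 72))

# UPDATE modifies rows that match the WHERE clause.
cur.execute("UPDATE students SET grade = ? WHERE name = ?", (75, "Linus"))

# DELETE removes rows that match the WHERE clause.
cur.execute("DELETE FROM students WHERE grade < ?", (80,))
conn.commit()

# SELECT reads back whatever is left.
remaining = cur.execute("SELECT name, grade FROM students ORDER BY id").fetchall()
print(remaining)
conn.close()
```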

Setting Up the Python Environment for SQL Integration

Setting up a Python environment for SQL integration involves choosing the right tools and ensuring correct configurations.

Install Python and Necessary Libraries

Installing Python is the first step. Visit the official Python website to download the latest version.

After installing, it’s important to set up a virtual environment using venv to keep dependencies organized.

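Within this environment, install the database drivers you need, such as MySQL Connector for MySQL and psycopg2 for PostgreSQL. The sqlite3 module ships with Python’s standard library and needs no separate installation.

For interactive work, Jupyter Notebooks offer a convenient place to run queries and inspect results, and the Anaconda distribution bundles many of the data libraries used alongside these drivers.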

Configuring Database Connections

Establishing a connection between Python and databases is crucial. Use a database connector specific to the database type. For MySQL, use mysql.connector; for PostgreSQL, psycopg2.

These connectors require specifying a host, database, username, and password in your Python script.

Example:

    import mysql.connector

    conn = mysql.connector.connect(
        host="your_host",
        user="your_username",
        password="your_password",
        database="your_database"
    )

Testing the connection ensures that Python can interact with the SQL database to perform queries.
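One simple way to test a connection is to run a trivial query and check the result. The helper below is a sketch, not part of any driver: connection_is_alive is a hypothetical name, and SQLite stands in for MySQL so the example runs without a server. The same pattern should apply to any driver that follows Python's DB-API.

```python
import sqlite3

def connection_is_alive(conn) -> bool:
    """Return True if a trivial query succeeds on a DB-API connection."""
    try:
        cur = conn.cursor()
        cur.execute("SELECT 1")
        return cur.fetchone() == (1,)
    except Exception:
        # Any driver error (closed connection, network failure) counts as "not alive".
        return False

conn = sqlite3.connect(":memory:")
alive_before = connection_is_alive(conn)
conn.close()
alive_after = connection_is_alive(conn)
print(alive_before, alive_after)
```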

Understanding Python’s SQLite3 Library

The sqlite3 module is part of Python’s standard library and provides an interface to SQLite databases. These are lightweight, file-based databases well suited to standalone applications.

By importing sqlite3, developers can execute SQL commands and manage database operations efficiently.

For example, to create a database and table:

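    import sqlite3

    # Open (or create) the database file and get a cursor for running SQL.
    conn = sqlite3.connect('example.db')
    cursor = conn.cursor()

    # IF NOT EXISTS keeps the script safe to run more than once.
    cursor.execute('CREATE TABLE IF NOT EXISTS students (id INT, name TEXT)')
    conn.commit()
    conn.close()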

This method allows storing and retrieving data locally without requiring external database management systems. Understanding the sqlite3 library helps in handling smaller scale data operations confidently.

Executing SQL Queries from Python

Integrating Python with SQL allows for executing SQL commands and automating database tasks. This process involves creating a cursor, handling complex queries, and managing transactions while avoiding risks like SQL injection.

Utilizing Cursors to Interact with SQL Databases

A cursor is a crucial tool when executing SQL queries in Python. It acts as an intermediary between Python and the database.

When a connection to the database is established, the programmer creates a cursor to execute SQL commands and retrieve data.

The Python sqlite3 library, for example, provides the cursor() method to generate cursors.

The cursor can iterate over query results and execute multiple operations without needing to reconnect to the database. This makes tasks like fetching large datasets more efficient.

Because cursors operate on a connection that stays open, developers can run several SQL queries in succession without reconnecting each time.
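The sketch below shows three ways a sqlite3 cursor hands back rows from one query: a single row, a fixed-size batch, and plain iteration over what remains. The readings table is illustrative only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE readings (id INTEGER PRIMARY KEY, value REAL)")
cur.executemany(
    "INSERT INTO readings (value) VALUES (?)",
    [(v,) for v in (1.5, 2.5, 3.0, 4.0, 5.0)],
)

cur.execute("SELECT id, value FROM readings ORDER BY id")
first = cur.fetchone()        # the first row only
batch = cur.fetchmany(2)      # the next two rows
rest = [row for row in cur]   # iterate over whatever is left
conn.close()

print(first, batch, rest)
```

Fetching in batches or iterating keeps memory use bounded when a result set is large, whereas fetchall() loads every row at once.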

Writing and Executing Complex Queries

Executing complex queries is often necessary to extract valuable insights from databases. Python, when paired with libraries like pandas, can handle these queries effectively.

Conditions, joins, and aggregations can be combined to form intricate queries that are directly executed from Python.

For precise query execution, Python and SQL allow embedding SQL commands within Python scripts. This can also include parameterized queries, which bind parameters to variables in Python.

This approach not only streamlines the process of executing SQL queries but also ensures clarity and accuracy when dealing with large datasets.
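To make this concrete, here is a sketch of a query that combines a join, a filter, and an aggregation, with both thresholds supplied as named parameters from Python. The customers and orders tables and their contents are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Ada', 'UK'), (2, 'Grace', 'US'), (3, 'Linus', 'FI');
    INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 40.0), (4, 3, 300.0);
""")

# Join orders to customers, keep orders above a minimum amount,
# then keep only customers whose qualifying orders reach a minimum total.
query = """
    SELECT c.name, COUNT(o.id) AS n_orders, SUM(o.amount) AS total
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    WHERE o.amount >= :min_amount
    GROUP BY c.id, c.name
    HAVING SUM(o.amount) >= :min_total
    ORDER BY total DESC
"""
rows = conn.execute(query, {"min_amount": 50.0, "min_total": 150.0}).fetchall()
conn.close()

for name, n_orders, total in rows:
    print(name, n_orders, total)
```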

Managing Transactions and Handling SQL Injection Risks

While executing SQL commands, managing transactions is critical to ensure data integrity.

Python provides transaction support through methods like commit() and rollback() within the database connection. This allows programmers to control the state of data changes, especially when executing multiple related queries.
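A minimal sketch of this pattern with sqlite3 follows. The accounts table, its CHECK constraint, and the transfer helper are assumptions made for illustration. The point is that two related updates are either committed together or rolled back together.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()

def transfer(conn, src, dst, amount):
    """Move funds between accounts; both updates succeed or neither does."""
    try:
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))
        conn.commit()
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected a negative balance: undo the credit as well.
        conn.rollback()
        return False

ok = transfer(conn, "alice", "bob", 30)        # both updates are committed
failed = transfer(conn, "alice", "bob", 500)   # debit violates CHECK, so the credit is rolled back
balances = dict(conn.execute("SELECT name, balance FROM accounts"))
conn.close()
print(ok, failed, balances)
```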

SQL injection poses significant security risks when executing queries from Python. To mitigate these risks, using parameterized queries is essential.

This technique allows for safe inclusion of user input in SQL commands by treating input values as parameters rather than executable code. This reduces the likelihood of SQL injection attacks, keeping the database secure from harmful input.
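The difference is easiest to see side by side. In the sketch below, which uses an invented users table, the same hostile input is first spliced into the SQL text and then passed as a bound parameter.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 1), ("bob", 0)])

user_input = "nobody' OR '1'='1"  # hostile input

# Unsafe: the input becomes part of the SQL text and changes the query's meaning.
unsafe_sql = f"SELECT name FROM users WHERE name = '{user_input}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safe: the input is bound as a value and is only ever compared as a string.
safe_rows = conn.execute(
    "SELECT name FROM users WHERE name = ?", (user_input,)
).fetchall()
conn.close()

print(len(unsafe_rows), len(safe_rows))
```

The string-built query returns every user, while the parameterized one returns none because no user has that literal name.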

Data Management with Python and SQL

Integrating Python with SQL databases allows users to automate data management tasks. This combination provides a seamless way to create and modify tables, efficiently insert or delete records, and retrieve or update data for robust analysis and application development.

Creating and Modifying Tables via Python

Python can be used alongside SQL to create and modify tables in relational databases. Using libraries such as sqlite3, MySQL Connector, or SQLAlchemy, developers can establish a connection to their database. This connection enables them to execute SQL commands directly from Python scripts.

To create a table, a simple SQL CREATE TABLE statement can be run through a Python function. Python scripts allow for the automation of table modifications and can add, remove, or alter columns as needed.

This approach simplifies the process by using Python’s straightforward syntax. It also aids in error handling by using exceptions to catch and manage SQL errors efficiently.
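The following sketch shows one way to script a table change and catch a SQL error with sqlite3. The helper add_column_if_missing is a hypothetical name, and because table and column names cannot be bound as parameters, it assumes they come from trusted code rather than user input.

```python
import sqlite3

def add_column_if_missing(conn, table, column, col_type):
    """Add a column unless the table already has it.

    Identifiers are interpolated into the SQL, so they must come from
    trusted code, never from user input.
    """
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    if column in existing:
        return False
    conn.execute(f"ALTER TABLE {table} ADD COLUMN {column} {col_type}")
    return True

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE IF NOT EXISTS students (id INTEGER PRIMARY KEY, name TEXT)")
added = add_column_if_missing(conn, "students", "email", "TEXT")
added_again = add_column_if_missing(conn, "students", "email", "TEXT")

# SQL errors surface as Python exceptions that the script can handle.
error_message = None
try:
    conn.execute("CREATE TABLE students (id INTEGER)")  # the table already exists
except sqlite3.OperationalError as exc:
    error_message = str(exc)
conn.close()
print(added, added_again, error_message)
```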

Efficient Data Insertion and Record Deletion

Python makes inserting and deleting records in SQL databases efficient. Through batch processing methods, users can upload large datasets in a single transaction, reducing the time needed for data insertion.

The cursor’s executemany() method is particularly useful for this purpose: it runs one parameterized statement once for each row in a sequence, so many rows can be inserted with a single call.

For deleting records, Python uses SQL DELETE commands executed through database connections. Incorporating conditional statements within these commands ensures only specific records are removed, maintaining data integrity.

Automating these tasks with Python scripts not only speeds up the processes but also ensures precision and helps avoid manual errors. This is crucial in large-scale data management when dealing with massive databases.
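As a sketch of both operations with sqlite3, the snippet below inserts several rows with executemany() inside one transaction, then deletes only the rows that match a condition. The events table is made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, kind TEXT, year INTEGER)")

rows = [("login", 2021), ("logout", 2021), ("login", 2023), ("purchase", 2024)]

# Using the connection as a context manager commits on success
# and rolls back if an exception is raised inside the block.
with conn:
    conn.executemany("INSERT INTO events (kind, year) VALUES (?, ?)", rows)

# The WHERE clause limits the delete to rows older than the cutoff year.
with conn:
    cur = conn.execute("DELETE FROM events WHERE year < ?", (2023,))
    deleted = cur.rowcount

remaining = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
conn.close()
print(deleted, remaining)
```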

Performing Data Updates and Retrieval

Data updates and retrieval are key components of managing information within SQL databases, and Python provides an effective way to accomplish these tasks.

By connecting to the database, users can issue UPDATE SQL commands to modify existing data. The ability to use variables in Python can help dynamically change data based on program logic.

Data retrieval is facilitated through SQL SELECT statements. Python can filter, sort, and even join multiple tables to extract precisely the information needed.

This capability is especially useful for data analysts gathering insights from large datasets, as it allows quick and efficient querying.

Advanced Data Analysis Techniques

Advanced data analysis combines SQL and Python to manipulate and analyze datasets effectively. This approach offers powerful tools for handling large datasets and performing detailed statistical analysis.

Leveraging Pandas for SQL Data Manipulation

Pandas is a Python library that offers robust data manipulation capabilities. It can be used to import data directly from SQL databases, making it easy to perform complex transformations.

With Pandas, users can filter, sort, and aggregate data efficiently. The library’s DataFrame structure allows for operations similar to SQL, such as grouping and merging datasets.

For data analysts, using Pandas to handle SQL data offers advantages like streamlined workflows and the ability to perform operations such as calculating means and standard deviations directly on the data.
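A short sketch of this workflow, assuming Pandas is installed: the query result is loaded into a DataFrame, then grouped and summarized. The sales table and its values are illustrative.

```python
import sqlite3

import pandas as pd

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("north", 100.0), ("north", 140.0), ("south", 90.0), ("south", 110.0)],
)
conn.commit()

# Load the query result straight into a DataFrame.
df = pd.read_sql_query("SELECT region, amount FROM sales", conn)
conn.close()

# Group by region and compute the mean and standard deviation per group.
summary = df.groupby("region")["amount"].agg(["mean", "std"])
print(summary)
```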

Statistical Analysis using SQL and Python

Combining SQL and Python enables comprehensive statistical analysis on large datasets. Using SQL, users can perform initial data queries to filter relevant information.

This data is then analyzed in Python, where libraries like Pandas are used for further statistical operations.

Analysts can calculate descriptive statistics like mean, median, and quartiles, and apply more complex statistical models.

By leveraging SQL’s filtering capabilities and Python’s statistical functions, professionals can derive meaningful insights from extensive datasets.
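The split of work can be sketched as follows. SQL filters the rows, and Python computes the descriptive statistics. To keep the example free of third-party dependencies it uses the standard library's statistics module as a stand-in for Pandas, and the measurements table is invented.

```python
import sqlite3
import statistics

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE measurements (sensor TEXT, value REAL)")
conn.executemany(
    "INSERT INTO measurements VALUES (?, ?)",
    [("a", 1.0), ("a", 2.0), ("a", 3.0), ("a", 4.0), ("a", 10.0), ("b", 99.0)],
)

# Step 1: SQL narrows the data to the rows of interest.
values = [
    v for (v,) in conn.execute(
        "SELECT value FROM measurements WHERE sensor = ? ORDER BY value", ("a",)
    )
]
conn.close()

# Step 2: Python computes descriptive statistics on the filtered values.
mean = statistics.mean(values)
median = statistics.median(values)
quartiles = statistics.quantiles(values, n=4)  # three cut points
print(mean, median, quartiles)
```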

Working with Large Datasets and Performing Aggregations

Handling large datasets requires efficient data processing techniques. SQL is adept at managing big data through its powerful querying capabilities, including aggregations like summing, averaging, and counting rows.

Large datasets often necessitate scalable solutions for effective analysis.

Using Python with libraries such as Pandas can enhance SQL’s capabilities, offering streamlined aggregation processes and in-depth data exploration.

This combination is especially useful for tasks that require summary statistics or complex calculations on massive datasets, allowing analysts to gain valuable insights efficiently.
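Two common approaches are sketched below with sqlite3 on an invented hits table. One lets the database aggregate so that only summary rows reach Python. The other streams rows in fixed-size chunks when a calculation has to happen in Python. The chunk size of 250 is arbitrary.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE hits (page TEXT, ms INTEGER)")
conn.executemany(
    "INSERT INTO hits VALUES (?, ?)",
    [("home" if i % 2 == 0 else "docs", i) for i in range(1, 1001)],
)

# Option 1: aggregate in the database; only one row per page comes back.
per_page = conn.execute(
    "SELECT page, COUNT(*), SUM(ms), AVG(ms) FROM hits GROUP BY page ORDER BY page"
).fetchall()

# Option 2: stream rows in chunks so the full table is never held in memory.
cur = conn.execute("SELECT ms FROM hits")
total = 0
while True:
    chunk = cur.fetchmany(250)
    if not chunk:
        break
    total += sum(ms for (ms,) in chunk)
conn.close()

print(per_page, total)
```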

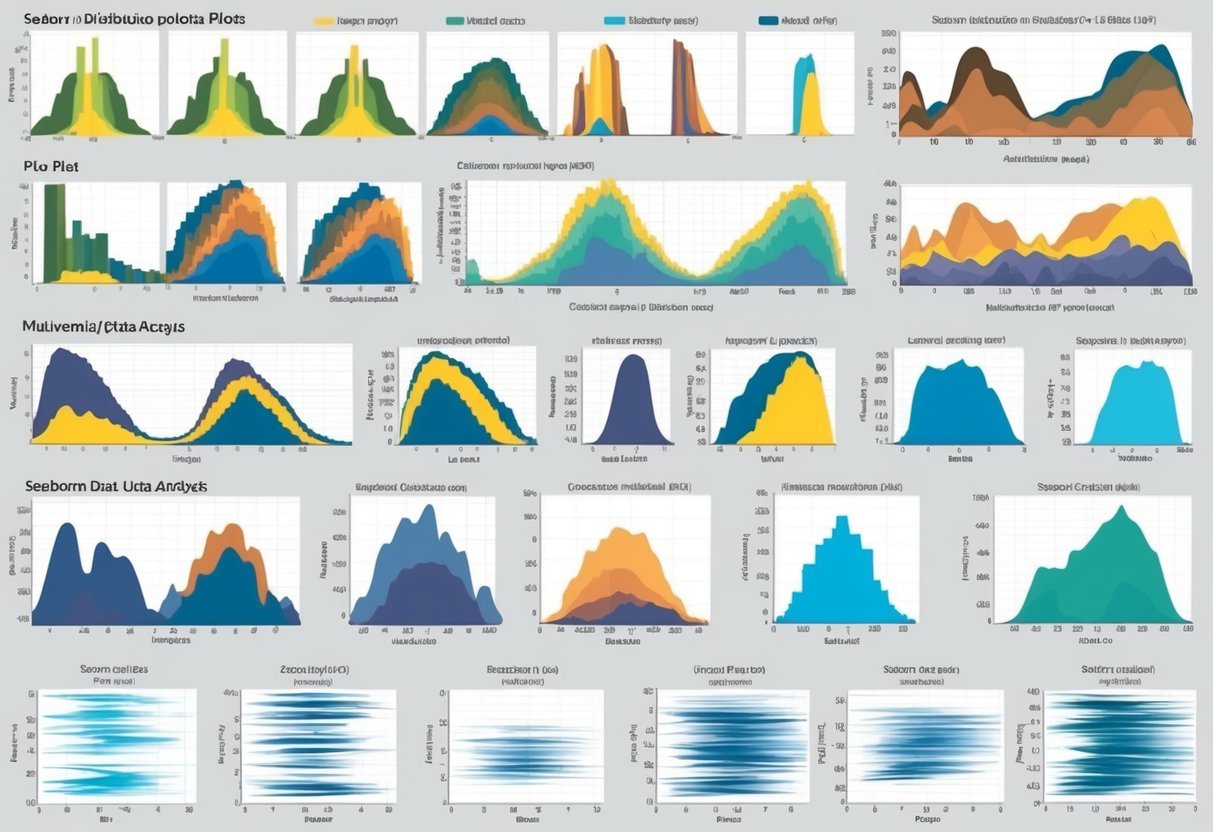

Data Visualization with Python and SQL

Combining Python with SQL enhances data visualization by leveraging Python’s powerful libraries and SQL’s efficient data management. Visual insights are created by using structured data from databases and advanced visualization tools.

Creating Visualizations with Matplotlib

Matplotlib is a popular library used in Python for creating static, interactive, and animated visualizations. It is especially known for its ability to create simple plots with a few lines of code.

Users can generate bar graphs, scatter plots, and histograms, turning raw data into visual stories. Moreover, customizing colors, labels, and styles adds clarity to the information presented.

Matplotlib is also flexible, allowing integration with other Python libraries to enhance visualization potential. This adaptability makes it a preferred choice for many when displaying structured data.

Integrating SQL Data with Python Visualization Libraries

Integrating SQL data into Python libraries involves fetching data from databases using SQL queries.

Python libraries like Pandas work well with SQL, allowing users to easily load database data into Python data frames. These data frames can be directly used in visualization libraries like Matplotlib, Seaborn, or Plotly to generate detailed visual outputs.

For example, Dataquest demonstrates how Python’s ease and SQL’s strength combine for tackling data visualization challenges.

This integration makes large datasets more insightful and accessible for decision-makers.
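As a rough sketch of that flow, assuming Matplotlib is installed, the snippet below queries an invented sales table and draws a bar chart from the result. It uses plain lists instead of a DataFrame to stay short, and it selects the non-interactive Agg backend so it can run without a display.

```python
import io
import sqlite3

import matplotlib
matplotlib.use("Agg")  # non-interactive backend, works without a display
import matplotlib.pyplot as plt

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (month TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("Jan", 120.0), ("Feb", 150.0), ("Mar", 90.0)],
)
rows = conn.execute("SELECT month, revenue FROM sales ORDER BY rowid").fetchall()
conn.close()

months = [month for month, _ in rows]
revenue = [value for _, value in rows]

# Draw a labeled bar chart from the query result.
fig, ax = plt.subplots()
ax.bar(months, revenue)
ax.set_title("Revenue by month")
ax.set_xlabel("Month")
ax.set_ylabel("Revenue")

# Render to an in-memory PNG; pass a file path to savefig to write to disk instead.
buffer = io.BytesIO()
fig.savefig(buffer, format="png")
plt.close(fig)
png_bytes = buffer.getvalue()
```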

Data Visualization Best Practices

When creating visualizations, clarity and accuracy are crucial. Visualizations should effectively communicate the data’s message without overwhelming the audience.

Using clear labels, legends, and titles ensures the audience understands key points at a glance.

It is important to choose appropriate types of graphs or charts for the data being presented. Consistent use of colors and styles helps maintain a cohesive look and feel throughout the visuals.

Applying these best practices makes structured data more understandable and actionable.

A well-structured visualization enables faster and better decision-making by making complex data more digestible.

Machine Learning with Structured Data

Machine learning with structured data involves the use of organized data, often found in tables or databases, to build predictive models. This process typically involves creating pipelines for efficient data handling, preprocessing data with SQL and Python, and training models on the processed data.

Building Machine Learning Pipelines

Building machine learning pipelines is essential for managing structured data efficiently. Pipelines streamline the process by automating tasks such as data extraction, transformation, and loading.

They help in organizing data flow, ensuring each step—like data preprocessing or model training—receives the necessary input.

Python libraries, such as Scikit-Learn and TensorFlow, often support pipeline creation. Using these libraries, developers can define a sequence of operations that handle data preparation and modeling tasks seamlessly.

By linking these tasks together, pipelines help maintain consistency and accuracy in machine learning projects.

Data Preprocessing with SQL and Python

Data preprocessing is crucial for machine learning tasks, especially with structured data. SQL databases are ideal for retrieving and manipulating data. SQL queries can extract and clean data, addressing null values or outliers.

Python complements SQL by providing libraries like Pandas and NumPy, enhancing data manipulation capabilities.

Python’s Pandas library works well with SQL by converting query results into DataFrames. This conversion allows further data cleaning and transformation using Python’s vast array of data processing functions.

By integrating SQL and Python, data preprocessing becomes more efficient, preparing the data optimally for model training.
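One possible division of labor is sketched below. SQL drops rows with a missing income, filters an implausible outlier, and marks missing ages with a sentinel. Python then fills the sentinel with the median of the known ages. The raw table, the income cap, and the -1 sentinel are all assumptions chosen for illustration.

```python
import sqlite3
import statistics

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw (id INTEGER PRIMARY KEY, age INTEGER, income REAL)")
conn.executemany(
    "INSERT INTO raw (age, income) VALUES (?, ?)",
    [(34, 52000.0), (None, 48000.0), (29, None), (41, 9_000_000.0)],
)

# SQL step: exclude NULL incomes and incomes above the cap,
# and replace NULL ages with a sentinel value of -1.
rows = conn.execute(
    """
    SELECT id, COALESCE(age, -1) AS age, income
    FROM raw
    WHERE income IS NOT NULL AND income <= :income_cap
    ORDER BY id
    """,
    {"income_cap": 1_000_000.0},
).fetchall()
conn.close()

# Python step: impute the sentinel with the median of the known ages.
known_ages = [age for _, age, _ in rows if age != -1]
median_age = statistics.median(known_ages)
cleaned = [(i, age if age != -1 else median_age, income) for i, age, income in rows]
print(cleaned)
```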

Training Models on SQL Data

Training models on SQL data involves accessing structured data stored in relational databases, cleaning it as needed, and then applying machine learning algorithms. Python libraries like Scikit-Learn facilitate this process once the query results have been loaded into Python, typically as a Pandas DataFrame or NumPy array.

Once the data is preprocessed, it can be fed into machine learning models designed for classification, regression, or clustering.

Libraries such as TensorFlow enable complex model architectures, enhancing predictive accuracy. Utilizing SQL ensures that training data is consistent, high-quality, and readily accessible, which is critical for developing reliable models.

Database Management and Optimization

Effective database management and optimization are crucial for leveraging Python with SQL. Key aspects include indexing and performance tuning, ensuring robust backup strategies and security protocols, and scaling SQL databases like PostgreSQL and MySQL.

Indexing and Performance Tuning

Indexing is essential for speeding up queries in SQL databases. Without a suitable index, the database may have to scan an entire table to find matching rows, which becomes slow on large datasets. PostgreSQL and MySQL use indexes to let the database engine locate rows without reading the whole table.

Performance tuning involves modifying database settings. Techniques such as query optimization and adjusting memory settings are common.

For instance, reducing the complexity of SQL queries can result in fewer resources required, leading to faster performance.

Regular monitoring and updating index statistics help maintain the efficiency of the database. Performance tuning should be an ongoing process as data grows and access patterns evolve.
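The effect of an index can be observed from Python by asking the database for its query plan before and after creating one. The sketch below uses SQLite's EXPLAIN QUERY PLAN as a stand-in. PostgreSQL and MySQL have their own EXPLAIN output, which looks different. The orders table and the plan helper are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(10_000)],
)

def plan(conn, sql, params=()):
    """Return the human-readable detail column of SQLite's query plan."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

sql = "SELECT amount FROM orders WHERE customer_id = ?"

plan_before = plan(conn, sql, (42,))   # expected to describe a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = plan(conn, sql, (42,))    # expected to mention the new index
conn.close()

print(plan_before)
print(plan_after)
```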

Backup Strategies and Database Security

Implementing reliable backup strategies ensures data safety. Regular backups prevent data loss and can save time during recovery. For SQL databases like MySQL, setting a schedule using tools or automated scripts is effective.
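For SQLite, Python's sqlite3 module exposes a backup method on the connection, which makes a scripted backup very short. The sketch below uses it as a stand-in. Server databases such as MySQL normally rely on their own dump or backup tools, which a Python script would invoke instead. Both databases here are in memory, whereas in practice the target would be a file path.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE notes (body TEXT)")
source.execute("INSERT INTO notes VALUES ('keep me safe')")
source.commit()

# In practice the target would be a file, e.g. sqlite3.connect("backup.db").
target = sqlite3.connect(":memory:")
source.backup(target)  # copies the whole database into the target connection

restored = target.execute("SELECT body FROM notes").fetchall()
source.close()
target.close()
print(restored)
```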

Database security involves restricting unauthorized access. Using user roles and permissions, administrators can control who sees and changes data.

PostgreSQL and SQL Server 2017 offer robust security features, like encryption, to protect sensitive information.

Maintaining logs of database activities serves as an audit trail. Strong passwords and limiting server access also strengthen security strategies.

Scaling SQL Databases with Python

As data grows, scaling databases can become necessary. Horizontal scaling involves adding more machines to handle increased load, while vertical scaling enhances machine power. Python aids in scripting and automation of scaling processes.

For large-scale applications, using database replication or sharding can be beneficial. Tools like SQLAlchemy facilitate building these scalable applications with Python by abstracting database interaction.

PostgreSQL and MySQL offer native support for scaling options. Using Python with these databases can help automate tasks and handle scaling more effectively.

Preparing for growth ensures that performance stays reliable even as user demands increase.

Practical Integration Scenarios

Integrating Python with SQL can optimize various operations, enhance data management, and simplify complex analytics tasks. This is especially beneficial in e-commerce data handling and when working with older database systems, as demonstrated with the AdventureWorks sales data.

E-commerce Data Management

E-commerce businesses can streamline operations by integrating SQL databases with Python. Python helps automate tasks like inventory updates, order tracking, and customer data management.

For instance, using Python scripts, companies can seamlessly pull sales data from SQL databases to analyze purchase patterns.

Visualizing trends with Python libraries such as Matplotlib is straightforward, enabling businesses to make data-driven decisions.

By combining SQL’s robust querying capabilities with Python’s flexibility, e-commerce platforms can enhance their data analytics, resulting in improved customer experiences and optimized inventory management.

Integrating Python with Legacy Database Systems

Legacy database systems often pose challenges due to outdated frameworks. Python serves as a bridge for integrating modern operations with these older systems.

With libraries like PyMySQL or sqlite3, developers can execute CRUD (Create, Read, Update, Delete) operations efficiently. This enables organizations to maintain older databases while incorporating new data handling methods.

By leveraging Python in legacy systems, businesses can automate routine tasks and improve data accessibility.

This integration not only preserves valuable historical data but also modernizes data management practices, ensuring that legacy systems remain viable and useful.

Case Study: AdventureWorks Sales Data

AdventureWorks, a sample database often used for learning and testing, demonstrates the potential of integrating Python with SQL. Through Python, users can process the FactInternetSales table, extracting meaningful insights into sales performance.

Simple queries can transform raw sales data into structured formats suitable for in-depth analysis.

Using Python libraries, one can compute statistics, plot sales trends, or predict future sales. This approach illustrates how integrating Python enhances data analytics capabilities, allowing for more detailed and actionable insights into business operations.

By following these methods, analysts can unlock the full potential of their AdventureWorks data.

Optimizing SQL Queries in Python

Combining Python with SQL can greatly enhance the efficiency of data operations. This synergy helps in analyzing query performance, refactoring for greater efficiency, and using powerful tools like SQLAlchemy for ORM-based queries.

Analyzing Query Performance

To optimize SQL queries, it’s important to first analyze how they perform. This involves using tools to measure execution time and resource utilization.

Techniques such as query profiling can identify bottlenecks by highlighting inefficient operations. For instance, checking execution plans can reveal whether indexes are being utilized effectively or if a query is running sequential scans unnecessarily.

Visualization tools can also help by representing this data graphically.

Understanding these performance metrics is critical in refining and optimizing queries. Also, regularly monitoring these aspects ensures that any changes in data or query complexity are addressed swiftly and accurately.
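A simple starting point for measuring execution time from Python is to wrap a query in a timer. The helper below, timed_query, is a hypothetical name, and the table is synthetic. Timings vary by machine, so the sketch prints the elapsed time without assuming any particular value.

```python
import sqlite3
import time

def timed_query(conn, sql, params=()):
    """Run a query and return (rows, elapsed_seconds)."""
    start = time.perf_counter()
    rows = conn.execute(sql, params).fetchall()
    return rows, time.perf_counter() - start

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (n INTEGER)")
conn.executemany("INSERT INTO t VALUES (?)", [(i,) for i in range(50_000)])

rows, elapsed = timed_query(conn, "SELECT COUNT(*) FROM t WHERE n % 7 = 0")
conn.close()
print(f"{rows[0][0]} matching rows in {elapsed:.6f} s")
```

Running the same query several times and comparing the timings before and after a change gives a more reliable picture than a single measurement.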

Refactoring for Efficiency

Refactoring involves rewriting SQL queries to enhance their performance while maintaining their function. This process may include rewriting complex joins or subqueries into simpler forms.

Indexing is another vital strategy; adding or adjusting indexes can drastically reduce query time for large datasets.

Moreover, breaking down larger queries into smaller, manageable parts simplifies processing and can improve speed. Batch processing is beneficial for handling large volumes of data, minimizing the strain on databases.

These strategies collectively lead to more efficient querying and improved performance across applications.

Using SQLAlchemy for ORM-Based Queries

SQLAlchemy is a popular Python toolkit that simplifies interaction with databases. It supports handling databases via an Object-Relational Mapping (ORM) approach, which allows developers to work with SQL databases in Pythonic terms.

This abstraction can make complex data-querying tasks easier to understand and maintain.

Through SQLAlchemy, one can streamline query building, making it easier to compose and execute SQL operations.

Furthermore, it facilitates integrating Python scripts with databases, improving both performance tuning and productivity. This encourages writing cleaner, more efficient code that performs well across different environments.

Used well, SQLAlchemy can improve not only how queries are written but also overall application logic and architecture, making database interactions more robust and easier to scale.

Python and SQL in Web Development

Python plays a crucial role in web development, especially when integrating SQL databases. By using frameworks like Flask and Django, developers can create dynamic web applications. Integrating SQL databases allows for effective data management, while handling user data facilitates personalized experiences.

Developing Web Applications with Flask and Django

Flask and Django are popular Python frameworks for web development. Flask is known for its simplicity and flexibility, making it ideal for small to medium projects. Django is more robust, providing a lot of built-in features to speed up development.

Both frameworks allow for easy integration with SQL databases. Flask can use extensions like Flask-SQLAlchemy to work with databases, while Django comes with an ORM (Object-Relational Mapping) system that simplifies database interactions.

These features empower developers to build feature-rich applications that scale well.

Integrating SQL Databases in Web Projects

Integrating SQL databases in web projects is essential for data storage and manipulation. Python offers multiple libraries like sqlite3, mysql.connector, and psycopg2 for this purpose. Each library caters to different database systems, such as SQLite, MySQL, and PostgreSQL, respectively.

Developers use these libraries to execute SQL queries and manage database operations efficiently.

For example, sqlite3 is lightweight and suitable for applications with smaller datasets, while mysql.connector and psycopg2 provide robust solutions for MySQL and PostgreSQL databases.

Proper integration ensures smooth data flow and reliability in web applications.

Managing User Data and Session Information

Handling user data and session information is critical for building interactive web applications. Both Flask and Django offer methods to manage sessions, track user activity, and secure data.

These frameworks allow developers to store and retrieve user information from SQL databases effectively.

Using SQL databases ensures data integrity and security. For instance, Django’s authentication system offers ready-to-use features to manage users, while Flask can leverage extensions like Flask-Login for user session management.

These tools help maintain personalized user experiences and efficient data handling, which are vital for modern web applications.

Security and Best Practices

When integrating Python with SQL, focusing on security and best practices is crucial. Addressing SQL injection, ensuring data integrity, and adhering to industry standards strengthen the application’s security and reliability.

Protecting Against SQL Injection Attacks

SQL injection attacks pose a significant risk when integrating Python with SQL databases. It’s essential to validate user inputs to prevent malicious code from being executed.

Using parameterized queries or prepared statements is vital. Drivers such as psycopg2 for PostgreSQL support parameter binding, making it easier to secure queries.

Avoid using dynamic queries constructed with user inputs. It’s also important to keep software updated to mitigate vulnerabilities.

Regular security audits help identify and fix potential weaknesses promptly. Overall, these practices significantly reduce the risk of SQL injection attacks.
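Parameters can only stand in for values, not for identifiers such as column names. When part of a query's structure must depend on input, one common approach is to check it against a fixed allow-list. The sketch below illustrates this with a hypothetical list_products helper and an invented products table.

```python
import sqlite3

# Only these column names may ever appear in the ORDER BY clause.
ALLOWED_SORT_COLUMNS = {"name", "price"}

def list_products(conn, sort_by, max_price):
    """Bind values as parameters; validate identifiers against an allow-list."""
    if sort_by not in ALLOWED_SORT_COLUMNS:
        raise ValueError(f"unsupported sort column: {sort_by!r}")
    sql = f"SELECT name, price FROM products WHERE price <= ? ORDER BY {sort_by}"
    return conn.execute(sql, (max_price,)).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, price REAL)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [("pen", 2.0), ("book", 12.0), ("lamp", 30.0)],
)

cheap = list_products(conn, "price", 20.0)

# A hostile "column name" is rejected before any SQL is built.
try:
    list_products(conn, "price; DROP TABLE products", 20.0)
    rejected = False
except ValueError:
    rejected = True
conn.close()
print(cheap, rejected)
```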

Ensuring Data Integrity and Consistency

Maintaining data integrity and consistency is crucial in any database application.

Proper use of transactions with commit and rollback ensures that databases maintain a consistent state, even when errors occur. This is particularly important when handling multiple database operations simultaneously.

Validating data before it is entered into the database helps preserve data integrity. Constraints such as foreign keys and unique keys enforce rules at the database level, minimizing errors.
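The sketch below shows unique and foreign key constraints rejecting bad rows in SQLite. The authors and posts tables are invented. Note that SQLite enforces foreign keys only after PRAGMA foreign_keys = ON has been issued on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite checks foreign keys only when enabled
conn.executescript("""
    CREATE TABLE authors (
        id INTEGER PRIMARY KEY,
        email TEXT UNIQUE NOT NULL
    );
    CREATE TABLE posts (
        id INTEGER PRIMARY KEY,
        author_id INTEGER NOT NULL REFERENCES authors(id),
        title TEXT
    );
    INSERT INTO authors (id, email) VALUES (1, 'ada@example.com');
""")

attempts = [
    ("INSERT INTO authors (email) VALUES (?)", ("ada@example.com",)),         # duplicate email
    ("INSERT INTO posts (author_id, title) VALUES (?, ?)", (999, "orphan")),  # no such author
]

violations = []
for sql, params in attempts:
    try:
        conn.execute(sql, params)
    except sqlite3.IntegrityError as exc:
        # The database refuses the row; the application decides how to respond.
        violations.append(str(exc))
conn.close()
print(violations)
```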

It’s also advisable to regularly back up data to prevent loss due to hardware failures or other issues.

Adhering to Industry Standards

Following industry standards ensures that Python and SQL integrations are secure and efficient. Adhering to standards like ISO/IEC 27001 for information security, or to OWASP guidance for web applications, provides direction on implementing best practices.

These standards offer frameworks for securing applications and data, protecting businesses and users alike.

Incorporating Python security practices into development is another key consideration. This may include limiting the use of risky functions such as eval(), using the latest stable Python version, and following best practices from libraries used in the integration process.

Compliance with these standards fosters trust and reliability in software applications.

Frequently Asked Questions

Integrating Python with SQL for database operations can significantly enhance efficiency and functionality. This section will cover best practices, automation methods, scenarios where Python shines over SQL, data manipulation differences, Excel integration, and essential libraries.

What are the best practices for using Python to connect to an SQL Server database?

When connecting Python to an SQL Server database, it’s crucial to use libraries like pyodbc or SQLAlchemy for reliable connections.

Always ensure that database credentials are kept secure and connections are properly closed after use to prevent leaks.
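Both habits can be sketched in a few lines. Here SQLite stands in for SQL Server so the example runs without a server, and APP_DB_PATH is a hypothetical environment variable name. With pyodbc, the connection string or its credentials would be read from the environment in the same way instead of being hard-coded.

```python
import os
import sqlite3
from contextlib import closing

# Read connection details from the environment instead of the source code.
# APP_DB_PATH is a made-up variable name; ":memory:" is a fallback for the demo.
db_path = os.environ.get("APP_DB_PATH", ":memory:")

# closing() guarantees close() is called even if the body raises.
with closing(sqlite3.connect(db_path)) as conn:
    with closing(conn.cursor()) as cur:
        cur.execute("SELECT 1 + 1")
        result = cur.fetchone()[0]

# After the block, the connection is closed and can no longer be used.
try:
    conn.execute("SELECT 1")
    still_open = True
except sqlite3.ProgrammingError:
    still_open = False
print(result, still_open)
```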

How do Python scripts automate and enhance SQL database management?

Python scripts can automate repetitive database tasks such as backups, data extraction, and transformation.

They can also trigger specific SQL operations at scheduled intervals, greatly reducing manual workload. Libraries like pandas can be used to manage large datasets efficiently.

In what scenarios is it more beneficial to process data using Python rather than SQL alone?

Python is better suited for complex data analysis and manipulation tasks that are cumbersome in SQL.

When operations require advanced mathematical computations or integration with machine learning models, Python provides more flexibility and capabilities.

What are the differences in data manipulation capabilities between Python and SQL?

SQL excels at handling structured queries and relational database interactions.

In contrast, Python offers a wider range of data manipulation through its extensive libraries for statistical analysis and visualization. While SQL is efficient for database querying, Python supports dynamic and advanced data processing workflows.

How to integrate Python with SQL for data analysis in Excel?

Python can access and process SQL database data, which can then be exported to Excel using libraries like openpyxl or xlsxwriter.

This integration allows data analysts to leverage Python’s computational power and combine it with Excel’s accessibility for both analysis and presentation.

Which Python libraries are recommended for effective SQL database interactions?

For seamless SQL database interactions, recommended libraries include SQLAlchemy for ORM and query management, and pandas for data manipulation and analysis. Additionally, pyodbc is recommended for connecting to SQL Server. These tools provide robust functionalities to integrate SQL operations within Python scripts efficiently.