Understanding SQL Transactions

SQL transactions are a key part of database management. They ensure data integrity by grouping operations that must succeed or fail together. This concept is based on the ACID properties: Atomicity, Consistency, Isolation, and Durability.

Atomicity ensures that the operations within a transaction either all complete or have no effect at all. If any part fails, the whole transaction is rolled back. This means the database remains unchanged if something goes wrong.

Consistency guarantees that a database remains in a valid state after a transaction. Each transaction moves the database from one valid state to another, ensuring correct data.

Isolation prevents concurrent transactions from interfering with each other. Each transaction appears to occur in isolation, even if others happen simultaneously.

Durability ensures that once a transaction is committed, changes are permanent, even if the system crashes. Data remains reliable and stored safely.

An SQL transaction starts with a BEGIN TRANSACTION command. This marks where the work begins. To save changes, use COMMIT; to undo them, use ROLLBACK.
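As a minimal sketch of these three commands in T-SQL, the script below changes a row twice: once followed by ROLLBACK and once followed by COMMIT. The #Accounts temporary table and its columns are hypothetical and exist only for this illustration.

```sql
-- Hypothetical demo table; temporary tables take part in transactions.
CREATE TABLE #Accounts (AccountID int PRIMARY KEY, Balance decimal(10,2) NOT NULL);
INSERT INTO #Accounts (AccountID, Balance) VALUES (1, 100.00);

-- Change the row, then undo the change.
BEGIN TRANSACTION;
UPDATE #Accounts SET Balance = 50.00 WHERE AccountID = 1;
ROLLBACK TRANSACTION;

SELECT Balance FROM #Accounts WHERE AccountID = 1;  -- still 100.00

-- Change the row again and make the change permanent.
BEGIN TRANSACTION;
UPDATE #Accounts SET Balance = 75.00 WHERE AccountID = 1;
COMMIT TRANSACTION;

SELECT Balance FROM #Accounts WHERE AccountID = 1;  -- now 75.00

DROP TABLE #Accounts;
```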

This control over transactions gives users the ability to manage data securely within databases.

In systems like SQL Server, there are different transaction modes. In autocommit mode, each individual statement is treated as its own transaction and committed automatically when it completes. In contrast, explicit transactions require starting with BEGIN TRANSACTION and ending with COMMIT or ROLLBACK. Learn more about these modes at SQL Shack’s Modes of the Transactions in SQL Server.

Transaction Statements and Commands

SQL transactions play a crucial role in managing data integrity by grouping multiple operations into a single unit. This section explores key transaction commands that allow users to start, commit, and roll back transactions effectively.

The Begin Transaction Statement

The BEGIN TRANSACTION statement marks the start of a database transaction. It ensures that a sequence of operations is executed as a single unit. If any operation within this transaction fails, the results can be undone to maintain data consistency.

This is essential when working with multiple SQL statements that depend on each other. By using BEGIN TRANSACTION, developers can isolate changes until they decide to finalize them. This isolation is critical for applications requiring high data reliability and consistency. The ability to control when a transaction begins allows for precise management of complex operations.

Committing Transactions With Commit Command

The COMMIT command is used to save all changes made during the current transaction. When a transaction is committed, it becomes permanent, and all modifications are applied to the database.

This step is crucial after the successful completion of SQL statements grouped under a transaction. By committing, users ensure that the database reflects all desired changes.

The ability to commit transactions is vital for maintaining a stable and reliable database environment. Developers should carefully decide when to use COMMIT to confirm that all transaction steps have been verified and are accurate.

Rolling Back Transactions With Rollback Command

The ROLLBACK command is used to undo changes made during the current transaction, reverting the database to its previous state. This command is essential in scenarios where errors or issues are detected during transaction execution.

Rolling back transactions helps prevent unwanted database changes that could lead to data corruption or inconsistency. It is a safeguard to maintain data accuracy and integrity, especially in complex operations involving multiple SQL statements.

By using ROLLBACK, developers and database administrators can handle exceptions gracefully and ensure that any problems are rectified before the transaction affects the database state. This proactive approach in SQL management is critical for robust database applications.
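One way to act on a detected problem is to validate the result of a statement before deciding how to end the transaction. The sketch below assumes a hypothetical #Products table and a rule that exactly one row should be updated; when the rule is broken, the work is rolled back.

```sql
CREATE TABLE #Products (ProductID int PRIMARY KEY, Price decimal(10,2) NOT NULL);
INSERT INTO #Products (ProductID, Price) VALUES (1, 20.00), (2, 35.00);

BEGIN TRANSACTION;

-- Intended to reprice one product, but the WHERE clause is too broad.
UPDATE #Products SET Price = 0.00 WHERE ProductID > 0;

-- @@ROWCOUNT reports how many rows the previous statement affected.
IF @@ROWCOUNT <> 1
    ROLLBACK TRANSACTION;   -- unexpected row count: undo the update
ELSE
    COMMIT TRANSACTION;

SELECT ProductID, Price FROM #Products;  -- prices are unchanged: 20.00 and 35.00

DROP TABLE #Products;
```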

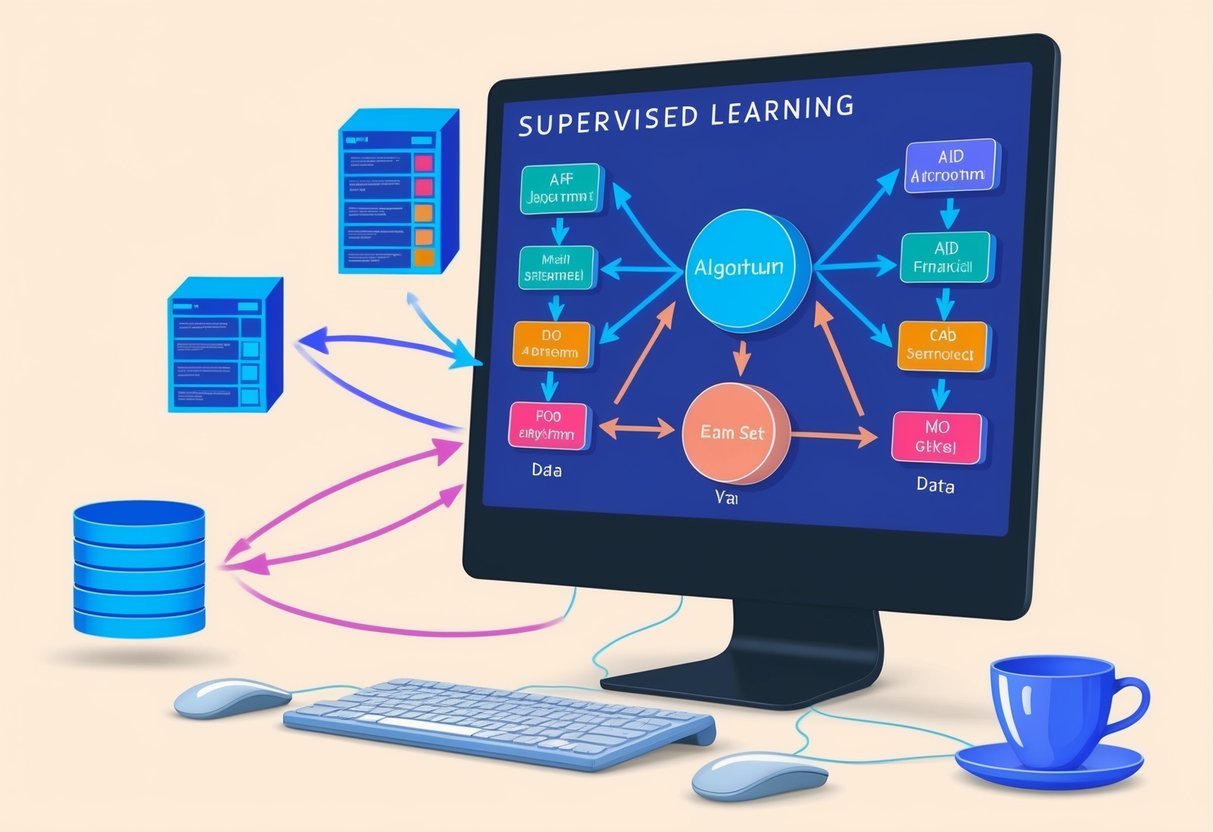

Implementing ACID Properties in SQL

Implementing ACID properties is essential for keeping SQL databases reliable. The four key attributes are Atomicity, Consistency, Isolation, and Durability. They ensure that database transactions are processed reliably.

Atomicity guarantees that all steps in a transaction are completed. If one step fails, the entire transaction is rolled back. This ensures no partial updates occur, keeping the database stable.

Consistency ensures that a transaction takes the database from one valid state to another. This means all data rules, constraints, and validations are upheld after the transaction completes.

Isolation keeps transactions separate from others, preventing unexpected results. Each transaction appears isolated and does not interfere with another. This keeps simultaneous operations from conflicting.

Durability ensures that once a transaction is committed, the changes are permanent. Even in cases of system failures, these changes are saved to disk, maintaining data integrity.

Managing these properties involves choosing the right isolation levels. Isolation levels include:

- Read Uncommitted

- Read Committed

- Repeatable Read

- Serializable

Choosing the right level depends on balancing performance and data integrity. For more details on these concepts, check ACID Properties in DBMS.
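In SQL Server, the level is chosen per session with SET TRANSACTION ISOLATION LEVEL. The following sketch sets a level and then reads it back from the sys.dm_exec_sessions view for the current session; it assumes nothing beyond a normal connection.

```sql
-- Applies to the current session until it is changed again.
SET TRANSACTION ISOLATION LEVEL REPEATABLE READ;

-- sys.dm_exec_sessions reports the level as a number:
-- 1 = Read Uncommitted, 2 = Read Committed, 3 = Repeatable Read, 4 = Serializable.
SELECT transaction_isolation_level
FROM sys.dm_exec_sessions
WHERE session_id = @@SPID;   -- returns 3

-- Return to SQL Server's default level.
SET TRANSACTION ISOLATION LEVEL READ COMMITTED;
```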

Optimizing for performance while ensuring data integrity requires carefully implementing these properties. Proper management helps in building robust database systems.

Isolation Levels and Concurrency

Transaction isolation levels in a database management system control how transactional data is accessed and modified concurrently. Each level strikes a different balance between data consistency and concurrency, and determines which phenomena, such as dirty reads, non-repeatable reads, and phantom reads, a transaction can encounter.

Read Uncommitted

The lowest isolation level is Read Uncommitted. Transactions can read changes made by others before they are committed. This means uncommitted, or dirty, reads are possible. It is fast because readers do not take shared locks and are not blocked by other transactions' exclusive locks, but it can return inconsistent data.

Dirty reads can lead to unreliable data, as the transaction that wrote the data might later be rolled back. This level is often used where speed is prioritized over data accuracy, which can be risky for critical data.
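A dirty read needs two connections to observe, so the sketch below is split into labeled parts meant to be run in two separate query windows in the order shown. The global temporary table ##DirtyReadDemo is a hypothetical name, chosen so that both sessions can see the same table.

```sql
-- Session 1: change a row but leave the transaction open.
CREATE TABLE ##DirtyReadDemo (ID int PRIMARY KEY, Amount int NOT NULL);
INSERT INTO ##DirtyReadDemo (ID, Amount) VALUES (1, 100);

BEGIN TRANSACTION;
UPDATE ##DirtyReadDemo SET Amount = 999 WHERE ID = 1;
-- (no COMMIT yet)

-- Session 2: run this while session 1's transaction is still open.
SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED;
SELECT Amount FROM ##DirtyReadDemo WHERE ID = 1;  -- sees the uncommitted value 999

-- Session 1: undo the change; the value session 2 read was never committed.
ROLLBACK TRANSACTION;
DROP TABLE ##DirtyReadDemo;
```

Under the default Read Committed level with locking, the SELECT in session 2 would instead wait until session 1 finishes.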

Read Committed

Read Committed is a more restrictive isolation level. It ensures that any data read is committed at the time of access, preventing dirty reads. Transactions hold locks only for the duration of the read.

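This level provides a balance between performance and consistency. It is the default in SQL Server and is commonly used where a moderate level of concurrency is acceptable. Although it prevents dirty reads, non-repeatable reads may still occur: reading the same row twice within one transaction can return different values if another transaction commits a change in between.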

Repeatable Read

The Repeatable Read level extends Read Committed by preventing non-repeatable reads. Once a transaction reads data, no other transaction can modify it until the initial transaction completes. This ensures stability for the duration of the transaction.

However, this does not prevent phantom reads, where rows newly inserted by another transaction appear when the same query is run again within the transaction. Repeatable Read is useful in scenarios with moderate data consistency needs where phantom reads are less concerning.

Serializable

The most restrictive isolation level is Serializable. It ensures complete isolation by making concurrent transactions behave as if they had run one after another. Other transactions cannot modify the data the current transaction has read, or insert rows that would match its queries, until it finishes. This level eliminates dirty reads, non-repeatable reads, and phantom reads.

Serializable is ideal for critical operations needing maximum consistency. It can cause significant overhead and decrease concurrency, as it requires extensive locking. For databases needing absolute consistency, this level is effective.

Error Handling in SQL Transactions

Managing errors in SQL transactions is crucial to maintain data integrity. Utilizing tools like TRY…CATCH blocks and understanding @@TRANCOUNT helps in efficiently handling issues and rolling back transactions when necessary.

Using Try…Catch Blocks

TRY…CATCH blocks in T-SQL provide a way to handle errors gracefully during transactions. When an error with a severity higher than 10 that does not close the connection occurs within the TRY block, control is immediately transferred to the CATCH block. Here, functions like ERROR_NUMBER() and ERROR_MESSAGE() can be used to get details about the error.

This approach allows developers to include logic for rolling back transactions, ensuring that any partially completed transaction does not leave the database in an inconsistent state. Learn more about using these blocks with code examples on Microsoft Learn.
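A minimal sketch of this pattern follows, assuming a hypothetical #Orders table. The second insert deliberately repeats a primary key so that the CATCH block runs and rolls back the first insert too.

```sql
CREATE TABLE #Orders (OrderID int PRIMARY KEY, Quantity int NOT NULL);

BEGIN TRY
    BEGIN TRANSACTION;

    INSERT INTO #Orders (OrderID, Quantity) VALUES (1, 5);
    -- Duplicate key: raises error 2627 and transfers control to CATCH.
    INSERT INTO #Orders (OrderID, Quantity) VALUES (1, 7);

    COMMIT TRANSACTION;
END TRY
BEGIN CATCH
    -- Undo the first insert as well, so no partial work remains.
    IF @@TRANCOUNT > 0
        ROLLBACK TRANSACTION;

    SELECT ERROR_NUMBER() AS ErrorNumber, ERROR_MESSAGE() AS ErrorMessage;
END CATCH;

SELECT COUNT(*) AS RowsInOrders FROM #Orders;  -- 0

DROP TABLE #Orders;
```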

Understanding @@TRANCOUNT

The @@TRANCOUNT function returns the number of BEGIN TRANSACTION statements that are currently open on the connection. When @@TRANCOUNT is greater than zero, a transaction is active and a rollback is possible.

This is particularly useful for nested transactions: each BEGIN TRANSACTION increments the count by one, each COMMIT decrements it by one, and a ROLLBACK of the whole transaction resets it to zero.

By checking @@TRANCOUNT before committing or rolling back, developers can avoid the error raised when COMMIT or ROLLBACK is issued with no active transaction. This function proves invaluable in complex transactional operations. For detailed examples, refer to the SQL Shack article.
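The counter's behavior can be observed directly. This sketch assumes a session that starts with no open transaction and with implicit transactions turned off.

```sql
SELECT @@TRANCOUNT AS BeforeBegin;     -- 0 when no transaction is open

BEGIN TRANSACTION;
SELECT @@TRANCOUNT AS AfterBegin;      -- 1

BEGIN TRANSACTION;                     -- a nested BEGIN increments the counter
SELECT @@TRANCOUNT AS AfterNested;     -- 2

COMMIT TRANSACTION;                    -- decrements the counter by one
SELECT @@TRANCOUNT AS AfterCommit;     -- 1

-- Guarded rollback: only issue ROLLBACK when a transaction is actually open.
IF @@TRANCOUNT > 0
    ROLLBACK TRANSACTION;              -- resets the counter to 0

SELECT @@TRANCOUNT AS AfterRollback;   -- 0
```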

Working with Savepoints

In SQL, a savepoint is a powerful tool within a transaction. It allows users to set a specific point to which they can later return if needed. This feature is very useful in complex transactions where partial rollbacks are required. By creating savepoints, users can avoid rolling back an entire transaction if only part of it encounters errors.

The SAVE TRANSACTION command creates a savepoint in an ongoing transaction. The command takes a name chosen by the user, which identifies the savepoint so that it can be referenced later. This name is crucial for managing complex operations efficiently.

Here is a simple example of the save transaction command:

BEGIN TRANSACTION

-- Some SQL operations

SAVE TRANSACTION savepoint1

-- More SQL operations

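To backtrack to a specific point in SQL Server, use ROLLBACK TRANSACTION followed by the savepoint name (other database systems spell this ROLLBACK TO SAVEPOINT). This command reverses all operations performed after the savepoint, while the transaction itself stays open. It helps in managing errors without affecting the whole transaction: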

ROLLBACK TRANSACTION savepoint1

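Some database systems, such as PostgreSQL and MySQL, also provide a RELEASE SAVEPOINT command that discards a savepoint. Once released, the savepoint can no longer serve as a rollback point. SQL Server has no equivalent command; its savepoints simply last until the transaction ends.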
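Putting the savepoint commands together, here is a short runnable sketch for SQL Server. The #AuditLog table and the savepoint name BeforeSecondInsert are hypothetical.

```sql
CREATE TABLE #AuditLog (EntryID int PRIMARY KEY, Note varchar(50) NOT NULL);

BEGIN TRANSACTION;

INSERT INTO #AuditLog (EntryID, Note) VALUES (1, 'kept');

SAVE TRANSACTION BeforeSecondInsert;   -- named savepoint

INSERT INTO #AuditLog (EntryID, Note) VALUES (2, 'discarded');

-- Undo only the work done after the savepoint; the transaction stays open.
ROLLBACK TRANSACTION BeforeSecondInsert;

COMMIT TRANSACTION;

SELECT EntryID, Note FROM #AuditLog;   -- one row: 1, 'kept'

DROP TABLE #AuditLog;
```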

In managing database transactions, combining savepoints with SQL commands like ROLLBACK can provide effective control over data processes. Understanding these commands is vital for efficiently managing SQL databases and ensuring data integrity.

Transaction Modes and Their Usage

Various transaction modes are used in SQL Server, each serving distinct purposes. Understanding these modes helps ensure data integrity and optimize database operations by controlling how transactions are executed. This section explores explicit, implicit, and autocommit transactions.

Explicit Transactions

Explicit transactions give users full control over the transaction lifecycle. The user initiates a transaction with a BEGIN TRANSACTION statement. Following this, all operations belong to the transaction until it ends with a COMMIT or ROLLBACK command.

This approach allows precise management of data, making it useful for critical updates that require certainty and control over changes.

If an error occurs, a rollback ensures no partial changes remain. This atomicity guarantees that all steps complete successfully or none occur at all. Explicit transactions are favored when precise control over transaction scope is needed. They are especially useful in complex operations that must treat multiple statements as a single unit of work.

Implicit Transactions

Implicit transactions start automatically, without an explicit BEGIN TRANSACTION statement. When this mode is on and no transaction is open, SQL Server starts a new transaction as soon as a statement such as INSERT, UPDATE, DELETE, or SELECT runs. However, the user must still use COMMIT or ROLLBACK to finalize the transaction.

This mode can be advantageous for ensuring that transactions wrap certain types of operations automatically. However, forgetting to commit or roll back leaves the transaction open, so it keeps holding locks and can block other sessions. The SET IMPLICIT_TRANSACTIONS command controls this mode, switching it ON or OFF as required. Implicit transactions are beneficial in environments where transaction management is part of the process.
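The following sketch shows the mode in action, using a hypothetical #Settings table. The table is created before the mode is switched on so that only the INSERT opens a transaction.

```sql
CREATE TABLE #Settings (SettingName varchar(30) PRIMARY KEY, SettingValue int NOT NULL);

SET IMPLICIT_TRANSACTIONS ON;

-- No BEGIN TRANSACTION: this INSERT opens a transaction on its own.
INSERT INTO #Settings (SettingName, SettingValue) VALUES ('MaxRetries', 3);
SELECT @@TRANCOUNT AS OpenTransactions;   -- 1

-- The work stays pending until it is explicitly finished.
ROLLBACK TRANSACTION;

-- Switch the mode off before querying, so the SELECT does not open a new transaction.
SET IMPLICIT_TRANSACTIONS OFF;

SELECT COUNT(*) AS RowsAfterRollback FROM #Settings;   -- 0

DROP TABLE #Settings;
```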

Autocommit Transactions

Autocommit transactions are the default mode in SQL Server. Every individual statement is treated as a transaction: it commits automatically if it completes successfully and rolls back if it fails.

This mode simplifies transaction management by removing explicit control from the user. Users do not need to define the transaction scope, which allows quick and simple statement execution.

Unlike explicit and implicit modes, autocommit ensures changes are saved instantly after each operation, reducing the chance of uncommitted transactions affecting performance.

It is ideal for scenarios where each statement is independent and does not require manual transaction management, making it efficient for routine data manipulations.
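To illustrate that each statement stands alone in autocommit mode, the sketch below runs three inserts against a hypothetical #Tags table with no explicit transaction. The middle insert fails, yet the statements around it are unaffected. It assumes the default setting XACT_ABORT OFF.

```sql
CREATE TABLE #Tags (TagID int PRIMARY KEY, Label varchar(20) NOT NULL);

-- Commits immediately as its own transaction.
INSERT INTO #Tags (TagID, Label) VALUES (1, 'first');

-- Fails with a duplicate-key error; only this statement is rolled back.
BEGIN TRY
    INSERT INTO #Tags (TagID, Label) VALUES (1, 'duplicate');
END TRY
BEGIN CATCH
    PRINT 'Second insert failed: ' + ERROR_MESSAGE();
END CATCH;

-- Still runs and commits independently of the failure above.
INSERT INTO #Tags (TagID, Label) VALUES (2, 'second');

SELECT TagID, Label FROM #Tags ORDER BY TagID;   -- rows 1 'first' and 2 'second'

DROP TABLE #Tags;
```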

DML Operations in Transactions

DML operations in transactions ensure that SQL statements like INSERT, UPDATE, and DELETE are executed as a single unit. This guarantees data integrity and consistency, allowing multiple operations to succeed or fail together.

Inserting Records With Transactions

In a transaction, the INSERT statement adds new records to a table. Transactions help maintain data integrity by ensuring that each insert operation completes fully before committing to the database.

For example, if an application needs to add orders and update inventory in one go, using a transaction will prevent partial updates if a failure occurs.

A typical transaction example that involves inserting records may include steps to begin the transaction, execute multiple insert statements, and commit. If an error arises, a rollback can reverse the changes, maintaining consistency.

This approach is crucial in applications where foreign key constraints and multiple related table updates occur, making the process efficient and reliable.
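The order-and-inventory scenario above might be sketched as follows. The #Orders and #Inventory tables and the CHECK constraint on stock are hypothetical; the order asks for more units than are available, so the whole transaction is rolled back.

```sql
CREATE TABLE #Inventory (ProductID int PRIMARY KEY, InStock int NOT NULL CHECK (InStock >= 0));
CREATE TABLE #Orders (OrderID int PRIMARY KEY, ProductID int NOT NULL, Quantity int NOT NULL);
INSERT INTO #Inventory (ProductID, InStock) VALUES (10, 3);

BEGIN TRY
    BEGIN TRANSACTION;

    INSERT INTO #Orders (OrderID, ProductID, Quantity) VALUES (1, 10, 5);

    -- Only 3 in stock, so this violates the CHECK constraint and raises an error.
    UPDATE #Inventory SET InStock = InStock - 5 WHERE ProductID = 10;

    COMMIT TRANSACTION;
END TRY
BEGIN CATCH
    IF @@TRANCOUNT > 0
        ROLLBACK TRANSACTION;   -- the order row is removed along with the failed update
END CATCH;

SELECT (SELECT COUNT(*) FROM #Orders) AS OrderRows,                       -- 0
       (SELECT InStock FROM #Inventory WHERE ProductID = 10) AS InStock;  -- 3

DROP TABLE #Orders;
DROP TABLE #Inventory;
```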

Updating Records Within a Transaction

UPDATE commands modify existing data within tables. When executed inside a transaction, they ensure that all changes are atomic, consistent, and isolated.

This means that either all updates are applied, or none are, preventing data corruption.

Consider a transaction that must adjust user account balances following a bank transfer. All updates to the sender and receiver accounts would be enclosed in a transaction block.

If any error, such as a network issue, disrupts this process, the transaction can roll back to its original state, thus avoiding any partial updates that could lead to discrepancies.
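A bank transfer along these lines could look like the sketch below. The #Accounts table, account numbers, and amount are hypothetical. Here both updates succeed, so they are committed together; on any error the CATCH block would roll both back and re-raise the error.

```sql
CREATE TABLE #Accounts (AccountID int PRIMARY KEY, Balance decimal(10,2) NOT NULL);
INSERT INTO #Accounts (AccountID, Balance) VALUES (1, 500.00), (2, 200.00);

DECLARE @Amount decimal(10,2) = 150.00;

BEGIN TRY
    BEGIN TRANSACTION;

    UPDATE #Accounts SET Balance = Balance - @Amount WHERE AccountID = 1;  -- sender
    UPDATE #Accounts SET Balance = Balance + @Amount WHERE AccountID = 2;  -- receiver

    COMMIT TRANSACTION;   -- both updates become permanent together
END TRY
BEGIN CATCH
    IF @@TRANCOUNT > 0
        ROLLBACK TRANSACTION;   -- neither balance changes
    THROW;                      -- re-raise so the caller sees the error
END CATCH;

SELECT AccountID, Balance FROM #Accounts ORDER BY AccountID;  -- 350.00 and 350.00

DROP TABLE #Accounts;
```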

Deleting Records in the Context of a Transaction

Deleting records through a transaction allows multiple deletions to be treated as one inseparable action.

For instance, when removing outdated customer data across related tables, the transaction ensures that all deletions occur seamlessly or not at all.

In scenarios where foreign key relationships exist, a transaction provides a safeguard. If a delete operation affects multiple related tables, executing these deletions within a transaction ensures that referential integrity is preserved.

This means if any part of the delete process encounters an error, the transaction rollback feature will revert all changes, thus keeping the database consistent and free from orphaned records.
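As a sketch of a multi-table delete, the script below removes a customer and the related address in one transaction. It uses SET XACT_ABORT ON, a SQL Server setting that rolls back the whole transaction automatically on a run-time error. The table names are hypothetical, and because SQL Server does not enforce foreign keys on temporary tables, the parent-child relationship here is by convention only.

```sql
CREATE TABLE #Customers (CustomerID int PRIMARY KEY, Name varchar(50) NOT NULL);
CREATE TABLE #Addresses (AddressID int PRIMARY KEY, CustomerID int NOT NULL);
INSERT INTO #Customers (CustomerID, Name) VALUES (102, 'Jane Doe');
INSERT INTO #Addresses (AddressID, CustomerID) VALUES (201, 102);

SET XACT_ABORT ON;   -- any run-time error now rolls back the entire transaction

BEGIN TRANSACTION;
DELETE FROM #Addresses WHERE CustomerID = 102;   -- child rows first
DELETE FROM #Customers WHERE CustomerID = 102;   -- then the parent row
COMMIT TRANSACTION;

SET XACT_ABORT OFF;

SELECT (SELECT COUNT(*) FROM #Customers) AS CustomersLeft,   -- 0
       (SELECT COUNT(*) FROM #Addresses) AS AddressesLeft;   -- 0

DROP TABLE #Addresses;
DROP TABLE #Customers;
```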

Using transactions for deletes is vital in managing critical business processes.

Working with SQL Server Transactions

SQL Server transactions are essential for ensuring data integrity. They treat a series of operations as a single unit of work. If all the operations in the transaction are successful, the changes are committed. Otherwise, they are rolled back.

Transact-SQL (T-SQL) is the language used to execute these transactions. It includes several statements such as BEGIN TRANSACTION, COMMIT, and ROLLBACK. These commands allow control over the transaction process.

There are three main transaction modes in SQL Server:

- Autocommit: This is the default mode where each T-SQL statement is treated as a transaction.

- Explicit: Transactions start with BEGIN TRANSACTION and end with COMMIT or ROLLBACK.

- Implicit: Set by a specific command, and the next T-SQL statement automatically starts a transaction.

In SQL Server, named transactions can be used. Each BEGIN TRANSACTION can have a name, which helps in managing multiple or nested transactions.

Example:

BEGIN TRANSACTION Tran1

-- SQL statements

COMMIT TRANSACTION Tran1

Proper use of transactions ensures that the database remains consistent despite system failures or errors. They are central to maintaining data accuracy and reliability. Using transactions wisely in SQL Server can help manage large and complex databases efficiently.

Nested Transactions and Their Scope

In SQL Server, nested transactions are not truly separate transactions. They depend on the outcome of the outermost transaction. If the outer transaction rolls back, all nested ones do too. When the outermost transaction commits, only then does any part of the nested transaction take effect.

Nested transactions look like this:

BEGIN TRAN OuterTran

-- some SQL statements

BEGIN TRAN InnerTran

-- more SQL statements

COMMIT TRAN InnerTran

COMMIT TRAN OuterTran

Even though InnerTran is committed, if OuterTran rolls back, all actions revert.
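This behavior can be checked with a small runnable sketch. The #Steps table is hypothetical; the inner transaction is committed, but the final rollback still discards its insert.

```sql
CREATE TABLE #Steps (StepID int PRIMARY KEY);

BEGIN TRANSACTION OuterTran;
INSERT INTO #Steps (StepID) VALUES (1);

BEGIN TRANSACTION InnerTran;
INSERT INTO #Steps (StepID) VALUES (2);
COMMIT TRANSACTION InnerTran;   -- only lowers @@TRANCOUNT from 2 to 1

ROLLBACK TRANSACTION;           -- rolls back to the outermost BEGIN TRANSACTION

SELECT COUNT(*) AS RowsLeft FROM #Steps;   -- 0: the "committed" inner insert is gone too

DROP TABLE #Steps;
```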

Batch-scoped transactions are a separate concept that applies only to Multiple Active Result Sets (MARS). A transaction started under a MARS session is scoped to the batch that began it, and SQL Server rolls it back automatically if it has not been committed or rolled back by the time the batch completes.

When considering using nested transactions, some guidelines include:

- Expect them mainly in complex procedures, for example when a stored procedure that opens its own transaction is called from code that already has one open.

- Be aware that they don’t protect inner transactions if an outer transaction fails.

- Understand that they are useful for organizing and structuring SQL statements but don’t create independent transaction control.

For more detailed examples and explanations, one might check out resources like SQL Server Nested Transactions to get insights from experts in the field.

Managing Transactions in SQL Databases

Transactions play a crucial role in SQL databases, ensuring data integrity and consistency. A transaction is a sequence of operations performed as a single unit. If successful, changes are saved to the database permanently.

To begin managing a transaction, the BEGIN TRANSACTION command is used. This marks the starting point of the transaction.

COMMIT is vital as it saves all changes made during the transaction. If there are errors, a ROLLBACK can undo changes, helping maintain database consistency.

BEGIN TRANSACTION;

-- SQL operations

COMMIT;

In inventory management, managing transactions is essential. They ensure stock levels are accurate, reflecting real-time changes, and preventing errors due to simultaneous updates.

Proper transaction management helps prevent deadlocks, ensuring smooth operations. Transactions should be kept as short as possible to reduce the chances of conflicts.

Handling transactions in an SQL database requires understanding isolation levels. These levels control how transaction changes are visible to others, affecting database performance and consistency.

Effective use of transactions is crucial for database reliability. Techniques like Explicit Transactions offer control over the transaction process, ensuring that data changes are only committed when all operations proceed without error. This approach is especially useful in large-scale database applications, ensuring robust data management and integrity.

Implementing Transactions in a Sample Customers Table

Implementing transactions in a SQL database helps ensure data integrity. In a Customers table, transactions can be used to manage changes such as inserting new customers or updating existing ones.

Consider a scenario where you need to add a new customer together with an address, so that both entries link correctly. If there is an issue such as a duplicate primary key, the transaction should roll back to prevent incomplete data.

A typical transaction involves these steps:

- Begin Transaction: Start a new transaction.

- Perform Operations: Use SQL statements like INSERT or UPDATE.

- Commit or Rollback: Commit the transaction if successful or roll back if any statement fails.

Example: Adding a New Customer

Suppose a new customer needs to be added. The process might look like this:

BEGIN TRY

BEGIN TRANSACTION;

INSERT INTO Customers (CustomerID, Name, Email)

VALUES (102, 'Jane Doe', 'jane.doe@example.com');

INSERT INTO Addresses (AddressID, CustomerID, Street, City)

VALUES (201, 102, '123 Elm St', 'Springfield');

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

-- An error in either INSERT lands here; @@ERROR alone would only reflect the last statement.

IF @@TRANCOUNT > 0

ROLLBACK TRANSACTION;

END CATCH;

In this example, the CustomerID acts as a primary key in the Customers table and a foreign key in the Addresses table. If something goes wrong in the process, the transaction ensures that partial data is not saved.

By carefully managing transactions, database administrators can maintain consistent and reliable data across tables. More detailed examples can be explored at Implement transactions with Transact-SQL.

Frequently Asked Questions

In learning about SQL transactions, it’s important to understand how transactions work, their structure, and how they are used in SQL databases. Key topics include transaction principles, specific SQL statements, and best practices.

What are the fundamental principles of transactions in SQL databases?

Transactions are based on the ACID principles: Atomicity, Consistency, Isolation, and Durability. These ensure that a series of database operations either all occur or none do, maintain data integrity, manage concurrent access, and persist changes.

How does the BEGIN TRANSACTION statement work in SQL?

The BEGIN TRANSACTION statement marks the starting point of a transaction. It signals the database to begin recording operations as a single unit of work that can be either committed or rolled back as needed.

Can you provide an example of an SQL transaction with a ROLLBACK operation?

Consider a transaction that updates two related tables. If an error occurs after the first update, a ROLLBACK operation undoes all changes made within the transaction, ensuring the database returns to its state prior to the transaction’s start.

What is the difference between a simple SQL query and a transaction?

A simple SQL query typically involves a single operation. In contrast, a transaction consists of a series of operations executed as a single unit, providing control over execution to ensure data integrity and error recovery through commits and rollbacks.

How are transactions implemented in SQL Server stored procedures?

In SQL Server, transactions can be implemented within stored procedures by using BEGIN TRANSACTION, followed by SQL commands, and concluded with either COMMIT or ROLLBACK. This structure manages data operations effectively within procedural logic.
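A minimal sketch of that structure is shown below. The temporary procedure #AddCustomerWithAddress, its parameters, and the temporary tables are hypothetical names used only for illustration, and GO is the batch separator recognized by tools such as SSMS and sqlcmd.

```sql
CREATE TABLE #Customers (CustomerID int PRIMARY KEY, Name varchar(50) NOT NULL);
CREATE TABLE #Addresses (AddressID int PRIMARY KEY, CustomerID int NOT NULL, City varchar(50) NOT NULL);
GO

-- The leading # makes this a temporary procedure, private to the current session.
CREATE PROCEDURE #AddCustomerWithAddress
    @CustomerID int,
    @Name varchar(50),
    @AddressID int,
    @City varchar(50)
AS
BEGIN
    SET NOCOUNT ON;

    BEGIN TRY
        BEGIN TRANSACTION;

        INSERT INTO #Customers (CustomerID, Name) VALUES (@CustomerID, @Name);
        INSERT INTO #Addresses (AddressID, CustomerID, City) VALUES (@AddressID, @CustomerID, @City);

        COMMIT TRANSACTION;
    END TRY
    BEGIN CATCH
        IF @@TRANCOUNT > 0
            ROLLBACK TRANSACTION;   -- leave neither row behind
        THROW;                      -- report the original error to the caller
    END CATCH;
END;
GO

EXEC #AddCustomerWithAddress @CustomerID = 102, @Name = 'Jane Doe', @AddressID = 201, @City = 'Springfield';

SELECT (SELECT COUNT(*) FROM #Customers) AS CustomerRows,   -- 1
       (SELECT COUNT(*) FROM #Addresses) AS AddressRows;    -- 1

DROP PROCEDURE #AddCustomerWithAddress;
DROP TABLE #Addresses;
DROP TABLE #Customers;
```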

What are the best practices for managing SQL transactions effectively?

Effective transaction management includes keeping transactions short and using proper locking strategies. It also involves ensuring that error handling is robust to avoid data inconsistencies. Monitoring transaction log sizes and handling long-running transactions is also essential for optimal performance.