In the fast-moving world of data analysis, Power BI stands out as a tool for transforming data formats efficiently. It lets users manage large amounts of data with relative ease and turn that data into actionable insights.

Learning to recognize patterns during data transformation is crucial for getting the most out of Power BI, because it helps analysts identify trends and anomalies quickly.

By mastering data transformations and pattern recognition within Power BI, analysts can streamline their processes and improve data-driven decision-making. Understanding these concepts helps untangle complex datasets and makes important information more accessible and useful.

1) Mastering Data Type Conversion

Data type conversion is crucial in Power BI to ensure accurate data analysis and reporting. When importing data, each column should have the correct data type to prevent errors.

In the Power Query Editor, the Transform tab provides options to change data types efficiently. Users can select a column and apply the appropriate data type, such as text, whole number, decimal number, or date, so that calculations work correctly.

Choosing the wrong data type can lead to calculation errors. For instance, if a numerical value is stored as text, it cannot be used in arithmetic operations, which distorts analytical results.

Properly setting data types helps avoid such issues, ensuring reliable data outcomes.

Different views in Power BI, such as Data view and Report view, offer different data type options. Some types, such as Date/Time/Timezone, exist only in Power Query and are converted to a common model type (Date/Time) when loaded into the model.

Practical examples include converting dates stored as text into the Date type for time-based analysis. Similarly, converting monetary values stored as strings to a decimal type supports financial calculations.

These conversions streamline data processes and ensure consistency across reports.
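Power Query performs these conversions through its data type settings, not through Python. As a rough illustration of what changes, the following Python sketch converts two hypothetical text columns (`OrderDate` and `Amount`) into real date and decimal values. It assumes ISO-formatted dates and dollar amounts with comma thousands separators; real sources may need different parsing rules.

```python
from datetime import date, datetime
from decimal import Decimal


def to_date(text: str) -> date:
    """Parse a date stored as text, assuming ISO 'YYYY-MM-DD' formatting."""
    return datetime.strptime(text.strip(), "%Y-%m-%d").date()


def to_decimal(text: str) -> Decimal:
    """Parse a monetary string, assuming a leading '$' and ',' thousands separators."""
    cleaned = text.strip().replace("$", "").replace(",", "")
    return Decimal(cleaned)


# Hypothetical rows as they might arrive from a CSV: every value is text.
raw_rows = [
    {"OrderDate": "2024-03-15", "Amount": "$1,234.50"},
    {"OrderDate": "2024-04-02", "Amount": "$99.95"},
]

typed_rows = [
    {"OrderDate": to_date(r["OrderDate"]), "Amount": to_decimal(r["Amount"])}
    for r in raw_rows
]

# With text, "+" concatenates the strings; with Decimal, it adds the amounts.
text_total = raw_rows[0]["Amount"] + raw_rows[1]["Amount"]
numeric_total = sum(r["Amount"] for r in typed_rows)
```

After conversion, `numeric_total` holds 1334.45, while `text_total` is just the two strings glued together, which is the kind of silent error a wrong data type causes.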

Understanding how less common types convert, such as Duration becoming a Decimal Number, helps maintain data integrity within a dataset. It also makes it easier for users to manipulate data in Power BI with confidence.
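To make the Duration-to-Decimal conversion concrete: Power Query expresses a duration as a number of days when converting it to a number, so 1 day, 20 hours, and 30 minutes becomes roughly 1.854. The Python sketch below reproduces that arithmetic with `timedelta`. Treat it as an illustration of the convention, not as Power Query's own implementation.

```python
from datetime import timedelta

SECONDS_PER_DAY = 86_400


def duration_to_decimal_days(duration: timedelta) -> float:
    """Express a duration as fractional days (the convention assumed here)."""
    return duration.total_seconds() / SECONDS_PER_DAY


# Hypothetical shift length: 1 day, 20 hours, 30 minutes.
shift = timedelta(days=1, hours=20, minutes=30)
as_days = duration_to_decimal_days(shift)
```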

2) Utilizing Power Query for Data Transformation

Power Query is a powerful tool in Power BI that helps users shape and prepare data without writing code. Users can connect to many data sources, such as Excel workbooks and SQL Server databases. This broad support makes it easier to gather data from multiple places and get it ready for analysis.

The tool provides numerous options for transforming data. Users can filter rows, pivot columns, or merge tables to suit their needs. Creating custom columns adds flexibility for specific calculations or rearrangements.

These features allow for tailored data preparation, ensuring the data fits the intended analysis.
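The following Python sketch walks through three of these operations on small, hypothetical `sales` and `products` tables: filter rows, merge on a shared key, and add a custom column. In Power Query these would be recorded as separate applied steps in M. Here they are plain list comprehensions, chosen only to show the logic.

```python
# Hypothetical source tables.
sales = [
    {"ProductID": 1, "Region": "East", "Qty": 10, "UnitPrice": 2.5},
    {"ProductID": 2, "Region": "West", "Qty": 0, "UnitPrice": 4.0},
    {"ProductID": 1, "Region": "West", "Qty": 4, "UnitPrice": 2.5},
]
products = [
    {"ProductID": 1, "Name": "Widget"},
    {"ProductID": 2, "Name": "Gadget"},
]

# Step 1: filter rows (drop zero-quantity sales).
filtered = [row for row in sales if row["Qty"] > 0]

# Step 2: merge with the products table on ProductID (left-outer style lookup;
# unmatched keys would get None).
names_by_id = {p["ProductID"]: p["Name"] for p in products}
merged = [{**row, "Name": names_by_id.get(row["ProductID"])} for row in filtered]

# Step 3: add a custom column computed from existing columns.
shaped = [{**row, "LineTotal": row["Qty"] * row["UnitPrice"]} for row in merged]
```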

One notable feature is the Power Query Editor, which offers an intuitive interface for applying transformations. Each transformation appears as a step under Applied Steps, so users can review every step and remove or revert changes as needed. This makes modifications easy to track, improving data accuracy and reliability.

Another useful feature is the Advanced Editor, which shows the query's underlying M code for complex transformations. Users can fine-tune their queries there and add comments for clarity. These comments make revisiting or collaborating on projects more straightforward.

Such transparency aids in maintaining a well-organized data transformation process.

Power Query is built directly into Power BI, so prepared data loads into the data model without a separate tool. This integration streamlines the workflow and improves productivity.

Using Power Query simplifies the task of managing large datasets and prepares the data for insightful analysis.

For more tips on using Power Query, check out some best practices in transforming data, which can streamline data preparation and improve workflow efficiency.

3) Implementing Column Pattern Matching

Column pattern matching in Power BI is a useful technique for transforming data. It enables users to identify and replicate patterns across datasets. This method can help automate the process of formatting and cleaning data, saving time and reducing errors.

Power Query in Power BI supports this through its Column From Examples feature. Users type example values for a new column, and Power Query uses pattern matching to generate the formula that produces them.

This feature can be particularly helpful for tasks like data concatenation. For example, when you want to merge names or addresses from multiple columns into a single column, pattern matching simplifies this process.
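The following Python sketch is a toy version of the idea for concatenation, not Power Query's actual inference engine. From one example output, it guesses the separator between two hypothetical columns, checks that the guess reproduces the example, and then applies it to every row. The `infer_separator` helper is hypothetical.

```python
from typing import Optional, Sequence


def infer_separator(parts: Sequence[str], example: str) -> Optional[str]:
    """Guess the literal text joining `parts` in one example output.

    Toy heuristic: take whatever sits between the first and second part,
    then accept it only if joining all parts with it reproduces the example.
    """
    if len(parts) < 2 or not example.startswith(parts[0]):
        return None
    end = example.find(parts[1], len(parts[0]))
    if end == -1:
        return None
    separator = example[len(parts[0]):end]
    return separator if separator.join(parts) == example else None


# Hypothetical source columns (City, State) and one example typed by the user.
rows = [("Seattle", "WA"), ("Austin", "TX"), ("Denver", "CO")]
separator = infer_separator(rows[0], "Seattle, WA")

# Apply the inferred pattern to every row, as a generated formula would.
combined = [separator.join(row) for row in rows] if separator is not None else []
```

A single example is often ambiguous, which is why Power Query lets users keep adding examples until the preview matches.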

To start using column pattern matching, open Power BI Desktop and select Transform Data to open the Power Query Editor. In this editor, select the source columns, choose Add Column > Column From Examples, and type sample output values until the preview shows the desired pattern.

Power Query then writes the corresponding formula in M, the language that powers Power Query. Users can review or edit that formula, so the approach makes tasks more efficient while giving them more control over data manipulation.

With the help of pattern matching, users can handle complex data scenarios with ease.

When done correctly, column pattern matching enhances data accuracy. It ensures consistency across datasets, which is crucial for reliable insights and decision-making in Power BI reports.

4) Leveraging DAX Functions for Recognition

DAX functions play a vital role in Power BI, helping users discover patterns within data. These functions enable the recognition of trends and facilitate deeper insights by manipulating data.

One key area is using DAX to create calculated columns, which allow users to develop new data points from existing datasets.

By using DAX aggregation functions, analysts can summarize data effectively. Functions like SUM, AVERAGE, and COUNT help in aggregating data points to uncover meaningful patterns. This is crucial for identifying overall trends in sales, production, or other metrics.
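In DAX, a measure such as `SUM(Sales[Amount])` (table and column names hypothetical) is evaluated once for each group shown in a visual. The Python sketch below reproduces that grouped result for hypothetical region-level sales. It computes SUM, COUNT, and AVERAGE per region so the three aggregations can be compared side by side.

```python
from collections import defaultdict

# Hypothetical (region, amount) sales rows.
sales = [
    ("East", 120.0), ("East", 80.0),
    ("West", 200.0), ("West", 50.0), ("West", 50.0),
]

totals = defaultdict(float)
counts = defaultdict(int)
for region, amount in sales:
    totals[region] += amount
    counts[region] += 1

# One summary row per region, like a table visual grouped by Region.
summary = {
    region: {
        "SUM": totals[region],
        "COUNT": counts[region],
        "AVERAGE": totals[region] / counts[region],
    }
    for region in totals
}
```

Both regions average 100, but their sums and counts differ. This illustrates why looking at more than one aggregation helps reveal patterns.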

DAX also provides time intelligence functions, which help analyze data across different time periods. These functions help reveal seasonal patterns or changes over time, supporting forecasting and decision-making.

Such capabilities are essential for businesses to plan ahead with confidence.
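As one example, the DAX function SAMEPERIODLASTYEAR is commonly used to compare a period with the same period a year earlier. The Python sketch below mirrors that comparison on a small, hypothetical table of monthly sales keyed by (year, month). It illustrates year-over-year growth and is not a reimplementation of DAX's filter-context behavior.

```python
from typing import Optional

# Hypothetical monthly sales keyed by (year, month).
monthly_sales = {
    (2023, 1): 100.0, (2023, 2): 120.0,
    (2024, 1): 130.0, (2024, 2): 108.0,
}


def same_period_last_year(year: int, month: int) -> Optional[float]:
    """Look up the value for the same month one year earlier, if present."""
    return monthly_sales.get((year - 1, month))


def yoy_growth(year: int, month: int) -> Optional[float]:
    """Year-over-year growth as a fraction; None when no comparison exists."""
    current = monthly_sales.get((year, month))
    previous = same_period_last_year(year, month)
    if current is None or not previous:
        return None
    return (current - previous) / previous
```

January 2024 shows growth of about 30% over January 2023, while February shows a decline of about 10%. Putting both months next to each other is what exposes a seasonal or trend shift.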

For more advanced data manipulation, the DAX ROLLUP function, used inside SUMMARIZE, adds subtotal rows for each level of a grouping. This is particularly useful for multi-level data analysis, where details must be understood at different levels.
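The effect of ROLLUP can be pictured as extra subtotal rows. The following Python sketch computes detail rows, one subtotal per region, and a grand total for hypothetical sales data. It uses `None` to mark a level that has been rolled up, roughly where DAX returns blank values for the rolled-up columns.

```python
from collections import defaultdict

# Hypothetical (region, country, amount) rows.
sales_rows = [
    ("Europe", "France", 10),
    ("Europe", "Germany", 15),
    ("Asia", "Japan", 20),
]


def rollup(rows):
    """Return detail, per-region subtotal, and grand-total rows in one mapping.

    Keys are (region, country); None marks a level that has been rolled up.
    """
    detail = defaultdict(int)
    region_totals = defaultdict(int)
    grand_total = 0
    for region, country, amount in rows:
        detail[(region, country)] += amount
        region_totals[(region, None)] += amount
        grand_total += amount
    result = dict(detail)
    result.update(region_totals)
    result[(None, None)] = grand_total
    return result


result = rollup(sales_rows)
```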

Furthermore, DAX can work across the relationships defined between tables, for example through the RELATED function, which is powerful for pattern recognition. Creating and managing relationships connects data points across tables, revealing insights that are not visible when data is isolated.

5) Optimizing M Code for Efficiency

Efficient use of M code in Power BI can significantly speed up data processing. One practical approach is to minimize the number of steps in the query. Reducing steps helps decrease the complexity of data transformation, leading to faster performance.

Pushing work to the data source also optimizes M code. When transformations can be translated into the source's own query language (query folding), or when a native database query is used, the source does the heavy lifting. This reduces the workload on Power BI and speeds up data retrieval.
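The difference between processing data locally and pushing the work to the source can be shown with Python's built-in `sqlite3` module standing in for a database. Both approaches below compute the same total for a hypothetical `sales` table. The second lets the database filter and aggregate, so only one row comes back. This is an analogy for query folding, not Power Query code.

```python
import sqlite3

# In-memory database standing in for a real source system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("East", 120.0), ("West", 200.0), ("East", 80.0)],
)

# Client-side: pull every row, then filter and aggregate locally.
all_rows = conn.execute("SELECT region, amount FROM sales").fetchall()
local_total = sum(amount for region, amount in all_rows if region == "East")

# Pushed down: the database filters and aggregates, returning a single row.
(pushed_total,) = conn.execute(
    "SELECT SUM(amount) FROM sales WHERE region = ?", ("East",)
).fetchone()

conn.close()
```

With three rows the difference is invisible. With millions, the pushed-down version transfers a single value instead of the whole table.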

Avoiding unnecessary columns and rows is another effective strategy. Filtering and selecting only the needed data can have a big impact on performance. By focusing on relevant data, Power BI processes information more quickly.

Managing data types correctly can optimize efficiency. Ensuring that each column is set to the appropriate data type reduces query execution time. This practice also ensures that the data used is accurate and aligns with intended calculations.

Buffering a table in memory (for example with the M function Table.Buffer) can help when multiple transformations reference the same data. Reading the data into memory only once reduces redundant processing, which helps maintain performance with large datasets.
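The benefit of buffering comes from avoiding repeated reads. In the Python sketch below, a hypothetical `load_source` function counts how often it is called. Computing two results from the unbuffered source reads it twice, while materializing it into a list first reads it only once. In Power Query, buffering also stops later steps from folding back to the source, so where to buffer is a trade-off worth checking.

```python
# Mutable counter so the function can record calls without `global`.
read_count = {"calls": 0}


def load_source():
    """Hypothetical expensive source read that records how often it runs."""
    read_count["calls"] += 1
    return [3, 1, 4, 1, 5, 9, 2, 6]


# Unbuffered: each downstream step evaluates the source again.
unbuffered_max = max(load_source())
unbuffered_min = min(load_source())
unbuffered_reads = read_count["calls"]

# Buffered: read once into memory, then let every step reuse that copy.
read_count["calls"] = 0
buffer = list(load_source())
buffered_max = max(buffer)
buffered_min = min(buffer)
buffered_reads = read_count["calls"]
```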

Finally, using the Power Query Editor can help identify areas for improvement. By reviewing the query steps and ensuring they are streamlined and efficient, users can optimize their M code.

Efficient M code contributes to faster updates and more responsive Power BI dashboards.

Exploring data transformation with Power Query M can provide more insights into this process.

6) Exploring Power BI’s Dataflows

Dataflows in Power BI are a tool for managing and transforming large sets of data. They allow users to prepare data by ingesting it from various sources such as databases, files, and APIs. This process helps streamline the data preparation tasks, ensuring that data is ready for analysis.

A significant feature of Power BI Dataflows is their integration with the Power Platform, offering a centralized solution for data preparation across an organization. This integration enables data reuse and creates a shared understanding among users in an enterprise setting.

One of the key benefits of dataflows is their ability to work with large volumes of data. With the right configuration, they provide a scalable way of handling big data, making them suitable for businesses with extensive data processing needs. Users can also configure dataflows to store their data in their own Azure Data Lake Storage Gen2 account.

In Power BI, dataflows support automation in machine learning processes. Analysts can train and validate machine learning models within the platform using their dataflows as input. This feature simplifies the development of predictive models by offering direct connections between data preparation and machine learning steps.

To make the most of dataflows, it is recommended to adhere to best practices. These include carefully planning dataflow structures and ensuring proper data quality checks. By following these practices, users can maximize efficiency and maintain accuracy in their data operations.

With these capabilities, Power BI’s dataflows are a powerful tool in handling data transformations and preparing data for insightful analysis. For more detailed guidance and best practices, refer to Dataflows best practices.

7) Creating Custom Patterns for Advanced Needs

In Power BI, creating custom patterns for advanced needs helps tailor data processing to specific analytical goals. Users can design these patterns to manage complex datasets or unique transformation requirements. This customization offers flexibility beyond standard procedures, enabling fine-tuned control over data handling practices.

Custom patterns often involve advanced transformations, such as merging different datasets or creating new calculated columns. By designing these patterns, users can streamline data preparation processes, ensuring data is in the optimal format for analysis. This can improve efficiency and accuracy in data reports.

Formula languages such as DAX and M help in constructing and applying these custom patterns. They let data analysts define rules or scripts for specific transformations. For example, users might write functions to clean or reshape data, making it easier to work with in later analysis stages.
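As an illustration of a reusable cleaning pattern, the following Python sketch defines one hypothetical function, `clean_table`, and applies it to two unrelated tables. In Power BI the equivalent would typically be a custom M function invoked from several queries. The specific cleaning rules here (collapsing whitespace and dropping fully blank rows) are assumptions chosen for the example.

```python
def clean_table(rows, text_columns):
    """Hypothetical reusable cleaning pattern.

    Collapses runs of whitespace (and trims the ends) in the listed text
    columns, then drops rows whose values are all empty.
    """
    cleaned = []
    for row in rows:
        new_row = dict(row)
        for column in text_columns:
            value = new_row.get(column)
            if isinstance(value, str):
                new_row[column] = " ".join(value.split())
        if any(v not in (None, "") for v in new_row.values()):
            cleaned.append(new_row)
    return cleaned


# Two hypothetical, unrelated tables that share the same cleanup needs.
customers = [
    {"Name": "  Ada   Lovelace ", "City": "London "},
    {"Name": "", "City": "   "},
]
suppliers = [{"Supplier": " Acme  Corp", "Country": "US"}]

clean_customers = clean_table(customers, ["Name", "City"])
clean_suppliers = clean_table(suppliers, ["Supplier"])
```

Because the rules live in one function, a change to them applies to every table that uses the pattern.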

For professionals focused on data modeling, custom patterns can integrate various data sources seamlessly. Techniques like importing data with Azure Synapse allow for a unified approach when setting up a data model. This ensures that data from different origins can be manipulated uniformly, maintaining consistency across reports.

When addressing complicated datasets, using custom patterns also enhances the ability to save and reuse these transformations. This can significantly cut down on repeated work, as patterns can be applied across multiple projects or datasets. Thus, users gain not only insight but also efficiency as they work with Power BI’s advanced features.

8) Incorporating AI for Enhanced Pattern Detection

Incorporating AI into Power BI can significantly boost pattern detection abilities. AI tools can analyze data efficiently, revealing patterns that may not be immediately obvious to human analysts.

These patterns help businesses predict trends and make informed decisions.

Power BI integrates with AI services to enhance its capabilities. One feature is AI Insights in Power BI Desktop, which can leverage Azure Machine Learning.

This integration allows users to apply machine learning models to their data, improving pattern recognition accuracy.

Through machine learning, AI can sift through vast datasets to identify meaningful patterns, enabling more precise predictions. For example, pattern recognition technology can examine edges, colors, and shapes within images, adding depth to data analysis in computer vision.

Pattern recognition is a critical element in AI as it mimics the human brain’s ability to distinguish intricate patterns. This feature is particularly useful in sectors like finance and healthcare, where predicting outcomes based on data patterns can drive vital decisions.

AI-equipped systems can filter through huge datasets, detect significant trends, and automate decisions.

By connecting AI tools with Power BI, users enhance their data processing and analytical abilities. AI’s computational power provides insights that go beyond traditional methods, offering detailed analysis and increased efficiency.

Incorporating AI ensures businesses are better equipped to handle large data volumes, facilitating seamless handling and comprehension of complex information.

9) Designing User-Friendly Data Models

Designing user-friendly data models in Power BI requires a clear organization of data. It’s important to simplify complex information, making it easier for users to understand and interact with the data.

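Using a star schema is an effective strategy. It places numeric measurements, such as sales amounts, in a central fact table surrounded by descriptive dimension tables, such as products or dates, that relate to it.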
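A small star schema can be sketched in Python to show why the layout helps. The hypothetical `fact_sales` table stores only keys and amounts, and any descriptive attribute from a dimension table can be used to group it. The `total_by` helper is hypothetical and stands in for what a report visual does when it slices a measure by a dimension column.

```python
# Dimension tables: one row per key, holding descriptive attributes.
dim_product = {
    1: {"Product": "Widget", "Category": "Hardware"},
    2: {"Product": "Gadget", "Category": "Hardware"},
    3: {"Product": "Manual", "Category": "Books"},
}
dim_date = {
    20240101: {"Year": 2024, "Month": 1},
    20240201: {"Year": 2024, "Month": 2},
}

# Fact table: one row per sale, holding only keys and numeric measures.
fact_sales = [
    {"ProductKey": 1, "DateKey": 20240101, "Amount": 50.0},
    {"ProductKey": 2, "DateKey": 20240101, "Amount": 30.0},
    {"ProductKey": 3, "DateKey": 20240201, "Amount": 20.0},
]


def total_by(attribute, dim, key_column):
    """Sum fact amounts grouped by an attribute looked up in a dimension."""
    totals = {}
    for row in fact_sales:
        label = dim[row[key_column]][attribute]
        totals[label] = totals.get(label, 0.0) + row["Amount"]
    return totals


by_category = total_by("Category", dim_product, "ProductKey")
by_month = total_by("Month", dim_date, "DateKey")
```

Adding a new way to slice the data only requires a new dimension attribute; the fact table stays unchanged.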

Data should be organized based on the needs of business users. This organization helps users quickly find relevant information, aiding in faster decision-making.

When designing models, consider the user’s perspective, ensuring the model reflects their needs and daily operations.

A well-structured data model enhances performance and usability. Choosing the correct data granularity is crucial. It ensures that reports are responsive and provide detailed insights without overwhelming users with unnecessary details, supporting efficient data retrieval and analysis.

Visual elements in data models enhance understandability. Using clear labels, intuitive hierarchies, and straightforward relationships allows users to easily navigate and interpret the data model.

These practices improve user interaction with the reports and dashboards.

Documentation and training are key to making data models user-friendly. Providing users with guides and tutorials can help them understand how to best use the model.

This increases their confidence and ability to generate valuable insights from the data.

Creating user-friendly models requires ongoing evaluation and updates. Keeping the model aligned with evolving business needs ensures it remains relevant and useful.

Regular feedback from users can guide these improvements, making the model a valuable and effective tool for the organization.

10) Harnessing Advanced Analytics in Power BI

Power BI offers robust tools for advanced analytics, empowering users to gain deeper insights from their data. One of the key features is Quick Insights, which allows users to automatically get insights from their data with a single click.

This feature uses machine learning algorithms to find patterns and trends, helping users uncover hidden insights.

Another powerful tool in Power BI is AI Insights. This feature integrates artificial intelligence into data analysis, enabling users to apply machine learning models to their datasets.

It enhances the ability to make predictions and automate data analysis, which is useful for forecasting future trends and outcomes. This can be especially valuable for businesses aiming for strategic growth.

Power BI also provides the Analyze feature (Explain the increase or decrease), which helps users understand data patterns by explaining changes in the data. When users notice a sudden change, Analyze can break down the fluctuation and suggest potential causes.

This makes it easier to trace back to the root of any unexpected shifts, enhancing decision-making processes.

Time series analysis is another integral part of advanced analytics in Power BI. It allows users to evaluate data trends over a period of time, which is essential for businesses that rely on temporal data to make informed decisions.

By understanding past patterns and predicting future trends, organizations can better prepare for upcoming challenges and opportunities.

Finally, data binning and grouping are valuable techniques in Power BI. These methods help in organizing data into manageable segments, making analysis more effective.

By categorizing data into bins, users can identify outliers and focus on specific areas of interest. This improves the clarity and accuracy of insights, enabling more precise data-driven strategies.
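Power BI's binning groups numeric values into fixed-size ranges. The following Python sketch assumes each value is assigned to the lower edge of its bin (the value floored to a multiple of the bin size). It counts values per bin for a hypothetical list of ages, which makes an isolated high value easy to spot.

```python
import math


def bin_value(value: float, bin_size: float) -> float:
    """Assign a value to the lower edge of its fixed-size bin."""
    return math.floor(value / bin_size) * bin_size


# Hypothetical ages, binned in groups of 10.
ages = [3, 17, 25, 29, 41, 88]
bins = {}
for age in ages:
    edge = bin_value(age, 10)
    bins[edge] = bins.get(edge, 0) + 1
```

Here the single value in the 80 bin stands apart from the rest, which is the kind of outlier binning helps surface.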

Understanding Pattern Recognition

Pattern recognition is key in transforming data formats in Power BI, facilitating smarter data analysis. By identifying patterns, users can uncover meaningful trends and relationships within datasets, enhancing data-driven decision making.

Basics of Pattern Recognition

Pattern recognition involves detecting patterns or regularities in data, which is crucial for analyzing large datasets efficiently. It often uses algorithms to identify recurring themes or trends.

This process begins with input data, which the system processes to classify based on previously identified patterns.

Understanding the basics can improve operations like data categorization and anomaly detection. It helps in simplifying complex data formats into understandable elements.

An important aspect is categorization, which allows users to organize data effectively. Recognizing patterns simplifies decision-making and prioritizes significant data points.

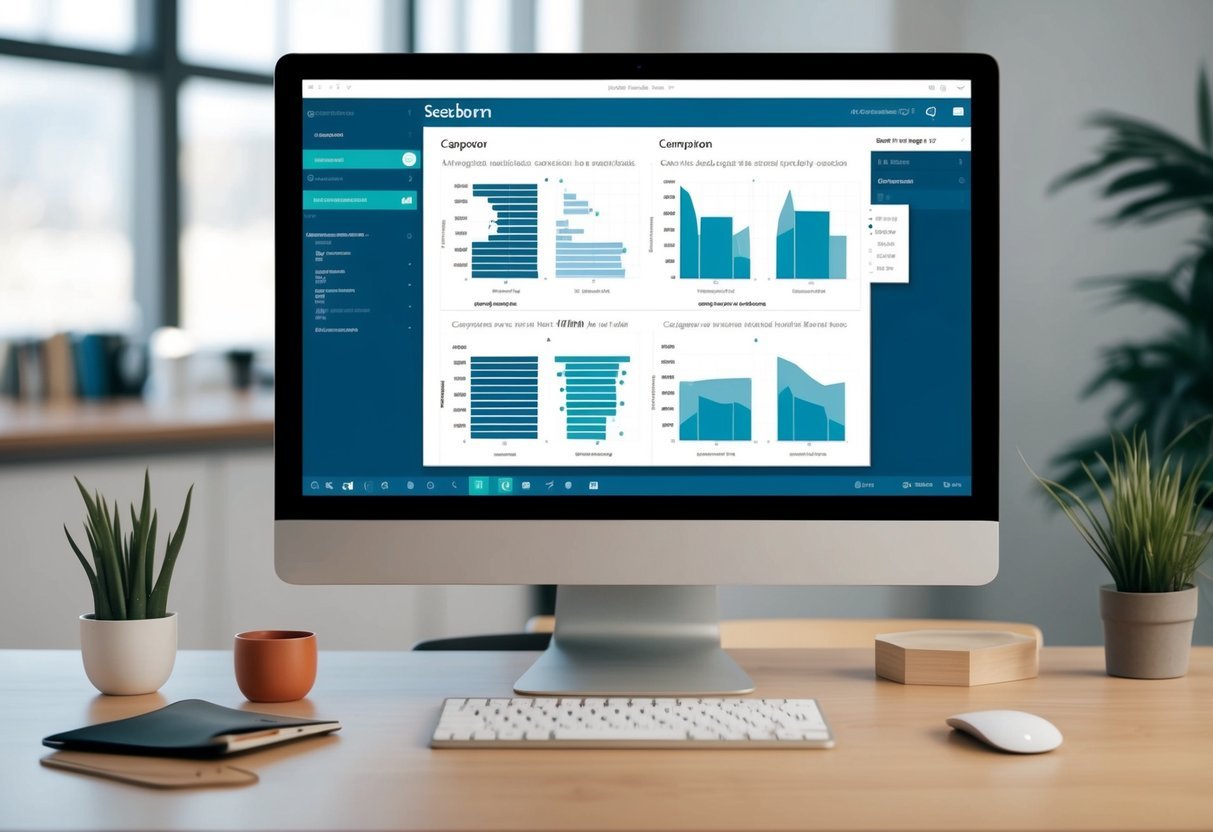

Applications in Power BI

In Power BI, pattern recognition enables users to transform and model data effectively. It helps in identifying key trends and relationships within datasets, which is crucial for creating insightful visualizations and reports.

Power BI’s advanced capabilities, like the ability to showcase patterns, play a vital role here.

Users benefit from tools like Power Query, which simplifies data cleaning and transformation tasks.

This ability to detect and showcase patterns allows for more accurate data analysis and reporting. The focus on visualization ensures patterns are easily communicated, enhancing the overall data storytelling process.

Transforming Data Formats in Power BI

Transforming data formats in Power BI involves various techniques that help users handle diverse data sources. This process can be complex, but with the right strategies, many common challenges can be overcome effectively.

Techniques for Data Transformation

Power BI offers several powerful tools for data transformation. Users can leverage Power Query to clean and format data. Power Query allows users to change data types, split columns, and merge datasets easily.

The Pivot and Unpivot features reshape tables. Pivot turns the values of one column into new columns, while Unpivot turns columns into attribute-value rows, making data suitable for analysis.
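To show what the two operations do to a table's shape, the Python sketch below unpivots a hypothetical month-per-column table into attribute-value rows and then pivots it back. The default output column names `Attribute` and `Value` match the names Power Query's Unpivot Columns uses. The functions themselves are illustrative, not Power Query's implementation.

```python
# Hypothetical "wide" table: one column per month.
wide = [
    {"Product": "Widget", "Jan": 10, "Feb": 12},
    {"Product": "Gadget", "Jan": 5, "Feb": 7},
]


def unpivot(rows, id_columns, attribute_name="Attribute", value_name="Value"):
    """Turn every non-id column into its own (attribute, value) row."""
    long_rows = []
    for row in rows:
        ids = {c: row[c] for c in id_columns}
        for column, value in row.items():
            if column not in id_columns:
                long_rows.append({**ids, attribute_name: column, value_name: value})
    return long_rows


def pivot(rows, id_column, attribute_name="Attribute", value_name="Value"):
    """Turn each distinct attribute back into a column, one row per id."""
    wide_rows = {}
    for row in rows:
        key = row[id_column]
        wide_rows.setdefault(key, {id_column: key})[row[attribute_name]] = row[value_name]
    return list(wide_rows.values())


long_rows = unpivot(wide, ["Product"])
round_trip = pivot(long_rows, "Product")
```

The long form is usually easier to filter and chart by month, while the wide form is easier to read as a report table.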

Another key technique is the use of calculated columns. This feature enables users to create new data dimensions through simple formulas, enhancing the dataset’s depth.

DAX (Data Analysis Expressions) is also a useful tool, providing powerful functions to manipulate data and create new insights.

Challenges and Solutions

Data transformation isn’t without challenges. Some users might encounter issues with inconsistent data formats. A common solution is to standardize data formats across the dataset using Power Query’s format tools, such as date or number formatting options.
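When one column mixes several date formats, a common approach is to try each known format in turn. The Python sketch below does that for a hypothetical list of formats. It assumes slash-separated dates are day-first, which is exactly the kind of ambiguity to confirm with whoever owns the data. In Power Query itself, Change Type > Using Locale handles columns that consistently follow one regional format; truly mixed columns usually need extra logic like this.

```python
from datetime import date, datetime
from typing import Optional

# Hypothetical formats observed in the source. Order matters when a string
# could match more than one; slash-separated dates are assumed day-first.
KNOWN_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%B %d, %Y"]


def standardize_date(text: str) -> Optional[date]:
    """Return the first successful parse, or None if no format matches."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    return None


raw = ["2024-03-15", "15/03/2024", "March 15, 2024", "not a date"]
parsed = [standardize_date(s) for s in raw]
```

Returning None instead of raising keeps unparseable values visible, so they can be reviewed rather than silently dropped.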

Another challenge is dealing with large datasets, which can slow down processing. To address this, users can utilize data reduction techniques like filtering or summarizing data in smaller subsets before transformations.

Power BI’s performance optimization features also help maintain efficiency.

Keeping data up-to-date is tricky, too. By using scheduled refreshes and connecting data directly to live databases, users can ensure their transformations reflect the latest available information.

This approach helps maintain data accuracy and relevance in reports.

Frequently Asked Questions

In Power BI, mastering data transformation techniques is essential for uncovering and showcasing patterns and trends. Users can apply advanced methods to efficiently reshape their data, leveraging tools like Power Query and DAX formulas.

How can advanced data transformation techniques be applied in Power BI?

Advanced techniques in Power BI allow users to streamline their data processing. This includes using Power Query to clean and shape data by removing unnecessary elements and organizing data in a way that reveals insightful patterns.

What are the methods to handle data transformation in Power BI?

Data transformation in Power BI can be handled with Power Query and its M language. These tools help in converting data types, renaming columns, and filtering datasets, which are key to preparing the data for analysis and pattern recognition.

In what ways can Power BI display trends and patterns through visuals?

Power BI provides robust visualizations to display data trends and patterns. Users can take advantage of features that allow them to create dynamic charts and graphs, highlighting key data points and making it easier to identify trends over time. Techniques are shared in community blogs like those on pattern showcasing.

What steps should be followed to reshape and transform data in Power BI?

To reshape and transform data, users can use Power Query to filter, pivot, and aggregate data efficiently. Changing data formats and applying M Code can optimize data models and make complex datasets easier to work with. Understanding these steps is crucial as described in clean data modules.

How can data types in Power BI be changed using DAX formulas?

DAX does not change a column's data type in place. Instead, users create calculated columns or measures that apply conversion functions, such as VALUE to turn text into numbers or DATEVALUE to turn text into dates. This keeps data consistent across reports and improves accuracy in data analysis.

What are best practices for showing trend analysis over time in Power BI?

Best practices for trend analysis in Power BI include optimizing data models and using time-based calculations.

By organizing data chronologically and applying appropriate filters, users can create clear visualizations that demonstrate trends over time.

Visual stories are essential for informed decision-making as outlined in courses about data analysis with Power BI.