Fundamentals of K-Nearest Neighbors

The K-nearest neighbors (K-NN) algorithm is a popular method used in both classification and regression. This algorithm is part of supervised machine learning, which involves learning from labeled data to predict an outcome for new data points.

Understanding K-NN Algorithm

The K-NN algorithm operates by identifying the ‘k’ closest data points, or neighbors, in a dataset. These neighbors are used to determine the classification or value of a new data point. The algorithm is non-parametric, meaning it makes no assumptions about the form of the underlying data distribution.

Fix and Hodges introduced the method in 1951 as a nonparametric approach to pattern classification. The value of ‘k’ affects the model’s accuracy and complexity: a small ‘k’ makes predictions sensitive to noise, while a larger ‘k’ produces smoother decision boundaries. Choosing the right ‘k’ is therefore vital for optimizing the performance of K-NN.

Supervised Machine Learning Basics

Supervised machine learning relies on learning from a training dataset that includes input-output pairs. The K-NN algorithm fits within this framework because it requires a labeled set of data. It learns by example, which allows it to make decisions about unclassified data.

K-NN illustrates how supervised learning algorithms use past data to predict future outcomes. It makes predictions by finding the training examples most similar to the new data point. This simplicity makes it straightforward to apply, but it also means the training data must be representative of the data the model will encounter.

Classification vs. Regression

In K-NN, classification and regression differ in their purpose. Classification aims to predict categorical outcomes. For K-NN classification, the majority class among neighbors determines the class label of new data.

On the other hand, regression focuses on predicting continuous values. In K-NN regression, the average or weighted average of the nearest neighbors’ target values is used as the estimate. Supporting both tasks makes K-NN adaptable to many kinds of prediction problems.
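To make the difference concrete, here is a minimal sketch that assumes the k nearest neighbors have already been found and only shows the final aggregation step. The helper names `aggregate_classification` and `aggregate_regression` are hypothetical, chosen for illustration.

```python
from collections import Counter
from statistics import mean


def aggregate_classification(neighbor_labels):
    """Return the most common label among the neighbors (majority vote)."""
    # most_common(1) returns [(label, count)]; on a tie, Counter keeps the
    # label that was encountered first.
    return Counter(neighbor_labels).most_common(1)[0][0]


def aggregate_regression(neighbor_values):
    """Return the mean of the neighbors' target values."""
    return mean(neighbor_values)


# Labels and target values of the k = 5 nearest neighbors of some query point.
print(aggregate_classification(["cat", "dog", "cat", "cat", "dog"]))  # cat
print(aggregate_regression([2.0, 3.0, 4.0, 5.0, 6.0]))  # 4.0
```

The neighbor search is identical for both tasks. Only this last step differs.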

Preparing the Data Set

Preparing a data set for the K-Nearest Neighbors (k-NN) algorithm involves several steps that make classification accurate and efficient. The process includes cleaning the data, handling missing values, choosing the right features, and normalizing the data for consistency.

Data Preprocessing Steps

Preprocessing is crucial for cleaning the data set before using it for training. This step involves collecting data points from various sources and organizing them into a structured format.

Steps include:

- Removing duplicates: Ensure each data point is unique to prevent bias.

- Cleaning data: Eliminate any irrelevant information that may affect the model.

- Splitting data: Divide into training and testing subsets, typically in a 70-30 ratio, to evaluate performance.

These steps improve the efficiency and accuracy of the classification model by providing a consistent and relevant data set.
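As a rough illustration of the duplicate-removal and splitting steps, here is a sketch using pandas and scikit-learn on a small made-up table. The column names and values are hypothetical, and the 70-30 split follows the ratio mentioned above.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# A toy dataset; row 4 is an exact duplicate of row 0.
df = pd.DataFrame({
    "height": [150, 160, 170, 180, 150, 165, 175, 155, 185, 172, 158],
    "weight": [50, 60, 70, 80, 50, 62, 74, 54, 88, 71, 57],
    "label": ["A", "A", "B", "B", "A", "A", "B", "A", "B", "B", "A"],
})

# Remove duplicate rows so no example is counted twice.
df = df.drop_duplicates().reset_index(drop=True)

X = df[["height", "weight"]]
y = df["label"]

# Hold out 30% of the rows for testing; random_state makes the split repeatable.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)
print(len(df), len(X_train), len(X_test))  # 10 7 3
```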

Feature Selection Techniques

Figuring out which features are important is key to building an effective model. Feature selection reduces the number of input variables to make the classification process faster and more accurate.

Common techniques include:

- Filter Methods: Use statistics to rank features by importance. Techniques like correlation and chi-square tests fall under this category.

- Wrapper Methods: Train a model on different subsets of features and compare performance. Techniques like recursive feature elimination are popular here.

- Embedded Methods: Perform feature selection as part of the model training process. Examples include decision tree algorithms, which select features based on their importance to the model’s accuracy.

Choosing the right features ensures that the model focuses on the most relevant information in the data.
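A filter method can be sketched with scikit-learn’s `SelectKBest` and its chi-square scoring function, here applied to the iris dataset bundled with scikit-learn. Keeping two features is an arbitrary choice made for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, chi2

iris = load_iris()
X, y = iris.data, iris.target

# Filter method: score every feature independently with a chi-square test
# against the class label, then keep the two highest-scoring features.
# chi2 requires non-negative feature values, which holds for iris.
selector = SelectKBest(score_func=chi2, k=2)
X_selected = selector.fit_transform(X, y)

kept = [name for name, keep in zip(iris.feature_names, selector.get_support()) if keep]
print(X_selected.shape)  # (150, 2)
print(kept)
```

Because the scores are computed without training any model, filter methods are cheap. Wrapper methods such as `sklearn.feature_selection.RFE` instead refit a model repeatedly.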

Handling Missing Data

Missing data can lead to inaccurate predictions if not addressed properly. There are various strategies to handle this issue, each depending on the nature and extent of the missing data.

Methods include:

- Deletion: Remove instances with missing values if they form a small portion of the data set.

- Imputation: Replace missing values with meaningful substitutes like the mean, median, or mode of the feature.

- Predictive Modeling: Use other data points and features to predict the missing values. Techniques like regression models or nearest neighbors can be useful here.

Properly managing missing data is essential to maintain the integrity and effectiveness of the data set.
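The three strategies can be compared on a tiny made-up matrix. This sketch uses scikit-learn’s `SimpleImputer` for mean imputation and `KNNImputer` as one example of predictive modeling with nearest neighbors. The data values are hypothetical.

```python
import numpy as np
from sklearn.impute import KNNImputer, SimpleImputer

# A toy feature matrix; the second row is missing its first feature.
X = np.array([
    [1.0, 2.0],
    [np.nan, 3.0],
    [7.0, 6.0],
])

# Deletion: keep only the rows that have no missing values.
complete_rows = X[~np.isnan(X).any(axis=1)]

# Imputation: fill the gap with the column mean, (1 + 7) / 2 = 4.
mean_filled = SimpleImputer(strategy="mean").fit_transform(X)

# Predictive modeling with nearest neighbors: copy the value from the most
# similar row, where similarity is measured on the features that are present.
knn_filled = KNNImputer(n_neighbors=1).fit_transform(X)

print(complete_rows.shape)  # (2, 2)
print(mean_filled[1, 0])    # 4.0
print(knn_filled[1, 0])     # 1.0
```

The row with second feature 3.0 is closer to the row with 2.0 than to the row with 6.0, so the nearest-neighbor imputer borrows 1.0 rather than the mean.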

Normalizing Data

Normalization rescales features to comparable ranges (for min-max scaling, typically 0 to 1) so that all features contribute comparably to the distance calculations used in k-NN.

Key normalization techniques:

- Min-Max Scaling: Rescales features to a range with a minimum of 0 and maximum of 1.

- Z-Score Normalization: Standardizes data by subtracting the mean and dividing by the standard deviation, giving each feature a mean of 0 and a standard deviation of 1.

- Decimal Scaling: Divides values by a power of 10 (moving the decimal point) so that the largest absolute value falls below 1.

Normalization is necessary when features in the data set have different units or scales, ensuring that calculations for k-NN are fair and reliable.
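The three techniques reduce to a few lines of NumPy. This is a minimal sketch on a single made-up feature, and it assumes the feature is not all zeros, which the decimal-scaling step needs.

```python
import numpy as np

x = np.array([10.0, 20.0, 30.0])

# Min-max scaling: (x - min) / (max - min) maps the values onto [0, 1].
min_max = (x - x.min()) / (x.max() - x.min())

# Z-score normalization: subtract the mean, divide by the standard deviation.
# np.std uses the population standard deviation (ddof=0) by default.
z_score = (x - x.mean()) / x.std()

# Decimal scaling: divide by 10**j, with j the smallest integer that makes
# every absolute value strictly less than 1.
j = int(np.floor(np.log10(np.abs(x).max()))) + 1
decimal = x / 10**j

print(min_max)  # [0.  0.5 1. ]
print(z_score)  # approximately [-1.2247  0.  1.2247]
print(decimal)  # [0.1 0.2 0.3]
```

In practice, scikit-learn’s `MinMaxScaler` and `StandardScaler` implement the first two. Fit them on the training split only, so the test data does not leak into the scaling parameters.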

K-NN Algorithm Implementation

The K-Nearest Neighbors (K-NN) algorithm makes predictions by identifying the closest data points. Implementing it involves loading and managing data with programming libraries and setting parameters such as the number of neighbors.

Developing a K-NN Model in Python

Developing a K-NN model in Python requires understanding basic coding and data structures. First, import relevant libraries like numpy for numerical operations and pandas for handling datasets.

Next, load and preprocess data, ensuring any inconsistencies are handled. Then, assign variables for features and labels. After that, split the data into training and test sets.

Use the numpy library to calculate the Euclidean distance between each test point and every training point, take the k closest training points, and assign the majority class among them. Finally, decide the optimal number of neighbors. This step is crucial for accuracy and often involves plotting accuracy against k with matplotlib to find the point where additional neighbors no longer improve results.
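A from-scratch version of these steps might look like the sketch below. The function name `knn_predict` and the two-cluster toy data are hypothetical, and the loop over k stands in for the accuracy-versus-k check described above.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, X_query, k=3):
    """Classify each row of X_query by majority vote of its k nearest training rows."""
    X_train = np.asarray(X_train, dtype=float)
    X_query = np.asarray(X_query, dtype=float)
    predictions = []
    for q in X_query:
        # Euclidean distance from the query point to every training point.
        distances = np.sqrt(((X_train - q) ** 2).sum(axis=1))
        # Indices of the k smallest distances.
        nearest = np.argsort(distances)[:k]
        # Majority vote among the labels of those neighbors.
        votes = Counter(y_train[i] for i in nearest)
        predictions.append(votes.most_common(1)[0][0])
    return np.array(predictions)


X_train = np.array([[1, 1], [1, 2], [2, 1], [6, 6], [6, 7], [7, 6]])
y_train = np.array(["red", "red", "red", "blue", "blue", "blue"])
X_test = np.array([[1.5, 1.5], [6.5, 6.5]])
y_test = np.array(["red", "blue"])

# Compare test accuracy for a few candidate values of k.
for k in (1, 3, 5):
    accuracy = (knn_predict(X_train, y_train, X_test, k=k) == y_test).mean()
    print(k, accuracy)
```

Computing every distance for every query is simple but costs O(n) per prediction, which is why libraries add tree-based indexes for larger datasets.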

Using Scikit-Learn Library

The Scikit-Learn library simplifies implementing the K-NN algorithm because it provides ready-made estimators, preprocessing tools, and evaluation utilities.

Start by importing KNeighborsClassifier from sklearn.neighbors. Prepare your dataset, similar to other machine learning tasks, by cleaning and normalizing data.

Then, create a K-NN model instance using KNeighborsClassifier(n_neighbors=k), choosing k based on cross-validation or domain knowledge.

Fit the model to the training data with the fit method. Then, generate predictions for the test data with the predict method and compare them with the true labels to assess performance.

Scikit-Learn also provides accuracy measures, such as the accuracy_score function and the model’s score method, which help you fine-tune the number of neighbors after reviewing initial results.
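Put together, the workflow might look like this sketch on the iris dataset bundled with scikit-learn. Wrapping the scaler and classifier in a pipeline is a design choice made here, not something the steps above require, and k = 5 is an arbitrary starting value.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Scaling inside the pipeline means the scaler is fit on the training data only.
model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))
model.fit(X_train, y_train)

y_pred = model.predict(X_test)
print(accuracy_score(y_test, y_pred))
# model.score(X_test, y_test) returns the same accuracy.
```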

Distance Metrics in K-NN

In K-Nearest Neighbors (K-NN), choosing the right distance metric is crucial as it affects the accuracy of the model. Different datasets may require different metrics to ensure the most accurate classification.

Euclidean Distance and Its Alternatives

Euclidean distance is the most common measure used in K-NN. It calculates the straight-line distance between two points in a multi-dimensional space. It is suitable for datasets where the underlying data is continuous and has similar scales.

Manhattan distance, also known as L1 distance, sums the absolute differences across dimensions. It is useful for grid-like data, where movement happens along the axes.

Minkowski distance is a generalization of both Euclidean and Manhattan distances. For a parameter \( p \ge 1 \), it is defined as \( d(x, y) = \left( \sum_i |x_i - y_i|^p \right)^{1/p} \). If \( p = 2 \), it becomes Euclidean distance; if \( p = 1 \), it becomes Manhattan distance.

Hamming distance is used for categorical data and counts the number of positions at which two equal-length strings or vectors differ. It is effective when data points are represented as strings or bit arrays.
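The four measures can be written directly in NumPy and plain Python. This is an illustrative sketch. In scikit-learn, the same choice is made through the `metric` and `p` parameters of `KNeighborsClassifier`. Note that `scipy.spatial.distance.hamming` returns the proportion of differing positions rather than the count computed here.

```python
import numpy as np


def euclidean(a, b):
    return np.sqrt(np.sum((np.asarray(a) - np.asarray(b)) ** 2))


def manhattan(a, b):
    return np.sum(np.abs(np.asarray(a) - np.asarray(b)))


def minkowski(a, b, p):
    return np.sum(np.abs(np.asarray(a) - np.asarray(b)) ** p) ** (1 / p)


def hamming(a, b):
    # Number of positions at which two equal-length sequences differ.
    if len(a) != len(b):
        raise ValueError("Hamming distance needs sequences of equal length")
    return sum(x != y for x, y in zip(a, b))


a, b = [0, 0], [3, 4]
print(euclidean(a, b))               # 5.0
print(manhattan(a, b))               # 7
print(minkowski(a, b, p=2))          # 5.0
print(minkowski(a, b, p=1))          # 7.0
print(hamming("karolin", "kathrin")) # 3
```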

Choosing the Right Distance Measure

Selecting a distance measure depends on the nature of the dataset. Euclidean distance is ideal for continuous variables on consistent scales. For categorical data, Hamming distance is usually a better fit, and Manhattan distance can be less sensitive than Euclidean distance to a large difference in a single feature. Variables on different scales should still be normalized first, whatever metric is used.

A study on distance function effects for k-NN classification highlights that the choice of metric can significantly impact the accuracy of the classification. Testing multiple metrics, such as robust distance measures, is recommended to identify the best fit.

In some cases, combining different metrics could also enhance the model’s accuracy. Utilizing cross-validation can help find the optimal distance measure, tailored to specific data characteristics, thereby improving K-NN’s effectiveness.
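Comparing metrics with cross-validation can be sketched as a simple loop. This example assumes the iris dataset bundled with scikit-learn, a fixed k of 5, and three of the metric names that `KNeighborsClassifier` accepts. Which metric wins will depend on the data.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

results = {}
for metric in ("euclidean", "manhattan", "chebyshev"):
    model = make_pipeline(
        StandardScaler(), KNeighborsClassifier(n_neighbors=5, metric=metric)
    )
    # Mean accuracy over 5 cross-validation folds.
    results[metric] = cross_val_score(model, X, y, cv=5).mean()

best_metric = max(results, key=results.get)
print(results)
print("best:", best_metric)
```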

Optimizing the ‘K’ Value

Choosing the right ‘K’ value in K-Nearest Neighbors (KNN) impacts accuracy and performance. Different methods help fine-tune this parameter to enhance predictions.

Cross-Validation for Parameter Tuning

Cross-validation is vital for determining the optimal ‘K’ in KNN. The process involves splitting data into subsets to evaluate model performance. By testing various ‘K’ values across these subsets, one can identify an optimal value that balances bias and variance.

K-fold cross-validation is commonly used: the data is divided into several equal parts, or folds (often 5 or 10; this fold count is unrelated to the ‘K’ in KNN). Each fold serves once as the validation set while the remaining folds form the training set. Averaging the scores across folds helps detect overfitting and gives a more reliable basis for choosing ‘K’.

Effective parameter tuning through cross-validation leads to more generalizable models and improved predictions.
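A sketch of this search follows, assuming the iris dataset bundled with scikit-learn, 5 stratified folds, and odd values of k from 1 to 29 as the candidate range. `GridSearchCV` would automate the same loop.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# 5 folds: each fold serves once as the validation set.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Odd values of k avoid tied votes in two-class problems.
candidate_ks = list(range(1, 31, 2))
mean_scores = []
for k in candidate_ks:
    model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=k))
    mean_scores.append(cross_val_score(model, X, y, cv=cv).mean())

best_k = candidate_ks[int(np.argmax(mean_scores))]
print("best k:", best_k, "mean CV accuracy:", round(max(mean_scores), 3))
```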

The Impact of K on Model Performance

The choice of ‘K’ significantly affects KNN’s model performance. A small ‘K’ may lead to overfitting, capturing noise in the data, while a large ‘K’ may cause underfitting, overlooking important patterns.

Using majority voting, KNN bases its predictions on the most common class among the nearest neighbors. As ‘K’ changes, so does the influence of individual data points on this decision. A sweet spot ensures that minority classes are not overshadowed in majority vote calculations.

Selecting an appropriate ‘K’ can maintain a balance, ensuring the model accurately reflects underlying patterns without being too sensitive to noise. Making informed adjustments to ‘K’ ensures robust and dependable model outcomes.

Avoiding Common Pitfalls

When using the K-Nearest Neighbors (KNN) algorithm, several challenges can arise. These include dealing with outliers and noisy data, managing overfitting and underfitting, and addressing the curse of dimensionality. Handling these issues carefully improves model performance and reliability.

Handling Outliers and Noisy Data

Outliers and noisy data can skew results in KNN classification. It’s crucial to identify and manage these anomalies effectively.

Data preprocessing steps, like removing or correcting outliers and smoothing the data, are essential. For instance, z-scores or the interquartile range (IQR) can help identify outliers.
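The IQR approach can be sketched with NumPy using the common 1.5 × IQR fences, one widely used convention. The sample values are made up.

```python
import numpy as np

values = np.array([10, 12, 11, 13, 12, 100])

# Quartiles and interquartile range.
q1, q3 = np.percentile(values, [25, 75])
iqr = q3 - q1

# Points more than 1.5 * IQR outside the quartiles are flagged as outliers.
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr

outliers = values[(values < lower) | (values > upper)]
print(q1, q3, iqr)  # 11.25 12.75 1.5
print(outliers)     # [100]
```

With only six points, a z-score rule with a threshold of 3 would not flag 100, because the outlier itself inflates the standard deviation. The quartiles are less affected by that.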

Noise in data can affect the distance calculations in KNN, leading to misclassification. Implementing techniques like data smoothing and error correction can enhance results. Consider using robust algorithms or transforming the features to reduce noise impact.

Overfitting and Underfitting

Overfitting occurs when a model performs well on training data but poorly on unseen data. This can happen when K in KNN is too low, causing the model to capture noise. To counteract this, increase the K value to allow more neighbors to influence the decision.

Underfitting means the model is too simple to capture patterns in the data. This happens when K is too high, which biases predictions toward the overall majority. Lowering K makes the model sensitive enough to reflect data trends. Cross-validation helps find the K value that balances bias and variance.
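Both failure modes show up when you compare training and test accuracy. The sketch below uses synthetic data from `make_classification` with 10% of labels flipped as noise. The sizes and seeds are arbitrary. With k = 1, each training point is its own nearest neighbor, so training accuracy is perfect. With k equal to the whole training set, every query gets the same neighbors and therefore the same prediction.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic data with 10% of labels flipped at random to act as noise.
X, y = make_classification(
    n_samples=400, n_features=5, n_informative=3, flip_y=0.1, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# k=1 tends to overfit; k=len(X_train) always predicts the majority class.
for k in (1, 15, len(X_train)):
    model = KNeighborsClassifier(n_neighbors=k).fit(X_train, y_train)
    print(
        f"k={k:3d}  train={model.score(X_train, y_train):.2f}  "
        f"test={model.score(X_test, y_test):.2f}"
    )
```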

The Curse of Dimensionality

The curse of dimensionality refers to the challenges that arise as the number of features increases. In KNN, this can lead to a significant decrease in model performance because distance measures become less meaningful in high dimensions.
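One way to see why distances lose meaning is to measure how much the nearest and farthest points differ as dimensions grow. This sketch draws uniform random points in a unit hypercube. The point count and the ratio used, (max − min) / min, are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)


def relative_contrast(n_dims, n_points=500):
    """(max - min) / min of the distances from one random query to random points."""
    points = rng.random((n_points, n_dims))
    query = rng.random(n_dims)
    distances = np.linalg.norm(points - query, axis=1)
    return (distances.max() - distances.min()) / distances.min()


for d in (2, 10, 100, 1000):
    print(d, round(relative_contrast(d), 3))
```

As the dimension rises, the contrast shrinks toward zero: the nearest neighbor is barely closer than the farthest point, so "nearest" carries little information.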

Dimensionality reduction techniques like Principal Component Analysis (PCA) can help alleviate this problem by reducing the feature space.

It’s also important to perform feature selection to include only the most relevant features. By reducing the number of irrelevant dimensions, the model’s performance can be improved. This also avoids unnecessary complexity and ensures faster computation.
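A PCA step can be placed in front of K-NN in a scikit-learn pipeline. This sketch uses the bundled digits dataset (64 pixel features per image). Keeping 20 components is an arbitrary illustration, not a recommended value, so compare the two scores on your own data.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline

# 8x8 digit images: 64 pixel features per sample, all on the same scale.
X, y = load_digits(return_X_y=True)

knn_raw = KNeighborsClassifier(n_neighbors=5)
knn_pca = make_pipeline(PCA(n_components=20), KNeighborsClassifier(n_neighbors=5))

score_raw = cross_val_score(knn_raw, X, y, cv=5).mean()
score_pca = cross_val_score(knn_pca, X, y, cv=5).mean()

print("64 features:", round(score_raw, 3))
print("20 PCA components:", round(score_pca, 3))
```

Fewer dimensions also means each distance computation is cheaper, which matters because K-NN computes distances at prediction time.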

Analyzing K-NN Results

K-Nearest Neighbors (K-NN) is a simple yet powerful classification tool. Understanding how it draws decision boundaries and measures accuracy can enhance its effectiveness in predictions.

Interpreting Decision Boundaries

Decision boundaries in K-NN are influenced by the chosen value of k, determining how the algorithm classifies data points. A smaller k results in more complex and flexible boundaries, potentially capturing subtle patterns but also increasing the risk of overfitting.

Conversely, a larger k tends to create smoother boundaries, better generalizing data but possibly missing finer patterns.

Visualizing these boundaries is valuable, especially for two-dimensional data. Plotting them helps identify misclassified points and regions where model performance is weak.

The boundaries affect the prediction of unknown data points, determining which class they belong to based on the nearest neighbors.

Measuring Accuracy and Probability

Accuracy evaluation in K-NN involves comparing predicted class labels with actual labels. A confusion matrix can outline true positives, false positives, true negatives, and false negatives, providing a comprehensive look at performance.

The calculation of metrics like precision, recall, and F1-score further refines this assessment.
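These metrics are available in `sklearn.metrics`. In the sketch below, the labels are made up and stand in for a K-NN model’s test-set predictions.

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    precision_score,
    recall_score,
)

y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]

# Rows are actual classes, columns are predicted classes: [[TN, FP], [FN, TP]].
print(confusion_matrix(y_true, y_pred))  # TN=1, FP=1, FN=1, TP=3
print(accuracy_score(y_true, y_pred))    # 0.6666666666666666
print(precision_score(y_true, y_pred))   # 0.75
print(recall_score(y_true, y_pred))      # 0.75
print(f1_score(y_true, y_pred))          # 0.75
```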

Probability estimation in K-NN is based on the fraction of the k nearest neighbors that belong to each class. For example, if 4 of 5 neighbors belong to class A, the estimated probability of class A is 0.8. This probability gives an idea of the confidence in a prediction.

The basic algorithm outputs a hard class label, but weighting each neighbor’s vote by its distance refines these estimates, giving closer neighbors more say in the likelihood of each class.
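scikit-learn exposes these estimates through `predict_proba`. The sketch below uses one-dimensional toy data and compares plain vote fractions with `weights="distance"`. The values are hypothetical.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

X_train = np.array([[0.0], [1.0], [2.0], [10.0], [11.0]])
y_train = np.array([0, 0, 1, 1, 1])
query = np.array([[1.2]])

uniform = KNeighborsClassifier(n_neighbors=3).fit(X_train, y_train)
weighted = KNeighborsClassifier(n_neighbors=3, weights="distance").fit(X_train, y_train)

# The 3 nearest neighbors of 1.2 are 1.0 (class 0), 2.0 (class 1) and 0.0 (class 0).
print(uniform.predict_proba(query))   # approximately [[0.667 0.333]]
print(weighted.predict_proba(query))  # class 0 gets more weight: 1.0 is very close
```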

Practical Applications of K-NN

K-Nearest Neighbors (K-NN) is a versatile algorithm used in many real-world applications. Its ability to adapt to different data types makes it valuable in areas like recommender systems, finance, cyber security, and data mining.

Recommender Systems and Finance

In recommender systems, K-NN is crucial for predicting preferences by analyzing user behavior and item similarities. Retailers and streaming services use recommendation engines to suggest products and content. These engines compare customer profiles to identify items a user might like.
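A user-based version of this idea can be sketched with scikit-learn’s `NearestNeighbors` and cosine distance. The rating matrix is hypothetical, and treating unrated items as 0 is a simplifying assumption that real recommenders usually handle more carefully.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

# Rows are users, columns are items; entries are ratings (0 = not rated).
ratings = np.array([
    [5, 4, 0, 1],
    [4, 5, 0, 1],
    [0, 1, 5, 4],
])

# Cosine distance compares the direction of the rating vectors and
# ignores their overall magnitude.
model = NearestNeighbors(n_neighbors=2, metric="cosine").fit(ratings)
distances, indices = model.kneighbors(ratings[[0]])

# The first neighbor returned is user 0 itself, so take the second.
most_similar_user = indices[0, 1]
print(most_similar_user)  # 1
```

Items that user 1 rated highly but user 0 has not seen would then be candidate recommendations for user 0.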

In finance, K-NN assists in risk assessment and stock price predictions. It evaluates historical data to identify similar market conditions or investor behaviors.

This allows investors to make decisions informed by past patterns.

Intrusion Detection in Cyber Security

K-NN plays a significant role in intrusion detection to protect computer networks. By classifying network activities as normal or suspicious, it helps identify threats early.

The algorithm scans data traffic, comparing it to known intrusion patterns to spot anomalies.

This method is effective in recognizing both known and unknown threats. It adapts easily to changes in network behavior, making it a preferred choice for organizations to safeguard sensitive information and maintain system integrity as cyber threats evolve.

Pattern Recognition in Data Mining

K-NN is widely used in pattern recognition within data mining. It assigns data points to categories based on their similarity to labeled examples, supporting tasks like image classification and handwriting recognition.

By evaluating the proximity of data points, K-NN identifies patterns that might otherwise be missed.

This approach is beneficial for uncovering trends in large datasets, helping businesses and researchers to comprehend complex data structures. It’s highly valued in fields like healthcare and marketing, where understanding patterns quickly and accurately can lead to critical insights and innovations.

The Role of K-NN in Data Science

K-nearest neighbors (K-NN) is a simple yet powerful classification model used in data science. Learn about its role among machine learning models and how it offers a practical way for hands-on learning for aspiring data scientists.

K-NN’s Place Among Machine Learning Models

K-NN stands out as one of the simplest machine learning models, relying on distance metrics to classify data. It classifies data points based on the majority label of their nearest neighbors.

Despite its simplicity, K-NN is effective for both classification and regression tasks. It is particularly useful in scenarios where the relationships between data points are not easily defined by mathematical equations.

In data science, K-NN is best suited to small or moderately sized datasets, because it stores the entire training dataset in memory and compares each new point against it at prediction time. Its effectiveness depends on the choice of k, the number of neighbors considered, and on the distance metric used.

For further reading on K-NN’s applications, explore the review of k-NN classification.

Hands-On Learning for Aspiring Data Scientists

K-NN’s straightforward implementation makes it ideal for hands-on learning. Aspiring data scientists can easily understand its mechanism, from loading data to classifying it based on proximity.

By engaging with K-NN, learners develop a fundamental understanding of pattern recognition and decision-making processes.

Practical use of K-NN includes medical data mining, where classification of patient data helps in diagnosis. This real-world application bridges learning and practical execution, allowing students to see immediate results.

More about its application can be found in the context of medical data mining in Kenya. This approach fosters a deeper comprehension of both theoretical and application-based aspects of data science.

Advanced Topics in K-NN

K-Nearest Neighbors (K-NN) is not only used for classification but also adapted for various advanced tasks. Methods like weighted K-NN enhance prediction accuracy, and adaptations make K-NN suitable for regression.

Weighted K-NN for Enhanced Predictions

In traditional K-NN, each of the k nearest data points contributes equally to predicting a new data point’s classification. Weighted K-NN improves this by assigning different weights to neighbors based on their distance from the query point.

The closer a neighbor, the higher the weight assigned. This method increases prediction accuracy by prioritizing neighbors that are more similar to the query point.

A common weighting function is inverse distance weighting, where each neighbor’s vote is multiplied by 1/d, with d its distance to the query point. A closer neighbor therefore has a larger impact on the outcome.

This approach allows for more nuanced classification and is particularly effective in datasets where points are unevenly distributed.
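A minimal sketch of an inverse-distance-weighted vote follows. The function name `weighted_vote` is hypothetical, and the small epsilon is an assumption added to avoid division by zero. In scikit-learn, the equivalent option is `weights="distance"` on `KNeighborsClassifier`.

```python
from collections import defaultdict


def weighted_vote(neighbors):
    """neighbors: list of (distance, label) pairs for the k nearest points."""
    scores = defaultdict(float)
    for distance, label in neighbors:
        # Inverse distance weighting; a tiny epsilon avoids division by zero
        # when a neighbor coincides with the query point.
        scores[label] += 1.0 / (distance + 1e-9)
    return max(scores, key=scores.get)


neighbors = [(1.0, "A"), (2.0, "B"), (4.0, "B")]

# A plain majority vote would pick "B" (two votes to one), but with weights
# 1/1, 1/2 and 1/4 the single close "A" neighbor wins (1.0 > 0.75).
print(weighted_vote(neighbors))  # A
```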

Adapting K-NN for Regression Tasks

While K-NN is typically associated with classification, it can be adapted for regression tasks. In regression, the goal is to predict a continuous target value rather than a class label.

K-NN for regression calculates a prediction value by taking an average of the target values from the k nearest neighbors.

This adaptation requires careful selection of k, as it can significantly impact the prediction accuracy. Additionally, employing a weighted approach, like in weighted K-NN, where closer neighbors have more influence, can refine predictions.
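scikit-learn’s `KNeighborsRegressor` supports both the plain average and inverse-distance weighting. The sketch below uses toy one-dimensional data chosen so the arithmetic is easy to follow.

```python
import numpy as np
from sklearn.neighbors import KNeighborsRegressor

X_train = np.array([[0.0], [1.0], [2.0], [3.0]])
y_train = np.array([0.0, 10.0, 20.0, 30.0])
query = np.array([[1.4]])

# Plain average of the 2 nearest targets: (10 + 20) / 2 = 15.
uniform = KNeighborsRegressor(n_neighbors=2).fit(X_train, y_train)

# Inverse-distance weighting: weights 1/0.4 and 1/0.6 pull the estimate toward 10.
weighted = KNeighborsRegressor(n_neighbors=2, weights="distance").fit(X_train, y_train)

print(uniform.predict(query))   # [15.]
print(weighted.predict(query))  # approximately [14.]
```

The weighted estimate, (2.5 × 10 + 1.667 × 20) / 4.167 ≈ 14, reflects that the query lies closer to the point with target 10.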

Implementing these strategies allows K-NN to handle regression tasks effectively, expanding its usability in different data science applications.

Performance and Scalability

Understanding how K-Nearest Neighbors (K-NN) performs and scales is vital for tackling big data challenges. Key factors include algorithm efficiency for large datasets and optimization methods like approximate nearest neighbors.

Efficient K-NN Algorithms for Big Data

Efficient algorithms are essential when using K-NN with large datasets. K-NN, known for its simplicity, faces challenges with scalability due to the need to compare each new data point with the entirety of the training dataset.

In big data contexts, improvements in algorithm design help tackle these issues.

Optimizations can include parallel processing and distributed computing. For instance, systems like Panda provide an extreme-scale parallel implementation.

Techniques such as k-d trees or ball trees also help by reducing the number of comparisons necessary, thus increasing speed.
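scikit-learn ships both structures as `KDTree` and `BallTree`. They can also be selected with `algorithm="kd_tree"` or `algorithm="ball_tree"` on `KNeighborsClassifier`. This sketch builds a k-d tree over random 3-D points and checks that it returns the same neighbors as a brute-force search. The sizes and `leaf_size` are arbitrary.

```python
import numpy as np
from sklearn.neighbors import KDTree

rng = np.random.default_rng(42)
X = rng.random((10_000, 3))
queries = rng.random((5, 3))

# Build the tree once; each query then avoids scanning all 10,000 points.
tree = KDTree(X, leaf_size=40)
dist_tree, idx_tree = tree.query(queries, k=5)

# Brute-force reference: compute every distance and sort.
all_dists = np.linalg.norm(X[None, :, :] - queries[:, None, :], axis=2)
idx_brute = np.argsort(all_dists, axis=1)[:, :5]

print(np.array_equal(idx_tree, idx_brute))  # True
```

Tree indexes work best in low to moderate dimensions. In high dimensions, their pruning becomes less effective, which is one motivation for approximate methods.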

Optimizing with Approximate Nearest Neighbors

Approximate Nearest Neighbors (ANN) is a strategy employed to enhance the performance of K-NN in large-scale applications. It focuses on increasing speed by trading off some accuracy for much faster query response times.

Techniques like locality-sensitive hashing can efficiently determine similar data samples in high-dimensional spaces.

These algorithms trade a small loss of accuracy for large gains in scalability and processing time.
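To show the idea behind locality-sensitive hashing, here is a minimal sketch of one classic variant, random-hyperplane hashing for cosine similarity, written in NumPy. The class name `HyperplaneLSH` and its parameters are hypothetical. A single hash table like this can miss true neighbors that fall into other buckets. Practical systems typically use several tables to reduce such misses.

```python
from collections import defaultdict

import numpy as np


class HyperplaneLSH:
    """Minimal random-hyperplane LSH index for approximate cosine neighbors."""

    def __init__(self, dim, n_planes=6, seed=0):
        # Each random hyperplane contributes one bit of the hash.
        self.planes = np.random.default_rng(seed).normal(size=(n_planes, dim))
        self.buckets = defaultdict(list)
        self.data = None

    def _hash(self, v):
        # Bit i is 1 when v lies on the positive side of hyperplane i.
        return tuple((self.planes @ v > 0).astype(int))

    def fit(self, data):
        self.data = np.asarray(data, dtype=float)
        for i, v in enumerate(self.data):
            self.buckets[self._hash(v)].append(i)
        return self

    def query(self, v, k=3):
        # Only points in the same bucket are compared exactly, which is
        # where both the speed-up and the possible loss of accuracy come from.
        candidates = self.buckets.get(self._hash(v), [])
        if not candidates:
            return []
        cand = self.data[candidates]
        sims = cand @ v / (np.linalg.norm(cand, axis=1) * np.linalg.norm(v))
        order = np.argsort(-sims)[:k]
        return [candidates[j] for j in order]


data = np.random.default_rng(1).normal(size=(1000, 16))
index = HyperplaneLSH(dim=16, n_planes=6).fit(data)
print(index.query(data[42], k=3))  # point 42 is its own closest match
```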

This approach is particularly useful for machine learning tasks that require rapid classification. Implementations like FML-kNN achieve scalability without drastically compromising accuracy, making them practical for real-world big data applications.

Frequently Asked Questions

K-nearest neighbor (KNN) is a simple yet effective machine learning method for classification. It works by examining the closest data points to a query point and deciding its class based on these neighbors.

How does the k-nearest neighbor algorithm classify new data points?

The KNN algorithm classifies new data points by looking at the closest ‘k’ neighbors in the training set. It assigns the most common class among these neighbors to the new data point.

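With an even ‘k’, ties can occur. If four neighbors are considered and two belong to class A while the other two belong to class B, the point must be assigned by a tie-breaking rule, such as choosing randomly, favoring the closest neighbor, or weighting votes by distance. Using an odd ‘k’ avoids ties in two-class problems.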

What steps are involved in preparing data for a KNN classifier?

Data preparation involves several steps. First, it’s important to handle missing values and outliers.

Next, features should be normalized or standardized to ensure that the algorithm accurately assesses distances between data points.

Finally, preparing the data involves dividing it into training and testing sets.

How do you choose the optimal value of ‘k’ for KNN?

Choosing the best value for ‘k’ is crucial for KNN performance. This is often done using a process like cross-validation.

A smaller ‘k’ may be more sensitive to noise, while a larger ‘k’ can smooth the classification boundaries. A good practice is to try different ‘k’ values and select the one with the best accuracy on a validation set.

What are the common distance measures used in KNN for assessing similarity?

KNN often uses distance measures to determine how similar or different data points are. Common measures include Euclidean distance, which calculates the straight-line distance between points, and Manhattan distance, which sums the absolute differences along each dimension.

Cosine similarity is sometimes used when the data is sparse or represents frequency counts.

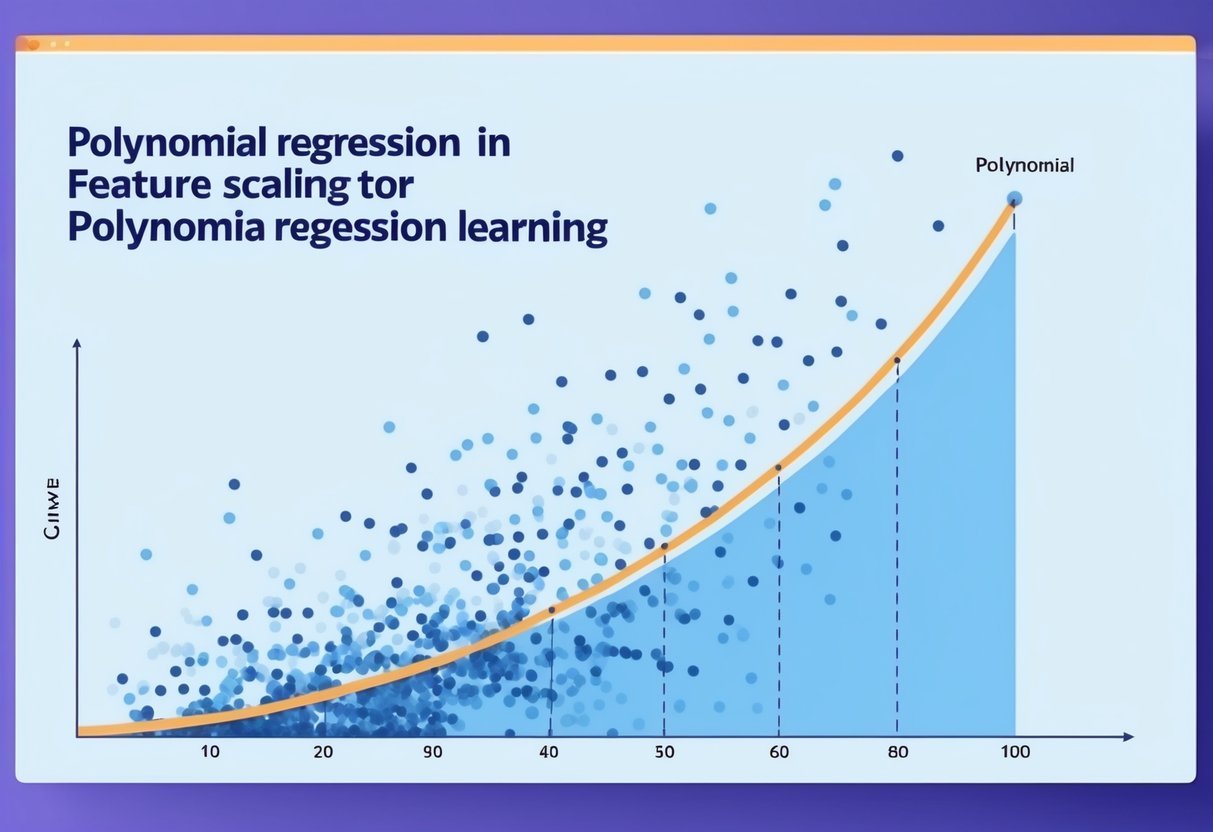

How does feature scaling impact the performance of a KNN classifier?

Feature scaling is critical for KNN because the algorithm relies on distance calculations. Without scaling, features with larger ranges can dominate distance computations, leading to biased results.

Scaling ensures that all features contribute comparably to the distance measure, which usually improves accuracy.

What are the advantages and limitations of using a KNN algorithm for classification tasks?

KNN is simple and intuitive. It requires no assumptions about data distribution and adapts well to different problems. However, it can be computationally expensive with large datasets. This is due to the need to compute distances for each prediction. Additionally, it may be sensitive to irrelevant or redundant features, making accurate feature selection important.