Foundations of T-SQL

T-SQL, or Transact-SQL, extends SQL by adding programming constructs. It’s crucial for managing databases on Microsoft SQL Server.

Key aspects include understanding its syntax and how it compares with standard SQL. This helps in making effective database queries and operations.

Introduction to SQL and T-SQL

SQL, or Structured Query Language, is the standard language for managing and manipulating databases. It allows users to create, update, delete, and query data.

In contrast, T-SQL builds upon SQL by providing additional features such as procedural programming and functions. These are essential for complex database operations.

While SQL is used across various database systems, T-SQL is Microsoft's dialect, used by SQL Server and the Azure SQL services. It adds procedural features such as variables, control-of-flow statements, and structured error handling.

Understanding these distinctions is key for database administrators and developers who work in Microsoft environments. By enhancing SQL, T-SQL allows for more efficient and powerful database management.

Understanding Transact-SQL Syntax

Transact-SQL (T-SQL) syntax closely resembles standard SQL but includes extensions that add power and flexibility. Basic commands like SELECT, INSERT, UPDATE, and DELETE are central to both SQL and T-SQL. These are used to perform common operations on databases.

T-SQL includes control-of-flow language such as BEGIN...END, IF...ELSE, and WHILE loops, which ordinary SQL queries do not provide. It also supports structured error handling with TRY...CATCH, which combines with explicit transactions (BEGIN TRANSACTION, COMMIT, ROLLBACK) to make it suitable for robust applications.

Mastery of T-SQL syntax enables developers to write efficient queries and handle complex business logic directly within the database.
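The control-of-flow and error-handling constructs described above can be sketched in a short batch. The variable names and the loop bound are hypothetical, chosen only to illustrate the syntax.

```sql
-- Hypothetical counter and limit, used only to show control-of-flow syntax.
DECLARE @counter INT = 1;
DECLARE @limit INT = 3;

WHILE @counter <= @limit
BEGIN
    IF @counter % 2 = 0
        PRINT CONCAT('Iteration ', @counter, ' is even');
    ELSE
        PRINT CONCAT('Iteration ', @counter, ' is odd');

    SET @counter += 1;
END;

-- TRY...CATCH: under default settings the division by zero raises an error,
-- and control moves to the CATCH block instead of aborting the batch.
BEGIN TRY
    SELECT 1 / 0 AS result;
END TRY
BEGIN CATCH
    SELECT ERROR_NUMBER() AS error_number,
           ERROR_MESSAGE() AS error_message;
END CATCH;
```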

Database and Table Operations

Understanding how to perform database and table operations in SQL is crucial for managing and manipulating data efficiently. These operations include selecting specific data and inserting new data into tables, allowing users to maintain and update their SQL databases effectively.

Basic Select Commands

The SELECT command is fundamental in SQL for retrieving data from a database. It helps users specify the columns to display from one or more tables. When combined with conditions, it fine-tunes data retrieval.

For example, using SELECT * FROM table_name fetches all columns from a specified table. This flexibility is further enhanced by conditions with the WHERE clause to filter records.

Using ORDER BY, data is sorted in ascending or descending order. Advanced options like JOIN connect multiple tables, providing detailed insights.
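As a minimal sketch of these clauses working together, the batch below filters and sorts a small table variable. The @products table and its rows are made up for illustration.

```sql
-- Hypothetical sample data held in a table variable.
DECLARE @products TABLE (
    product_id   INT PRIMARY KEY,
    product_name VARCHAR(50) NOT NULL,
    price        DECIMAL(10, 2) NOT NULL
);

INSERT INTO @products (product_id, product_name, price)
VALUES (1, 'Keyboard', 45.00),
       (2, 'Monitor', 180.00),
       (3, 'Mouse', 20.00);

-- Keep only products above 25.00 and list the most expensive first.
SELECT product_name, price
FROM @products
WHERE price > 25.00
ORDER BY price DESC;
```

With this sample data the query returns Monitor followed by Keyboard.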

When using SQL Server Management Studio, the query editor simplifies writing and executing SELECT queries. These tools streamline querying, enhancing data management efficiency in large databases.

Inserting Data with ‘Insert Into’

The INSERT INTO command is used to add new rows to a table. Users specify the table name, the columns to populate, and the corresponding values.

A basic syntax is INSERT INTO table_name (column1, column2) VALUES (value1, value2). If the column list is omitted, a value must be supplied for every insertable column, in the order the columns were defined, so listing the columns explicitly is safer.

SQL Server Management Studio aids in executing these insertions, providing an intuitive environment for new data entries.

To add several rows at once, list multiple row value sets after VALUES, separated by commas. To copy rows from another table, combine INSERT INTO with SELECT, which is an efficient way to transfer data between tables.
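The three forms of INSERT can be sketched as follows. Both table variables and all of the values are hypothetical.

```sql
-- Hypothetical source and destination tables.
DECLARE @customers TABLE (
    customer_id   INT PRIMARY KEY,
    customer_name VARCHAR(50) NOT NULL,
    city          VARCHAR(50) NULL
);
DECLARE @customers_archive TABLE (
    customer_id   INT PRIMARY KEY,
    customer_name VARCHAR(50) NOT NULL,
    city          VARCHAR(50) NULL
);

-- Single row with an explicit column list.
INSERT INTO @customers (customer_id, customer_name, city)
VALUES (1, 'Ana', 'Lisbon');

-- Several rows in one statement.
INSERT INTO @customers (customer_id, customer_name, city)
VALUES (2, 'Ben', 'Oslo'),
       (3, 'Chloe', NULL);

-- INSERT ... SELECT copies the rows that have a city into the archive.
INSERT INTO @customers_archive (customer_id, customer_name, city)
SELECT customer_id, customer_name, city
FROM @customers
WHERE city IS NOT NULL;

SELECT customer_id, customer_name, city
FROM @customers_archive;
```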

Listing columns explicitly keeps INSERT statements working when a table later gains columns, and constraints defined on the table reject rows that would violate them, which protects the integrity of new data entries.

Learning Select Statements

Understanding select statements is crucial for managing data in databases. They allow retrieval of specific records and information, offering flexibility and control over data queries.

Writing Effective Select Statements

Creating effective select statements involves key components such as select, from, where, and other clauses. The basic structure starts with the SELECT keyword, followed by columns or expressions needed. The FROM keyword specifies the table containing the data.

Columns can be named individually, or * can be used to return all of them. Selecting only the columns that are needed usually gives better performance and makes the query's intent clearer.

Use the WHERE clause for filtering results based on specific conditions. Aggregations (like SUM or COUNT) and grouping can further refine results.

Understanding and applying these can optimize query performance and readability.

Selecting From Multiple Tables

Combining data from multiple tables requires the use of joins. Common join types include INNER JOIN, LEFT JOIN, RIGHT JOIN, and FULL JOIN. Each type determines how records from two tables are matched.

An inner join returns records with matching values in both tables. A left join keeps all records from the first table and matched records from the second, filling missing parts with NULL.

Choosing the right join type depends on the desired outcome. Use aliases to simplify queries, especially when tables have similar column names.

Applying conditions in the ON clause ensures precise data retrieval, enabling more complex data manipulation and integration.
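A short sketch of a join that uses aliases and an ON condition is shown below. The @customers and @orders tables are hypothetical sample data.

```sql
-- Hypothetical sample tables.
DECLARE @customers TABLE (customer_id INT PRIMARY KEY, customer_name VARCHAR(50) NOT NULL);
DECLARE @orders TABLE (order_id INT PRIMARY KEY, customer_id INT NOT NULL, amount DECIMAL(10, 2) NOT NULL);

INSERT INTO @customers (customer_id, customer_name)
VALUES (1, 'Ana'), (2, 'Ben');

INSERT INTO @orders (order_id, customer_id, amount)
VALUES (10, 1, 25.00);

-- Aliases c and o shorten the column references.
-- The LEFT JOIN keeps Ben even though he has no order; his order columns are NULL.
SELECT c.customer_name, o.order_id, o.amount
FROM @customers AS c
LEFT JOIN @orders AS o
    ON o.customer_id = c.customer_id;
```

Replacing LEFT JOIN with INNER JOIN in this sketch would drop the row for Ben, because no order matches him.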

Advanced Query Techniques

Mastering advanced query techniques in T-SQL involves understanding how to effectively use subqueries and join operations. These methods allow users to combine, compare, and manipulate tables to extract meaningful data from a database.

Utilizing Subqueries

Subqueries are queries nested inside other queries. They allow data to be used from one query to help form the results of another.

These are particularly helpful when data needs to be fetched from a table based on conditions that depend on data from another table.

Subqueries can appear in different clauses such as SELECT, FROM, and WHERE. For instance, filtering data based on conditions satisfied by another set of results is a common use.

This technique enhances query flexibility by allowing complex searches within a table’s results.

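Subqueries can be scalar, returning a single value, or table-valued, returning a set of rows for use with IN, EXISTS, or as a derived table in the FROM clause. Standard SQL also defines row subqueries that return a single row, but T-SQL does not support comparing against them directly. Subqueries can also be correlated, meaning they refer to columns from the outer query, which makes them more dynamic but sometimes less performant.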
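The subquery kinds described above can be illustrated with a small example. The @employees and @departments tables and their contents are assumptions made for this sketch.

```sql
-- Hypothetical sample tables.
DECLARE @departments TABLE (department_id INT PRIMARY KEY, department_name VARCHAR(50) NOT NULL);
DECLARE @employees TABLE (employee_id INT PRIMARY KEY, department_id INT NOT NULL, salary DECIMAL(10, 2) NOT NULL);

INSERT INTO @departments (department_id, department_name)
VALUES (1, 'Sales'), (2, 'Support');

INSERT INTO @employees (employee_id, department_id, salary)
VALUES (1, 1, 4000.00), (2, 1, 6000.00), (3, 2, 3000.00), (4, 2, 3500.00);

-- Scalar subquery: employees paid above the overall average.
SELECT employee_id, salary
FROM @employees
WHERE salary > (SELECT AVG(salary) FROM @employees);

-- Subquery returning a set of rows, used with IN.
SELECT employee_id
FROM @employees
WHERE department_id IN (SELECT department_id
                        FROM @departments
                        WHERE department_name = 'Sales');

-- Correlated subquery: employees paid above their own department's average.
SELECT e.employee_id, e.salary
FROM @employees AS e
WHERE e.salary > (SELECT AVG(e2.salary)
                  FROM @employees AS e2
                  WHERE e2.department_id = e.department_id);
```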

Implementing Join Operations

Join operations are crucial for connecting tables in a database. They allow for combining rows from two or more tables based on a related column. The most common types are INNER JOIN, LEFT JOIN, RIGHT JOIN, and FULL JOIN.

- INNER JOIN: Combines rows when there are matching values in both tables.

- LEFT JOIN: Returns all rows from the left table and matched rows from the right table.

- RIGHT JOIN: Returns all rows from the right table and matched rows from the left table.

- FULL JOIN: Returns all rows from both tables, pairing rows that match and filling the columns of the other table with NULL where there is no match.

These operations are essential for complex queries involving multiple tables, allowing more comprehensive analysis and reporting. They play a vital role in data integration, enabling users to create a complete dataset from various sources.
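To see how the join types differ, the sketch below runs an INNER JOIN and a FULL JOIN over the same two hypothetical tables, which share only the key value 2.

```sql
-- Hypothetical tables: @a holds ids 1 and 2, @b holds ids 2 and 3.
DECLARE @a TABLE (id INT PRIMARY KEY, a_value CHAR(1) NOT NULL);
DECLARE @b TABLE (id INT PRIMARY KEY, b_value CHAR(1) NOT NULL);

INSERT INTO @a (id, a_value) VALUES (1, 'x'), (2, 'y');
INSERT INTO @b (id, b_value) VALUES (2, 'p'), (3, 'q');

-- INNER JOIN: only id 2, the single matching key.
SELECT a.id, a.a_value, b.b_value
FROM @a AS a
INNER JOIN @b AS b
    ON b.id = a.id;

-- FULL JOIN: three rows, one per key; the unmatched side is NULL.
SELECT a.id AS a_id, a.a_value, b.id AS b_id, b.b_value
FROM @a AS a
FULL JOIN @b AS b
    ON b.id = a.id;
```

A LEFT JOIN over the same data would return ids 1 and 2, and a RIGHT JOIN would return ids 2 and 3.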

Refining Data with Clauses

Refining data in T-SQL involves effectively using different clauses to filter and group information. This process helps users extract meaningful insights from data sets by applying conditions and aggregating results.

Using the Where Clause

The where clause is essential for filtering data in SQL queries. It allows users to specify conditions that must be met for records to be included in the result set.

By applying conditions to fields, users can retrieve specific data subsets.

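For instance, if a database contains a table of sales transactions, applying a WHERE clause such as WHERE transaction_date = '2024-11-28' returns only transactions on that date. If the column also stores a time of day, an equality test matches only rows stamped exactly at midnight, so a range condition is the safer choice.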

The where clause helps in narrowing down data, making it easier to work with large datasets. It ensures that only relevant records appear in results, saving time and allowing for more precise analysis.
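A minimal sketch of date filtering follows, assuming a hypothetical @sales table whose transaction_date column stores both date and time. It uses a half-open range so that every time of day on the target date is included.

```sql
-- Hypothetical sales data with a time component.
DECLARE @sales TABLE (
    sale_id          INT PRIMARY KEY,
    transaction_date DATETIME2(0) NOT NULL,
    amount           DECIMAL(10, 2) NOT NULL
);

INSERT INTO @sales (sale_id, transaction_date, amount)
VALUES (1, '2024-11-27T23:59:00', 10.00),
       (2, '2024-11-28T09:30:00', 15.00),
       (3, '2024-11-29T00:00:00', 20.00);

-- From midnight on 28 November up to, but not including, midnight on 29 November.
SELECT sale_id, transaction_date, amount
FROM @sales
WHERE transaction_date >= '2024-11-28'
  AND transaction_date <  '2024-11-29';
```

With this sample data only sale 2 is returned.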

Grouping Data with ‘Group By’

Grouping data using group by lets users organize similar data into categories. This clause is useful for summarizing information, especially when combined with aggregate functions like SUM, COUNT, or AVG.

For example, in a sales table, using GROUP BY product_id groups all sales for each product together. This method makes it easy to calculate total sales or average sales price per product.

Every column in the SELECT list must either appear in the GROUP BY clause or be wrapped in an aggregate function; otherwise SQL Server rejects the query. Following this rule is what makes the summaries meaningful and accurate.
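The grouping described above might look like this in practice. The @sales table and its prices are hypothetical.

```sql
-- Hypothetical sales rows for two products.
DECLARE @sales TABLE (
    sale_id    INT PRIMARY KEY,
    product_id INT NOT NULL,
    sale_price DECIMAL(10, 2) NOT NULL
);

INSERT INTO @sales (sale_id, product_id, sale_price)
VALUES (1, 101, 10.00),
       (2, 101, 14.00),
       (3, 102, 30.00);

-- One output row per product; every selected column is grouped or aggregated.
SELECT product_id,
       COUNT(*)        AS sales_count,
       SUM(sale_price) AS total_sales,
       AVG(sale_price) AS average_price
FROM @sales
GROUP BY product_id;
```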

Filtering Groups with ‘Having Count’

The HAVING clause works with GROUP BY to filter grouped data. It applies conditions to aggregated values, and COUNT is one of the aggregates most often used in it.

For example, to find products with more than 50 sales, one could use HAVING COUNT(product_id) > 50. This condition keeps only the groups that contain more than 50 rows.

WHERE filters individual rows before they are grouped, while HAVING filters whole groups after the aggregates have been calculated. A query can use both: WHERE to narrow the input rows and HAVING to narrow the resulting groups.
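The sketch below combines a row filter and a group filter in one query. The @sales table is hypothetical, and the threshold is lowered from 50 to 1 so that the tiny sample produces a result.

```sql
-- Hypothetical sales rows.
DECLARE @sales TABLE (
    sale_id    INT PRIMARY KEY,
    product_id INT NOT NULL,
    region     VARCHAR(10) NOT NULL
);

INSERT INTO @sales (sale_id, product_id, region)
VALUES (1, 101, 'north'),
       (2, 101, 'north'),
       (3, 101, 'south'),
       (4, 102, 'north');

SELECT product_id, COUNT(*) AS sales_count
FROM @sales
WHERE region = 'north'      -- row filter, applied before grouping
GROUP BY product_id
HAVING COUNT(*) > 1;        -- group filter, applied after aggregation
```

Only product 101 qualifies here, with two sales in the north region.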

Set Operations in T-SQL

Set operations in T-SQL are important tools for combining or excluding rows from different tables or queries. They offer ways to manage and compare data effectively. Some of the key operations include UNION, UNION ALL, and EXCEPT.

Combining Results with ‘Union’ and ‘Union All’

The UNION operator combines the result sets of two queries, removing duplicate rows and presenting a distinct set. For example, if two tables list customers, UNION will merge them into one list with all unique entries. Use cases often involve consolidating reports or unified data views from multiple sources.

In contrast, UNION ALL keeps all duplicates. This is useful when every row matters, such as when totaling sales figures from different departments. Because it skips the duplicate-removal step that UNION performs, UNION ALL is generally faster, making it the preferred choice when duplicates are impossible or acceptable.
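The difference between the two operators is easiest to see on overlapping data. The two customer lists below are hypothetical and share one name.

```sql
-- Hypothetical customer lists; 'Ben' appears in both.
DECLARE @online_customers TABLE (customer_name VARCHAR(50) NOT NULL);
DECLARE @store_customers  TABLE (customer_name VARCHAR(50) NOT NULL);

INSERT INTO @online_customers (customer_name) VALUES ('Ana'), ('Ben');
INSERT INTO @store_customers  (customer_name) VALUES ('Ben'), ('Chloe');

-- UNION removes the duplicate: 3 rows.
SELECT customer_name FROM @online_customers
UNION
SELECT customer_name FROM @store_customers;

-- UNION ALL keeps it: 4 rows.
SELECT customer_name FROM @online_customers
UNION ALL
SELECT customer_name FROM @store_customers;
```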

Comparing Data Sets Using ‘Except’

The EXCEPT operator is used to compare two datasets. It returns the distinct rows present in the first query but missing from the second, and both queries must return the same number of columns with compatible types. This operator is useful for identifying discrepancies or missing items, such as records in a master list not found in an update.
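A minimal sketch of the master-list scenario follows. The table names and item codes are hypothetical.

```sql
-- Hypothetical master list and update list.
DECLARE @master_list TABLE (item_code VARCHAR(10) NOT NULL);
DECLARE @update_list TABLE (item_code VARCHAR(10) NOT NULL);

INSERT INTO @master_list (item_code) VALUES ('A1'), ('B2'), ('C3');
INSERT INTO @update_list (item_code) VALUES ('A1'), ('C3');

-- Items in the master list that the update does not contain.
SELECT item_code FROM @master_list
EXCEPT
SELECT item_code FROM @update_list;
```

With this data the result is the single code B2. Swapping the two queries would answer the opposite question: which items appear in the update but not in the master list.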

Writing Conditional Statements

In T-SQL, the IF statement is a fundamental way to implement conditional logic. It lets the system decide which block of code to execute based on a specified condition.

For example, one might use the IF statement to check if a table exists by using the OBJECT_ID function. This ensures that any actions only occur when certain conditions are met.

Another powerful tool is the CASE expression. CASE evaluates a list of conditions in order and returns the value associated with the first one that is true.

This can be useful in queries where different outcomes are needed based on varying data conditions.

The two constructs play different roles: IF controls which statements in a script are executed, while CASE chooses a value inside a single statement such as a SELECT or UPDATE.
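Both constructs can be sketched briefly. The table name dbo.sales is an assumption used only for the existence check, and the @orders data and size bands are hypothetical.

```sql
-- IF with OBJECT_ID: branch on whether a user table exists.
-- dbo.sales is a hypothetical name; 'U' restricts the lookup to user tables.
IF OBJECT_ID(N'dbo.sales', N'U') IS NOT NULL
    PRINT 'Table dbo.sales exists';
ELSE
    PRINT 'Table dbo.sales does not exist';

-- CASE: return a label per row based on the first condition that is true.
DECLARE @orders TABLE (order_id INT PRIMARY KEY, amount DECIMAL(10, 2) NOT NULL);

INSERT INTO @orders (order_id, amount)
VALUES (1, 20.00), (2, 75.00), (3, 150.00);

SELECT order_id,
       amount,
       CASE
           WHEN amount >= 100 THEN 'large'
           WHEN amount >= 50  THEN 'medium'
           ELSE 'small'
       END AS size_band
FROM @orders;
```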

Employing Logic Functions

Logic functions in T-SQL serve as another way to implement conditional logic. Functions like ISNULL and COALESCE are used to handle null values, ensuring that queries return meaningful data even when some fields are missing.

ISNULL takes two arguments: it returns the first if it is not null and the second otherwise. It is specific to T-SQL, and it is a convenient way to supply a default for a single nullable field.

Similarly, COALESCE evaluates multiple expressions and returns the first non-null value. This function is particularly useful in scenarios where several fields might provide the needed data, but any single one of them could be null.

By using these logic functions, T-SQL enables developers to write queries that can adapt to different data conditions gracefully and efficiently.
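The two functions can be compared side by side. The @contacts table and the phone numbers are hypothetical.

```sql
-- Hypothetical contacts with several optional phone columns.
DECLARE @contacts TABLE (
    contact_id   INT PRIMARY KEY,
    mobile_phone VARCHAR(20) NULL,
    work_phone   VARCHAR(20) NULL,
    home_phone   VARCHAR(20) NULL
);

INSERT INTO @contacts (contact_id, mobile_phone, work_phone, home_phone)
VALUES (1, NULL, '555-0101', '555-0102'),
       (2, NULL, NULL, NULL);

SELECT contact_id,
       -- ISNULL: one column, one fallback value.
       ISNULL(mobile_phone, 'n/a') AS mobile_or_default,
       -- COALESCE: first non-null value from a list of candidates.
       COALESCE(mobile_phone, work_phone, home_phone, 'n/a') AS best_phone
FROM @contacts;
```

For contact 1, best_phone is the work number because it is the first non-null candidate; for contact 2, both columns fall back to 'n/a'.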

Creating and Using Functions

Understanding SQL functions is essential for efficient data manipulation and retrieval. Functions in SQL include a variety of built-in methods that simplify common operations such as counting entries or calculating sums.

This section delves into two critical aspects: an introduction to SQL functions and a detailed look at the count function’s applications.

Introduction to SQL Functions

SQL functions are predefined operations used to perform calculations, modify data, and enhance queries. They come in different types, such as scalar functions which return a single value and aggregate functions designed to process multiple values and return a summary result.

Scalar functions include methods like ROUND() for rounding numbers, UPPER() for converting text to uppercase, and LEN() for finding the length of a string. Aggregate functions are widely employed in data analysis, and examples include SUM() for total values, AVG() for averages, and COUNT() for counting records.

By integrating these functions into queries, users can make SQL scripts more powerful and flexible. This enables more complex data analysis and ensures code efficiency.
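The scalar functions named above can be tried directly in a SELECT with literal inputs, with no table required. The input values are arbitrary.

```sql
SELECT ROUND(123.456, 2)   AS rounded_value,  -- rounds to two decimal places
       UPPER('t-sql')      AS upper_text,     -- converts to uppercase
       LEN('Transact-SQL') AS text_length;    -- character count, ignoring trailing spaces
```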

Count Function and Its Applications

The COUNT() function in SQL is an aggregate function that returns the number of rows in a result set or, when combined with GROUP BY, in each group. This function is invaluable for data analysis, allowing users to determine the size of datasets or the frequency of specific attributes.

To count all rows in a table, COUNT(*) is used, giving a total row count regardless of nulls. When only non-null values are needed, the syntax COUNT(column_name) is applied, which counts entries in a specific column that are not null.

This can be essential for understanding the number of completed entries in databases with optional fields.
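The sketch below contrasts the forms of COUNT on a hypothetical @signups table in which one email is missing. COUNT(DISTINCT ...) is included as a third variant for counting unique values.

```sql
-- Hypothetical signup data; the second row has no email.
DECLARE @signups TABLE (
    signup_id INT PRIMARY KEY,
    email     VARCHAR(100) NULL,
    country   CHAR(2) NOT NULL
);

INSERT INTO @signups (signup_id, email, country)
VALUES (1, 'a@example.com', 'PT'),
       (2, NULL, 'PT'),
       (3, 'c@example.com', 'NO');

SELECT COUNT(*)                AS total_rows,          -- 3: every row
       COUNT(email)            AS rows_with_email,     -- 2: NULL is skipped
       COUNT(DISTINCT country) AS distinct_countries   -- 2: PT and NO
FROM @signups;
```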

Using COUNT() allows quick insight into datasets, enabling informed decisions based on the volume of data. To count the number of unique values of an attribute rather than the number of rows, use COUNT(DISTINCT column_name). This makes it a fundamental tool for anyone working with SQL.

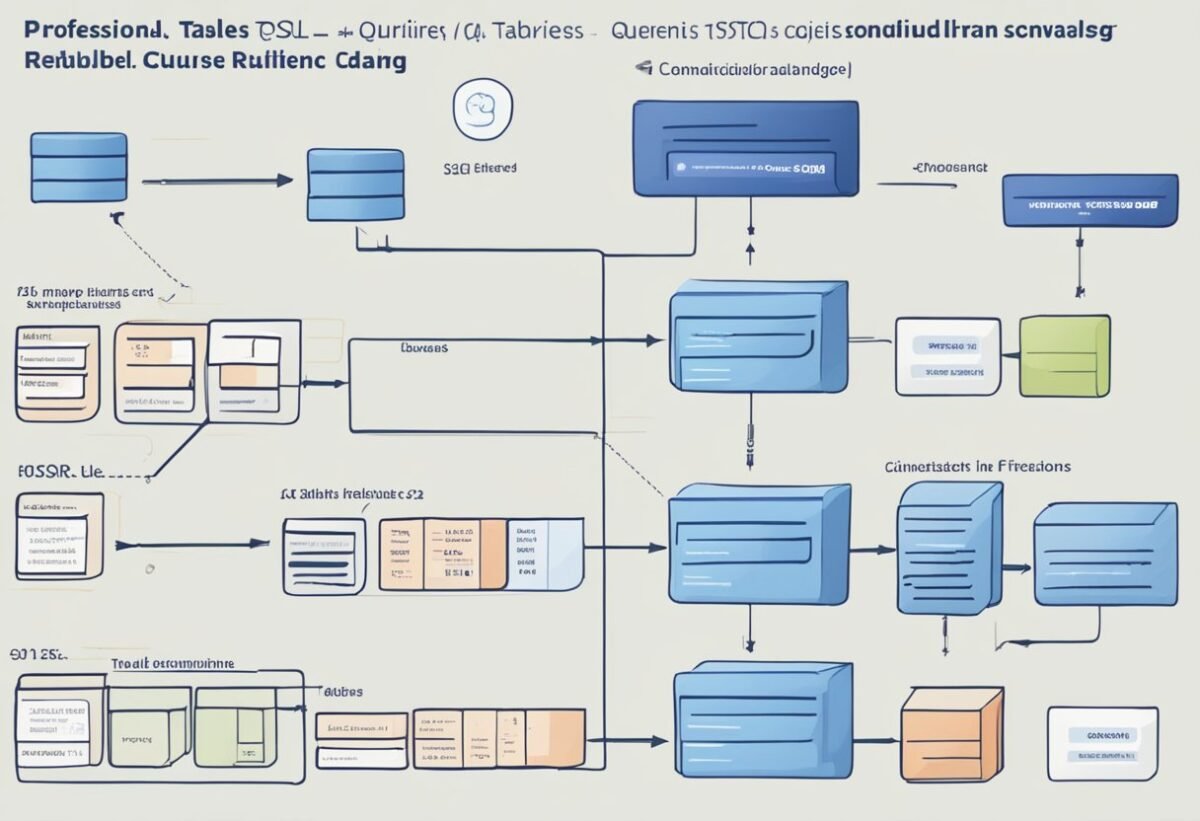

Stored Procedures and Automation

Stored procedures play a key role in automating tasks in T-SQL programming. They help in reducing repetitive code and improving execution time. This section will discuss developing stored procedures and using them alongside cursors for automating tasks.

Developing Stored Procedures

Developing a stored procedure involves writing a batch of T-SQL code that performs a specific task. Stored procedures can include control-of-flow statements that guide execution based on certain conditions. They are stored in the database and can be reused multiple times without rewriting code.

Creating a stored procedure starts with the CREATE PROCEDURE statement followed by the procedure name and parameters. Inside, T-SQL statements are written to accomplish tasks like querying or modifying data. Parameters allow procedures to be flexible and adaptable for different inputs.

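Once defined, stored procedures offer advantages in performance and security: SQL Server can reuse a cached execution plan for the procedure, and users can be granted permission to execute it without being given direct access to the underlying tables. They also help in maintaining consistency because all users execute the same code.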
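A minimal sketch of a parameterized procedure follows. The object names dbo.demo_orders and dbo.get_customer_orders are hypothetical, and the script creates real objects in the current database, so it is meant for a scratch database. GO is a batch separator understood by tools such as SQL Server Management Studio and sqlcmd rather than a T-SQL statement; it is needed because CREATE PROCEDURE must be the first statement in its batch.

```sql
-- Hypothetical demo table; the name dbo.demo_orders is an assumption.
CREATE TABLE dbo.demo_orders (
    order_id    INT PRIMARY KEY,
    customer_id INT NOT NULL,
    amount      DECIMAL(10, 2) NOT NULL
);

INSERT INTO dbo.demo_orders (order_id, customer_id, amount)
VALUES (1, 100, 25.00), (2, 100, 75.00), (3, 200, 40.00);
GO

-- @min_amount has a default, so callers may omit it.
CREATE PROCEDURE dbo.get_customer_orders
    @customer_id INT,
    @min_amount  DECIMAL(10, 2) = 0
AS
BEGIN
    SET NOCOUNT ON;

    SELECT order_id, amount
    FROM dbo.demo_orders
    WHERE customer_id = @customer_id
      AND amount >= @min_amount
    ORDER BY order_id;
END;
GO

-- Returns order 2, the only order for customer 100 of at least 50.
EXEC dbo.get_customer_orders @customer_id = 100, @min_amount = 50;

-- Cleanup, if desired:
-- DROP PROCEDURE dbo.get_customer_orders;
-- DROP TABLE dbo.demo_orders;
```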

Automating Tasks with Procedures and Cursors

Stored procedures can automate complex tasks by executing multiple statements in a sequence. They are particularly useful for automation when paired with cursors. Cursors allow processing of individual rows returned by queries, which is handy for row-by-row operations.

A stored procedure can open a cursor, fetch data, and perform operations like updates or calculations. This capability enables the automation of tasks that would otherwise require manual intervention.

Automation streamlines processes, reduces errors, and makes repetitive tasks easier and less time-consuming. Cursors should be used with care, however: they process one row at a time and are usually slower than an equivalent set-based statement, so they are best reserved for logic that genuinely has to run row by row.
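The open, fetch, process, close cycle of a cursor can be sketched as follows. The @accounts table and the cursor name are hypothetical, and the same loop could be placed inside a stored procedure body.

```sql
-- Hypothetical account data.
DECLARE @accounts TABLE (account_id INT PRIMARY KEY, balance DECIMAL(10, 2) NOT NULL);

INSERT INTO @accounts (account_id, balance)
VALUES (1, 100.00), (2, 250.00);

DECLARE @account_id INT;
DECLARE @balance DECIMAL(10, 2);

-- LOCAL limits the cursor to this batch; FAST_FORWARD makes it read-only and forward-only.
DECLARE account_cursor CURSOR LOCAL FAST_FORWARD FOR
    SELECT account_id, balance
    FROM @accounts
    ORDER BY account_id;

OPEN account_cursor;
FETCH NEXT FROM account_cursor INTO @account_id, @balance;

-- @@FETCH_STATUS is 0 while the last fetch returned a row.
WHILE @@FETCH_STATUS = 0
BEGIN
    PRINT CONCAT('Account ', @account_id, ' has balance ', @balance);
    FETCH NEXT FROM account_cursor INTO @account_id, @balance;
END;

CLOSE account_cursor;
DEALLOCATE account_cursor;
```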

Relational Database Management

Relational database management involves the systematic organization and handling of data using a relational model. Central to this approach are tables that store data in rows and columns, allowing for efficient retrieval and manipulation. This management is crucial for maintaining data integrity and optimizing query performance.

Designing Relational Databases

Designing relational databases is critical for efficient data storage and retrieval. A key principle is the use of tables that represent real-world entities, where each table has a primary key to uniquely identify its rows. Relationships between tables are then expressed with foreign keys that reference those primary keys.

It’s essential to normalize data to eliminate redundancy and ensure data integrity. Normalization involves splitting data into smaller tables and defining relationships among them.

Normalization is a design principle rather than a product feature, so it applies equally in SQL Server 2022, MySQL, and Oracle. Reducing redundant data saves storage and prevents update anomalies, where copies of the same fact drift out of sync.
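A small sketch of this design approach is shown below, with customer details stored once and referenced from orders through a foreign key. The table and constraint names are hypothetical, and the script creates real tables, so it is intended for a scratch database.

```sql
-- Customer details live in one place.
CREATE TABLE dbo.demo_customers (
    customer_id   INT NOT NULL PRIMARY KEY,
    customer_name VARCHAR(100) NOT NULL
);

-- Orders refer to customers by key instead of repeating their details.
CREATE TABLE dbo.demo_customer_orders (
    order_id    INT NOT NULL PRIMARY KEY,
    customer_id INT NOT NULL,
    order_date  DATE NOT NULL,
    CONSTRAINT fk_demo_customer_orders_customer
        FOREIGN KEY (customer_id)
        REFERENCES dbo.demo_customers (customer_id)
);

-- The foreign key rejects any order whose customer_id has no matching customer row.
```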

Database Administration Essentials

Database administration is about maintaining the overall health and performance of a database system. A major responsibility includes backup and recovery procedures to protect data against loss or corruption.

In SQL Server, SQL Server Agent jobs let administrators schedule and automate many of these tasks to ensure consistency, and Microsoft Learn documents the procedures.

Monitoring database performance is also essential. Administrators regularly check for slow-running queries and optimize them for better speed. They also ensure that security measures, like user access controls and permission settings, are in place to protect data.

For larger systems, SQL Server provides features such as Query Store and dynamic management views, and MySQL provides the Performance Schema. These supply the runtime statistics that guide optimization and management decisions, keeping the system running smoothly.

T-SQL for Analyzing Online Interactions

T-SQL can be a powerful tool for analyzing how people interact online. By using specific queries, one can track user activity and enhance personalized advertising strategies.

Tracking Online Activity With SQL

Tracking online activity is essential for understanding user behavior. With T-SQL, data from various sources like website logs and social media can be collected and analyzed. SQL queries help in selecting and organizing data to reveal patterns in user interactions.

For instance, using JOIN statements, analysts can combine user activity data from multiple platforms.

Example Query:

    SELECT users.username, activity.page_visited, activity.timestamp
    FROM users
    JOIN activity ON users.user_id = activity.user_id
    WHERE activity.timestamp > '2024-01-01';

Analysts can identify popular pages, visit frequency, and other trends, which provide insights into user interests.

Personalized Advertising Analytics

For marketers, T-SQL aids in understanding consumer preferences and refining advertising efforts. Queries over stored social media and browsing data can surface signals, such as repeated visits to a product category, that suggest buying intentions.

This data is used to tailor ads to individual interests, increasing the chances of engagement.

Example T-SQL Elements:

- WHERE clause to filter engaging content.

- GROUP BY for segmenting users based on similar behaviors.

    SELECT user_id, COUNT(*) AS ad_clicks
    FROM ad_activity
    WHERE ad_category = 'electronics'
    GROUP BY user_id
    ORDER BY ad_clicks DESC;

Using these techniques, companies enhance their advertising effectiveness, leading to more efficient targeting and improved returns.

Privacy and Cookie Management in Databases

In the realm of databases, managing privacy and cookies is crucial for protecting user data. Understanding privacy statements and effectively managing cookie data in SQL ensure compliance and enhance data security. These practices help in building trust and maintaining user confidentiality.

Understanding Privacy Statements

A privacy statement explains how an organization collects, uses, and protects personal information. It clearly communicates what data is stored and why, offering transparency to users.

Privacy statements typically cover information like data collection methods, third-party data sharing, and user rights.

Organizations must comply with legal standards, ensuring these statements are easy to understand. This includes details on opting out of data collection and the use of optional cookies. Users are often given choices to accept or reject optional cookies, maintaining control over their data.

Managing Cookie Data in SQL

Managing cookie data in SQL involves storing and retrieving information efficiently while maintaining privacy. Cookies are small data files stored on a user’s device, often containing preferences or login details. These can include first-party and third-party cookies, which might track browsing activity.

Developers use SQL queries to handle this data, ensuring security by applying encryption and access controls.

Managing cookies also involves providing users with options to manage or reject optional cookies, preserving user autonomy.

Effective cookie management enhances privacy and supports security frameworks, ensuring sensitive information is not exposed unnecessarily.

Frequently Asked Questions

This section covers common queries about T-SQL, including the use of the MERGE statement, combining query results, comparing tables, joining tables, applying multiple conditions, and using conditional logic in SQL.

What is the correct syntax for using the MERGE statement in SQL Server?

The MERGE statement in SQL Server allows for insertions, updates, or deletions in a target table based on a source table. The basic form is MERGE target USING source ON join_condition, followed by WHEN MATCHED, WHEN NOT MATCHED BY TARGET, and WHEN NOT MATCHED BY SOURCE clauses that specify the UPDATE, INSERT, or DELETE to perform. The statement must end with a semicolon.
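As a sketch of that syntax, the batch below synchronizes a target with a source. The temporary tables #target_prices and #source_prices and their rows are hypothetical.

```sql
-- Hypothetical temporary tables.
CREATE TABLE #target_prices (product_id INT PRIMARY KEY, price DECIMAL(10, 2) NOT NULL);
CREATE TABLE #source_prices (product_id INT PRIMARY KEY, price DECIMAL(10, 2) NOT NULL);

INSERT INTO #target_prices (product_id, price)
VALUES (1, 10.00), (2, 20.00), (3, 30.00);

INSERT INTO #source_prices (product_id, price)
VALUES (2, 22.00), (4, 40.00);

MERGE INTO #target_prices AS t
USING #source_prices AS s
    ON t.product_id = s.product_id
WHEN MATCHED THEN                      -- product 2: update the price
    UPDATE SET t.price = s.price
WHEN NOT MATCHED BY TARGET THEN        -- product 4: insert the new row
    INSERT (product_id, price)
    VALUES (s.product_id, s.price)
WHEN NOT MATCHED BY SOURCE THEN        -- products 1 and 3: remove them
    DELETE
OUTPUT $action AS merge_action,
       inserted.product_id AS new_product_id,
       deleted.product_id  AS old_product_id;

DROP TABLE #target_prices;
DROP TABLE #source_prices;
```

The OUTPUT clause is optional; it is included here to report which action was taken for each row.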

How can you combine the results of two SELECT queries from different tables?

To combine results from two different tables, the UNION operator is commonly used. It requires that both SELECT queries return the same number of columns, in the same order, with compatible data types, and it combines the results into a single dataset with duplicates removed. Use UNION ALL to keep duplicate rows.

In SQL, how do you compare two tables to identify column differences?

Two tables can be compared with a FULL JOIN on their key columns, combined with checks for NULL on each side. This highlights rows that exist in one table but not the other. To find rows whose column values differ as well, EXCEPT can be used, since it compares every selected column.
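The FULL JOIN approach can be sketched as follows. The tables @table_a and @table_b and their rows are hypothetical.

```sql
-- Hypothetical tables that share id 2 only.
DECLARE @table_a TABLE (id INT PRIMARY KEY, name VARCHAR(50) NOT NULL);
DECLARE @table_b TABLE (id INT PRIMARY KEY, name VARCHAR(50) NOT NULL);

INSERT INTO @table_a (id, name) VALUES (1, 'alpha'), (2, 'beta');
INSERT INTO @table_b (id, name) VALUES (2, 'beta'), (3, 'gamma');

-- Keep only the rows that failed to find a partner on the other side.
SELECT COALESCE(a.id, b.id) AS id,
       CASE
           WHEN b.id IS NULL THEN 'only in table_a'
           WHEN a.id IS NULL THEN 'only in table_b'
       END AS difference
FROM @table_a AS a
FULL JOIN @table_b AS b
    ON b.id = a.id
WHERE a.id IS NULL
   OR b.id IS NULL;
```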

What method allows you to join two tables based on a specific condition?

Using the JOIN clause, specifically an INNER JOIN, allows tables to be joined based on a related column. This method selects rows with matching values in both tables for the specified condition.

How can you implement multiple WHERE conditions on a single column in SQL?

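Multiple conditions on a single column can be implemented using AND or OR within the WHERE clause. AND suits ranges, such as a lower and an upper bound, while OR or the IN operator suits a list of alternative values. Two equality tests on the same column joined by AND can never both be true, so alternatives need OR or IN.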
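A few common patterns are sketched below against a hypothetical @products table.

```sql
-- Hypothetical product data.
DECLARE @products TABLE (
    product_id INT PRIMARY KEY,
    category   VARCHAR(20) NOT NULL,
    price      DECIMAL(10, 2) NOT NULL
);

INSERT INTO @products (product_id, category, price)
VALUES (1, 'audio', 15.00),
       (2, 'video', 60.00),
       (3, 'cables', 5.00);

-- Range on one column with AND.
SELECT product_id FROM @products
WHERE price >= 10 AND price < 50;

-- The same idea with BETWEEN, which includes both endpoints.
SELECT product_id FROM @products
WHERE price BETWEEN 10 AND 50;

-- Alternatives on one column with OR ...
SELECT product_id FROM @products
WHERE category = 'audio' OR category = 'video';

-- ... or, more compactly, with IN.
SELECT product_id FROM @products
WHERE category IN ('audio', 'video');
```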

Can you use conditional logic within SQL to combine two IF statements, and if so, how?

Yes. In a T-SQL script, two IF statements can be chained with ELSE IF, or their conditions can be merged into a single IF using AND or OR. Inside a query, the CASE expression fills the same role: it evaluates conditions in order and returns the value for the first one that is true. CASE returns a value rather than executing statements.
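For procedural branching in a script, chained and combined IF conditions can be sketched like this. The variables and thresholds are hypothetical.

```sql
-- Hypothetical stock level and reorder threshold.
DECLARE @stock INT = 5;
DECLARE @reorder_level INT = 10;

-- Chaining: the first true branch runs and the rest are skipped.
IF @stock = 0
    PRINT 'Out of stock';
ELSE IF @stock < @reorder_level
    PRINT 'Reorder soon';
ELSE
    PRINT 'Stock is sufficient';

-- Combining two conditions in a single IF with AND.
IF @stock > 0 AND @stock < @reorder_level
    PRINT 'In stock, but below the reorder level';
```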