Foundations of Python for Data Analysis

Learning the foundational aspects of Python is essential for data analysis.

This involves understanding Python’s programming basics, its data structures and algorithms, and practical skills that are crucial for working effectively with data.

Overview of Python Programming

Python is an accessible programming language known for its readability and versatility.

It’s widely used in data science thanks to its ease of use and robust libraries.

A strong introduction to Python involves grasping its syntax, data types, and functions. Knowing these basics helps individuals to script more efficiently and solve problems quickly.

Functions in Python package logic into reusable units, so a data transformation written once can be applied to many datasets.

Conditionals and loops are also key elements. Conditionals (if, elif, else) run a block of code only when a condition holds, and loops repeat an action without duplicating code.
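A minimal sketch of these basics follows. The function `classify_temperature` and its thresholds are hypothetical, chosen only for illustration: the function holds reusable logic, the if/elif/else chain picks a branch, and a loop applies the function to every reading.

```python
def classify_temperature(celsius: float) -> str:
    """Return a label for a temperature reading (illustrative thresholds)."""
    if celsius < 10:
        return "cold"
    elif celsius < 25:
        return "mild"
    else:
        return "hot"


readings = [4.5, 18.0, 31.2]

# The loop reuses one function for every reading instead of repeating the if/else logic.
labels = []
for value in readings:
    labels.append(classify_temperature(value))

print(labels)  # ['cold', 'mild', 'hot']
```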

Understanding these fundamentals provides the groundwork necessary for progressing to intermediate and advanced tasks in data analysis with Python.

Data Structures and Algorithms

Python offers powerful data structures such as lists, dictionaries, and sets. These structures store data efficiently and allow for easy manipulation, which is vital for data analysis.

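Lists are ordered collections, while dictionaries store data in key-value pairs, which makes lookups by key fast. Sets hold only unique items, which makes them useful for removing duplicates, testing membership, and excluding one group of items from another with set operations such as difference.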
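The sketch below uses made-up sample values to show each structure in its typical role, including a set difference that excludes one group of items from another.

```python
# Lists keep items in insertion order and allow duplicates.
scores = [88, 92, 75, 92]

# Dictionaries map keys to values for fast lookup by key.
ages = {"alice": 34, "bob": 29}
ages["carol"] = 41  # add a new key-value pair

# Sets keep only unique items and support set algebra.
unique_scores = set(scores)          # {75, 88, 92}
registered = {"alice", "bob", "carol"}
attended = {"alice", "carol"}
absent = registered - attended       # difference: registered but did not attend

print(scores[0], ages["bob"], sorted(unique_scores), absent)
# 88 29 [75, 88, 92] {'bob'}
```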

Algorithms play a critical role in processing and analyzing data. Sorting and searching algorithms are foundational as they help in organizing and retrieving data efficiently.
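As a small illustration of sorting followed by efficient searching, the sketch below sorts a list with the built-in `sorted()` and then uses binary search from the standard-library `bisect` module. The helper `contains` and the price values are hypothetical.

```python
import bisect

prices = [19.99, 4.50, 12.00, 7.25]

# sorted() returns a new ascending list in O(n log n); the original list is unchanged.
sorted_prices = sorted(prices)


def contains(sorted_values, target):
    """Binary search: O(log n) per lookup, but only valid on sorted input."""
    i = bisect.bisect_left(sorted_values, target)
    return i < len(sorted_values) and sorted_values[i] == target


print(sorted_prices)                  # [4.5, 7.25, 12.0, 19.99]
print(contains(sorted_prices, 12.0))  # True
print(contains(sorted_prices, 10.0))  # False
```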

By combining effective data structures and algorithms, individuals can handle large datasets and perform complex data analysis operations in Python, a key skill for data scientists.

Practical Python Skills

Practical Python skills for data analysis include working with libraries like Pandas, NumPy, and Matplotlib.

Pandas provides tools for data manipulation and analysis, offering structures like DataFrames. NumPy supports large multidimensional arrays and matrices, along with math functions to operate on these arrays.
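A short sketch of both libraries, using illustrative values: a NumPy array with a vectorized operation, and a pandas DataFrame with a derived column.

```python
import numpy as np
import pandas as pd

# NumPy: a 2-D array and vectorized operations applied to every element at once.
matrix = np.array([[1.0, 2.0], [3.0, 4.0]])
scaled = matrix * 10                  # element-wise, no explicit Python loop
column_means = matrix.mean(axis=0)    # mean of each column

# pandas: a DataFrame with labeled columns, built from a dictionary.
df = pd.DataFrame({"city": ["Oslo", "Lima"], "temp_c": [4.0, 19.5]})
df["temp_f"] = df["temp_c"] * 9 / 5 + 32  # derived column, computed column-wise

print(scaled)
print(column_means)  # [2. 3.]
print(df)
```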

For data visualization, Matplotlib and Seaborn are important. They make it possible to create revealing charts and plots that uncover insights from the data.

Developing these skills is crucial as they allow an analyst to present data findings clearly and effectively, ultimately supporting decision-making processes in real-world applications.

Core Python Programming Concepts

Python programming involves foundational techniques like control structures, loops, object-oriented programming (OOP), and error handling. These building blocks help create efficient and organized code.

Control Structures and Loops

Control structures allow for decision-making and repetition in code. If-else statements are used to execute code based on conditions. With loops, Python can repeat code segments efficiently.

For loops iterate over sequences, providing an easy way to traverse lists or strings. While loops keep running as long as a condition remains true, which is useful when the number of iterations is not known in advance.
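The contrast between the two loop types can be sketched as follows. The 7% growth rate is an assumption chosen only to show a while loop whose iteration count is not known up front.

```python
# A for loop walks a sequence item by item.
words = ["data", "analysis", "python"]
lengths = []
for word in words:
    lengths.append(len(word))

# A while loop repeats as long as its condition stays true.
balance = 1000.0
years = 0
while balance < 2000.0:
    balance *= 1.07  # assumed 7% annual growth, for illustration only
    years += 1

print(lengths)  # [4, 8, 6]
print(years)    # 11
```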

These tools are crucial for automating repetitive tasks and handling data in bulk.

Object-Oriented Programming

Object-oriented programming (OOP) enables developers to create classes and objects for structuring code. OOP helps organize complex code by grouping related properties and methods. It promotes code reuse through inheritance, where new classes derive attributes from existing ones.

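Encapsulation bundles data with the methods that operate on it; Python marks attributes as internal by convention (a leading underscore) rather than enforcing strict data hiding. Polymorphism allows the same method call to behave differently depending on the object’s type. This approach leads to more maintainable and scalable applications.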
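A minimal sketch of these ideas, using hypothetical `Sensor` classes: the subclass inherits the constructor and `describe()`, overrides `reading()`, and the same `describe()` call then behaves differently per object.

```python
class Sensor:
    """Base class: bundles a raw value with the methods that work on it."""

    def __init__(self, name, raw_value):
        self.name = name
        self._raw_value = raw_value  # leading underscore: "internal" by convention only

    def reading(self):
        return self._raw_value

    def describe(self):
        return f"{self.name}: {self.reading()}"


class FahrenheitSensor(Sensor):
    """Subclass: inherits __init__ and describe(), overrides reading()."""

    def reading(self):
        # Convert the stored Fahrenheit value to Celsius.
        return round((self._raw_value - 32) * 5 / 9, 1)


sensors = [Sensor("lab", 21.0), FahrenheitSensor("porch", 50.0)]

# Polymorphism: describe() calls whichever reading() the object's class defines.
for s in sensors:
    print(s.describe())
# lab: 21.0
# porch: 10.0
```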

Error Handling and Debugging

Error handling in Python uses try-except blocks to catch and manage exceptions without crashing programs. This lets a program respond gracefully to errors, for example by logging an error message or retrying an operation.
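For example, a parsing step can catch a bad value, log it, and carry on. The function `parse_amount` is a hypothetical helper, and returning `None` for unparseable input is one design choice among several (raising a custom error is another).

```python
import logging

logging.basicConfig(level=logging.WARNING)


def parse_amount(text):
    """Convert a string to float; log and return None instead of crashing."""
    try:
        return float(text)
    except ValueError:
        logging.warning("Could not parse %r as a number", text)
        return None


raw = ["12.5", "n/a", "7"]
amounts = [parse_amount(x) for x in raw]
print(amounts)  # [12.5, None, 7.0]
```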

Debugging involves finding and fixing bugs, either with print statements or with tools like the built-in Python debugger, pdb.

Effective error handling and debugging are vital for creating reliable software.

Function Definitions and Generators

Functions in Python encapsulate logic for reuse and clarity. Functions take inputs, perform actions, and return results, making programming modular.

Generators are a special type of function that use yield instead of return. They produce a sequence of results lazily, saving memory by generating items on-the-fly.

This technique is beneficial for working with large datasets, as it avoids storing entire sequences in memory.
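A minimal generator sketch follows; `running_total` is a hypothetical example function. Because values are produced only on request, asking for the first three totals of a ten-million-item range computes just those three.

```python
def running_total(values):
    """Yield cumulative sums one at a time instead of building a full list."""
    total = 0
    for v in values:
        total += v
        yield total


# range() is also lazy, so nothing here materializes ten million items in memory.
gen = running_total(range(1, 10_000_001))
print(next(gen), next(gen), next(gen))  # 1 3 6

# A generator expression offers the same laziness inline.
squares_sum = sum(x * x for x in range(1, 4))
print(squares_sum)  # 14
```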

Effective Data Handling in Python

Efficient data handling is crucial when working with Python for data analysis. Key components include powerful libraries that simplify data manipulation and cleaning tasks. Managing dates and times effectively also plays a significant role in organizing data for analysis.

Python Libraries for Data Analysis

Python is equipped with numerous libraries that enhance data analysis processes.

Libraries like NumPy provide support for large, multi-dimensional arrays and matrices, along with mathematical functions.

Pandas is another essential library that offers data structures and operations for manipulating numerical tables and time series. For data visualization, Matplotlib and Seaborn are highly popular.

Matplotlib supports static, animated, and interactive visualizations, and Seaborn builds on Matplotlib with higher-level statistical plots. Together they help make sense of raw data visually.

Data Manipulation with Pandas

Pandas offers versatile data structures known as DataFrames that allow users to easily manipulate and analyze data.

With Pandas, one can efficiently handle missing data and perform tasks like data cleaning. It also allows easy filtering, grouping, and sorting of data.
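The sketch below walks through these tasks on a small, made-up sales table. Filling missing values with 0 is an assumption for illustration; depending on the data, dropping rows with `dropna()` or filling with a mean may be more appropriate.

```python
import numpy as np
import pandas as pd

sales = pd.DataFrame({
    "region": ["north", "south", "north", "south", "east"],
    "units": [10, np.nan, 7, 12, 5],
})

# Missing data: count it, then fill it.
missing = sales["units"].isna().sum()
sales["units"] = sales["units"].fillna(0)

# Filtering with a boolean mask.
big_orders = sales[sales["units"] >= 7]

# Grouping and aggregating, then sorting the result.
by_region = (
    sales.groupby("region")["units"]
    .sum()
    .sort_values(ascending=False)
)

print(missing)     # 1
print(big_orders)
print(by_region)   # north 17.0, south 12.0, east 5.0
```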

Pandas also simplifies categorical variables: its category dtype stores each distinct label once and represents rows as integer codes, and pd.get_dummies() converts categories into indicator (one-hot) columns, making them easier to analyze and model.
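A short sketch of pandas’ categorical tools, using a made-up size column: an ordered category dtype (with integer codes and order-aware comparisons) and one-hot encoding with `pd.get_dummies()`.

```python
import pandas as pd

sizes = pd.Series(["small", "large", "medium", "small", "large"])

# category dtype: each distinct label is stored once; rows hold integer codes.
as_cat = sizes.astype(
    pd.CategoricalDtype(categories=["small", "medium", "large"], ordered=True)
)
print(as_cat.cat.codes.tolist())    # [0, 2, 1, 0, 2]

# Ordered categories support comparisons that follow the declared order.
print((as_cat > "small").tolist())  # [False, True, True, False, True]

# One-hot encoding: one indicator column per category.
dummies = pd.get_dummies(sizes, prefix="size")
print(dummies.columns.tolist())     # ['size_large', 'size_medium', 'size_small']
```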

Working with Dates and Times

Handling dates and times accurately is crucial in data analysis.

Python’s standard library includes the datetime module, which simplifies date and time manipulation. Pandas builds on this with its own methods to handle date and time data efficiently.

This includes converting date strings into datetime objects and working with different time zones.
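The sketch below shows both layers with illustrative timestamps: the standard-library `datetime` module for parsing and arithmetic, and pandas for converting a column of strings, attaching a time zone, and converting between zones. It assumes time-zone data is available to pandas, which is normally the case in a standard installation.

```python
from datetime import datetime, timedelta

import pandas as pd

# Standard library: parse a string with an explicit format, then do date arithmetic.
start = datetime.strptime("2024-03-01 09:30", "%Y-%m-%d %H:%M")
deadline = start + timedelta(days=30)
print(deadline.date())  # 2024-03-31

# pandas: convert strings to datetimes, mark them as UTC, then convert to New York time.
stamps = pd.to_datetime(pd.Series(["2024-03-01 09:30", "2024-07-01 09:30"]))
utc = stamps.dt.tz_localize("UTC")
new_york = utc.dt.tz_convert("America/New_York")
print(new_york.dt.hour.tolist())  # [4, 5]: standard time in March, daylight time in July
```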

Date handling is vital when organizing time series data or analyzing data trends over time.

Data Analysis Techniques

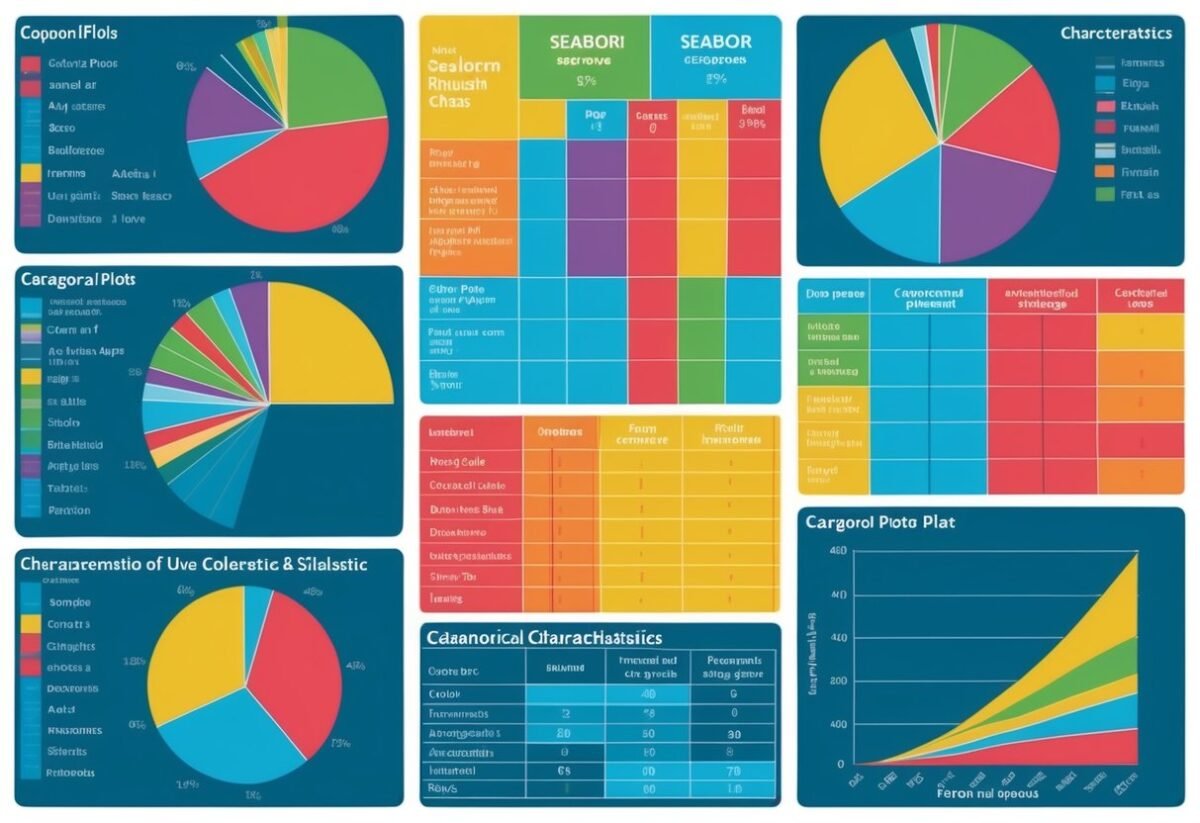

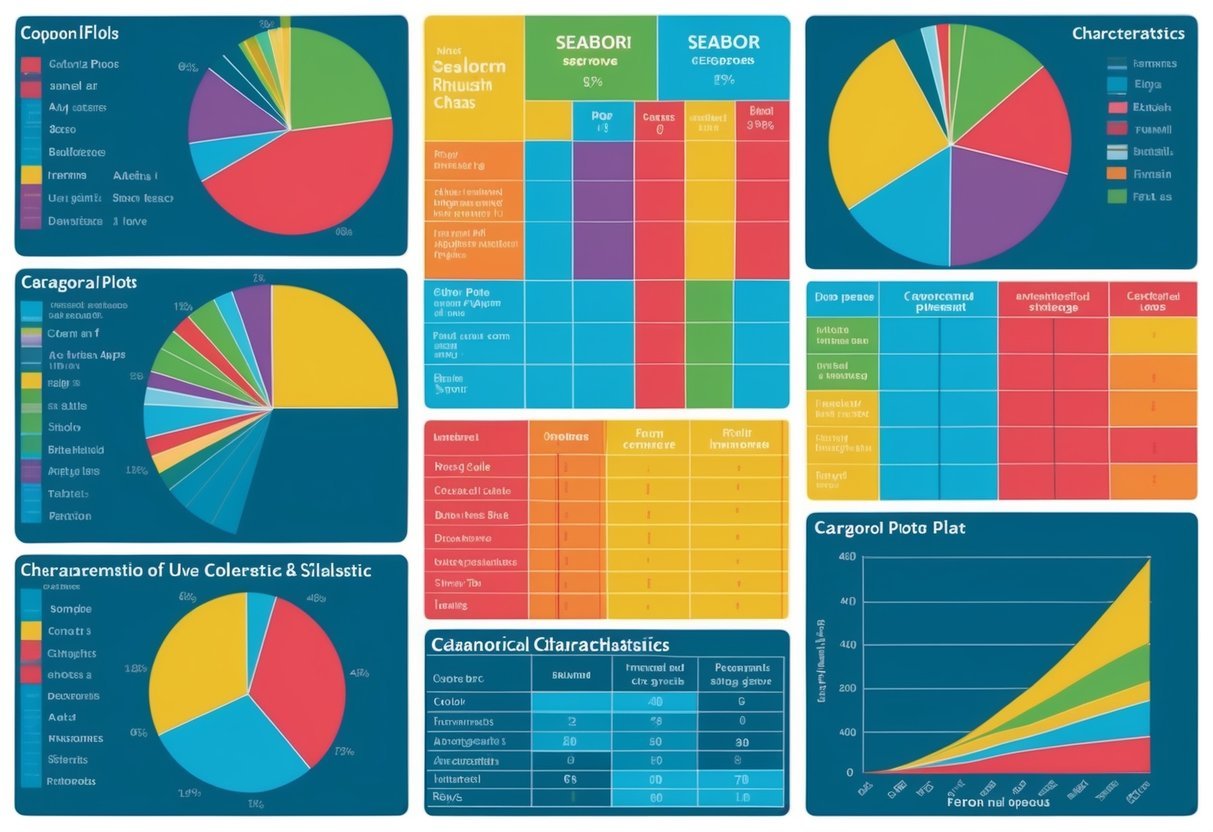

Data analysis requires a strong understanding of various techniques that are essential for extracting insights from data. These techniques include exploratory data analysis, descriptive statistics, and interpreting data distributions.

Exploratory Data Analysis (EDA)

Exploratory Data Analysis is a crucial first step in data analysis. It involves looking at datasets to summarize their main characteristics, often using visual methods.

EDA allows analysts to identify patterns, detect outliers, and find relationships between variables.

Charts like histograms, scatter plots, and box plots are commonly used. These tools help in revealing underlying structures in the data.
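Numerically, the same outlier check a box plot performs can be sketched with the interquartile-range (IQR) rule, here applied to made-up order values. The 1.5 × IQR threshold is a common convention rather than a fixed law.

```python
import pandas as pd

# Made-up order values with one suspicious entry.
orders = pd.Series([120, 135, 128, 140, 131, 990, 125])

# describe() gives a quick numeric summary (count, mean, std, quartiles, min, max).
summary = orders.describe()

# IQR rule: flag points more than 1.5 * IQR below the first or above the third quartile.
q1, q3 = orders.quantile(0.25), orders.quantile(0.75)
iqr = q3 - q1
outliers = orders[(orders < q1 - 1.5 * iqr) | (orders > q3 + 1.5 * iqr)]

print(summary)
print(outliers.tolist())  # [990]
```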

EDA is about making sense of data by employing a hands-on approach. It emphasizes visualization, encouraging analysts to view data from different perspectives.

While performing EDA, one often starts by cleaning the data. This involves handling missing values and checking for data entry errors.

Only after this can EDA reliably reveal meaningful insights, because patterns found in uncleaned data may simply reflect errors.

Descriptive Statistics in Python

Descriptive statistics provide a summary of the main features of a dataset. In Python, libraries like NumPy and Pandas are widely used for this purpose.

These statistics include measures of central tendency like mean, median, and mode. Each measure gives a different view of the data’s center.

The mean is the sum of the values divided by their count. It is helpful in understanding the overall level of a dataset but can be pulled strongly by extreme values.

The median gives the middle value of ordered data, making it more robust to outliers. Mode is the most frequently occurring value and is useful for categorical data.

Python makes it easy to compute these statistics with functions such as mean() and median(), providing a clear picture of the data with minimal code.
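A minimal sketch with made-up income figures shows how one extreme value pulls the mean while the median and mode stay put. It uses the standard-library `statistics` module and the pandas equivalents.

```python
import statistics

import pandas as pd

incomes = [32_000, 35_000, 35_000, 38_000, 41_000, 250_000]  # one extreme value

print(statistics.mean(incomes))    # about 71833.33, pulled up by the 250,000 value
print(statistics.median(incomes))  # 36500.0, average of the two middle values
print(statistics.mode(incomes))    # 35000, the most frequent value

# The pandas equivalents operate on a Series; mode() returns a Series
# because a dataset can have several modes.
s = pd.Series(incomes)
print(s.mean(), s.median(), s.mode().tolist())
```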

Understanding Distributions of Data

Grasping the distribution of data is key to advanced analysis. Distributions describe how values are spread across a dataset.

Common distributions include normal, skewed, and uniform. Recognizing them helps in choosing the right statistical tests and models.

Visual tools in Python, such as histograms and kernel density estimate (KDE) plots, can reveal the shape of a dataset’s distribution.

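A normal distribution is symmetric and bell-shaped, while a skewed distribution is asymmetrical, with a longer tail on one side. Summary measures quantify these properties: skewness captures asymmetry, kurtosis captures how heavy the tails are, and variance captures spread.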
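These shape measures can be computed directly in pandas. The sketch below uses simulated data (a fixed random seed, with normal and exponential samples chosen for illustration); pandas’ `kurt()` reports excess kurtosis, which is about 0 for a normal distribution.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(seed=0)

# Simulated samples: a roughly symmetric normal and a right-skewed exponential.
normal = pd.Series(rng.normal(loc=0, scale=1, size=10_000))
right_skewed = pd.Series(rng.exponential(scale=1, size=10_000))

# skew() near 0 suggests symmetry; a positive value means a long right tail.
# kurt() is excess kurtosis: near 0 for normal data, larger for heavy tails.
for name, s in [("normal", normal), ("exponential", right_skewed)]:
    print(f"{name}: skew={s.skew():.2f}, "
          f"excess kurtosis={s.kurt():.2f}, variance={s.var():.2f}")
```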

By analyzing the distribution, data scientists can detect anomalies and potential biases in datasets. This knowledge enhances predictive modeling and improves the reliability of conclusions drawn from the data.

Data Visualization Fundamentals

Data visualization is a crucial tool for interpreting complex datasets. Understanding libraries such as Matplotlib helps in creating clear and effective visual representations. Additionally, learning to create interactive visualizations can make exploration more engaging and analysis deeper.

Charting with Matplotlib

Matplotlib is a versatile library used to create static, animated, and interactive visualizations in Python. It supports a range of plot types like line charts, bar graphs, histograms, and scatter plots. These charts help in identifying trends, patterns, and outliers in data.

One of the standout features of Matplotlib is its customization capability.

Users can adjust color schemes, line styles, and fonts to make charts more informative and visually appealing. It also integrates well with Jupyter notebooks, making it ideal for sharing insights interactively.

The library supports various output formats, enabling the saving of visualizations for reports or presentations.
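A minimal Matplotlib sketch follows, with illustrative revenue values and an assumed output filename, `revenue.png`. It customizes color, line style, and labels, then saves the figure. The non-interactive Agg backend is selected so the script runs without a display; in a Jupyter notebook that line is unnecessary.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend, so this runs without a display
import matplotlib.pyplot as plt

months = ["Jan", "Feb", "Mar", "Apr"]
revenue = [12.1, 13.4, 12.8, 15.2]  # illustrative values

fig, ax = plt.subplots(figsize=(6, 3.5))
ax.plot(months, revenue, color="tab:blue", linestyle="--", marker="o", label="Revenue")
ax.set_title("Monthly revenue")
ax.set_xlabel("Month")
ax.set_ylabel("Revenue (thousands)")
ax.legend()
fig.tight_layout()

# The output format follows the file extension (for example .png, .pdf, .svg).
fig.savefig("revenue.png", dpi=150)
plt.close(fig)
```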

By mastering Matplotlib, users can enhance their ability to communicate data insights effectively.

Creating Interactive Visualizations

Interactive visualizations engage users by allowing them to explore data dynamically.

Libraries like Plotly and Bokeh are designed for these types of visualizations, and Matplotlib also offers basic interactivity, such as zooming and panning, when used with an interactive backend.

Interactive features might include zooming, panning, and tooltips that display data values on hover.

Interactive elements help users investigate data without needing extensive coding knowledge. This is valuable in fields like business intelligence and data science, where interpreting large datasets quickly is crucial.

Creating interactive visualizations requires understanding both the data and the audience’s needs.

By offering an engaging way to explore data, interactive visualizations can lead to more insightful conclusions and better decision-making.

Leveraging Advanced Python Features

Advanced Python features can significantly enhance data analysis capabilities. Using data structures effectively and mastering decorators and context managers both improve data handling and code efficiency.

Advanced Data Structures

Using advanced data structures in Python can transform the way data is stored and accessed.

Dictionaries and sets are pivotal for efficient data manipulation. Dictionaries allow storing data in key-value pairs, enabling quick lookups and modifications. This feature is crucial for tasks requiring rapid access to data, such as indexing large datasets.

Lists and tuples offer flexible ways to handle ordered data. Lists are mutable, allowing changes to data. Tuples are immutable, which makes them a safe way to store data that must not change and lets them serve as dictionary keys when their items are hashable.
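The practical difference between the two can be sketched with illustrative coordinates: tuples work as dictionary keys and reject modification, while lists can change in place.

```python
# Tuples are immutable and hashable (when their items are), so they can be dict keys.
coords_to_city = {(59.91, 10.75): "Oslo", (-12.05, -77.04): "Lima"}
print(coords_to_city[(59.91, 10.75)])  # Oslo

point = (59.91, 10.75)
try:
    point[0] = 60.0  # tuples reject item assignment
except TypeError as exc:
    print("TypeError:", exc)

# Lists are mutable: they can grow and change in place (but cannot be dict keys).
readings = [1.2, 3.4]
readings.append(5.6)
print(readings)  # [1.2, 3.4, 5.6]
```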

Using these data structures effectively requires understanding their strengths and weaknesses. This understanding improves code performance and resource management in larger projects.

Mastering Decorators and Context Managers

Decorators and context managers add functionality to Python code without modifying its structure.

Decorators allow the enhancement of functions or methods, offering a powerful way to modify behavior or add logging features seamlessly.
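A minimal logging decorator, sketched under the assumption that logging positional arguments and the return value is enough for the use case. `log_calls` and `normalize` are hypothetical names; `functools.wraps` keeps the decorated function’s name and docstring intact.

```python
import functools
import logging

logging.basicConfig(level=logging.INFO)


def log_calls(func):
    """Decorator that logs each call's positional arguments and result."""
    @functools.wraps(func)  # preserve the original __name__ and __doc__
    def wrapper(*args, **kwargs):
        result = func(*args, **kwargs)
        logging.info("%s%r -> %r", func.__name__, args, result)
        return result
    return wrapper


@log_calls
def normalize(value, lo, hi):
    """Scale value into the range [0, 1]."""
    return (value - lo) / (hi - lo)


print(normalize(15, 10, 20))  # 0.5, and a log line is emitted
print(normalize.__name__)     # normalize
```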

Context managers manage resources like files or network connections. Used with a with statement, they guarantee that cleanup runs (for example, a file is closed) even if an error occurs, avoiding problems such as resource leaks.
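The sketch below shows the built-in file context manager and a custom one written with `contextlib.contextmanager`. The resource name `"db-connection"` and the `events` log are hypothetical stand-ins for real setup and cleanup work.

```python
import os
import tempfile
from contextlib import contextmanager

# Built-in usage: the file is closed when the block exits, even on an exception.
path = os.path.join(tempfile.gettempdir(), "example.txt")
with open(path, "w", encoding="utf-8") as f:
    f.write("id,value\n1,42\n")
print(f.closed)  # True


# A custom context manager: setup before yield, cleanup in finally.
@contextmanager
def opened_resource(name, log):
    log.append(f"open {name}")
    try:
        yield name
    finally:
        log.append(f"close {name}")  # runs even if the with-block raises


events = []
try:
    with opened_resource("db-connection", events):
        raise RuntimeError("query failed")
except RuntimeError:
    pass
print(events)  # ['open db-connection', 'close db-connection']
```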

They are invaluable in scaling projects where resource handling becomes complex.

Mastering these tools improves the readability and maintainability of Python code. Implementing them effectively requires a solid understanding of Python syntax and practice in real-world scenarios.

These skills not only streamline code but also contribute to more robust and efficient applications.

Machine Learning with Python

Machine learning with Python offers a powerful combination of flexibility and simplicity for developing data-driven solutions. Python skills are crucial for data scientists to implement machine learning algorithms efficiently and solve complex problems.

Fundamentals of Machine Learning

Understanding the basics of machine learning is essential for any aspiring data scientist.

Machine learning involves teaching computers to recognize patterns and make decisions based on data. It primarily consists of supervised and unsupervised learning.

In supervised learning, the system is trained on a labeled dataset, such as classifying emails as spam or not spam.

Unsupervised learning, by contrast, involves finding hidden patterns without predefined labels, like grouping customers based on purchasing behavior.

Python is widely used for these tasks due to its vast library support, including tools like scikit-learn for model training and Pandas for data manipulation. Model evaluation metrics like accuracy and F1 score are also crucial here.
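A minimal supervised-learning sketch with scikit-learn follows. It uses the breast cancer dataset bundled with the library (labeled tumor measurements), a decision tree chosen for illustration, and an arbitrary `max_depth=4`. The model is evaluated with accuracy and F1 score on held-out data.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# A labeled dataset bundled with scikit-learn: tumor measurements -> binary diagnosis.
X, y = load_breast_cancer(return_X_y=True)

# Hold out 25% of the rows so evaluation uses data the model has not seen.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

model = DecisionTreeClassifier(max_depth=4, random_state=42)
model.fit(X_train, y_train)
predictions = model.predict(X_test)

accuracy = accuracy_score(y_test, predictions)
f1 = f1_score(y_test, predictions)
print(f"accuracy={accuracy:.3f}, F1={f1:.3f}")
```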

Implementing Machine Learning Algorithms

Implementing machine learning algorithms involves using Python’s rich ecosystem of libraries.

Libraries like SciPy and scikit-learn provide interfaces for running classic algorithms.

For instance, decision trees and support vector machines (SVM) are common in supervised learning tasks.

Python makes it easy to test and apply different algorithms to find the best fit for specific problems.

Project work often includes data preprocessing, feature selection, and model tuning.
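These steps can be combined in scikit-learn with a `Pipeline` (scaling, feature selection, and a support vector machine) tuned by `GridSearchCV`. This is a sketch on the bundled iris dataset; the parameter grid values are arbitrary choices for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Preprocessing, feature selection, and the model chained into one estimator,
# so each cross-validation fold fits the scaler and selector on its training part only.
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("select", SelectKBest(score_func=f_classif)),
    ("svm", SVC()),
])

# Model tuning: try every combination below with 5-fold cross-validation.
param_grid = {
    "select__k": [2, 3, 4],
    "svm__C": [0.1, 1, 10],
    "svm__kernel": ["linear", "rbf"],
}
search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X_train, y_train)

print(search.best_params_)
print(f"test accuracy={search.score(X_test, y_test):.3f}")
```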

Efficient use of these libraries requires solid Python skills, focusing on writing clear, maintainable code.

Machine learning projects often conclude with model evaluation and deployment, where Python’s versatility shines, enabling seamless integration into larger data analytics pipelines.

Project and Workflow Management

Effective management of projects and workflows is crucial for successful data analysis.

By leveraging tools like Jupyter Notebooks and embracing version control, data scientists and software engineers can enhance productivity and promote seamless collaboration.

Using Jupyter Notebooks Effectively

Jupyter Notebooks are powerful tools that help in organizing code, visualizations, and notes in an integrated environment.

They provide an interactive platform for experimenting with data, making it easier to document and share insights.

Notebooks support various programming languages but are most commonly used for Python, making them ideal for data analysis projects.

Key features include:

- Interactive data visualizations

- Easy modification and testing of code snippets

- Inline documentation capabilities

Best practices involve structuring notebooks with clear headings, meaningful comments, and segregating code into understandable blocks.

This ensures that analyses and results are presented clearly, assisting others in replicating or reviewing the work.

Version Control and Collaboration

Version control systems like Git play a crucial role in managing code changes and collaboration among team members.

This ensures that all project stakeholders can access the latest updates without erasing past work.

Key features of version control include:

- Tracking changes over time

- Facilitating collaborative editing

- Reverting to previous code states if needed

For effective collaboration, using platforms like GitHub allows for distributed development, where software engineers and data scientists can work simultaneously on different aspects of the project.

Tools such as pull requests and issue tracking further streamline workflow management, fostering a collaborative environment where everyone can contribute efficiently and confidently to the project’s goals.

Building a Professional Portfolio

A professional portfolio in data analysis demonstrates proficiency in Python and other technical skills, and also shows creativity and problem-solving ability. This resource is essential for standing out to employers and advancing in a data analyst career.

Hands-On Projects and Case Studies

Hands-on projects and case studies are vital for an appealing portfolio. They show potential employers the ability to apply theoretical knowledge to real-world problems.

Projects should include tasks like data cleaning, analysis, and visualization.

Case studies can demonstrate skills in Python coding, machine learning, and data analytics.

When creating a project portfolio, variety is key. Include projects that showcase different skills, such as statistical analysis or creating visualizations from datasets.

Collaborating with others on group projects can also add depth. Participating in data hackathons or online competitions is a great way to gather diverse experiences and enhance project work.

Showcasing Your Work to Employers

Effectively showcasing a portfolio is crucial for catching the eye of potential employers.

Use platforms like GitHub to host and share projects. Each project should have clear, concise documentation. Include details about the problem solved, the methods used, and the results achieved.

Additionally, consider writing blogs or articles that explain projects in more detail. This not only demonstrates communication skills but also highlights expertise.

Another approach is to present portfolio work on a personal website. This offers a central place for all projects and can include a resume and contact information. Using links to guide potential employers to specific projects can make it easier for them to navigate and understand the work.

Advancing Your Career in Data Analysis

To grow in data analysis, it’s essential to focus on certifications and targeted learning paths. Online courses and interactive lessons are key resources for building skills efficiently and effectively.

Certifications and Learning Paths

Certifications can greatly boost a data analyst’s career. They validate one’s skills to employers and can distinguish an individual from other candidates.

Key certifications like the Microsoft Certified: Data Analyst Associate or the Data Science Professional Certificate offer technical and analytical learning paths.

These certifications often cover data visualization, database management, and statistical analysis.

Pursuing a certification shows a commitment to continuous education, which is highly valued in the industry.

Choose a certification that aligns well with career goals and areas of interest. These structured paths provide a clear framework for skill development.

Online Courses and Interactive Lessons

Online courses provide accessible ways to learn new skills at your own pace.

Platforms like Coursera and Dataquest offer courses focused on data analysis with Python, which is highly recommended due to Python’s widespread use in the industry.

Interactive lessons engage learners with real-world scenarios, enhancing understanding.

These platforms often offer practice problems and projects that mimic actual data challenges.

Many courses also include community forums where learners can discuss topics and resolve doubts, making learning more collaborative.

When comparing courses, check what guarantees the platform offers; Dataquest, for example, offers a guarantee intended to ensure satisfaction through quality education and practical experience.

Working with Real-world Data

When dealing with real-world data, understanding how to manipulate text, analyze large datasets, and visualize results is essential. These skills are crucial for transforming raw information into valuable insights.

String Manipulation and Text Data

String manipulation is key in cleaning and organizing data. Text data can be messy, containing irregular spacing, punctuation, and mixed cases.

Using Python’s string methods, one can modify text by removing unwanted characters, splitting strings into lists, or changing letter cases to achieve uniformity.
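A small cleaning sketch on made-up names, using only built-in string methods: trimming, removing an unwanted character, collapsing internal whitespace, normalizing case, and splitting a delimited string into a list.

```python
raw_names = ["  Alice SMITH ", "bob  jones", "CAROL-ann lee!"]

cleaned = []
for name in raw_names:
    name = name.strip()            # drop leading/trailing spaces
    name = name.replace("!", "")   # remove an unwanted character
    name = " ".join(name.split())  # collapse runs of internal whitespace
    cleaned.append(name.title())   # uniform capitalization

print(cleaned)                    # ['Alice Smith', 'Bob Jones', 'Carol-Ann Lee']
print("city,state,zip".split(","))  # ['city', 'state', 'zip']
```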

Regular expressions (regex) are powerful in identifying patterns in text. They can extract emails, dates, or any specific format within strings, making data cleaner and more usable.

Python’s built-in re module simplifies regex tasks, helping to automate complex text processing efficiently.
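A sketch of pattern extraction with the `re` module on a made-up note. The email pattern is deliberately simplified for illustration and would not validate every real-world address.

```python
import re

notes = "Contact ana@example.com by 2024-05-01 or ops@example.org after 2024-06-15."

# Simplified patterns for illustration; full email validation is more involved.
email_pattern = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
date_pattern = re.compile(r"(\d{4})-(\d{2})-(\d{2})")

emails = email_pattern.findall(notes)
dates = date_pattern.findall(notes)  # with capture groups, findall returns tuples

print(emails)  # ['ana@example.com', 'ops@example.org']
print(dates)   # [('2024', '05', '01'), ('2024', '06', '15')]
```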

Processing text data often involves using data structures like lists and dictionaries to store and iterate over text collections. These tools make it easier to organize, search, and retrieve data quickly.

Analyzing and Visualizing Large Datasets

Handling large datasets requires efficient analysis techniques to uncover trends and patterns.

Python libraries like pandas and NumPy enable users to perform complex calculations and transformations on extensive data collections swiftly.

To make datasets more understandable, data visualization tools such as matplotlib and seaborn are invaluable.

These libraries can create a wide range of graphs and charts, like histograms or scatter plots, which present data visually, highlighting key insights.

When dealing with large datasets, DataFrames provide a structured way to manage and manipulate data, and choosing compact column types (such as the category dtype for repeated strings or smaller numeric types) keeps memory use down.

This enhances performance, allowing for quick access and processing, crucial when working with real-world data complexities.
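The effect of column types on memory can be measured directly. The sketch below builds a synthetic 100,000-row DataFrame, then converts a repeated-string column to `category` and downcasts an integer column, comparing `memory_usage(deep=True)` before and after. Exact byte counts depend on the pandas version, so none are shown.

```python
import numpy as np
import pandas as pd

n = 100_000
rng = np.random.default_rng(1)
df = pd.DataFrame({
    "status": rng.choice(["active", "inactive", "pending"], size=n),
    "count": np.arange(n, dtype="int64"),
})

before = df.memory_usage(deep=True).sum()

# Repeated strings -> category; non-negative integers -> the smallest unsigned type that fits.
df["status"] = df["status"].astype("category")
df["count"] = pd.to_numeric(df["count"], downcast="unsigned")

after = df.memory_usage(deep=True).sum()
print(f"{before:,} bytes -> {after:,} bytes")
print(df.dtypes)  # status: category, count: uint32
```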

Staying Current with Python Developments

Continuous learning is crucial for professionals in Python-related fields. Staying informed about new updates and connecting with the community are keys to maintaining expertise. This ensures data scientists and software engineers are always up-to-date.

Continuing Education for Python Professionals

Python professionals should focus on ongoing education to keep their skills sharp.

Courses from platforms like DataCamp and Codecademy offer valuable resources.

These platforms provide structured learning paths covering advanced modules, libraries, and data analysis techniques.

For data scientists, enrolling in specialized training like machine learning or data visualization can expand expertise.

Participating in webinars and workshops presents an opportunity to learn from industry leaders.

Keeping up with the latest Python versions and updates is essential, as it ensures professionals know recent language enhancements and best practices.

Community Engagement and Networking

Joining Python communities offers great benefits for learners and professionals.

Forums like Reddit’s Python community and Stack Overflow provide opportunities for problem-solving and exchanging knowledge.

Engaging with these platforms allows professionals to ask questions, share experiences, and obtain solutions quickly.

Networking at industry conferences, such as PyCon, lets data scientists and software engineers meet peers and industry leaders.

These events offer sessions on trends and innovations. Community involvement ensures professionals build a support system, gaining insights and potential career opportunities.

Participants in online study groups can also collaborate on projects, fostering skill development and idea exchange.

Frequently Asked Questions

Intermediate Python skills are crucial for anyone looking to excel in data analysis. Key areas include proficiency in essential libraries, data manipulation, data visualization, statistical analysis, and the implementation of machine learning techniques.

What are the essential Python libraries one should be proficient in for data analysis?

Python libraries play a vital role in data analysis. Libraries such as Pandas and NumPy are essential for data manipulation and numerical computations.

For data visualization, Matplotlib and Seaborn are widely used, while SciPy offers advanced statistical functions to enhance data analysis capabilities.

Which Python data structures are most commonly used in data analysis tasks?

Data analysis often involves working with data structures like lists, dictionaries, and data frames.

Lists and dictionaries are fundamental, but data frames, primarily through Pandas, provide a flexible way to store and manipulate large datasets, making them crucial for data analysis tasks.

How can one efficiently handle data manipulation in Python?

Efficient data manipulation can be achieved using Pandas for organizing and cleaning data.

Techniques like filtering, aggregation, and handling missing values are straightforward with this library. Understanding these operations enhances one’s ability to prepare data for further analysis.

What are the best practices for visualizing data in Python?

Data visualization is key to understanding data insights.

Best practices include using Matplotlib for creating basic charts and plots, while Seaborn is suited for more advanced visualizations. Both libraries help in presenting data in a clear and understandable way.

In what ways can Python be used to perform statistical data analysis?

Python offers a range of tools for statistical analysis.

Libraries like SciPy make it possible to perform complex statistical operations. This includes hypothesis testing, regression analysis, and descriptive statistics, all of which are essential for thorough data analysis.
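A sketch of these three uses of `scipy.stats` on simulated data (the page-load scenario, means, and seed are assumptions for illustration): a descriptive summary, Welch’s two-sample t-test, and a simple linear regression.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(seed=7)

# Simulated page load times (seconds) for two site versions.
version_a = rng.normal(loc=2.0, scale=0.3, size=200)
version_b = rng.normal(loc=1.8, scale=0.3, size=200)

# Descriptive statistics in one call (count, min/max, mean, variance, skewness, kurtosis).
print(stats.describe(version_a))

# Hypothesis test: Welch's two-sample t-test (does not assume equal variances).
t_stat, p_value = stats.ttest_ind(version_a, version_b, equal_var=False)
print(f"t={t_stat:.2f}, p={p_value:.4g}")

# Simple linear regression on noisy data generated from y = 3x + 2.
x = np.arange(10)
y = 3.0 * x + 2.0 + rng.normal(scale=0.5, size=10)
fit = stats.linregress(x, y)
print(f"slope={fit.slope:.2f}, intercept={fit.intercept:.2f}, r^2={fit.rvalue**2:.3f}")
```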

How does one implement machine learning techniques in Python for data analysis?

Machine learning in Python involves using libraries like scikit-learn. This library provides tools for implementing algorithms such as classification, regression, and clustering.

These techniques can enhance data analysis by allowing predictions and insights from complex datasets.